Article classification and recovery method based on multi-modal active perception

A multi-modal item classification technology, applied in the fields of manufacturing tools, chucks, and manipulators. It addresses problems such as unsuitability for tactile data measurement, failure of tactile material identification, and the inability to output the direction in which the manipulator should grasp an item.

Embodiment Construction

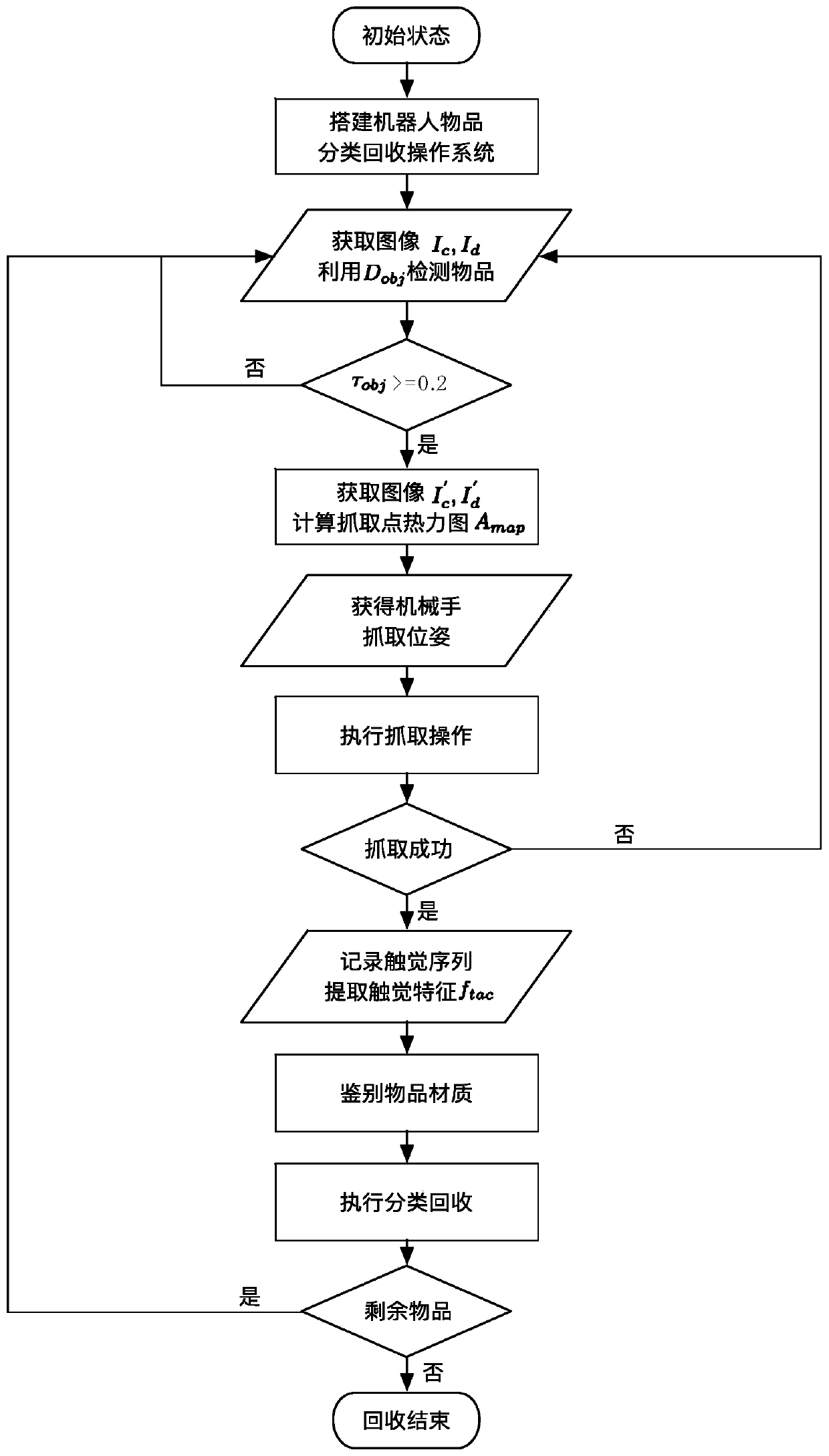

[0052] The flow chart of the article classification and recycling method based on multi-modal active perception proposed by the present invention is shown in Figure 1. The specific steps are as follows:

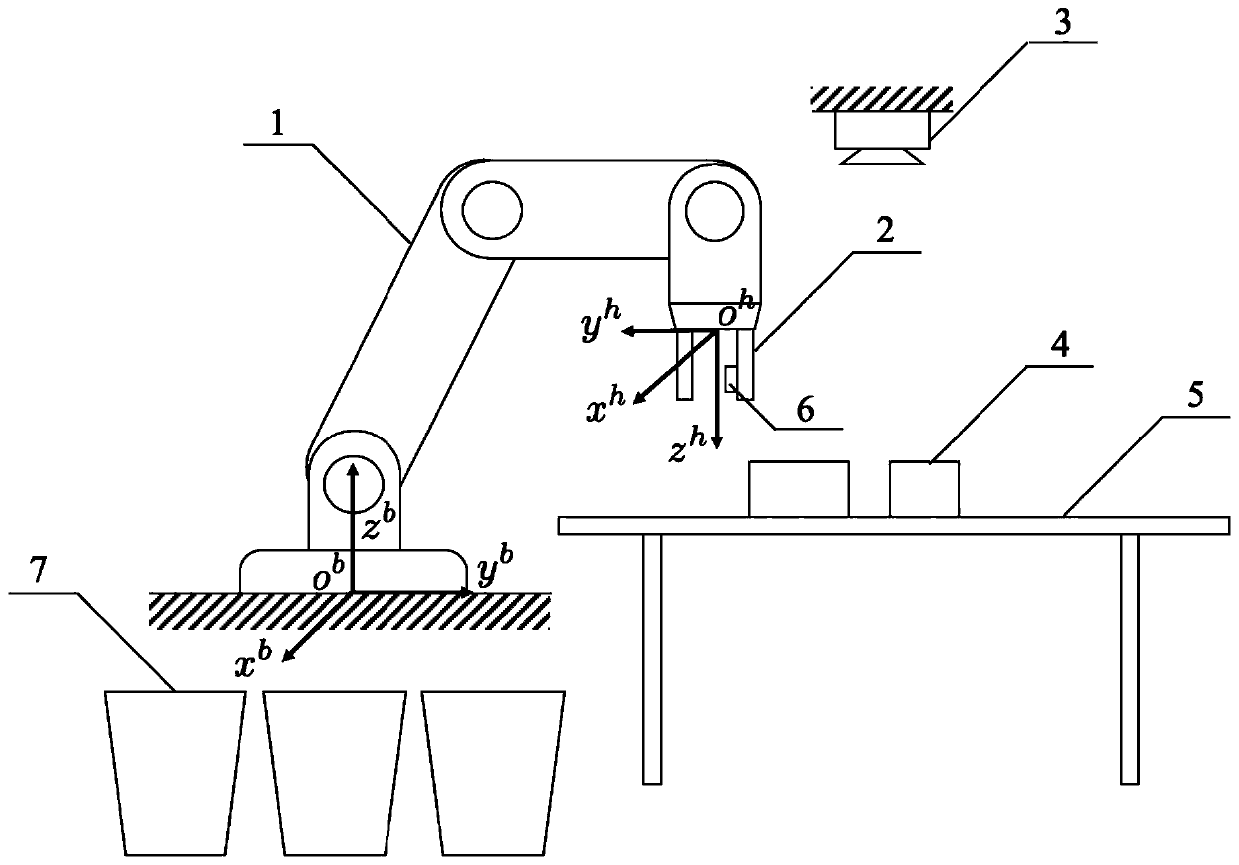

[0053] (1) Build the actual robotic item sorting and recycling operating system shown in Figure 2:

[0054] The system includes: a mechanical arm 1 (a UniversalRobot 5 in this embodiment); a manipulator 2 that includes a suction cup (for example, the CobotCohand212 model); a color depth camera 3 (a KinectV2 camera in this embodiment); a tactile sensor 6 (in this embodiment, a 5×5 piezoresistive flexible tactile sensor array, which can be a conventional model); an operating table 5 on which items 4 can be placed; and an item recovery container 7. The color depth camera 3, the tactile sensor 6, the manipulator 2, and the mechanical arm 1 are each connected to a controller. In this embodiment of the present invention, the controller is a notebook computer.
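As an illustration only, the component list above could be recorded on the controller as a small lookup table keyed by the reference numerals of Figure 2. The sketch below is not part of the patent: the names `Component`, `build_system`, `controller_connected`, and `empty_tactile_frame` are hypothetical, and the only facts taken from the text are the numerals, the example models, the 5×5 array size, and which devices are connected to the controller.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Component:
    """One element of the system, keyed by its reference numeral in Figure 2."""
    numeral: int
    role: str
    example_model: str  # model named in the embodiment, "" if none is given
    wired_to_controller: bool


def build_system() -> Dict[int, Component]:
    """Hypothetical helper: collect the components listed in paragraph [0054]."""
    parts = [
        Component(1, "mechanical arm", "UniversalRobot 5", True),
        Component(2, "manipulator with suction cup", "CobotCohand212", True),
        Component(3, "color depth camera", "KinectV2", True),
        Component(4, "item", "", False),
        Component(5, "operating table", "", False),
        Component(6, "5x5 piezoresistive flexible tactile sensor array", "", True),
        Component(7, "item recovery container", "", False),
    ]
    return {p.numeral: p for p in parts}


def controller_connected(system: Dict[int, Component]) -> List[int]:
    """Return the numerals of the devices connected to the controller."""
    return sorted(n for n, c in system.items() if c.wired_to_controller)


# The tactile array size comes from the text; the zero-filled frame is
# only a placeholder for one reading, not a format defined by the patent.
TACTILE_ROWS, TACTILE_COLS = 5, 5


def empty_tactile_frame() -> List[List[float]]:
    """Build a zero-filled 5x5 grid standing in for one tactile reading."""
    return [[0.0] * TACTILE_COLS for _ in range(TACTILE_ROWS)]
```

Calling `controller_connected(build_system())` lists the four devices the text says are connected to the notebook computer: the arm, the manipulator, the camera, and the tactile sensor.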

[0055]...
