52 results about "Active perception" patented technology

In Active Perception, an agent's behaviors are selected to increase the information content derived from the flow of sensor data that those behaviors produce in the environment in question. In other words, to understand the world, we move around and explore it. We sample the world through our eyes, ears, nose, skin, and tongue as we explore, and we construct an understanding of the environment (perception) on the basis of this behavior (action). Within the construct of Active Perception, the interpretation of sensor data (perception) is inseparable from the behaviors required to capture that data (action); the two are tightly coupled. The idea has been developed most comprehensively for vision (Active Vision), where an agent (animal, human, robot, or camera mount) changes position to improve its view of a specific object and/or uses movement to perceive the environment (e.g., for obstacle avoidance).
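
The selection principle in the definition above can be illustrated with expected information gain. The Python sketch below is a minimal illustration, not a method from any patent listed here: it assumes a discrete belief over hypotheses (here, hypothetical object classes) and a known observation model for each candidate action, and it chooses the action whose observation is expected to reduce the entropy of the belief the most.

```python
import math

def entropy(belief):
    """Shannon entropy (bits) of a discrete distribution {hypothesis: prob}."""
    return -sum(p * math.log2(p) for p in belief.values() if p > 0)

def posterior(belief, likelihood, obs):
    """Bayes update; likelihood[h][obs] is P(obs | h)."""
    unnorm = {h: belief[h] * likelihood[h].get(obs, 0.0) for h in belief}
    total = sum(unnorm.values())
    return {h: v / total for h, v in unnorm.items()}

def expected_information_gain(belief, likelihood):
    """Prior entropy minus expected posterior entropy for one action."""
    observations = {o for h in likelihood for o in likelihood[h]}
    gain = entropy(belief)
    for obs in observations:
        p_obs = sum(belief[h] * likelihood[h].get(obs, 0.0) for h in belief)
        if p_obs > 0:
            gain -= p_obs * entropy(posterior(belief, likelihood, obs))
    return gain

def choose_action(belief, models):
    """Pick the action whose observation model is most informative."""
    return max(models, key=lambda a: expected_information_gain(belief, models[a]))

# Hypothetical example: is the object in view a cup or a bowl?
belief = {"cup": 0.5, "bowl": 0.5}
models = {
    # Viewed from the front, both look round, so nothing is learned.
    "stay": {"cup": {"round": 1.0}, "bowl": {"round": 1.0}},
    # Moving to the side reveals whether there is a handle.
    "move_side": {"cup": {"handle": 0.9, "no_handle": 0.1},
                  "bowl": {"handle": 0.1, "no_handle": 0.9}},
}
print(choose_action(belief, models))  # prints "move_side"
```

Staying put yields zero expected gain, while moving sideways yields about 0.53 bits, so the agent moves: the action is chosen for the information it will produce.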

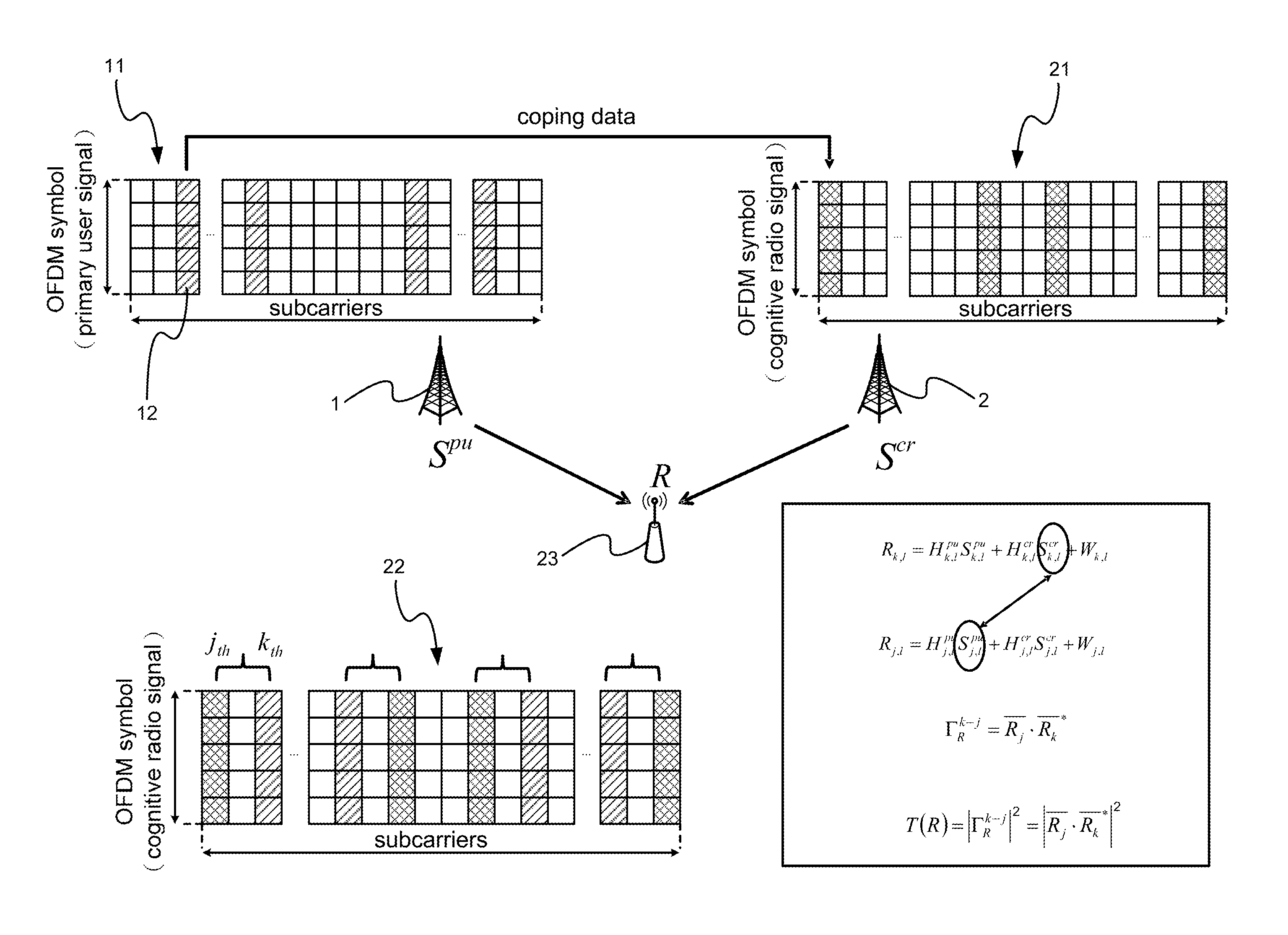

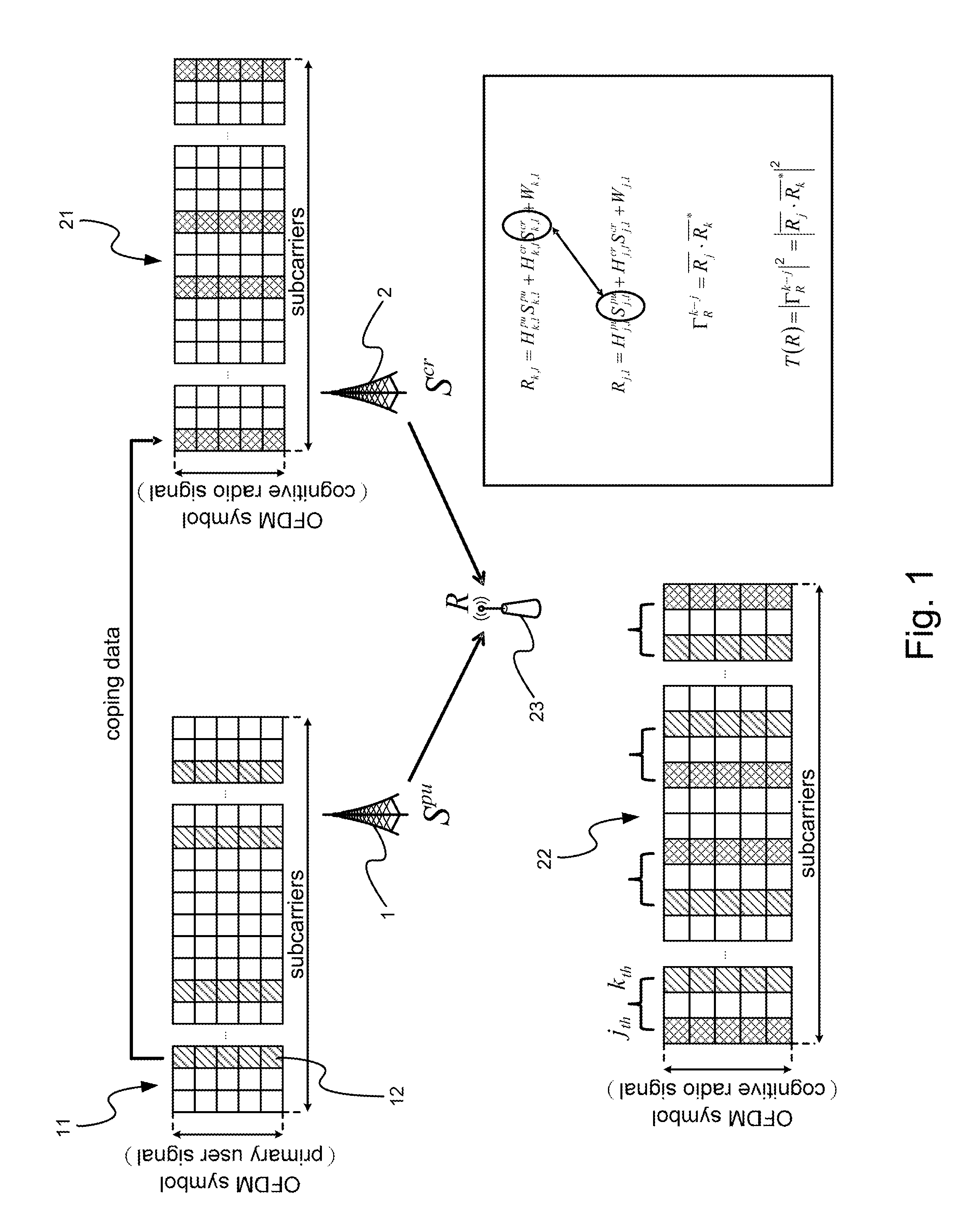

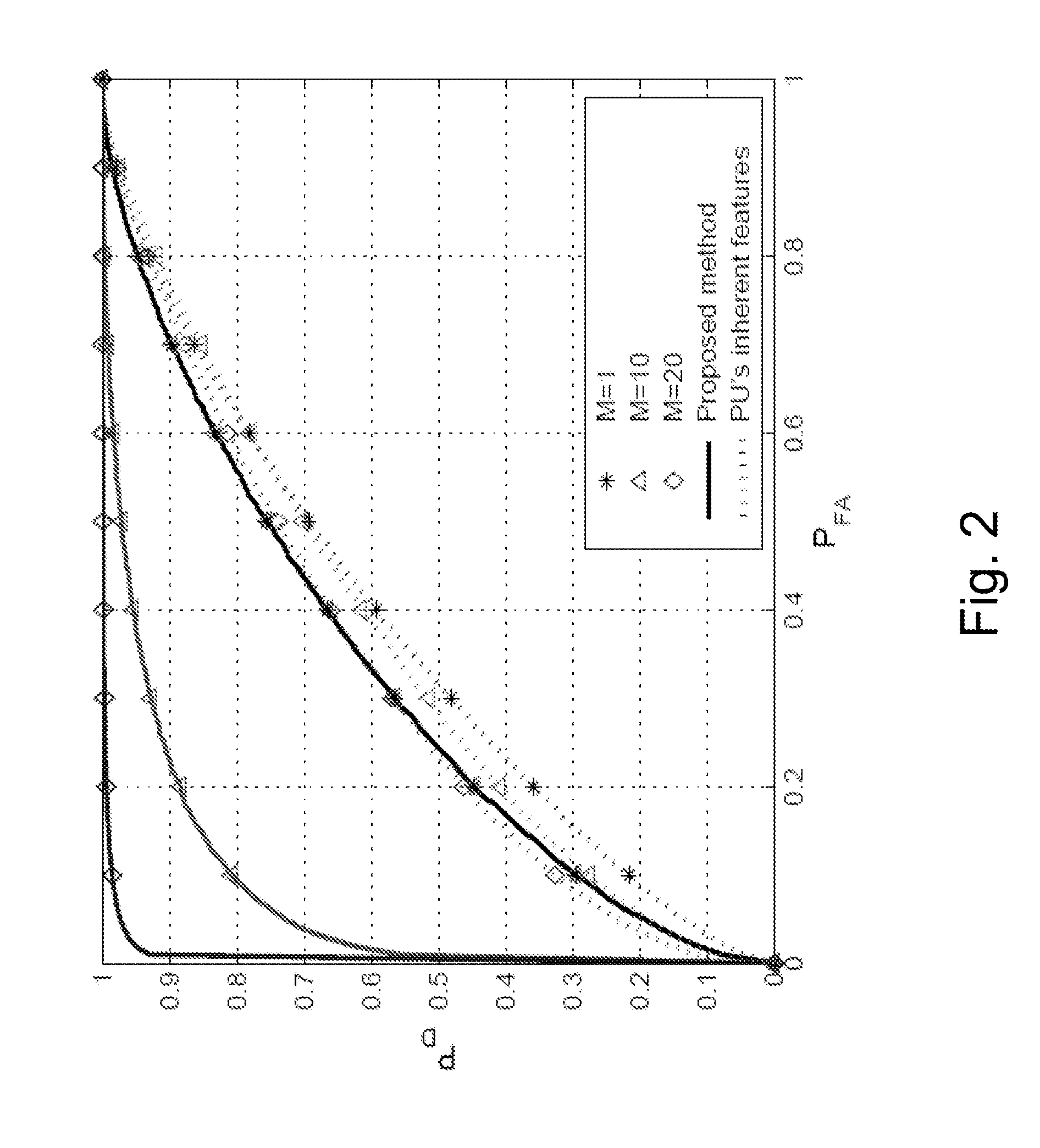

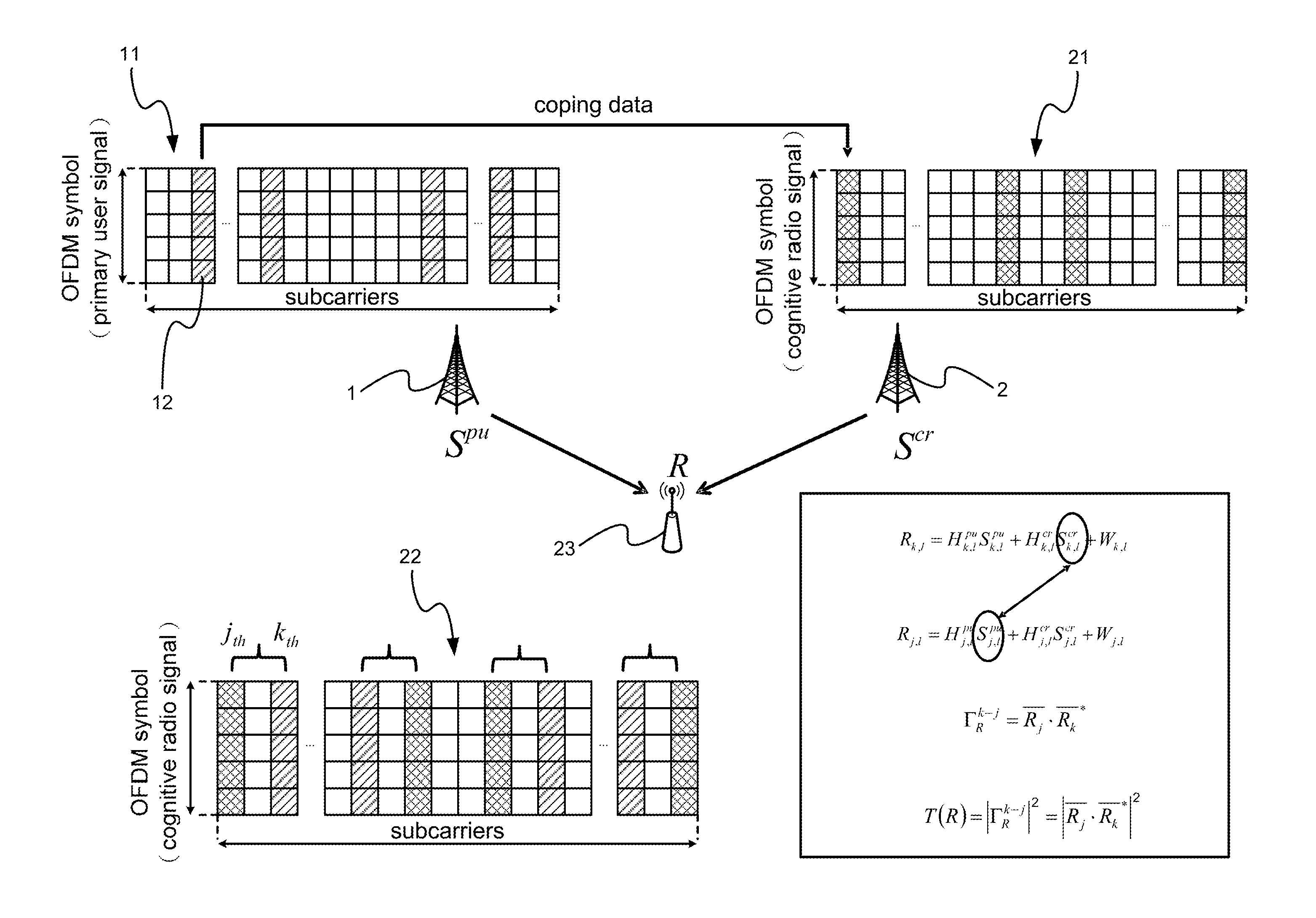

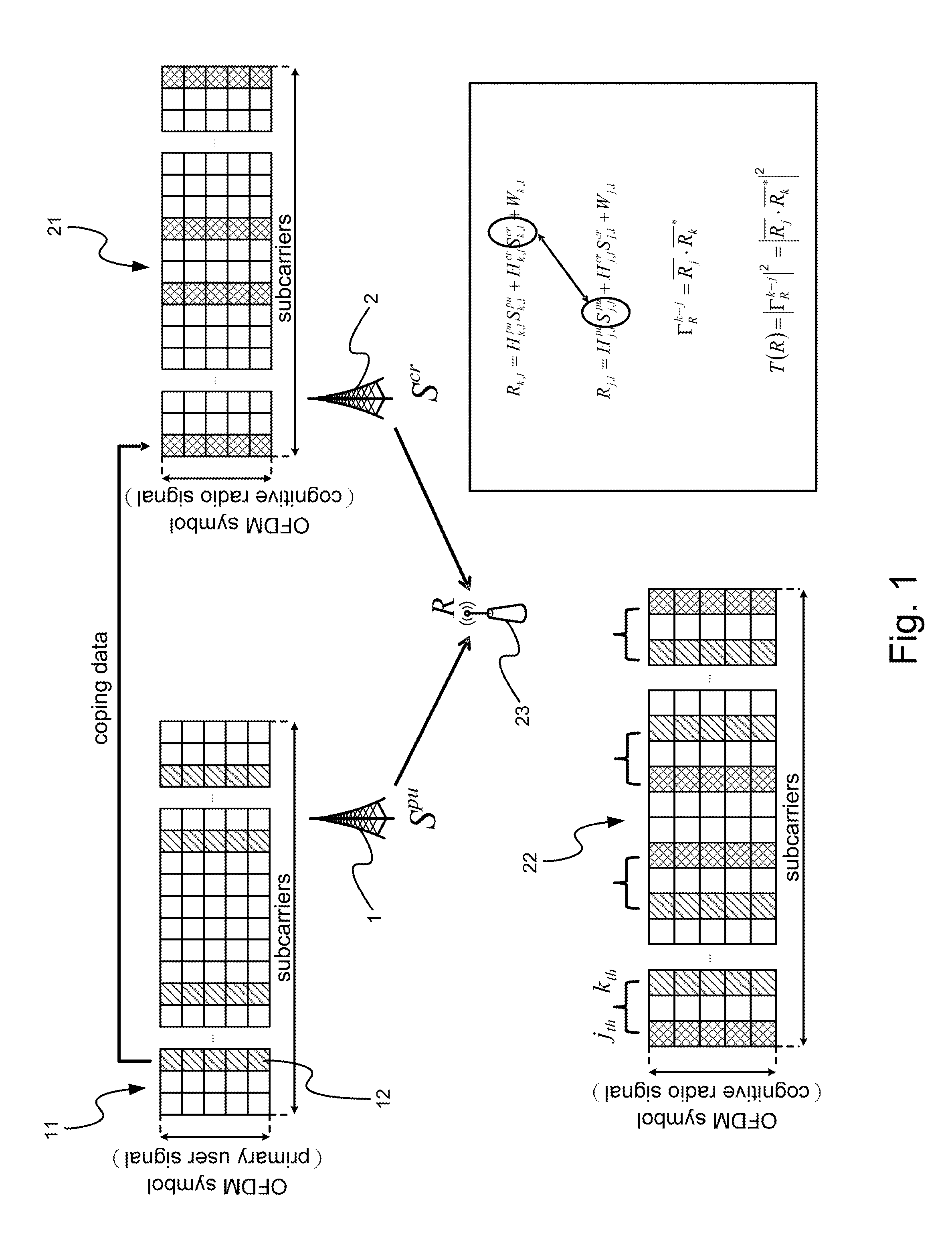

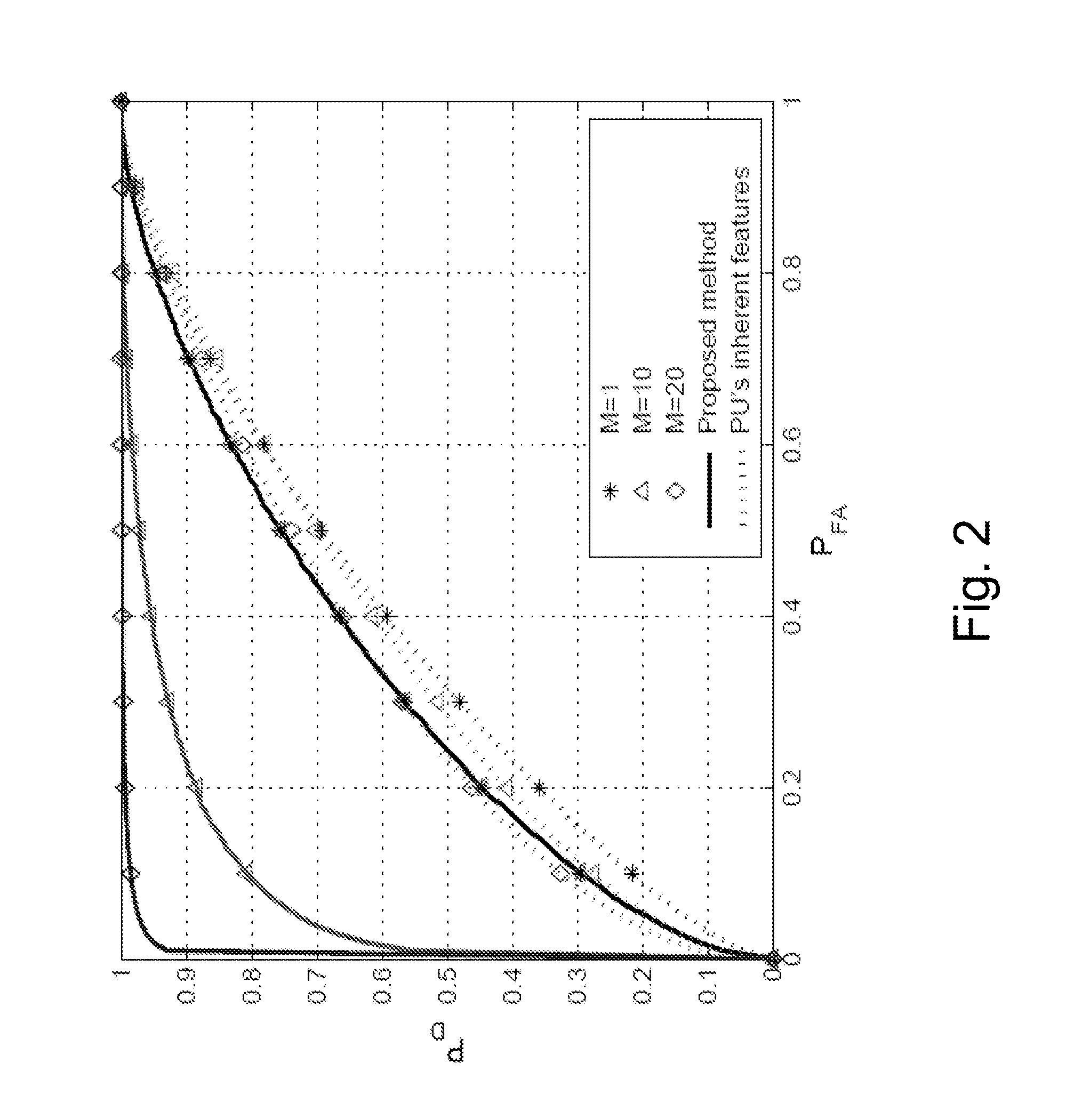

Active sensing method based on spectral correlation for cognitive radio systems

Status: Active | Publication: US20140064114A1 | Tags: Improve performance, Maintain quality, Spectral gaps assessment, Error prevention, Frequency spectrum, Active perception

This is a design for a cognitive radio (CR) signal structure, based on spectral correlation, that can be used for active sensing. In this signal structure, the known pilots used by the primary users (PUs) are duplicated and appropriately reallocated within the CR transmission signal. With this structure, when a PU reoccupies the band while the CR transmission is active, the signals received by the spectrum sensors become correlated across subcarriers, so PU activity can easily be detected by computing the spectral correlation function. Compared with the traditional cyclostationary feature detection scheme, this method improves active sensing performance while maintaining the service quality of the CR system: it achieves better detection performance in the same detection time, reduces sensing time to about 1/10 of the traditional sensing time, and still achieves satisfactory results at low SNR and SINR.

Owner:NATIONAL TSING HUA UNIVERSITY
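
The abstract does not give the detector's equations. As a rough illustration only, the Python sketch below assumes that the duplicated pilots make two subcarrier bins carry the same symbols when a PU reoccupies the band, and it measures their normalized cross-correlation across OFDM symbols. The bin pairing, noise level, and 0.5 threshold are hypothetical, and this simplified statistic is a stand-in for, not an implementation of, the full spectral correlation function.

```python
import cmath
import math
import random

def subcarrier_correlation(bin_a, bin_b):
    """Normalized magnitude of the cross-correlation between two subcarrier
    sequences (one complex FFT output per OFDM symbol): near 1 when both bins
    carry the same underlying symbols, near 0 for independent noise."""
    cross = sum(a * b.conjugate() for a, b in zip(bin_a, bin_b))
    energy_a = sum(abs(a) ** 2 for a in bin_a)
    energy_b = sum(abs(b) ** 2 for b in bin_b)
    if energy_a == 0 or energy_b == 0:
        return 0.0
    return abs(cross) / math.sqrt(energy_a * energy_b)

def pu_detected(bin_a, bin_b, threshold=0.5):
    """Declare primary-user activity when the paired bins are correlated."""
    return subcarrier_correlation(bin_a, bin_b) > threshold

def complex_noise(rng, sigma):
    return complex(rng.gauss(0, sigma), rng.gauss(0, sigma))

# Synthetic demo: 200 OFDM symbols of unit-power pilots with random phases.
rng = random.Random(0)
pilots = [cmath.exp(1j * rng.uniform(0, 2 * math.pi)) for _ in range(200)]
pu_present_a = [p + complex_noise(rng, 0.3) for p in pilots]
pu_present_b = [p + complex_noise(rng, 0.3) for p in pilots]
idle_a = [complex_noise(rng, 0.3) for _ in pilots]
idle_b = [complex_noise(rng, 0.3) for _ in pilots]

print(pu_detected(pu_present_a, pu_present_b), pu_detected(idle_a, idle_b))
```

Normalizing by the bin energies keeps the statistic between 0 and 1 regardless of received power, which makes a fixed threshold meaningful.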

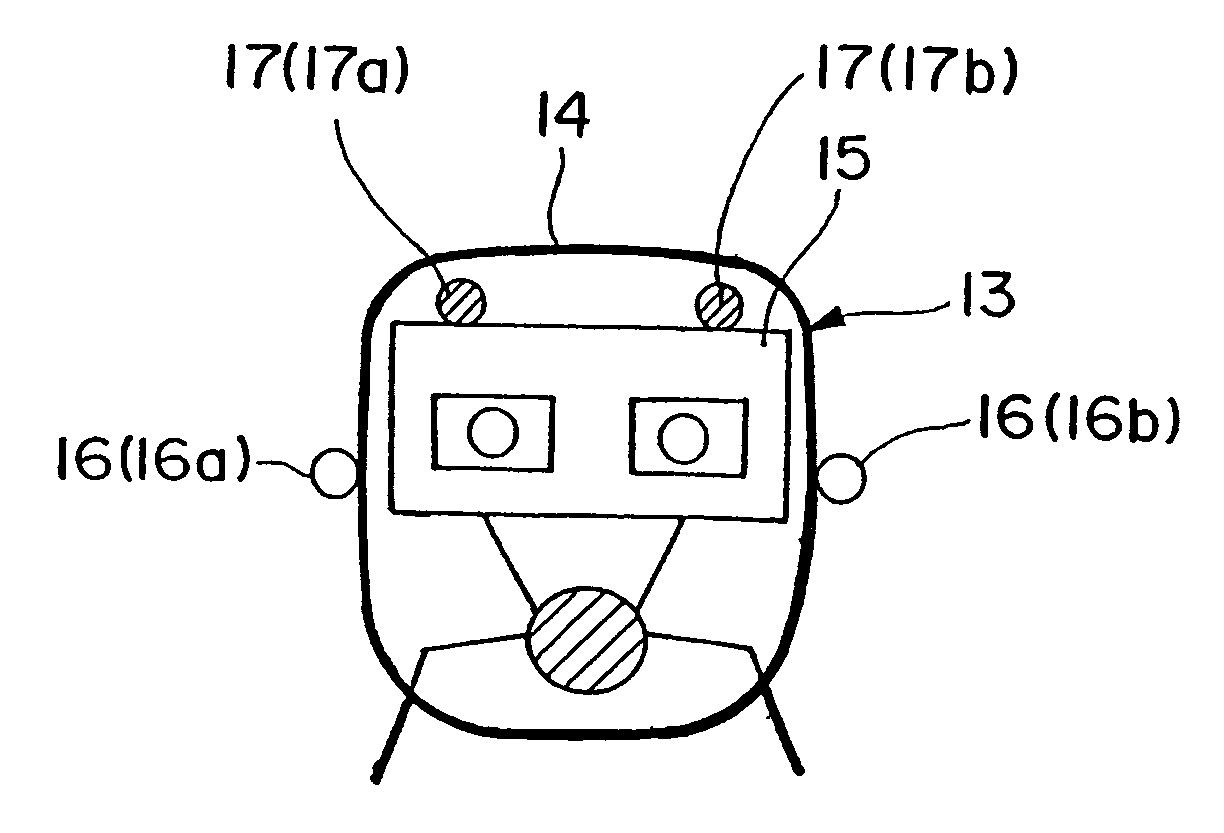

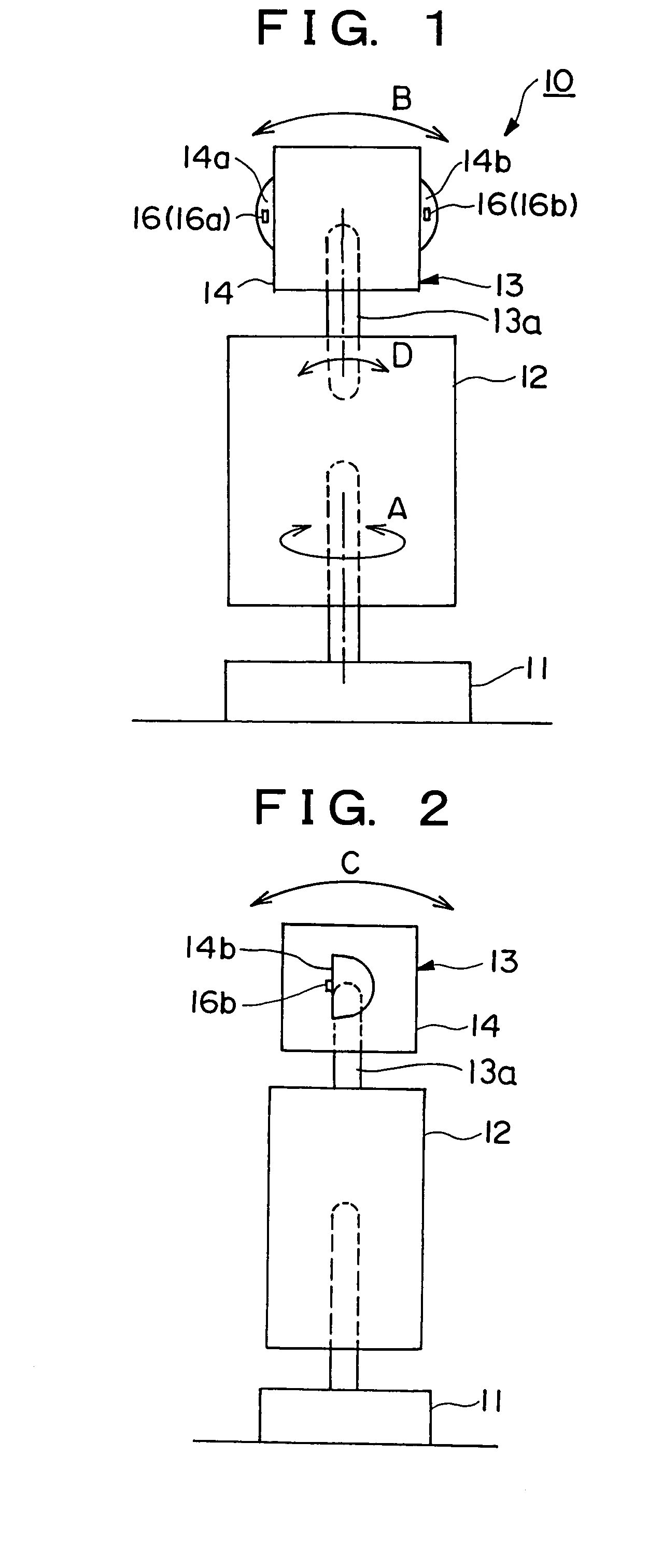

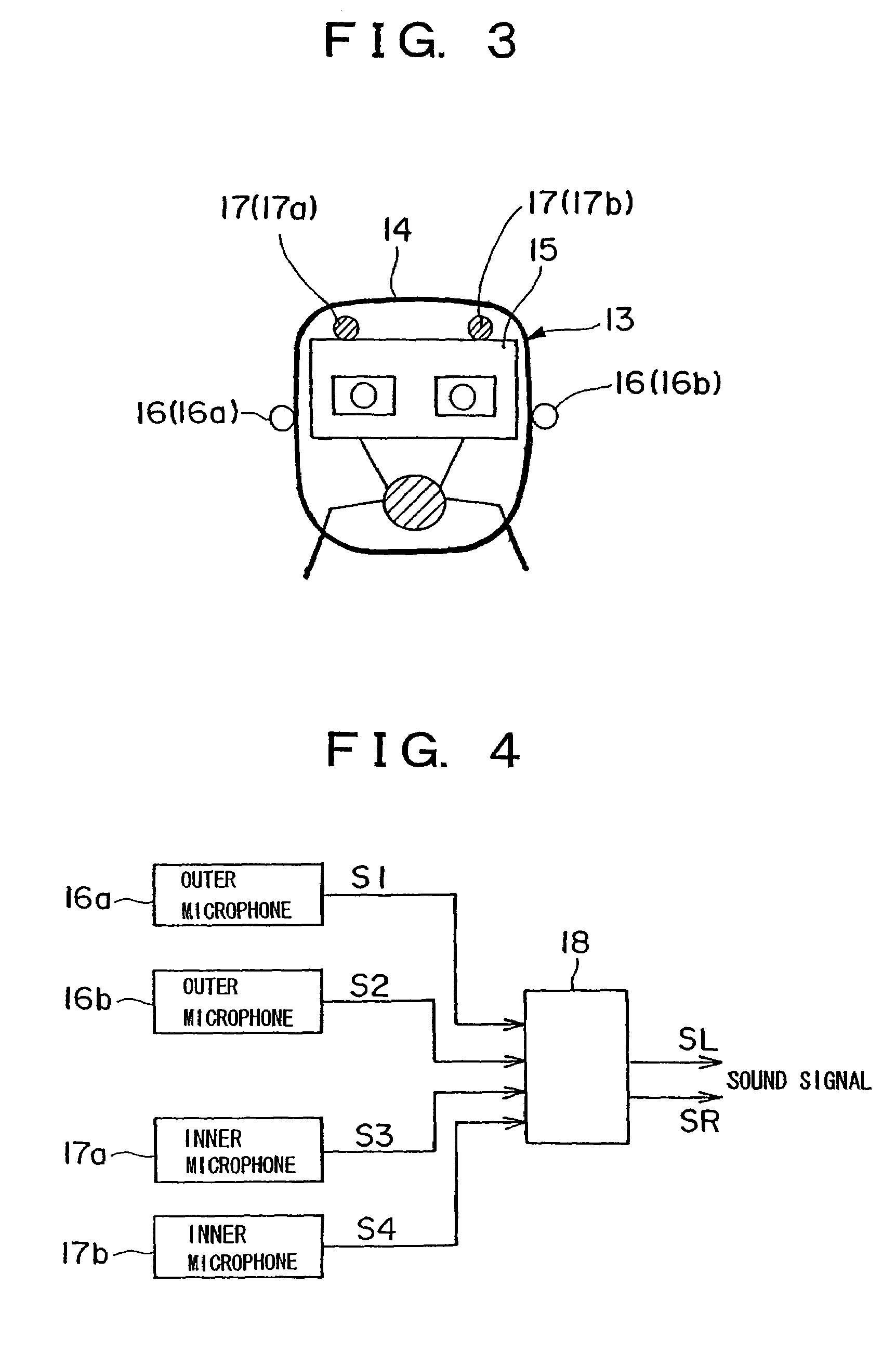

Robot acoustic device

Status: Inactive | Publication: US7016505B1 | Tags: Lower Level Requirements, Decrease in level, Ear treatment, Noise generation, Active perception, Internal noise

The invention is directed to a robot auditory apparatus for a humanoid or animal-like robot, e.g., a humanoid robot (10) with an internal noise source such as its drive system. The apparatus includes: a sound-insulating cover (14) that covers at least the head part (13) of the robot; a pair of outer microphones (16; 16a and 16b) installed outside the cover at spaced-apart positions where the robot's ears would be, primarily for collecting external sound; at least one inner microphone (17; 17a and 17b) installed inside the cover, primarily for collecting noise from the noise source in the robot's interior; and a processing module (18) that, based on the signals from the outer and inner microphones, removes the noise signal of the internal noise source from the sound signals of the outer microphones (16a and 16b). The robot auditory apparatus can thus perform active perception, because external sound from a target is collected unaffected by noise from inside the robot, such as from the drive system.

Owner:HONDA MOTOR CO LTD
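
The abstract does not say how the processing module (18) removes the internal noise. One standard way to use a noise-reference microphone is a least-mean-squares (LMS) adaptive noise canceller, sketched below in Python as a swapped-in technique: the inner microphone acts as the reference, an adaptive FIR filter estimates how motor noise reaches an outer microphone, and the residual is kept as the external sound. The tap count, step size, and synthetic acoustic path are assumptions.

```python
import math
import random

def lms_noise_canceller(outer, inner, taps=4, mu=0.01):
    """LMS adaptive noise cancellation.

    outer: outer (ear) microphone samples = external sound + leaked internal noise
    inner: inner microphone samples, used as the noise reference
    Returns the cleaned signal (the LMS error) and the final filter weights.
    """
    weights = [0.0] * taps
    history = [0.0] * taps  # most recent reference samples, newest first
    cleaned = []
    for d, x in zip(outer, inner):
        history = [x] + history[:-1]
        noise_estimate = sum(w * h for w, h in zip(weights, history))
        error = d - noise_estimate  # what remains is taken as the external sound
        weights = [w + 2 * mu * error * h for w, h in zip(weights, history)]
        cleaned.append(error)
    return cleaned, weights

# Synthetic demo: a quiet external tone plus motor noise that reaches the outer
# microphone through a hypothetical two-tap acoustic path (0.8, 0.3).
rng = random.Random(1)
n = 5000
motor = [rng.gauss(0, 1) for _ in range(n)]
tone = [0.1 * math.sin(2 * math.pi * 0.01 * t) for t in range(n)]
outer = [tone[t] + 0.8 * motor[t] + 0.3 * (motor[t - 1] if t else 0.0) for t in range(n)]

cleaned, weights = lms_noise_canceller(outer, motor)
print([round(w, 2) for w in weights])
```

With these synthetic signals the learned weights approach the assumed path; on a real robot the step size would have to be tuned to the microphones' signal levels.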

Intelligent control method for visual tracking

Status: Inactive | Publication: CN1570949A | Tags: Easy to use, Really easy to use, Image enhancement, Character and pattern recognition, Active perception, Computer pattern recognition

This invention is a visual tracking control method that tracks the movement of the eye's gaze point in real time using computer pattern recognition. Gaze tracking is achieved by building a view-angle boundary proportion model as a simulated data sample. With this method, a computer can automatically acquire a person's command information through active perception.

Owner:万众一 (Wan Zhongyi)

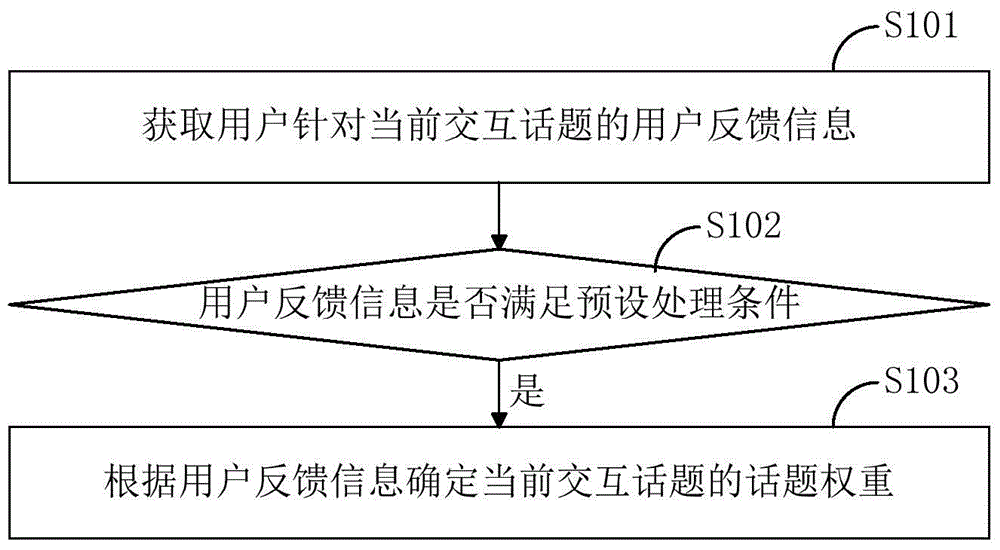

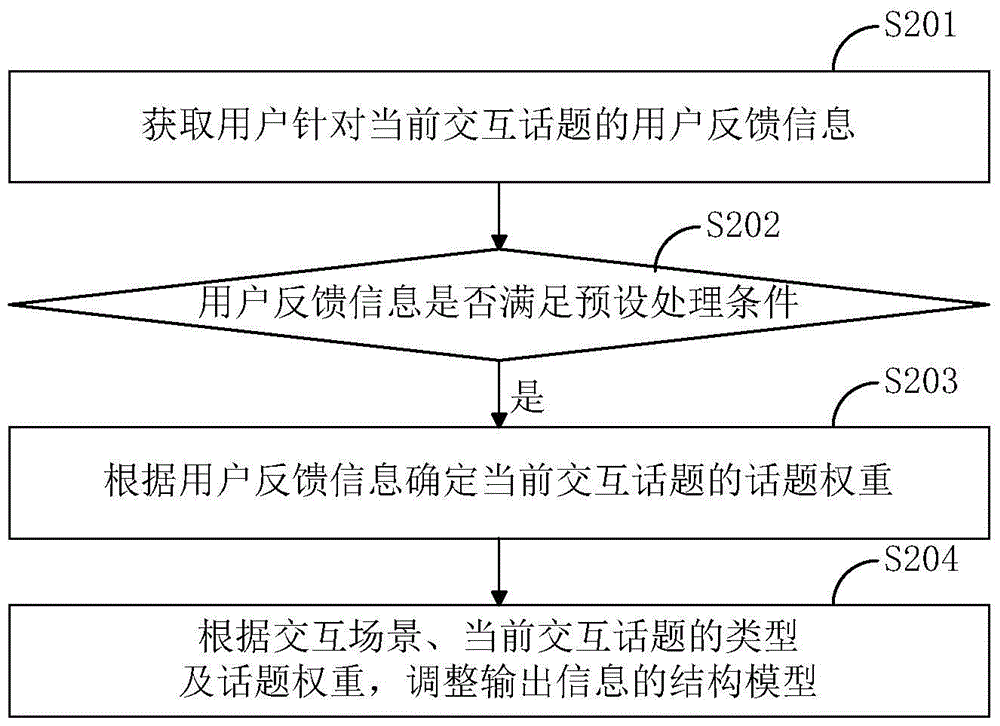

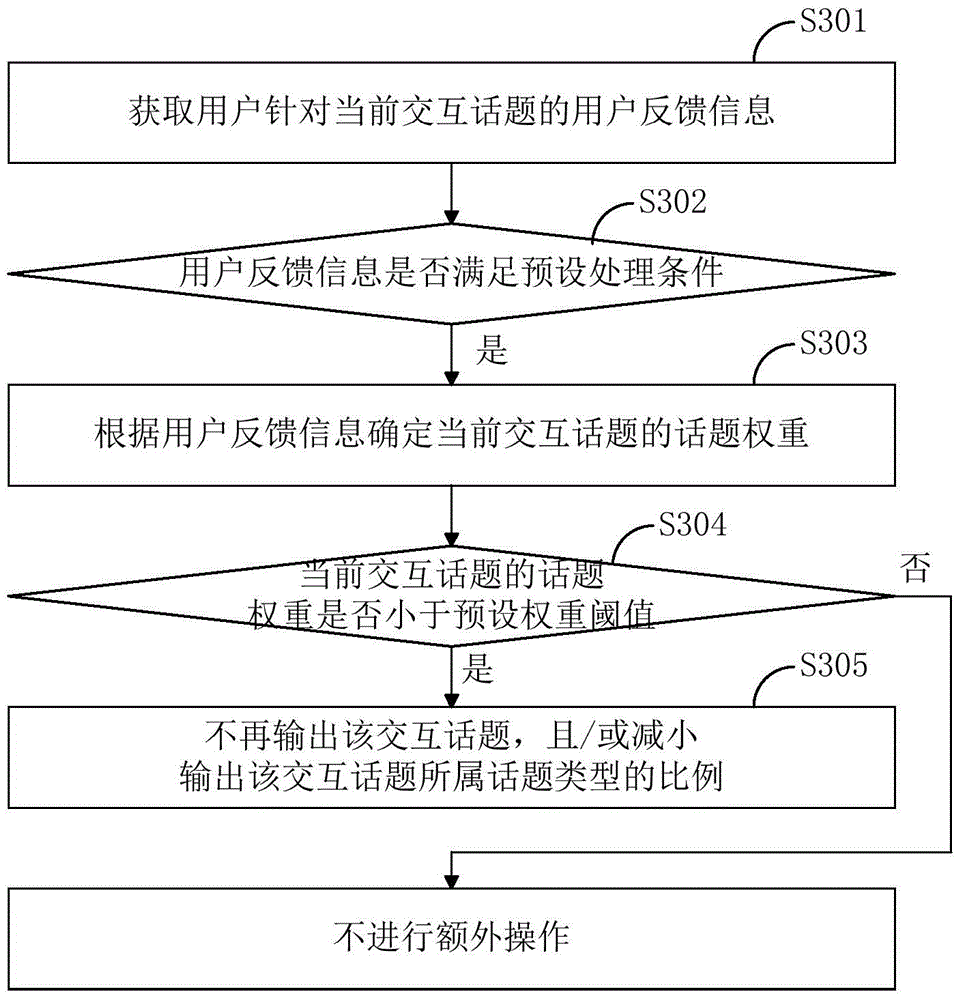

Question and answer evaluating method and device for intelligent robot

Status: Active | Publication: CN105760362A | Tags: Accurate response, Improve experience, Semantic analysis, Special data processing applications, Active perception, Questions and answers

The invention discloses a question-and-answer evaluation method and device for an intelligent robot. The method comprises: obtaining interaction information and acquiring the feedback that the user inputs on the current interaction topic; and determining a topic weight by judging whether the user feedback meets a preset processing condition and, if it does, determining the topic weight of the current interaction topic from that feedback, where the topic weight represents the user's degree of dislike for the current topic. With this method, the user's affective state toward the current interaction topic can be actively sensed during human-machine interaction, and the user's dislike of the topic can be quantified through the topic weight, so that the intelligent robot can respond accurately and the user experience during human-machine interaction is improved.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH
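
The abstract leaves the "preset processing condition" and the weight update unspecified. The Python sketch below is a hypothetical reading: feedback meets the condition when it contains a negative cue word, each such reply raises the topic weight (degree of dislike) by a fixed step, and the robot changes topic once the weight reaches a threshold. The cue list, step, and threshold are all assumptions.

```python
# Hypothetical cue words; the patent does not specify the preset condition.
NEGATIVE_CUES = ("boring", "stop", "whatever", "don't care")

def meets_preset_condition(feedback: str) -> bool:
    """Treat feedback as processable when it contains a negative cue."""
    text = feedback.lower()
    return any(cue in text for cue in NEGATIVE_CUES)

def update_topic_weight(weights: dict, topic: str, feedback: str, step: float = 0.25) -> float:
    """Raise the topic weight (degree of dislike, capped at 1.0) on negative feedback."""
    if meets_preset_condition(feedback):
        weights[topic] = min(1.0, weights.get(topic, 0.0) + step)
    return weights.get(topic, 0.0)

def should_switch_topic(weights: dict, topic: str, threshold: float = 0.5) -> bool:
    """Switch once the user's dislike of the topic reaches the threshold."""
    return weights.get(topic, 0.0) >= threshold

weights = {}
for reply in ["This is boring", "Tell me more", "Please stop"]:
    update_topic_weight(weights, "weather", reply)
print(weights, should_switch_topic(weights, "weather"))
```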

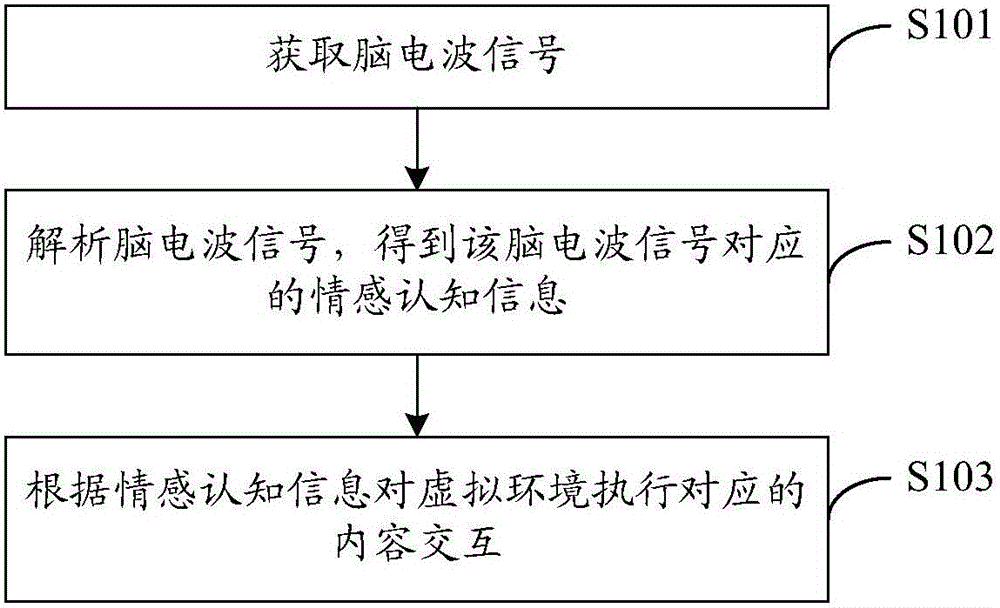

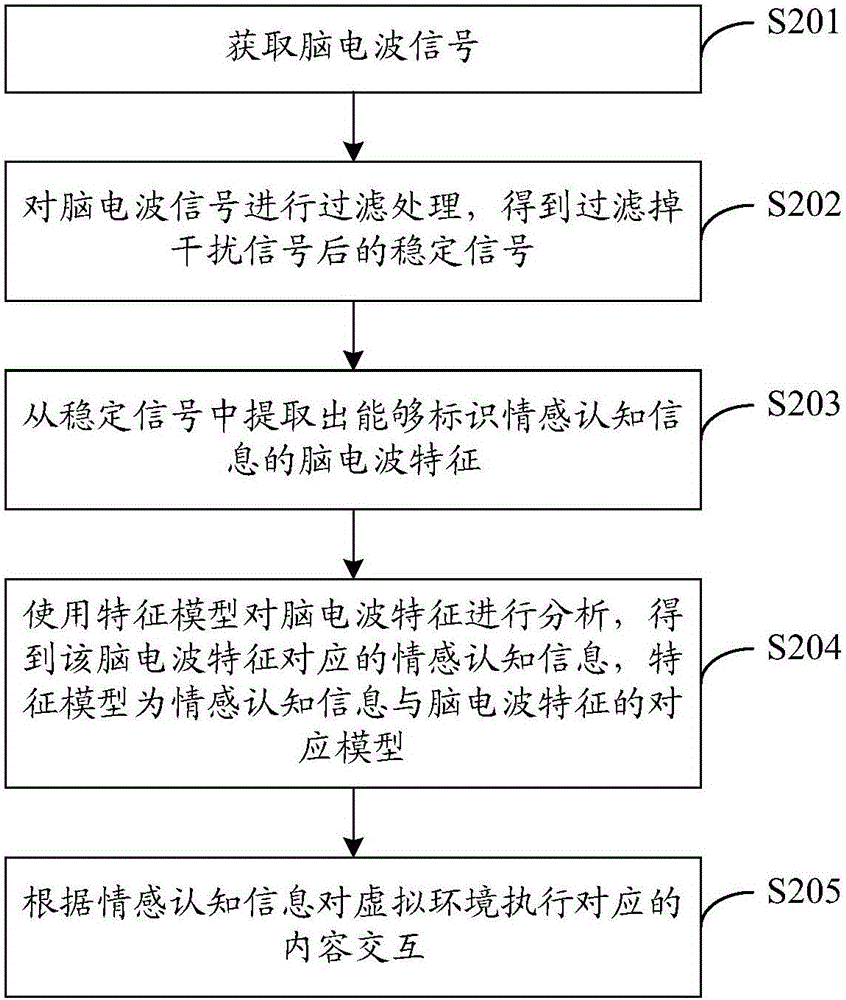

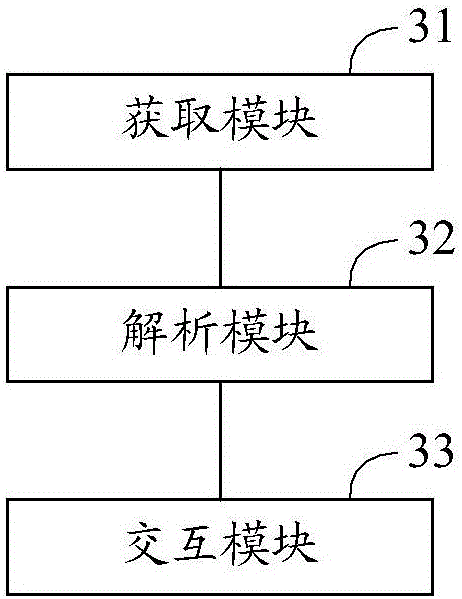

Method and device for content interaction in virtual reality

Status: Inactive | Publication: CN106560765A | Tags: Control is no longer limited, No longer limited to interactive behavior, Input/output for user-computer interaction, Graph reading, Active perception, Cognition.knowledge

The invention, which belongs to the computer field, provides a method and device for content interaction in virtual reality. It addresses two problems: the user's control of the virtual environment is limited to body movements or manual instructions, and the virtual environment only passively receives control instructions from the user before giving interaction feedback. The method comprises: acquiring a brain wave signal; analyzing the brain wave signal to obtain the corresponding emotion and cognition information; and executing the corresponding content interaction in the virtual environment according to that information. The virtual environment can therefore be controlled directly with brain wave signals, without any body movement or manual instruction; the user's emotional and cognitive state is sensed by analyzing the brain wave signal, so the virtual environment provides interaction feedback to the user actively; and interaction is not limited to images and touch, since both emotional state and attention state can serve as means of interaction.

Owner:EEGSMART TECHNOLOGY CO LTD
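
The abstract does not describe how emotion and attention are extracted from the brain wave signal. As an assumption for illustration, the Python sketch below estimates alpha (8 to 13 Hz) and beta (13 to 30 Hz) band power with a naive DFT, uses the beta share beta / (alpha + beta) as a crude attention index, and maps it to a hypothetical virtual-environment response. Real EEG processing would add filtering, artifact rejection, and per-user calibration; emotion estimation is not shown.

```python
import math

FS = 128  # assumed sampling rate in Hz

def band_power(signal, fs, lo, hi):
    """Sum of squared DFT magnitudes over bins with lo <= f < hi (naive O(N^2) DFT)."""
    n = len(signal)
    total = 0.0
    for k in range(1, n // 2):
        f = k * fs / n
        if lo <= f < hi:
            re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            total += re * re + im * im
    return total

def attention_index(signal, fs=FS):
    """Share of beta power in alpha + beta power (assumed heuristic, 0 to 1)."""
    alpha = band_power(signal, fs, 8.0, 13.0)
    beta = band_power(signal, fs, 13.0, 30.0)
    return beta / (alpha + beta) if alpha + beta > 0 else 0.0

def choose_interaction(signal, fs=FS, threshold=0.5):
    """Map the index to a hypothetical virtual-environment response."""
    return "advance_scene" if attention_index(signal, fs) >= threshold else "offer_hint"

# Two seconds of synthetic single-channel data.
focused = [math.sin(2 * math.pi * 20 * i / FS) for i in range(2 * FS)]
relaxed = [math.sin(2 * math.pi * 10 * i / FS) for i in range(2 * FS)]
print(choose_interaction(focused), choose_interaction(relaxed))
```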

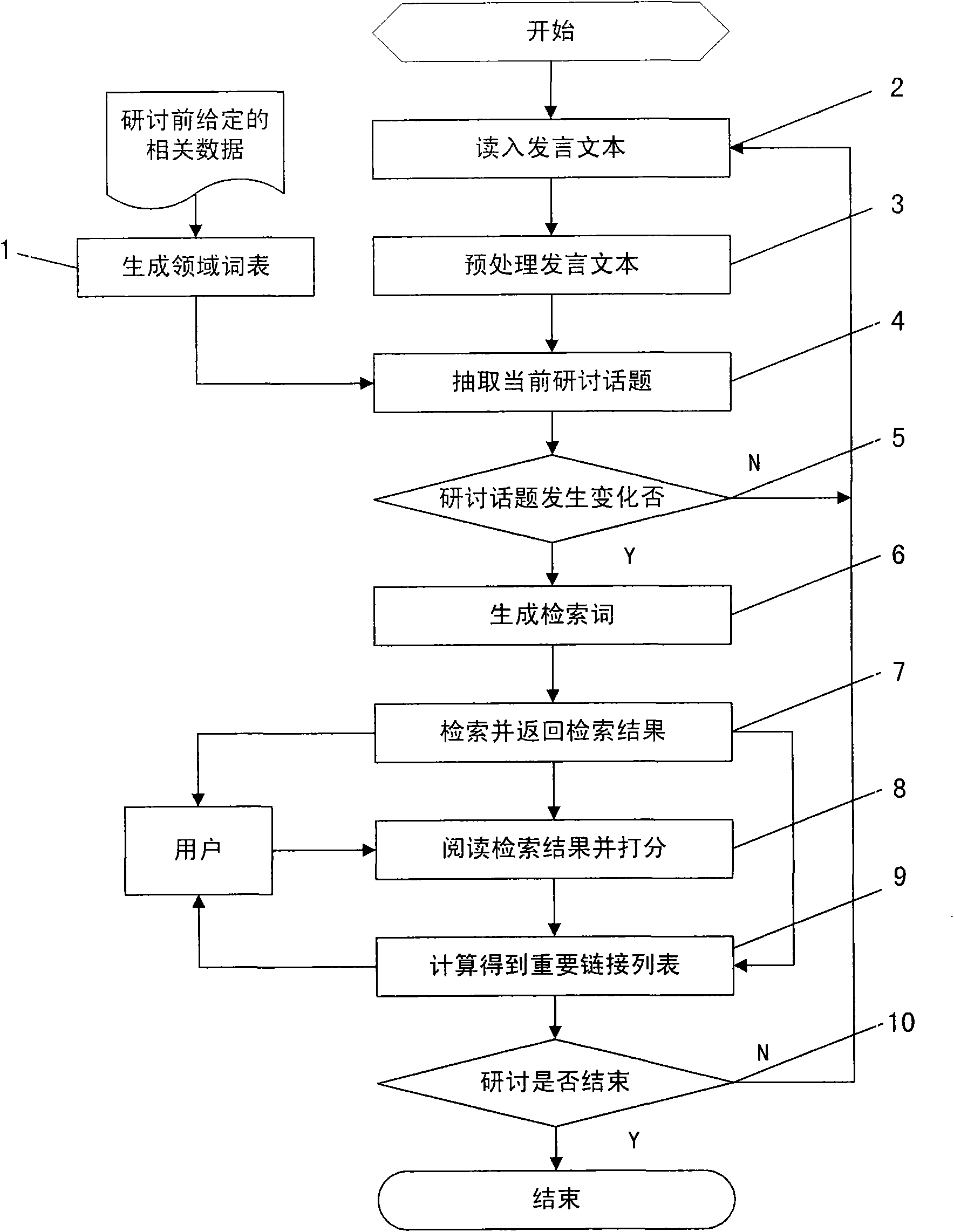

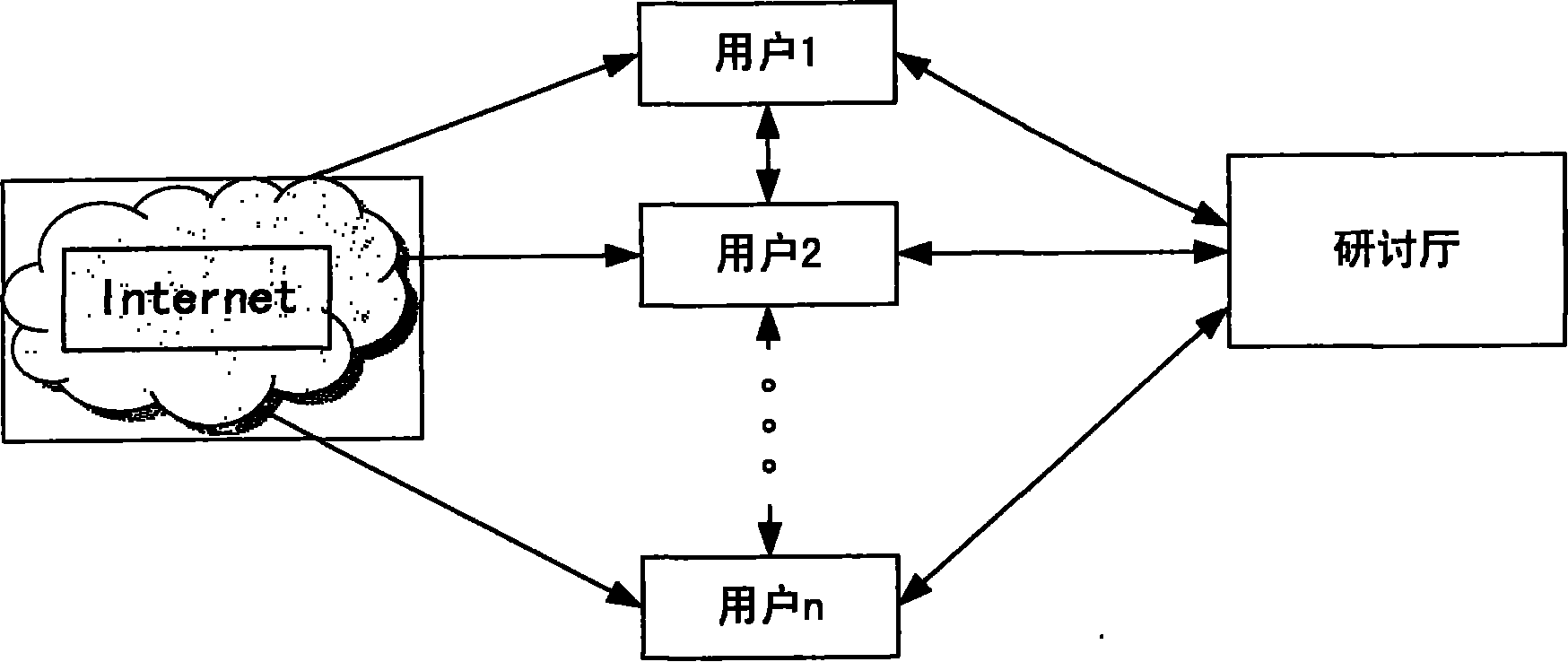

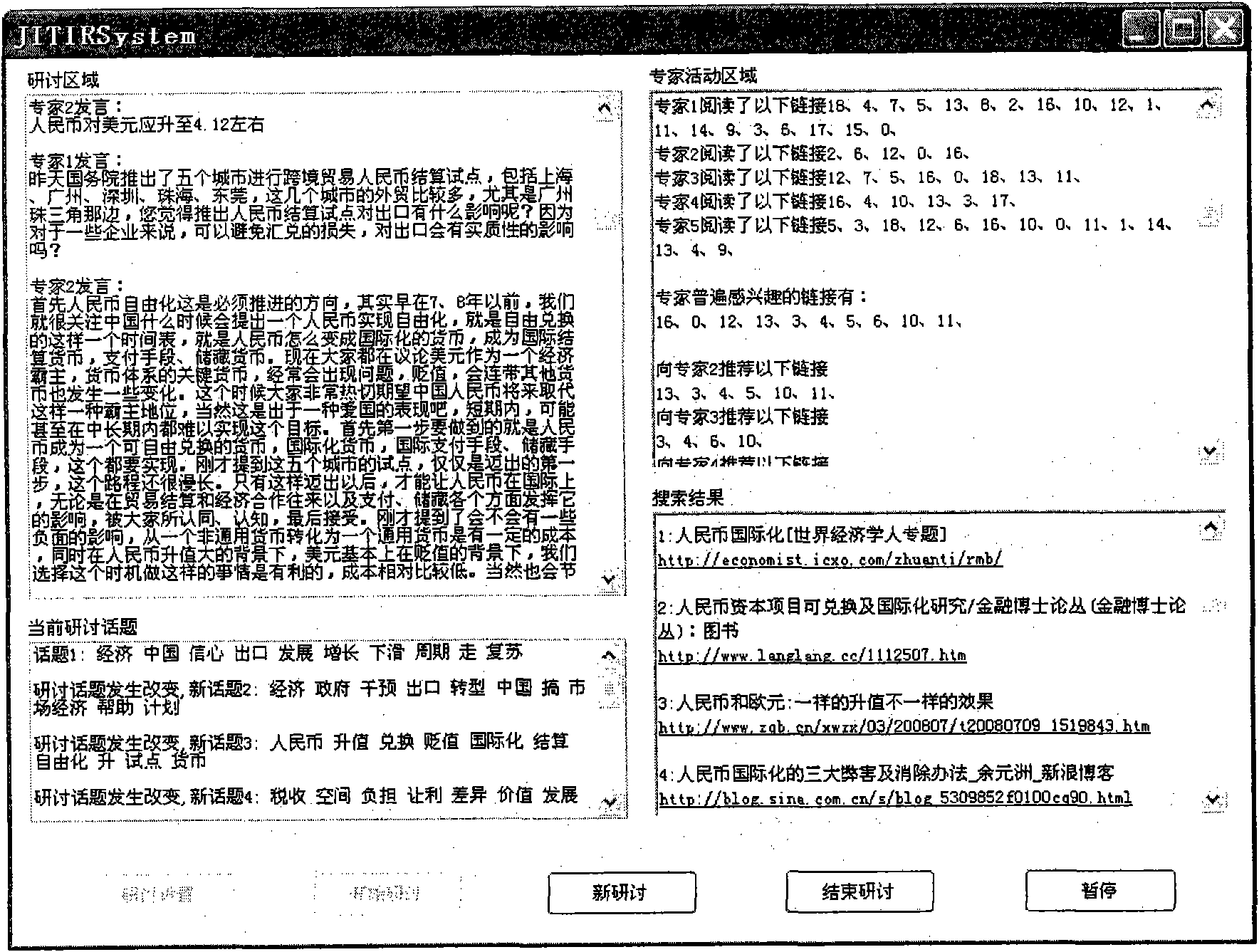

Integrated session environment-oriented information recommendation method

Status: Active | Publication: CN101782920A | Tags: Cutting costs, Increase profit, Special data processing applications, Active perception, Information searching

The invention discloses an information recommendation method for integrated session environments. The method adopts a real-time, active information acquisition technique and comprises the following steps: during a session, actively perceiving the specific topics of the current session; detecting topic changes; automatically generating index terms for retrieval when the topic changes; and presenting the search results to users. The method also accounts for the continuous change and flow of information in an integrated session environment: it analyzes the topic of the speech text by combining domain-specific features with common features, and it screens important information through the coordination of multiple users to produce the recommendations. The method, tested in an integrated session environment, can be applied in settings such as instant messaging and online meetings, where it greatly reduces the cost of searching for information and effectively increases information utilization.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
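
The steps of perceiving the topic, detecting a change, and generating index terms can be sketched as follows. This Python example assumes a bag-of-words representation: it compares term-frequency vectors of consecutive conversation windows by cosine similarity and, when the similarity falls below a hypothetical threshold, returns the most frequent terms as index words. The patent's combination of domain-specific and common features and its multi-user screening are not modeled.

```python
import math
from collections import Counter

# Small illustrative stopword list (assumption).
STOPWORDS = {"the", "a", "an", "and", "of", "to", "is", "we", "for", "in", "on", "or"}

def term_vector(utterances):
    """Term-frequency vector of a window of utterances, stopwords removed."""
    words = (w for u in utterances for w in u.lower().split())
    return Counter(w for w in words if w not in STOPWORDS)

def cosine(a, b):
    """Cosine similarity of two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def index_words_on_topic_change(prev_window, curr_window, threshold=0.2, k=3):
    """Return up to k index words if the topic changed, else an empty list."""
    prev_vec, curr_vec = term_vector(prev_window), term_vector(curr_window)
    if cosine(prev_vec, curr_vec) >= threshold:
        return []  # same topic: no new retrieval needed
    return [w for w, _ in curr_vec.most_common(k)]

prev = ["the budget for the project is tight", "project budget review"]
new = ["lunch options nearby", "pizza or sushi for lunch"]
print(index_words_on_topic_change(prev, new, k=1))
```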

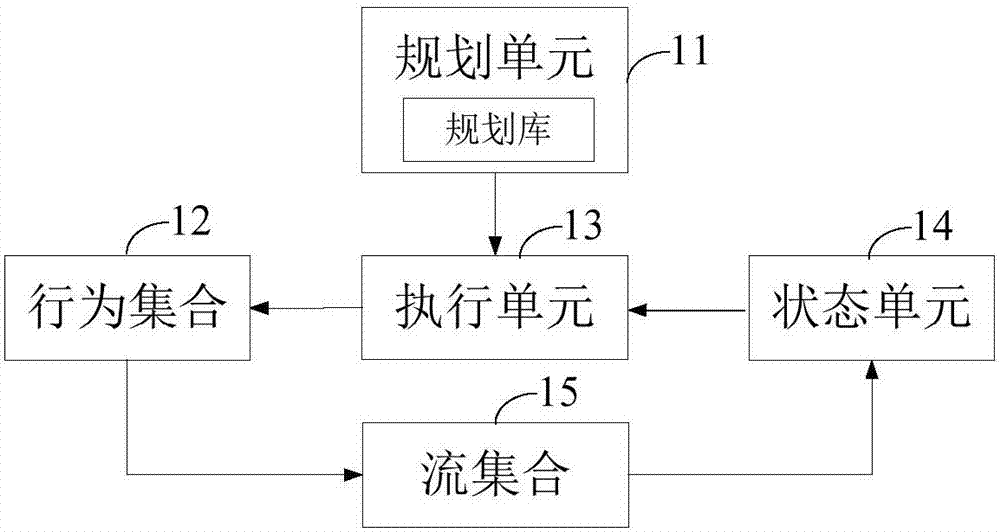

Virtual human and operation method thereof

Status: Active | Publication: CN106940594A | Tags: Enhanced ability to perform tasks, Implement features, Input/output for user-computer interaction, Software design, Active perception, Execution unit

The invention is applicable to the field of virtual reality and provides a virtual human and an operation method for it. The virtual human comprises a planning unit, a behavior set, an execution unit, a state unit, and a flow set. The planning unit resolves the tasks contained in a received user instruction, obtains a planning triple for each task, and sends it to the execution unit; the execution unit, which is connected to the behavior set and the state unit, determines an execution two-tuple and executes the task; the behavior set stores the behaviors contained in the tasks; the flow set stores the flows of the virtual human; and the state unit provides the current state. The virtual human can actively sense the current state of the virtual environment it is in, so it behaves like a real person in a real environment, and it can automatically decide which actions to take according to the tasks to be executed.

Owner:SHENZHEN INSTITUTE OF INFORMATION TECHNOLOGY

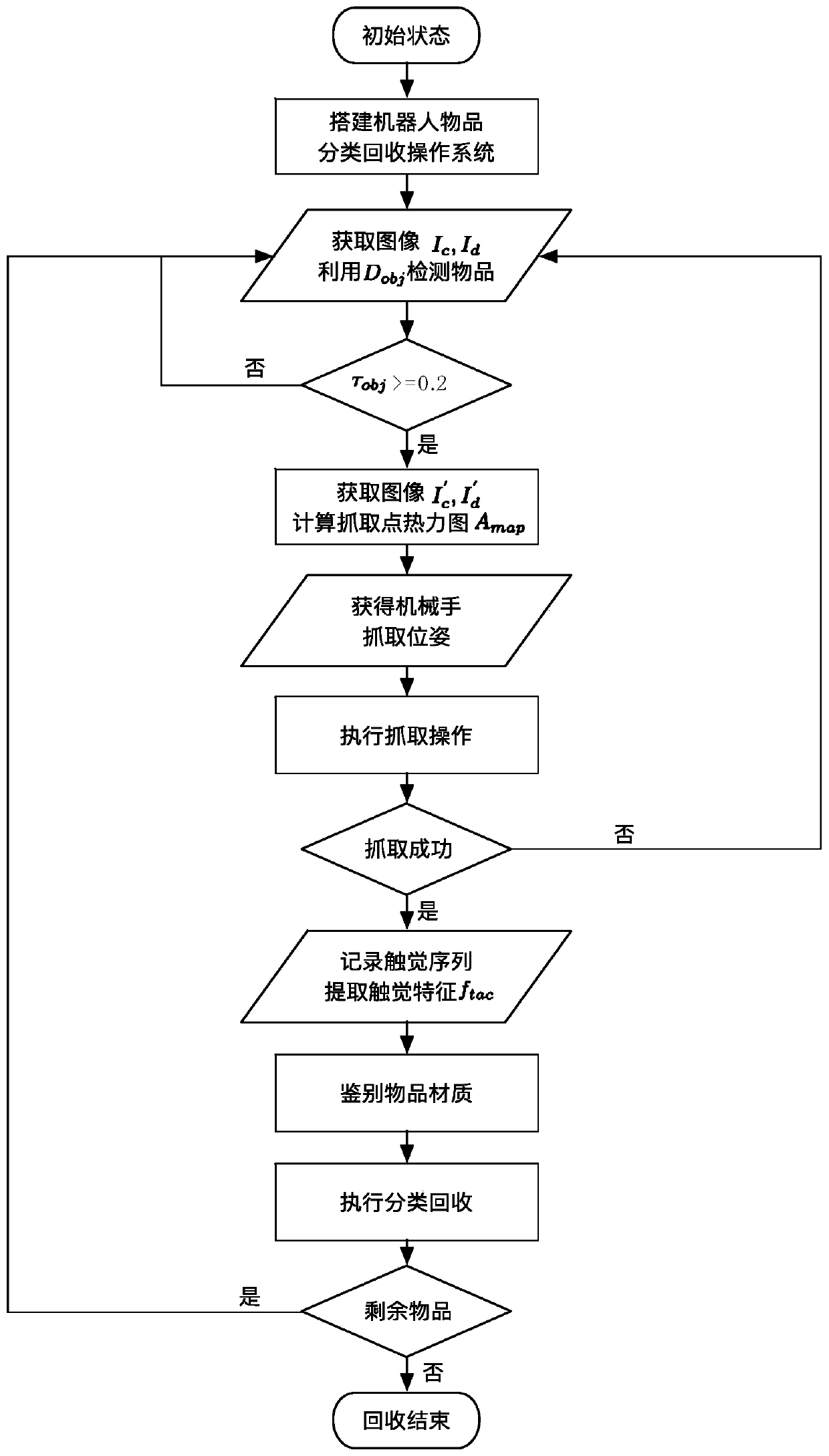

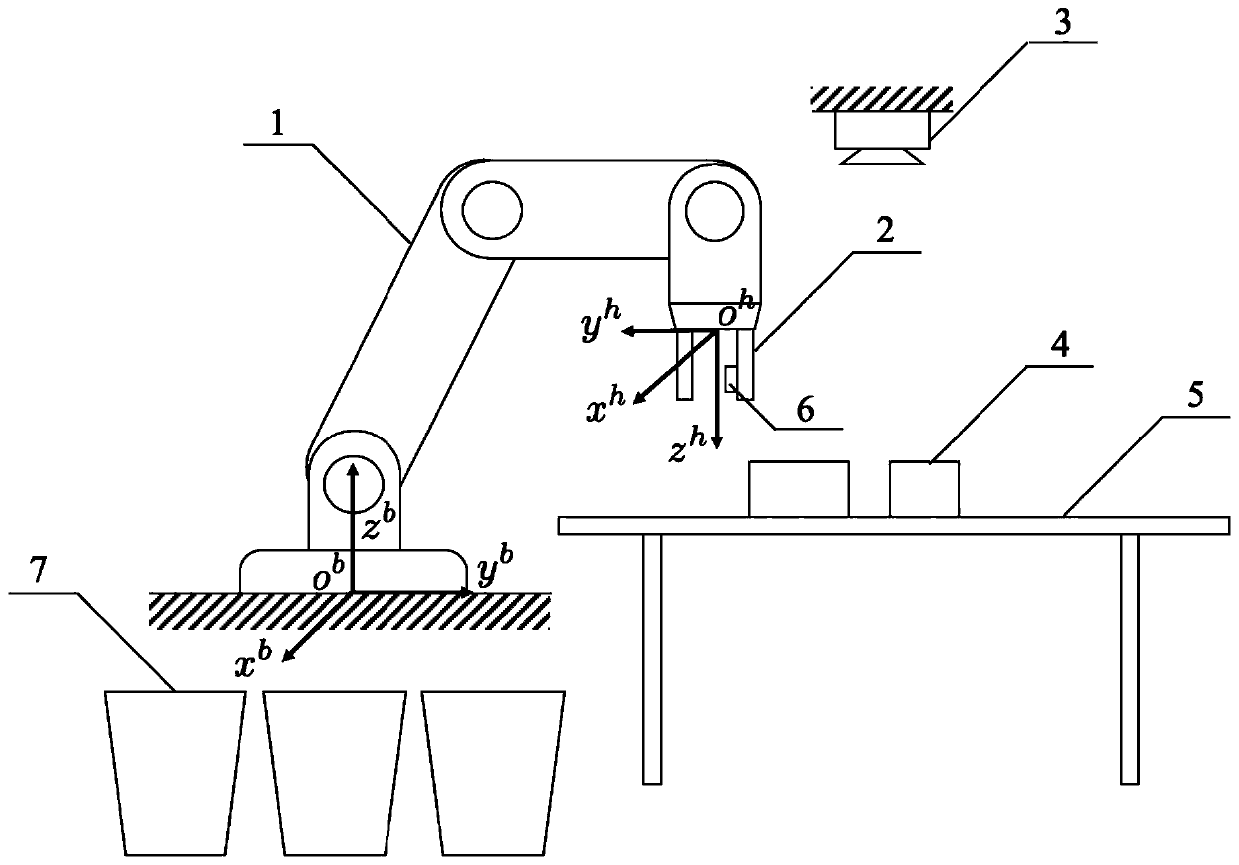

Article classification and recovery method based on multi-modal active perception

Status: Active | Publication: CN111590611A | Tags: Realize material identification, Accurate classification, Gripping heads, Active perception, Signal on

The invention relates to an article classification and recovery method based on multi-modal active perception, in the technical field of robot applications. First, a target detection network model for the target articles is built; then a grasping pose for the target article is obtained, and a robotic arm system is guided to actively grasp the article with a pinch grip in that pose. Tactile sensors at the gripper's fingertips acquire tactile signals from the article's surface in real time during grasping; features are extracted from this tactile information and fed into a tactile classifier that identifies the article's material, after which the article is classified and recovered. By combining visual and tactile information, the method uses the visual detection result to guide the robot to actively grasp the article in the most suitable pose and collect tactile information, thereby identifying the material and completing classification and recovery. Recyclable articles made of various materials can be identified automatically, giving the method broad applicability and practical value.

Owner:北京具身智能科技有限公司 (Beijing Embodied Intelligence Technology Co., Ltd.)
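
The abstract does not specify the tactile features or the classifier. The Python sketch below assumes three simple features of a fingertip pressure sequence (mean, standard deviation, peak) and a nearest-centroid classifier; the material labels and readings are synthetic values made up for the example.

```python
import statistics

def tactile_features(samples):
    """Hypothetical features of one fingertip pressure sequence."""
    return (statistics.fmean(samples), statistics.pstdev(samples), max(samples))

def train_centroids(labelled):
    """labelled: {material: [pressure sequence, ...]} -> {material: mean feature vector}."""
    centroids = {}
    for material, sequences in labelled.items():
        feats = [tactile_features(s) for s in sequences]
        centroids[material] = tuple(
            statistics.fmean(f[i] for f in feats) for i in range(len(feats[0]))
        )
    return centroids

def classify(samples, centroids):
    """Return the material whose centroid is closest (squared Euclidean distance)."""
    f = tactile_features(samples)
    return min(centroids, key=lambda m: sum((a - b) ** 2 for a, b in zip(f, centroids[m])))

# Synthetic training data (made-up readings, arbitrary units).
centroids = train_centroids({
    "plastic": [[0.20, 0.25, 0.22, 0.21], [0.18, 0.20, 0.22, 0.19]],
    "metal": [[0.80, 0.82, 0.79, 0.81], [0.85, 0.83, 0.84, 0.86]],
})
print(classify([0.21, 0.23, 0.20, 0.22], centroids))
```

A nearest-centroid rule keeps the example short; a real system would more likely train a stronger classifier on many labelled grasps.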

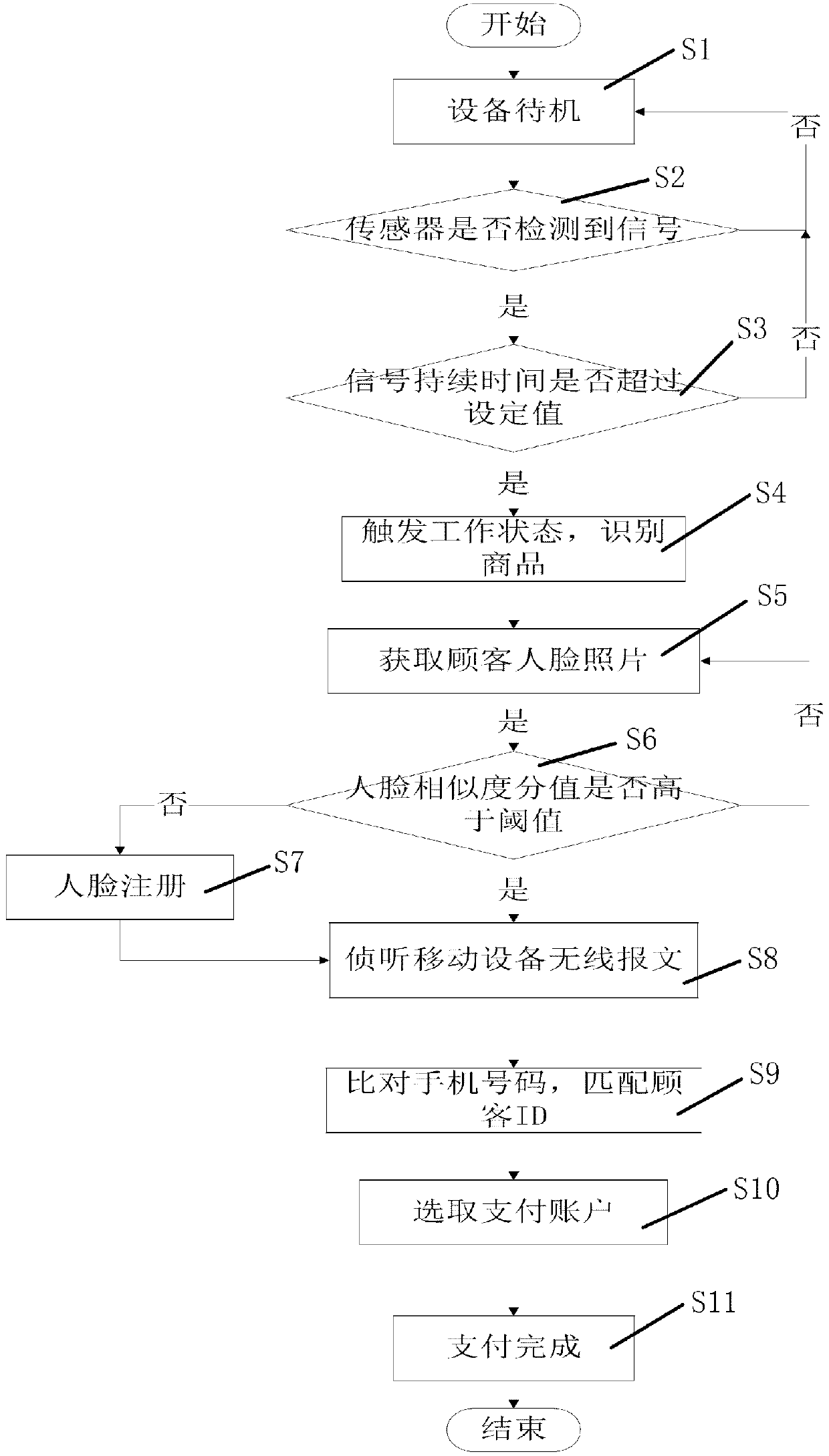

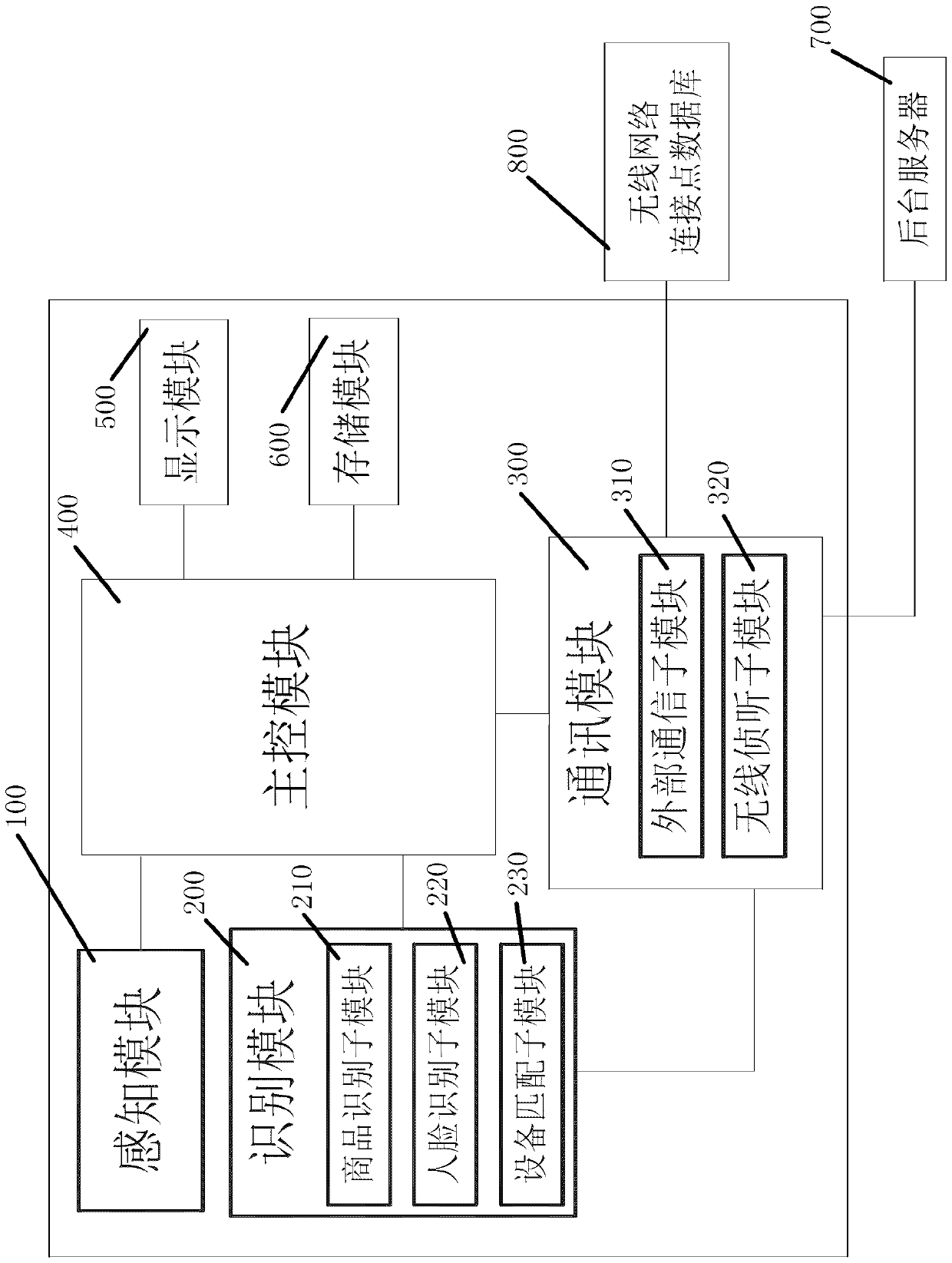

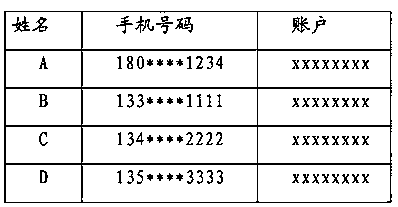

Self-checkout equipment and self-checkout method

Status: Inactive | Publication: CN109903484A | Tags: Easy to deploy, Reduce damage rate, Cash registers, Point-of-sale network systems, Human body, Payment

The invention relates to self-checkout equipment and a self-checkout method. The equipment has: a sensing module that senses whether a person is approaching the equipment and outputs a sensing signal when one is detected; an identification module that identifies commodity identification information and the person's biometric characteristics; a communication module that communicates externally and wirelessly listens for nearby mobile devices; and a main control module that receives the sensing signal and, based on it, determines whether to activate the equipment and match it to the customer's device address and payment account. The equipment can thus actively sense a person and start a service, and biometric payment is optimized; it is also easy to deploy and does not need to be paired with other equipment.

Owner:CHINA UNIONPAY

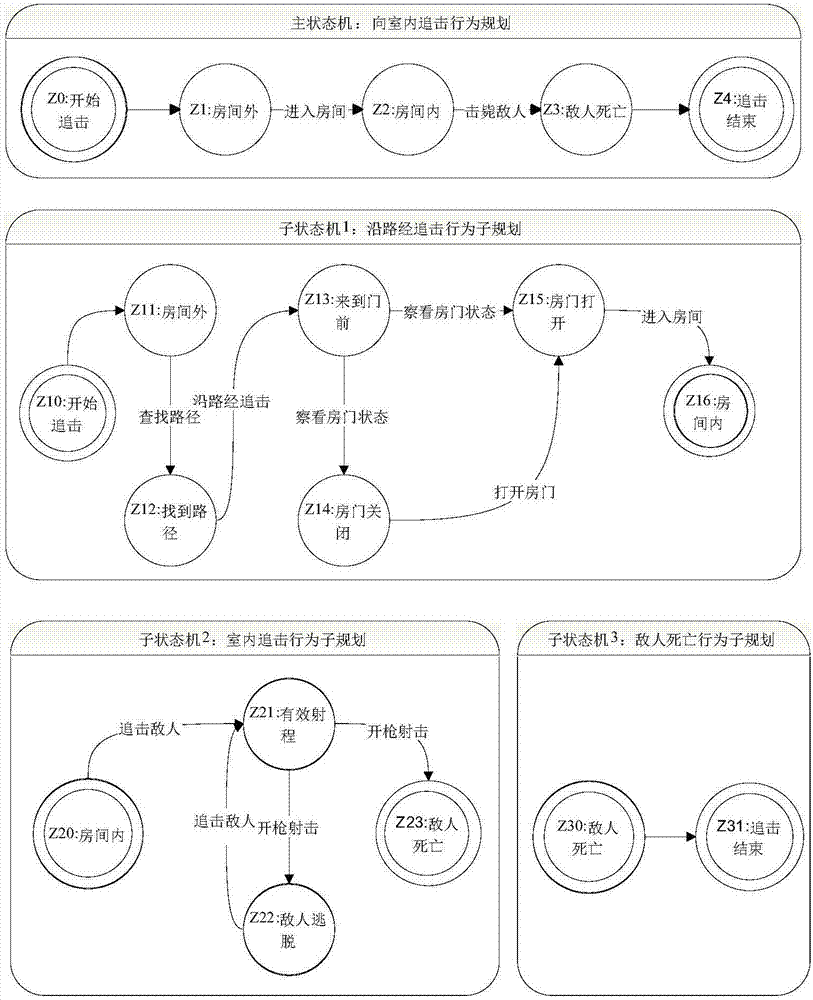

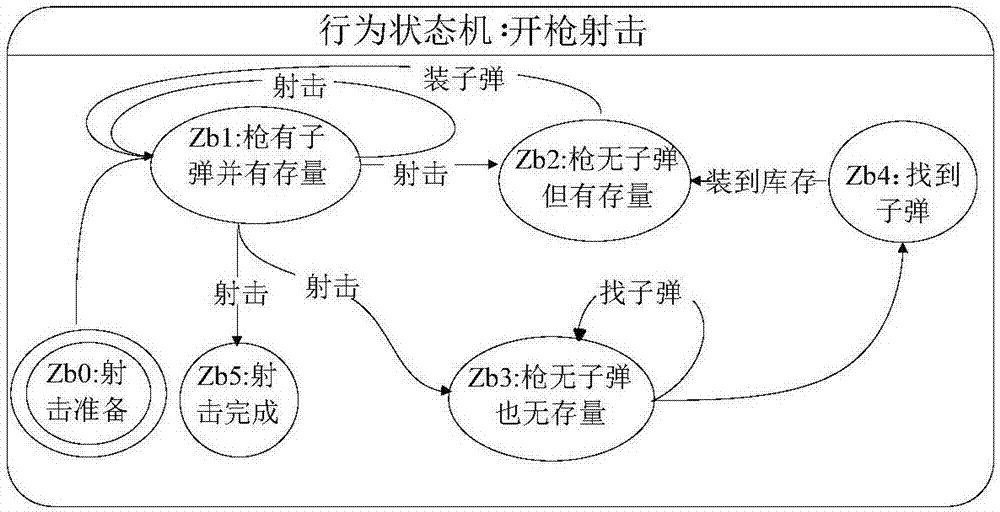

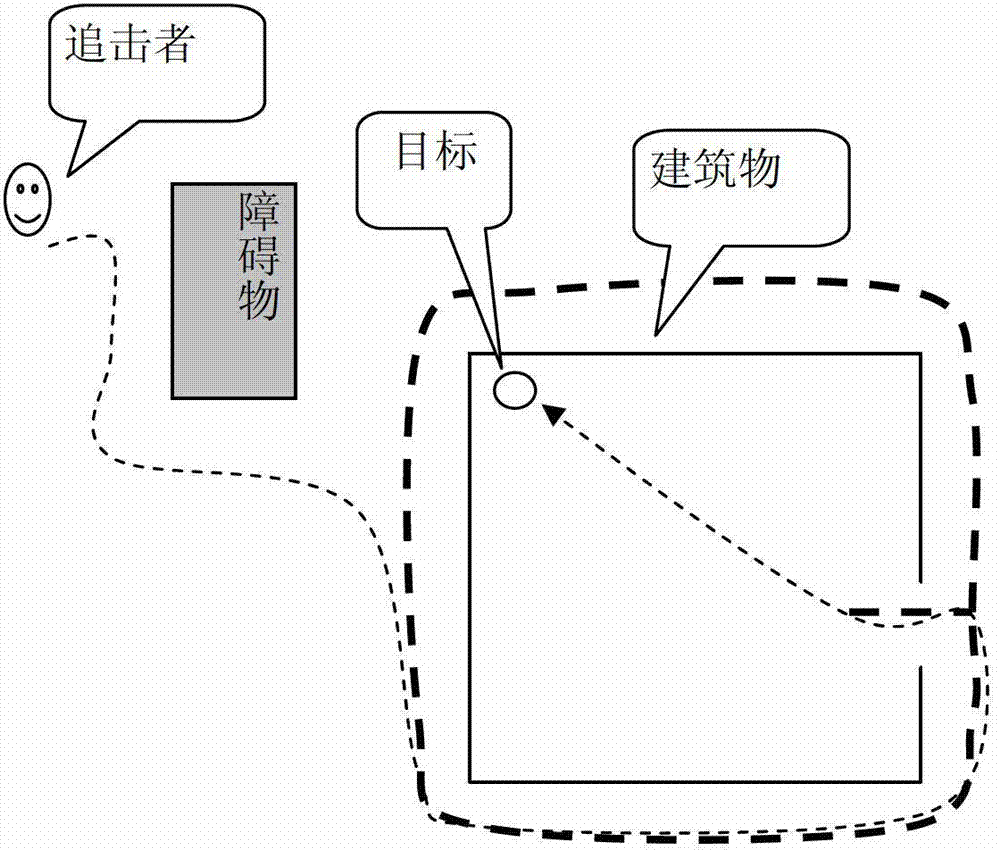

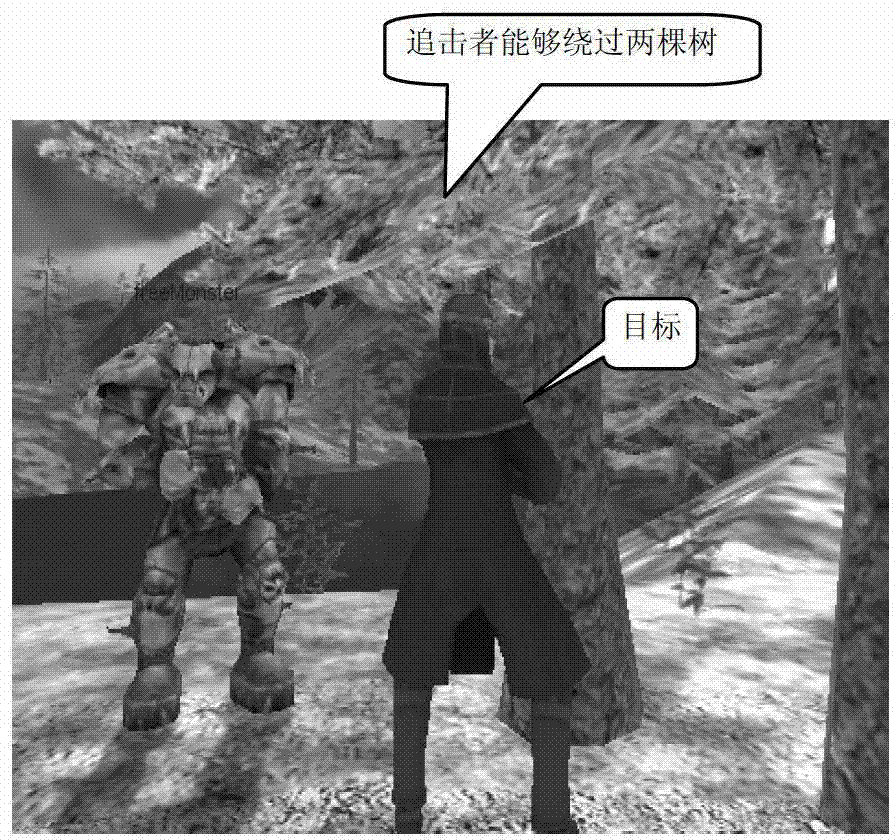

Method and system for environmental perception simulation of virtual human

The invention provides a method and system for simulating the environmental perception of a virtual human. The method comprises: creating the virtual human and the target it chases, and determining the virtual human's position and speed; implementing binocular vision; periodically, according to the virtual human's speed, directing its binocular vision at the chased target; determining the virtual human's chasing motion from the binocular vision, information about obstacle impact points in its environment, and a preset behavior-inference knowledge base; and executing the chasing motion. In this way the virtual human perceives the chased target and actively determines a chasing path in a dynamic, incompletely known virtual environment.

Owner:SHENZHEN INSTITUTE OF INFORMATION TECHNOLOGY

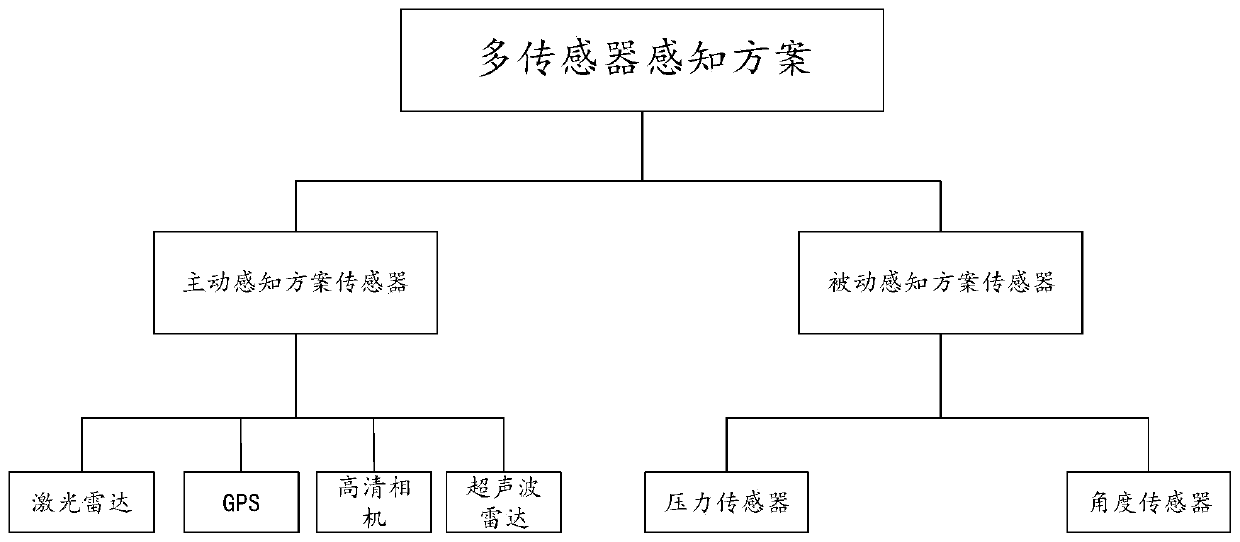

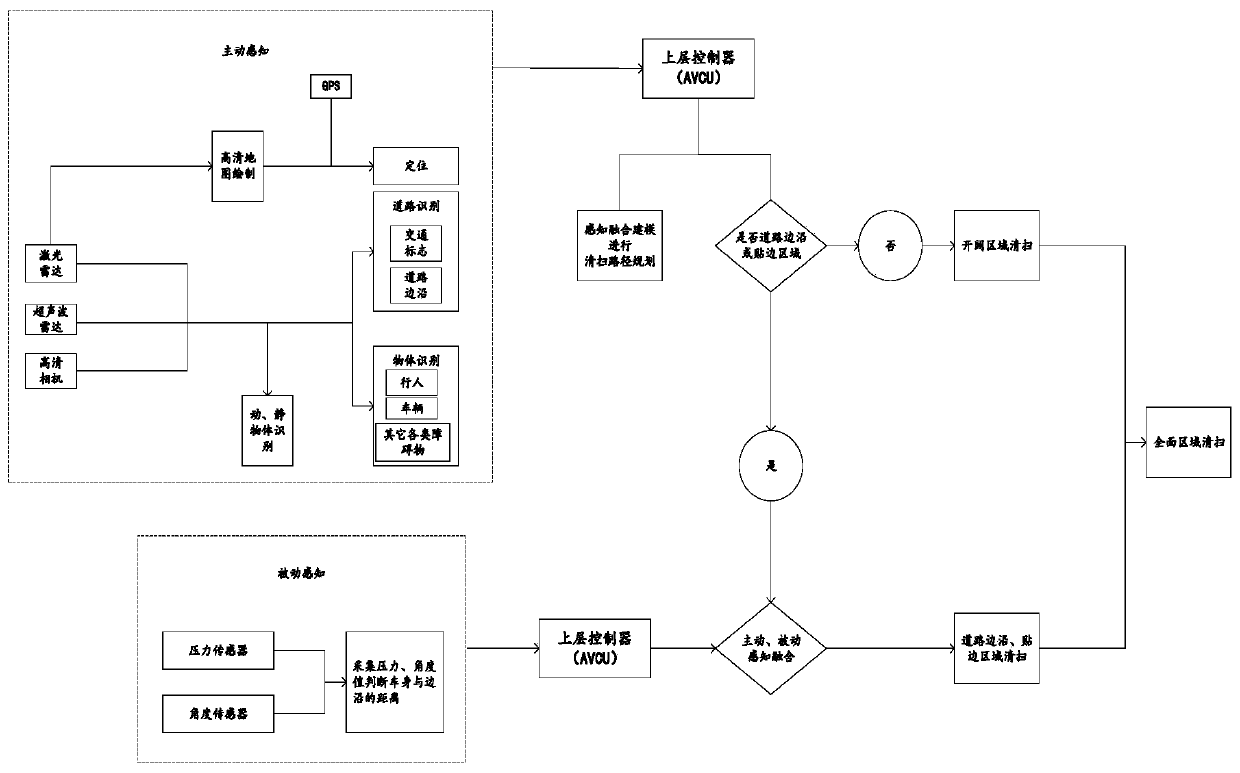

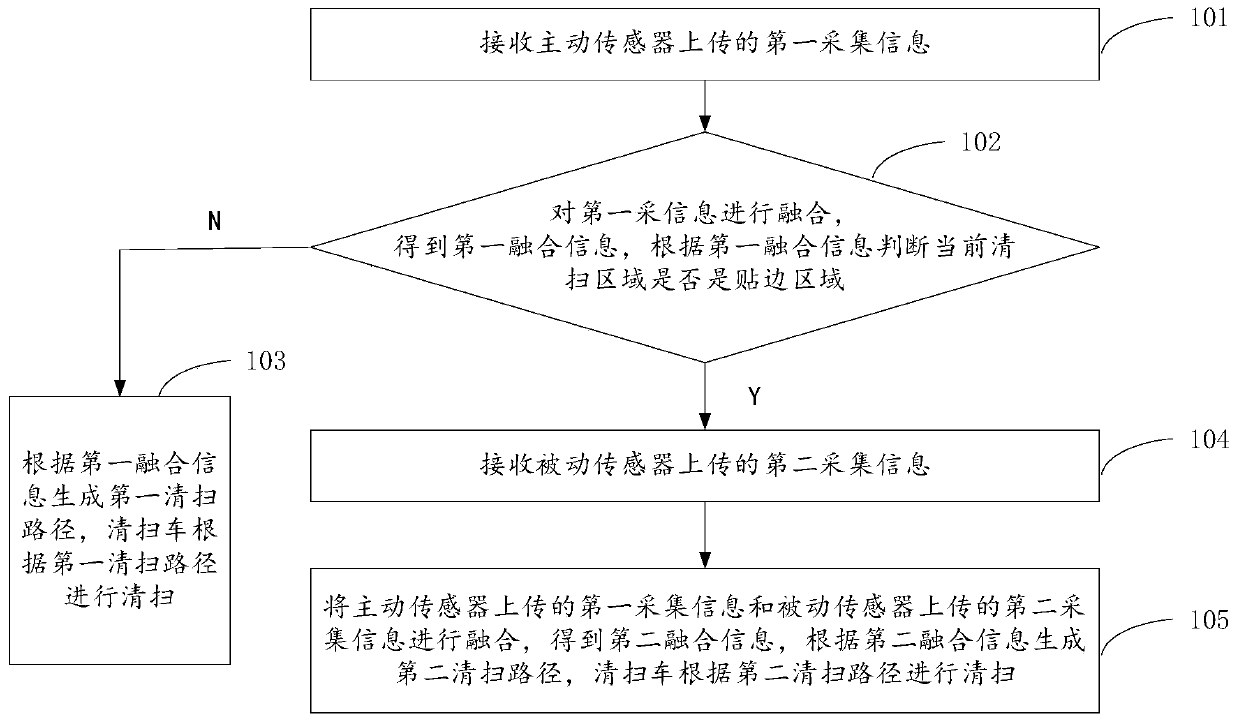

Multi-sensor based facing sweeping method and sweeping vehicle

Status: Active | Publication: CN110499727A | Tags: Thorough cleaning of the area, Road cleaning, Position/course control in two dimensions, Active perception, Simulation

The embodiment of the invention relates to a multi-sensor-based facing sweeping method and a sweeping vehicle. The method comprises the following steps: receiving first acquisition information uploaded by a drive sensor; fusing the first acquisition information to obtain first fused information, and judging from it whether the current sweeping area is a facing area; when the current sweeping area is not a facing area, generating a first sweeping path from the first fused information and having the sweeping vehicle sweep along it; when the current sweeping area is a facing area, receiving second acquisition information uploaded by a driven sensor, fusing the first acquisition information from the drive sensor with the second acquisition information from the driven sensor to obtain second fused information, generating a second sweeping path from the second fused information, and having the sweeping vehicle sweep along it. Because the method fuses active and passive perception, the sweeping vehicle can sweep the area more thoroughly.

Owner:BEIJING ZHIXINGZHE TECH CO LTD
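
The abstract names the fusion steps but not the fusion rule. The Python sketch below assumes each sensor reports a distance-to-edge estimate with a variance and fuses them by inverse-variance weighting; the branch mirrors the described flow (fuse the drive-sensor data first, and add the driven-sensor data only in a facing area). The facing-area test, units, and numbers are hypothetical, and path generation is omitted.

```python
def fuse(readings):
    """Inverse-variance weighted fusion of (value, variance) pairs."""
    weights = [1.0 / var for _, var in readings]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    return value, 1.0 / total

def fused_input(first_readings, second_readings, is_facing_area):
    """first_readings: from the drive sensor(s); second_readings: from the driven sensor(s).
    Returns the fused (value, variance) that the sweeping path would be generated from."""
    first = fuse(first_readings)
    if not is_facing_area(first):
        return first  # first fused information only
    return fuse(first_readings + second_readings)  # second fused information

def near_edge(fused):
    """Hypothetical rule: a facing area is one where the edge is closer than 0.5 m."""
    return fused[0] < 0.5

print(fused_input([(0.30, 0.04), (0.40, 0.04)], [(0.32, 0.01)], near_edge))
```

Inverse-variance weighting is only one possible choice; it has the convenient property that the fused variance shrinks as each extra sensor is added.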

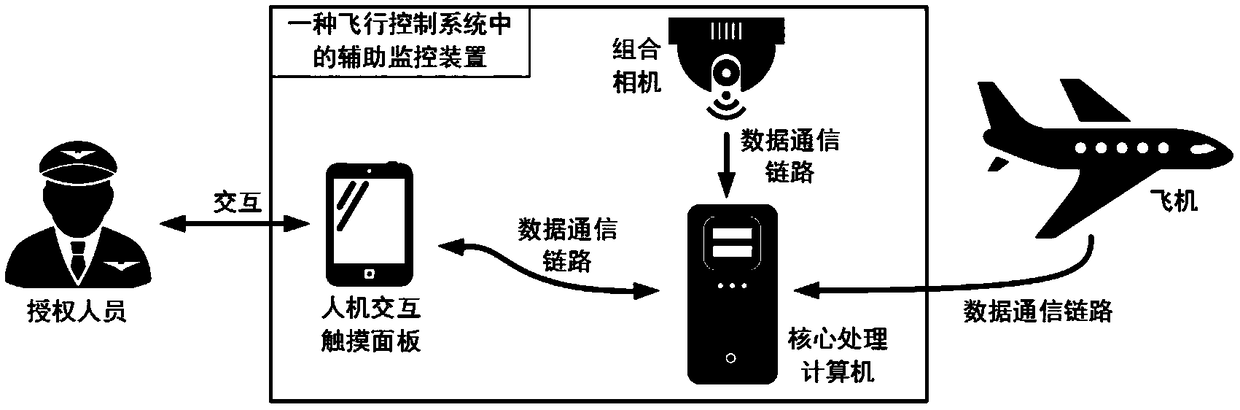

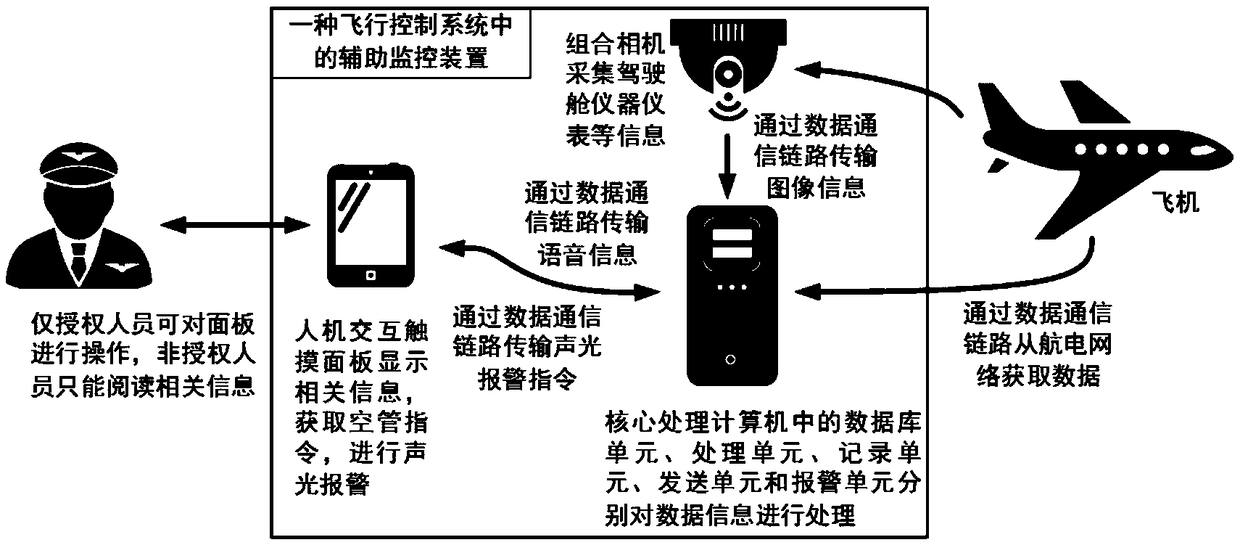

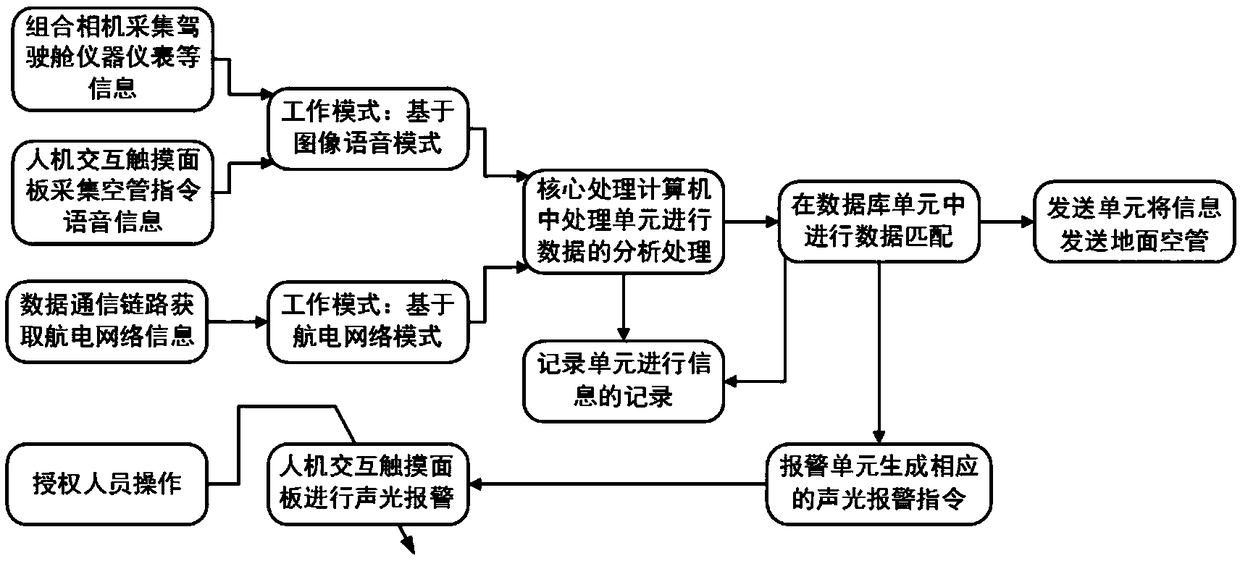

Auxiliary monitoring device in flight control system

Status: Active | Publication: CN108717300A | Tags: Effective monitoring, Improve operational safety, Aircraft components, Special data processing applications, Active perception, Flight control modes

The invention relates to the field of flight control systems, and in particular to an auxiliary monitoring device in a flight control system. The device comprises a core processing module containing a database unit, a processing unit, and a sending unit. The database unit stores rule data describing violations of flight regulations and unsafe flight control data. The processing unit acquires the aircraft's real-time flight route and attitude information and compares it with the stored rule data and unsafe flight control data; whenever they match, the matching information is passed to the sending unit, which transmits it to ground air traffic control. By combining the aircraft's flight status information with the system's actively perceived information, the device monitors the flight in real time, provides flight crews with effective supervision of their piloting and with alerts, and prevents flight accidents caused by human operation.

Owner:BEIJING AERONAUTIC SCI & TECH RES INST OF COMAC +1
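
The comparison performed by the processing unit can be illustrated as checking the live flight state against stored limits. In the Python sketch below, the rule structure, parameter names, and limit values are illustrative assumptions, not regulatory figures or the patent's data; `send` stands in for the sending unit.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    name: str
    parameter: str
    low: float
    high: float

# Illustrative limits only; real rule data would come from the database unit.
RULES = (
    Rule("excessive bank angle", "bank_deg", -35.0, 35.0),
    Rule("excessive pitch", "pitch_deg", -15.0, 25.0),
    Rule("below minimum altitude", "altitude_ft", 1000.0, math.inf),
)

def matching_rules(state, rules=RULES):
    """Names of rules whose limits the current flight state violates."""
    return [r.name for r in rules
            if r.parameter in state and not (r.low <= state[r.parameter] <= r.high)]

def monitor(state, send, rules=RULES):
    """Forward each match to the sending unit (here: any callable)."""
    matches = matching_rules(state, rules)
    for name in matches:
        send(name)
    return matches

sent = []
monitor({"bank_deg": 40.0, "pitch_deg": 5.0, "altitude_ft": 3000.0}, sent.append)
print(sent)
```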

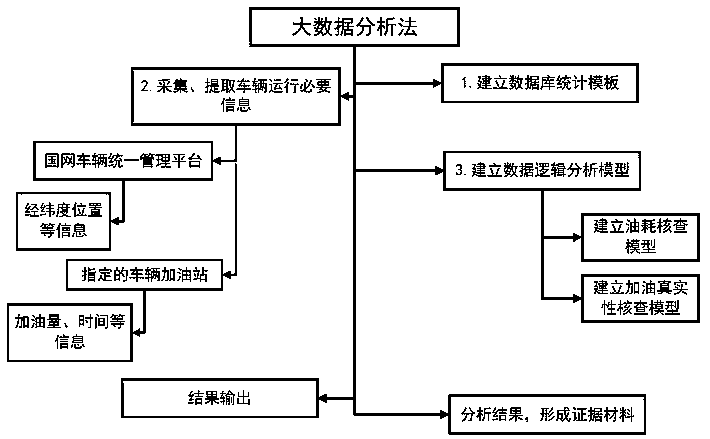

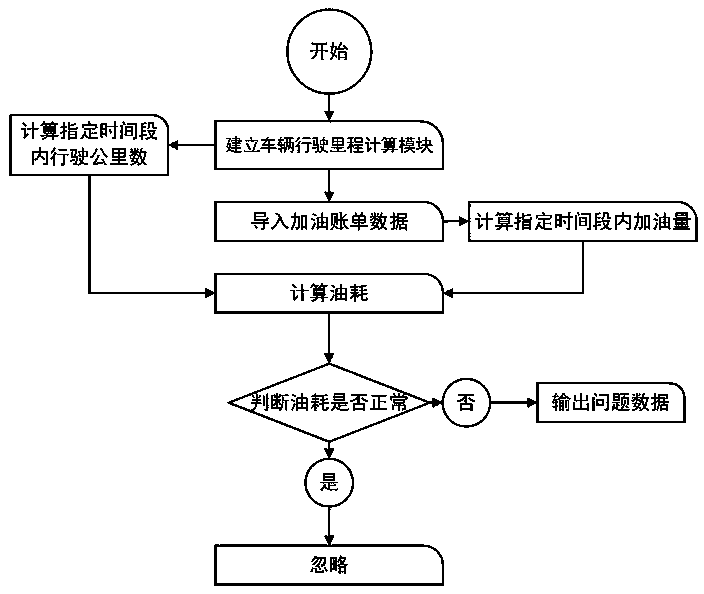

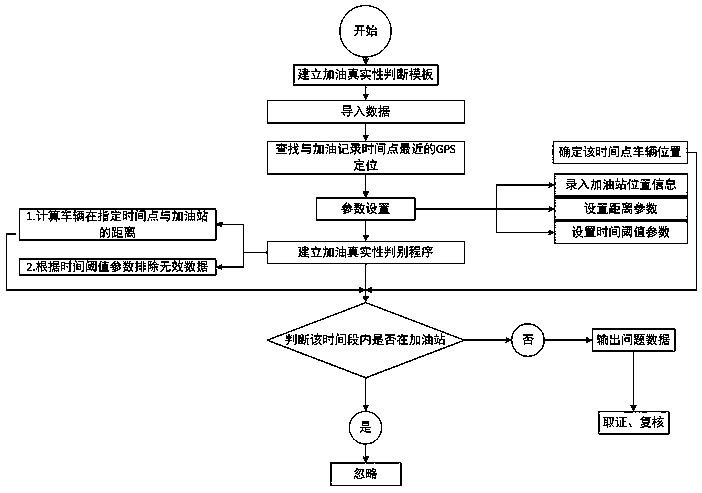

Digital auditing method for vehicle

Status: Pending | Publication: CN110490537A | Tags: Resolve irrelevance, Solve the result, Finance, Office automation, Active perception, Longitude

The invention discloses a digital auditing method for vehicles, comprising the following steps: A, extracting the vehicle's driving track and refueling bill data to local storage, processing the data, and importing it into a database template; B, recording with the vehicle's GPS system and performing integration and weighted calculation on the differences between successive longitude and latitude readings; and C, building a logic analysis model that takes the driving distance in kilometers and the refueling cost as driving data, writing a logic judgment program, and finally comparing vehicle fuel consumption and verifying the authenticity of refueling. The method offers active sensing, instant early warning, and decision support, and can greatly improve auditing efficiency. The vehicle's fuel consumption can also be monitored dynamically, with longitudinal comparisons along the time axis and cross-comparisons between drivers, which lightens auditors' workload, reduces human error, and improves audit quality. It also addresses problems in supervision work such as the lack of linkage across large volumes of vehicle management data and analysis results that are hard to interpret.

Owner:国网江西省电力有限公司超高压分公司 (State Grid Jiangxi Electric Power Co., Ltd. Extra-High Voltage Branch) +1
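
Steps B and C can be sketched as follows. As an assumption, this Python example sums great-circle (haversine) distances between successive GPS fixes to obtain the distance driven, in place of the patent's unspecified integration and weighting, and then compares the implied fuel consumption with a hypothetical expected figure and a 20 % tolerance. Treating the refueled volume as fuel consumed is only reasonable over a full-tank-to-full-tank interval.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(p, q):
    """Great-circle distance between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, p)
    lat2, lon2 = map(math.radians, q)
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def track_length_km(track):
    """Sum of distances between successive GPS fixes."""
    return sum(haversine_km(p, q) for p, q in zip(track, track[1:]))

def audit(track, refuel_liters, expected_l_per_100km, tolerance=0.2):
    """Return (actual L/100 km, suspicious?) for one refueling interval."""
    km = track_length_km(track)
    if km == 0:
        return None, True  # fuel bought but no distance driven
    actual = refuel_liters / km * 100.0
    suspicious = abs(actual - expected_l_per_100km) > tolerance * expected_l_per_100km
    return actual, suspicious

track = [(0.0, 0.0), (0.0, 0.5), (0.0, 1.0)]  # synthetic fixes along the equator
print(track_length_km(track), audit(track, 9.0, 8.0), audit(track, 20.0, 8.0))
```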

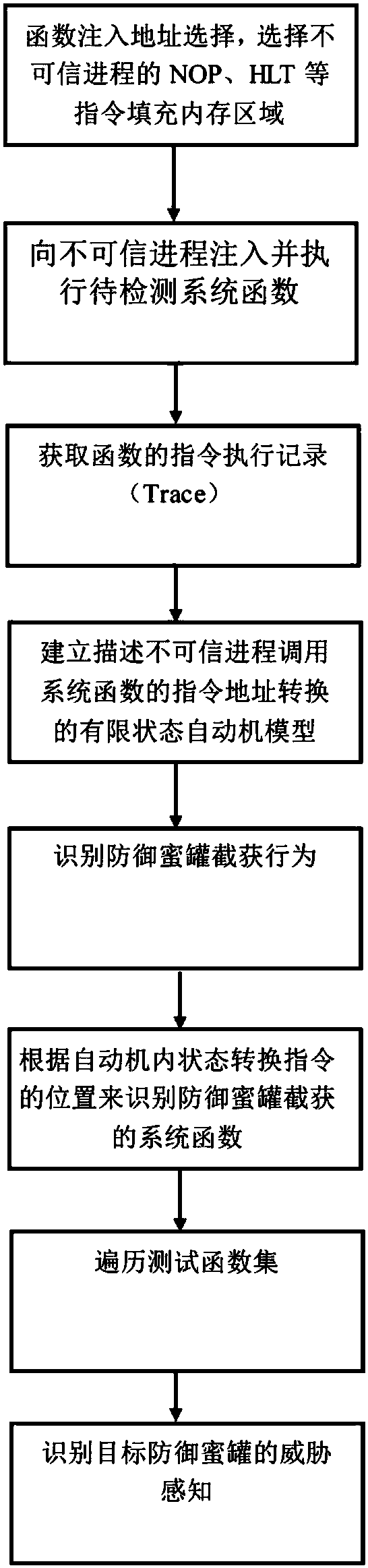

Defense honeypot-based security threat active sensing method

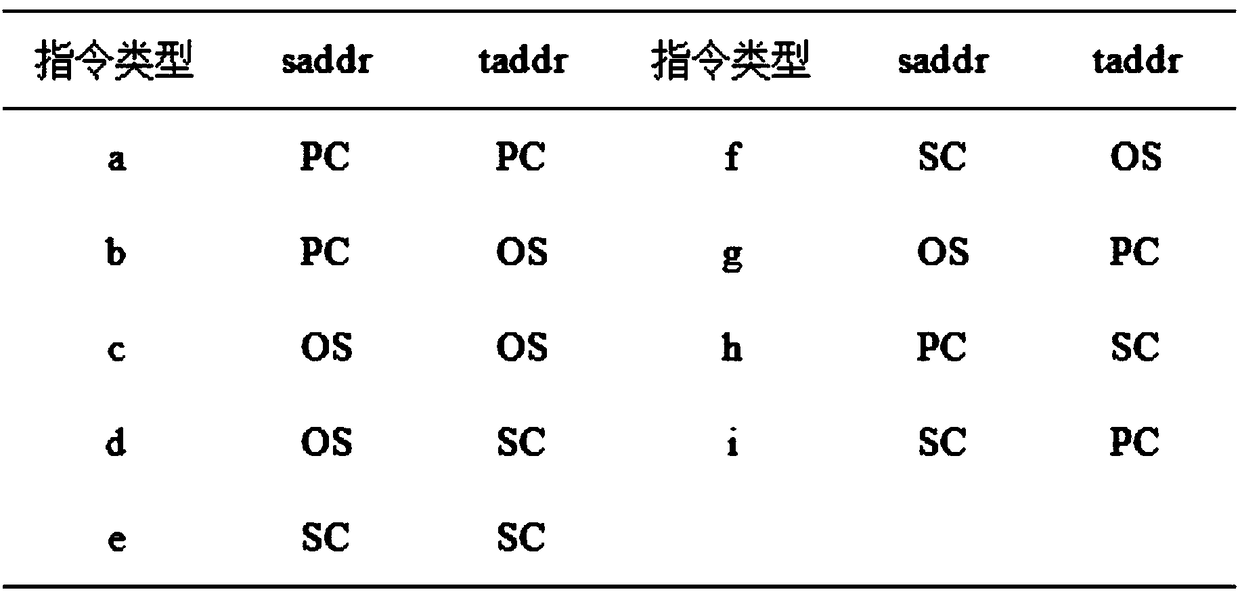

Status: Active | Publication: CN108446557A | Tags: The same threat perception and identification capabilities, Improve efficiency, Platform integrity maintenance, Transmission, Active perception, Automaton

The invention discloses a security threat active sensing method based on defense honeypots. Building on an understanding of hooking techniques, it determines whether a defense honeypot intercepts a test function by analyzing the execution trace of a system function. First, the system function to be tested is injected into an untrusted process and executed to obtain the function's instruction execution record (trace). Next, exploiting the characteristic traces a defense honeypot leaves when it intercepts a system function, an address-space finite state automaton is designed, and the obtained trace is analyzed by this automaton to determine which system functions the defense honeypot intercepts. Finally, a set of test functions is traversed to identify the threat perception of the target defense honeypot. Compared with existing methods for identifying threat perception, this method offers the same identification capability for defense honeypots while being more automatic and more efficient.

Owner:江苏中天互联科技有限公司 (Jiangsu Zhongtian Hulian Technology Co., Ltd.)
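
One way to picture an "address-space finite state automaton" is sketched below in Python. It is a hypothetical simplification: each traced instruction address is mapped to a named memory region, the automaton's state is the current region, and only whitelisted transitions between regions are accepted; a jump into an unexpected region (for example, a honeypot's hook handler) marks the function as intercepted. Region names, address ranges, and traces are made up.

```python
def region_of(addr, regions):
    """Name of the memory region containing addr, or 'unknown'."""
    for name, (lo, hi) in regions.items():
        if lo <= addr < hi:
            return name
    return "unknown"

def intercepted(trace, regions, allowed):
    """Run the automaton over one trace; True if an unexpected region transition occurs."""
    if not trace:
        return False
    state = region_of(trace[0], regions)
    for addr in trace[1:]:
        nxt = region_of(addr, regions)
        if nxt != state and (state, nxt) not in allowed:
            return True
        state = nxt
    return False

def intercepted_functions(traces, regions, allowed):
    """Traverse the test-function set and list the functions that look hooked."""
    return sorted(name for name, trace in traces.items()
                  if intercepted(trace, regions, allowed))

# Made-up address layout and traces for illustration.
REGIONS = {"kernel32": (0x1000, 0x2000), "ntdll": (0x3000, 0x4000)}
ALLOWED = {("kernel32", "ntdll"), ("ntdll", "kernel32")}
TRACES = {
    "CreateFileW": [0x1010, 0x1020, 0x3010, 0x1030],  # normal kernel32 -> ntdll path
    "WriteFile": [0x1100, 0x9000, 0x1104],            # detour into an unknown region
}
print(intercepted_functions(TRACES, REGIONS, ALLOWED))
```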

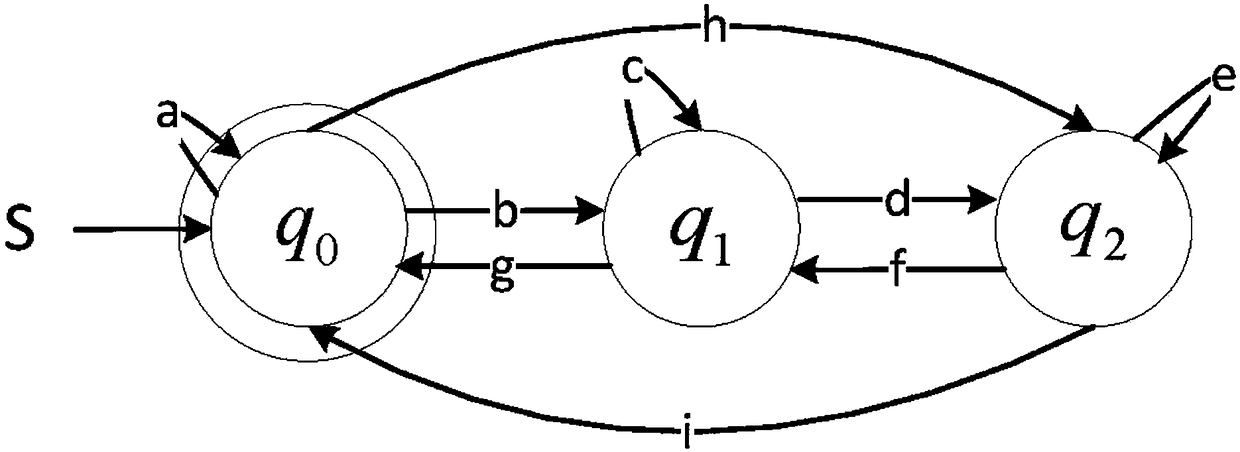

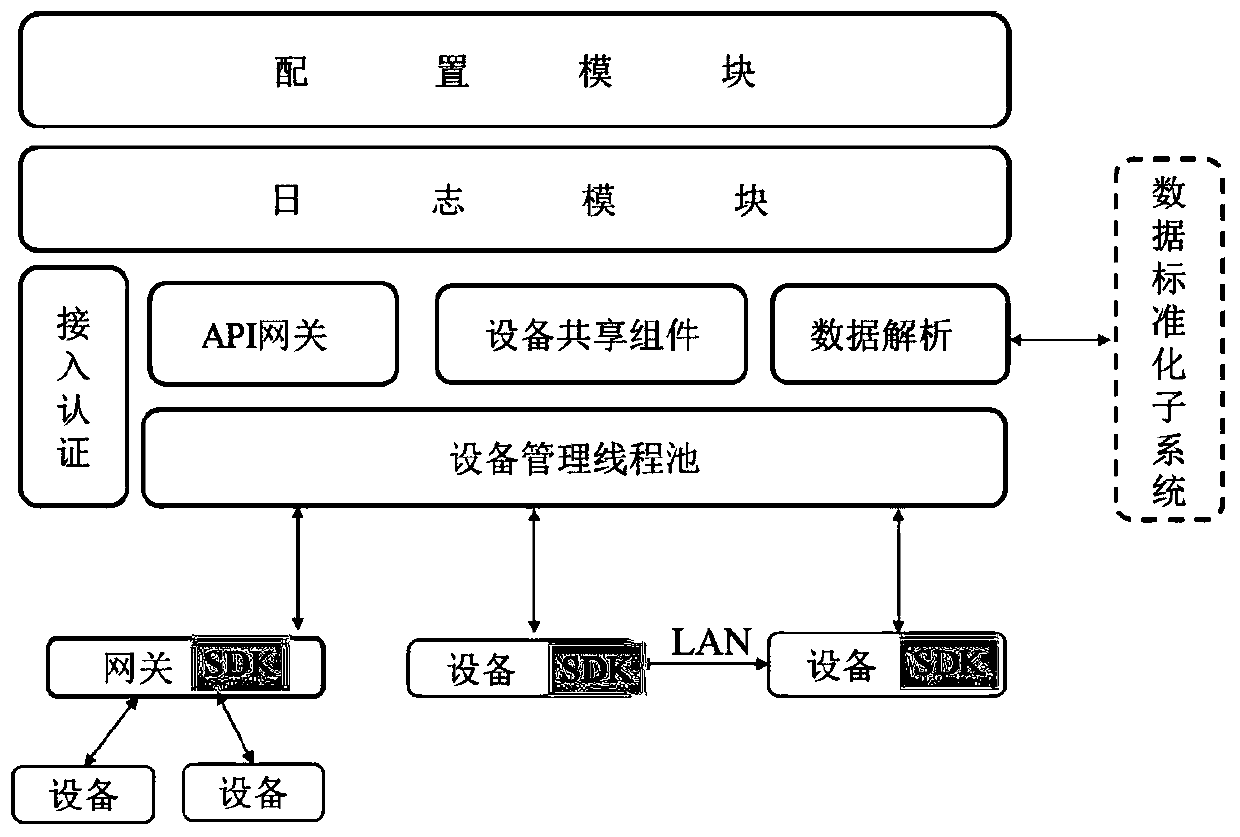

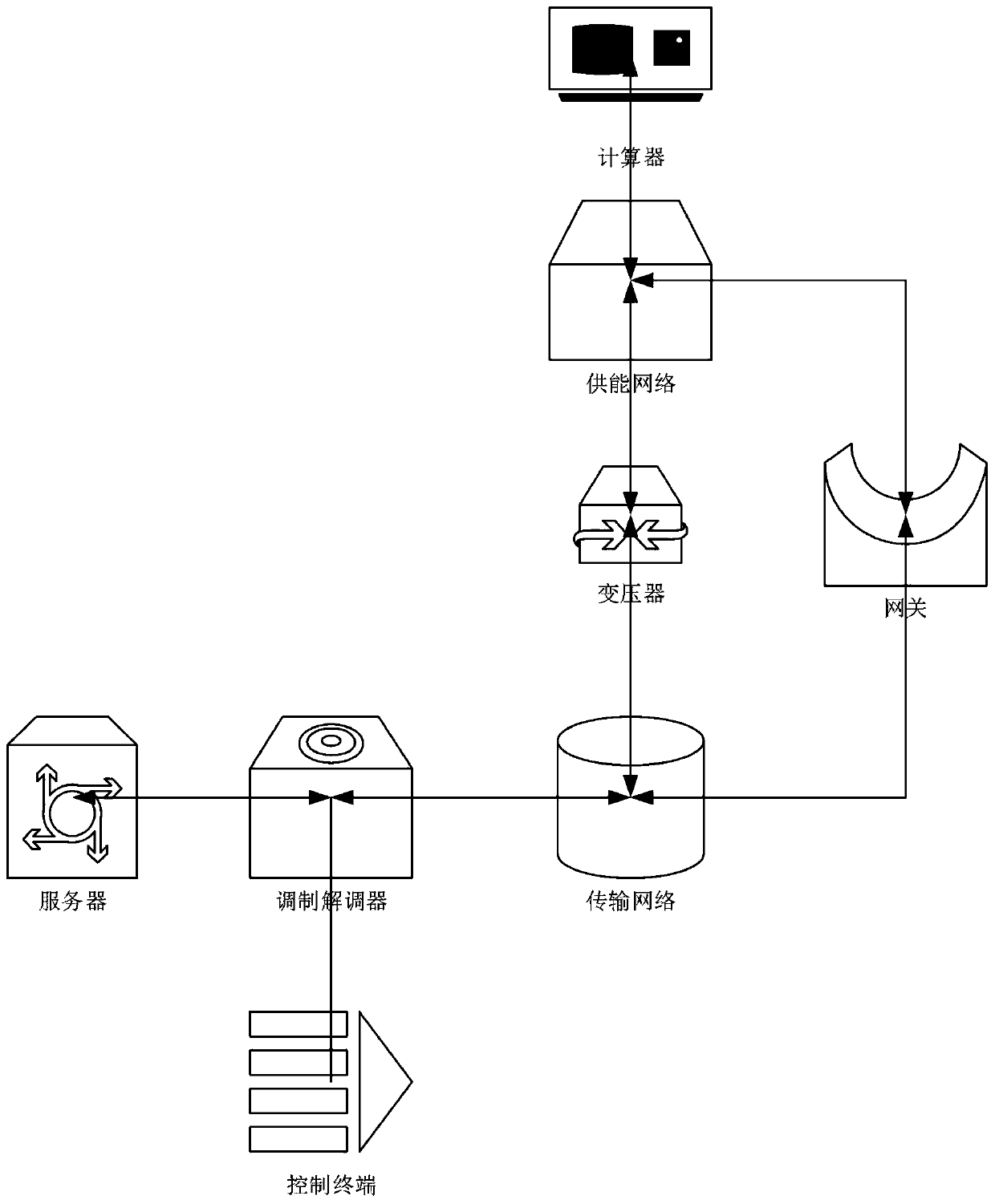

Tourism intelligent perception system based on Internet of Things

Status: Inactive | Publication: CN109785192A | Tags: Achieve access, Realization cycle, Data processing applications, Transmission, Active perception, Full life cycle

The invention provides an Internet of Things-based intelligent tourism perception system comprising: a perception equipment module consisting of tourism and traffic sensing equipment; an intelligent gateway platform comprising a data access subsystem, which communicates with the sensing equipment module and provides access, full-life-cycle management, and online management for heterogeneous sensing equipment; a data processing subsystem, connected to the data access subsystem, which standardizes and forwards the data transmitted by the sensing equipment module; and an intelligent service module, connected to the data processing subsystem, which provides various traffic and tourism intelligent services. Through IoT data fusion, the system is centered on tourists: relevant tourism information can be actively sensed through terminal devices, so travel plans can be arranged and adjusted in time.

Owner:桂林市鼎耀信息科技有限公司 (Guilin Dingyao Information Technology Co., Ltd.)

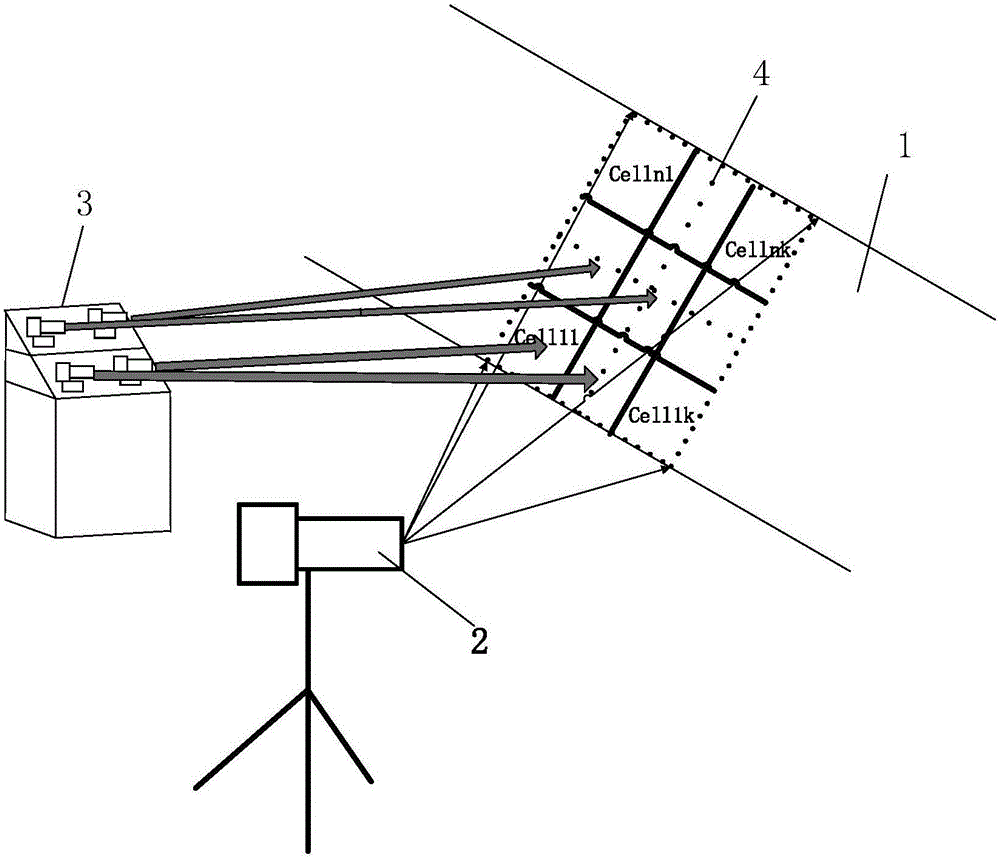

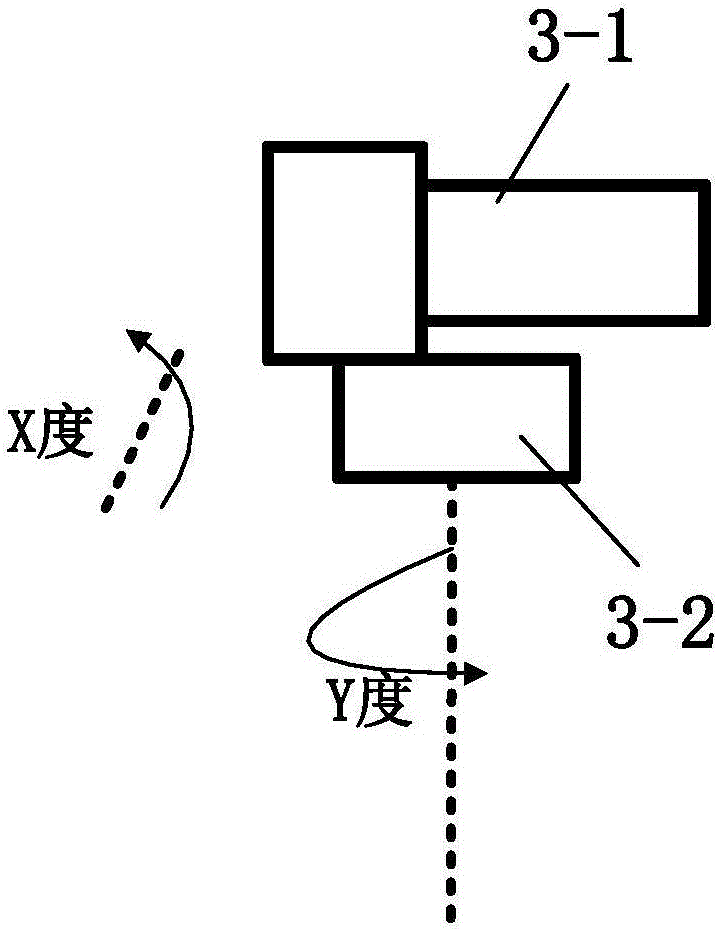

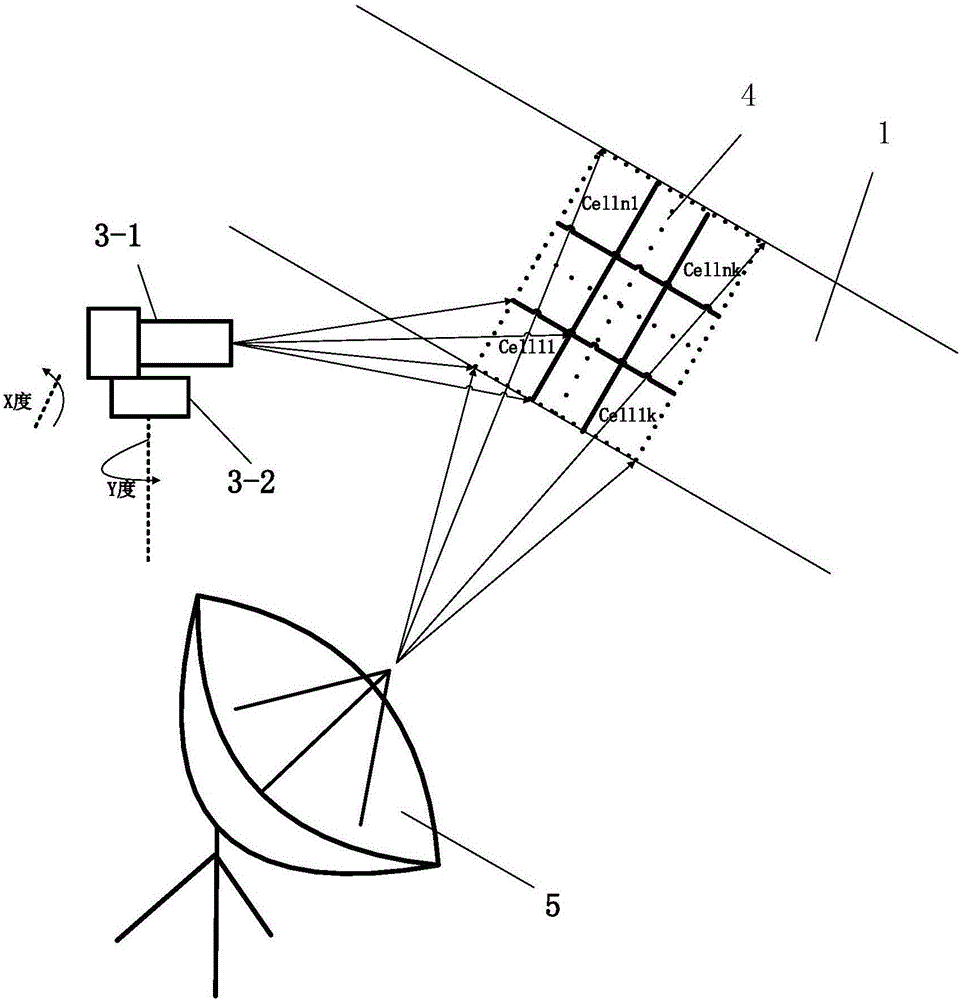

Cell-based active intelligent sensing device and method

Status: Inactive | Publication: CN106846284A | Tags: Easy to shoot, The process is convenient and fast, Image enhancement, Image analysis, Imaging processing, Active perception

The invention provides a cell-based active intelligent sensing device comprising a detector, an active camera unit, and an image processing unit. The detector detects whether a target object is present in the area to be monitored; the area the detector can cover is called the cell area and is divided into n*k cell units. The active camera unit includes a controller and several active cameras; each active camera is mounted on a rotating pan-tilt head that adjusts its angle, and each camera's capture range equals one cell unit. The controller adjusts the pan-tilt head of the corresponding active camera according to the detector's signal, and the image processing unit combines the pictures captured by the active cameras. The device can thus actively sense a target object: the detector's area is divided into sub-areas, and when a target is present, the calibrated active cameras capture clear images of those sub-areas; each active camera can conveniently be directed to capture one or more cell units.

Owner:WUHAN UNIV OF TECH
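
The abstract does not say how the controller computes the rotation for a detected target. Assuming a flat rectangular cell area, a camera at a known 3D position, and pan measured from the +x axis, the Python sketch below maps a target position to its cell in the n*k grid and computes the pan and tilt angles that aim a camera at that cell's center. All names and geometry conventions are illustrative.

```python
import math

def cell_of(x, y, width, height, n_rows, k_cols):
    """Map a target position in the cell area to its (row, col) cell unit."""
    if not (0 <= x <= width and 0 <= y <= height):
        raise ValueError("target lies outside the detector's cell area")
    row = min(int(y // (height / n_rows)), n_rows - 1)  # clamp the far edge
    col = min(int(x // (width / k_cols)), k_cols - 1)
    return row, col

def cell_center(row, col, width, height, n_rows, k_cols):
    """Ground coordinates of a cell unit's center."""
    return ((col + 0.5) * width / k_cols, (row + 0.5) * height / n_rows)

def pan_tilt(camera_xyz, point_xy):
    """Pan/tilt angles in degrees that aim a camera at a ground point.
    Pan is measured from the +x axis; negative tilt means looking down."""
    cx, cy, cz = camera_xyz
    dx, dy = point_xy[0] - cx, point_xy[1] - cy
    pan = math.degrees(math.atan2(dy, dx))
    tilt = -math.degrees(math.atan2(cz, math.hypot(dx, dy)))
    return pan, tilt

# A 10 m x 10 m area split into 2 x 2 cell units; target detected at (7, 2).
cell = cell_of(7.0, 2.0, 10.0, 10.0, 2, 2)
print(cell, pan_tilt((0.0, 0.0, 3.0), cell_center(*cell, 10.0, 10.0, 2, 2)))
```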

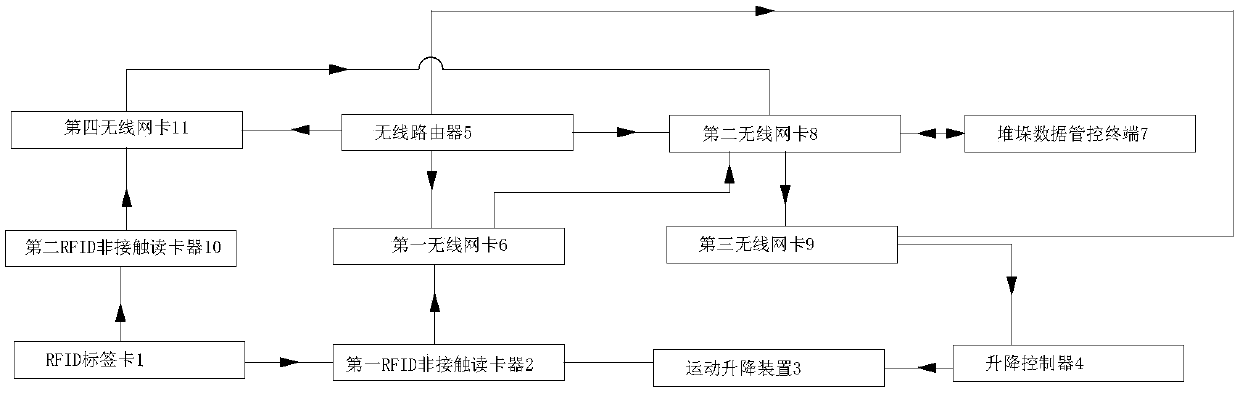

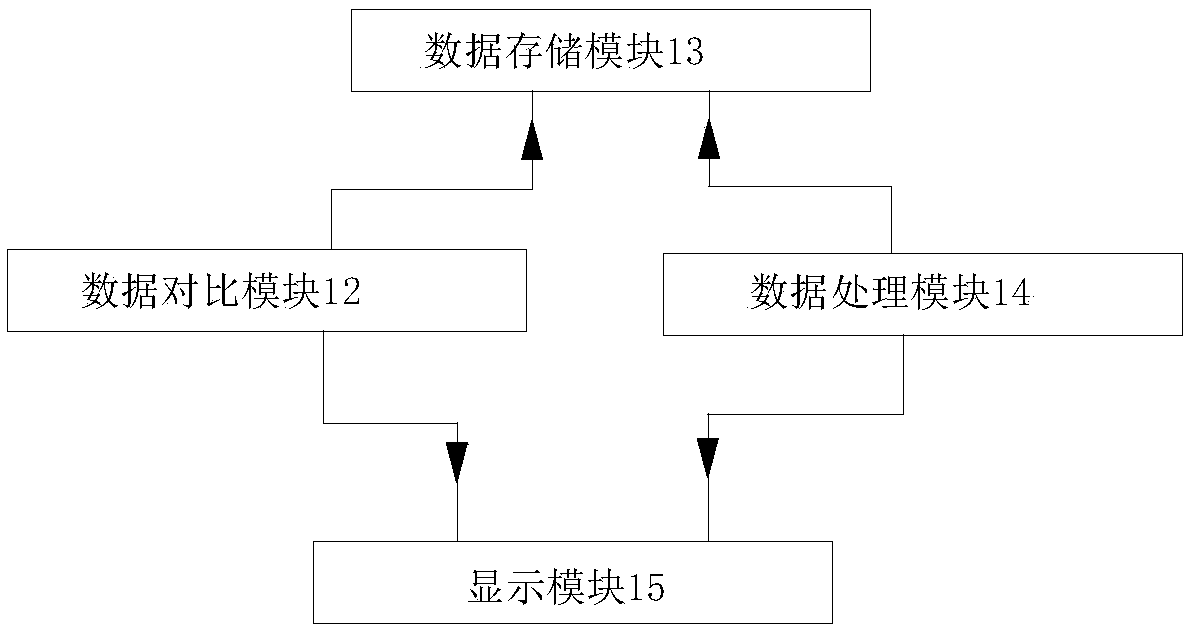

A T-shaped active sensing system and method applied to long material stacking batch number recognition

Status: Inactive | Publication: CN109598314A | Tags: Precise management, Statistically accurate, Co-operative working arrangements, Logistics, Active perception, Card reader

The invention relates to a T-shaped active perception system and method for recognizing the batch numbers of stacked long products, in the technical field of storage and stacking information management for long products on the steel rolling lines of iron and steel enterprises. The system comprises an RFID tag card, a first RFID contactless card reader, a motion lifting device, a lifting controller, a wireless router, a first wireless network card, a stacking data management and control terminal, a second wireless network card, a third wireless network card, a second RFID contactless card reader, a fourth wireless network card, and so on. When long products are hoisted into and out of the warehouse by an overhead travelling crane, the batch information of each bundle is updated in real time. This enables accurate management of the stacking position of each bundle of long products and accurate statistics on warehouse receipts, dispatches, and inventory. It eliminates the errors that manual statistics suffered under constantly changing production-site conditions, reduces labor costs, and raises the level of automated and intelligent management of the long-product finished goods warehouse.

Owner:YUNNAN KUNGANG ELECTRONICS INFORMATION TECH CO LTD

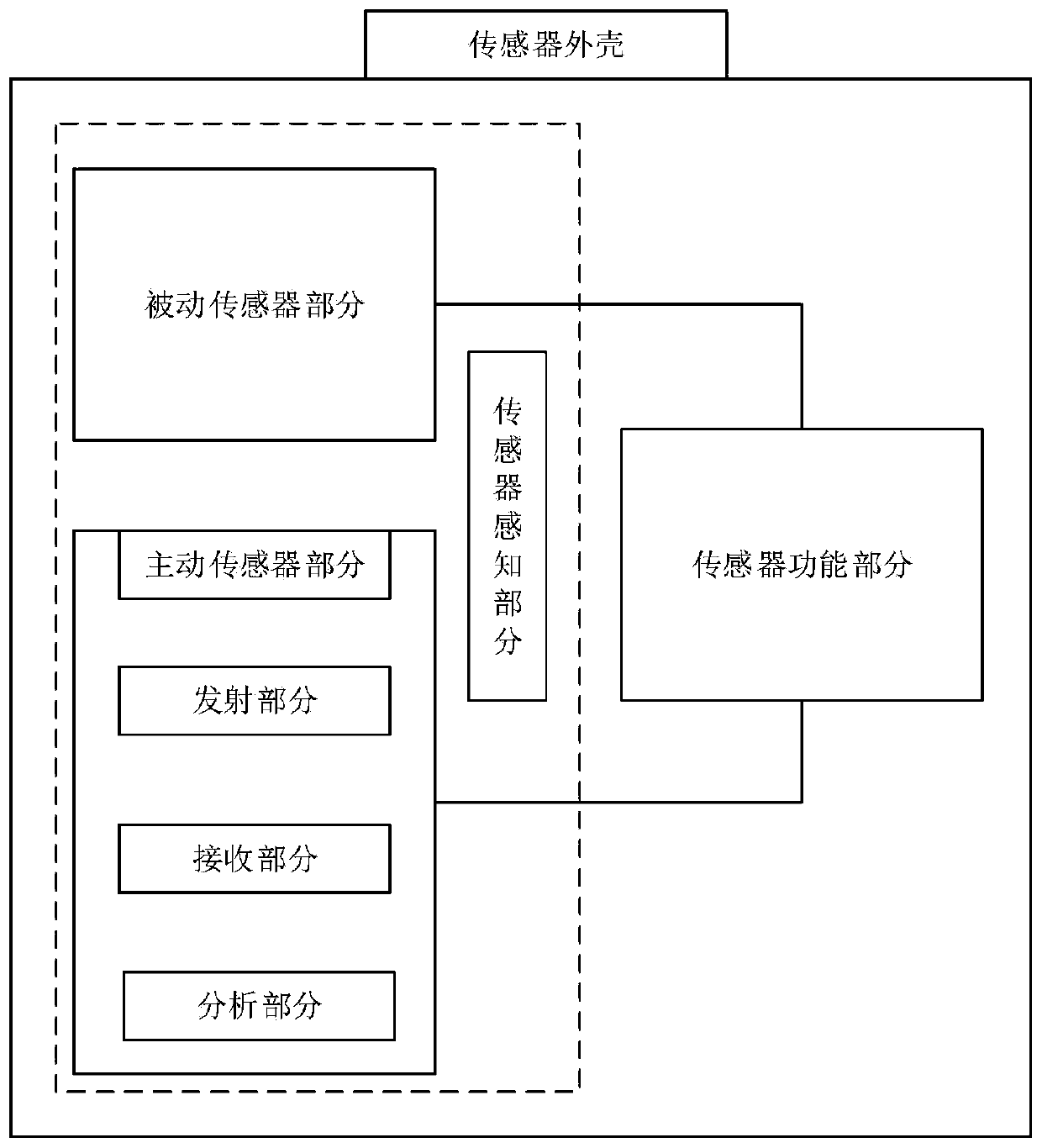

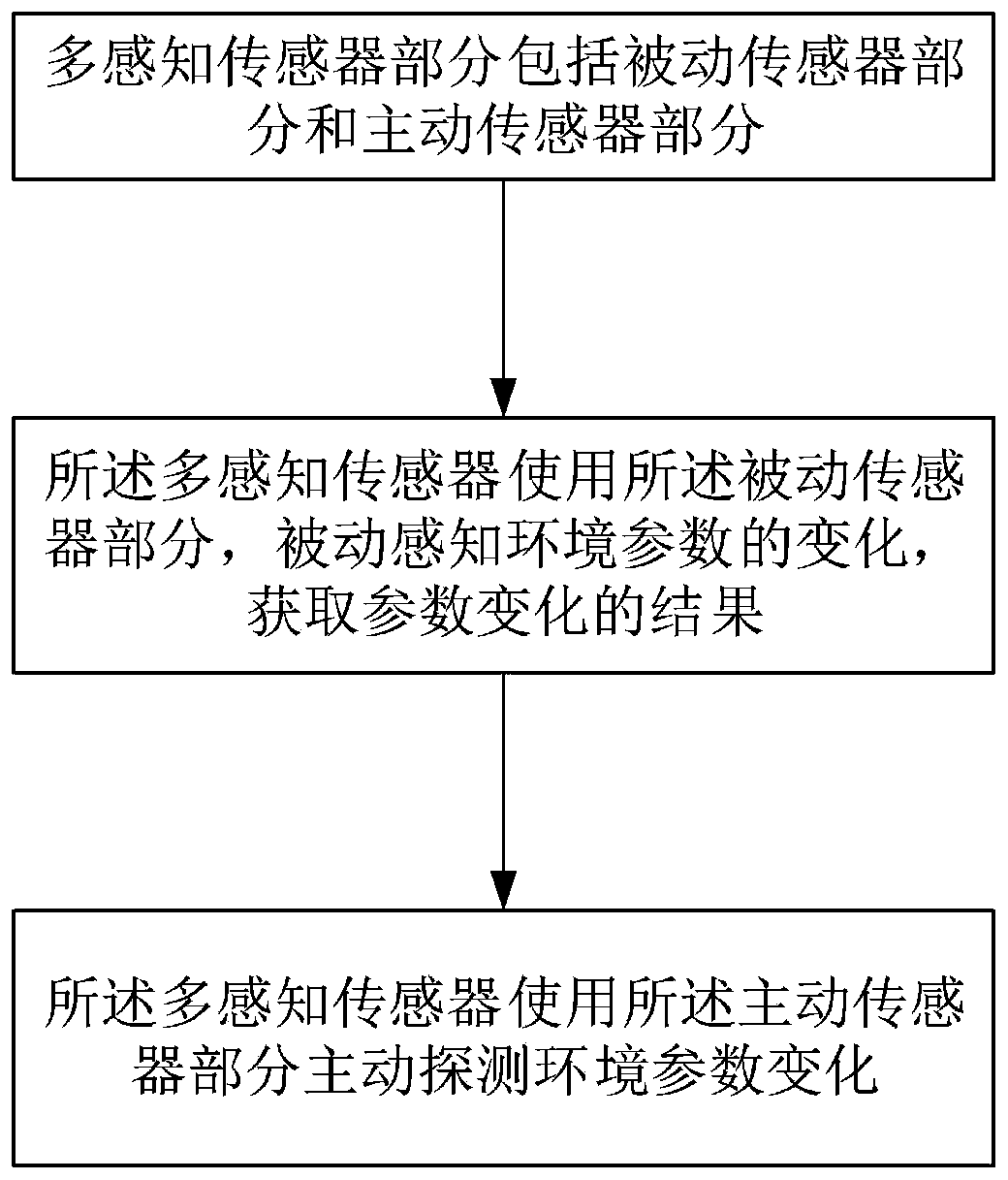

Multi-perception sensor, sensor network and perception method applied to Internet of Things

Status: Inactive | Publication: CN111551208A | Tags: Extend the range of detection and perception, Improve perceived efficiency, Electric signal transmission systems, Measurement devices, Active perception, The Internet

The invention relates to the technical field of sensors, in particular to a multi-perception sensor for the Internet of Things, a sensor network, and a perception method. The sensor comprises a sensor shell, a multi-perception sensor part, and a sensor energy supply part; the multi-perception sensor part and the energy supply part are arranged inside the shell, and the energy supply part powers the multi-perception sensor part. The multi-perception sensor part comprises a passive sensor part and an active sensor part; the passive sensor part passively senses changes in environmental parameters and obtains the result of those changes. The method offers high sensing efficiency, high sensing accuracy, and high safety, and provides an active sensing function.

Owner:魏磊 (Wei Lei)

Retail trade system of geographical position-based AR (Augmented Reality) platform

Status: Pending | Publication: CN108512887A | Tags: Improve sales conversion rate, Buying/selling/leasing transactions, Transmission, Active perception, Global Positioning System

The invention relates to a retail trade system for a geolocation-based AR (Augmented Reality) platform. The system comprises a customer mobile phone terminal (1) and an LBS (Location Based Service) positioning server (2) wirelessly connected to it. A GPS (Global Positioning System) module inside the customer mobile phone terminal (1) sends position information to the LBS positioning server (2), which pushes AR promotional information to the terminal's app according to that position. At the same time, the app searches for the dedicated SSID (Service Set Identifier) of a portable Wi-Fi probe in each nearby physical store; once a match is found, the customer is deemed to have entered that store, which serves as the basis for measuring the AR campaign's effectiveness, i.e., as proof that customers were attracted by the AR promotional information. The approach is simple and easy to operate and lets physical stores promote and communicate interactively with nearby customers directly, without intermediaries; customers, in turn, learn about in-store promotions on the spot, which encourages them to enter the stores and raises the sales conversion rate.

Owner:时趣信息科技(上海)有限公司 (Shiqu Information Technology (Shanghai) Co., Ltd.)

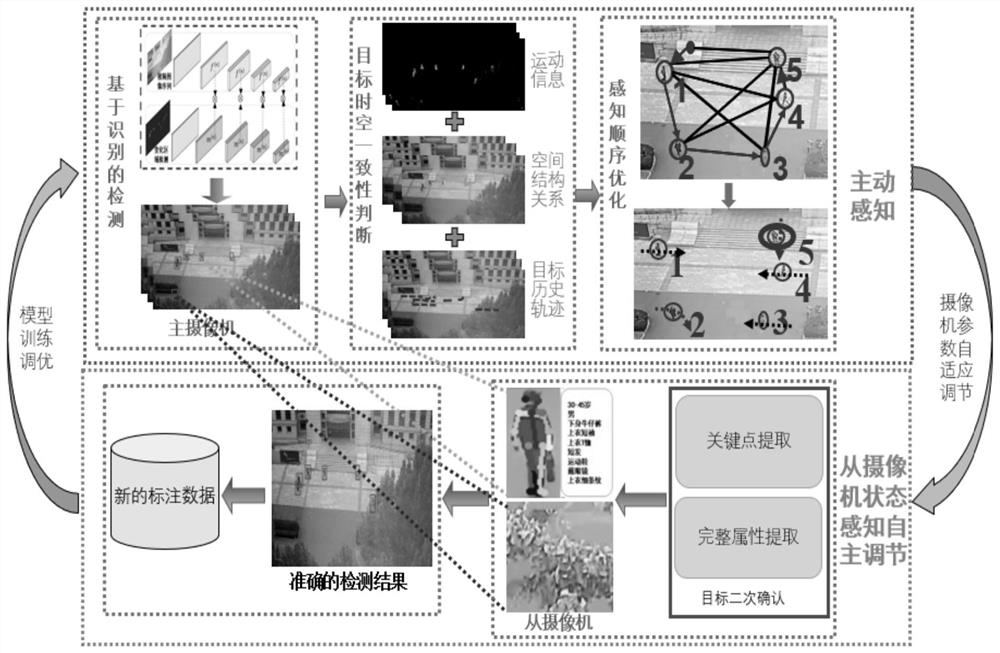

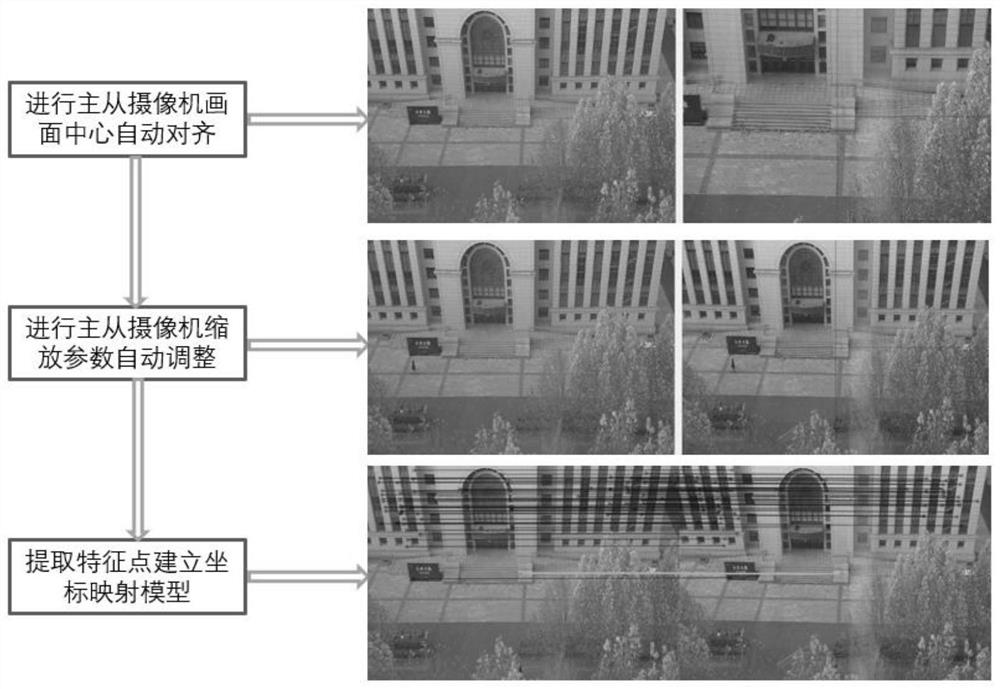

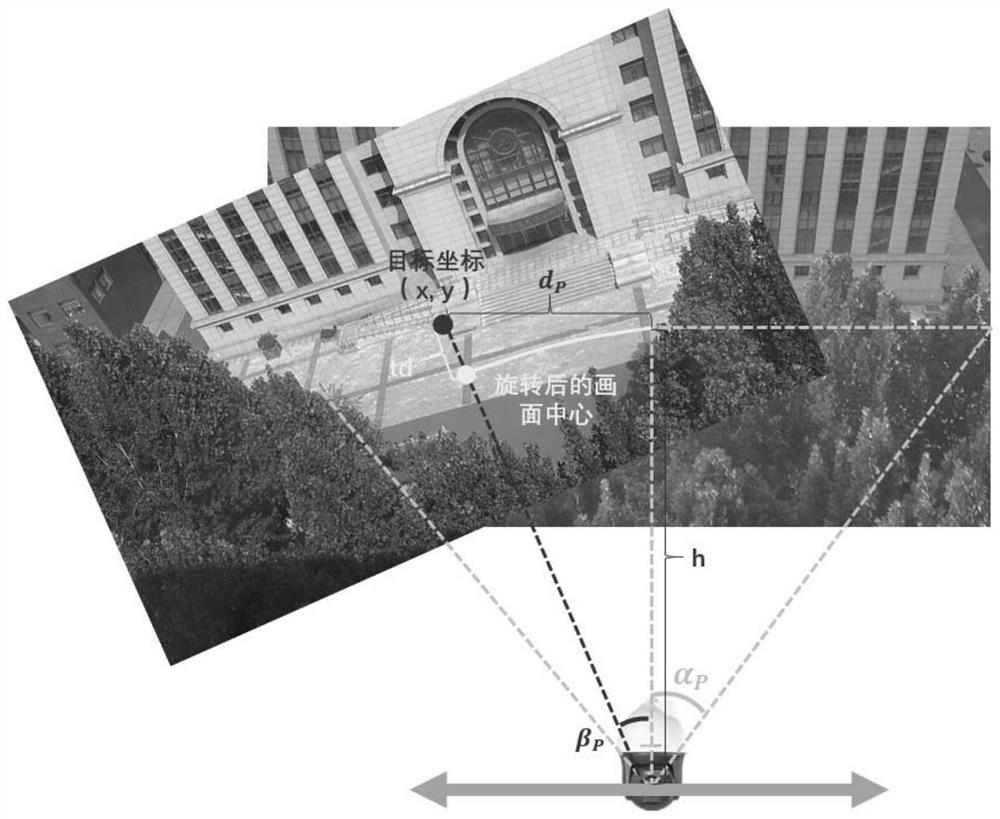

Camera active sensing method and system

The invention provides an active sensing method and system for video surveillance. First, it provides an efficient cooperative control method for master and slave cameras: a heuristic algorithm automatically adjusts camera parameters to establish a master-slave camera coordinate mapping model, and a geometric model between the slave camera and the scene is constructed so that the slave camera can quickly locate a target. Second, it provides a pedestrian attribute recognition method based on target state perception: the pedestrian's state is judged from human-body keypoints, and this judgment determines whether a pedestrian attribute recognition result is valid; on this basis, the attribute recognition result is updated over a multi-frame image sequence to obtain a complete set of pedestrian attributes. Third, it provides an automatic data labeling method based on master-slave collaboration: the slave camera confirms targets detected by the master camera, the master camera periodically generates a background image, and the confirmed targets are fused with the generated background image to form new annotation data, which is used to fine-tune the master camera's target detection model and improve its adaptability to new scenes.

Owner:BEIHANG UNIV
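
The validity check and multi-frame update in the second part can be illustrated as follows. In this Python sketch, the choice of keypoints that must be visible, the 0.5 confidence threshold, and majority voting as the update rule are all assumptions; frames in which the torso is occluded are simply excluded from the vote.

```python
from collections import Counter, defaultdict

TORSO = ("left_shoulder", "right_shoulder", "left_hip", "right_hip")  # assumed required keypoints

def frame_is_valid(keypoints, required=TORSO, min_conf=0.5):
    """Trust a frame's attribute result only if the required keypoints are visible."""
    return all(keypoints.get(name, 0.0) >= min_conf for name in required)

def aggregate_attributes(frames):
    """frames: [(keypoint confidences, {attribute: value}), ...] for one tracked pedestrian.
    Returns the majority value of each attribute over the valid frames only."""
    votes = defaultdict(Counter)
    for keypoints, attributes in frames:
        if frame_is_valid(keypoints):
            for attr, value in attributes.items():
                votes[attr][value] += 1
    return {attr: counts.most_common(1)[0][0] for attr, counts in votes.items()}

full = {"left_shoulder": 0.9, "right_shoulder": 0.8, "left_hip": 0.7, "right_hip": 0.9}
occluded = {"left_shoulder": 0.9, "right_shoulder": 0.2}
frames = [
    (full, {"upper_color": "red", "backpack": True}),
    (occluded, {"upper_color": "blue", "backpack": False}),
    (occluded, {"upper_color": "blue", "backpack": False}),
    (full, {"upper_color": "red", "backpack": True}),
    (full, {"upper_color": "black", "backpack": True}),
]
print(aggregate_attributes(frames))
```

Without the validity filter, the two occluded frames would tie "blue" with "red" for the upper-body color; filtering them out lets the reliable frames decide.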

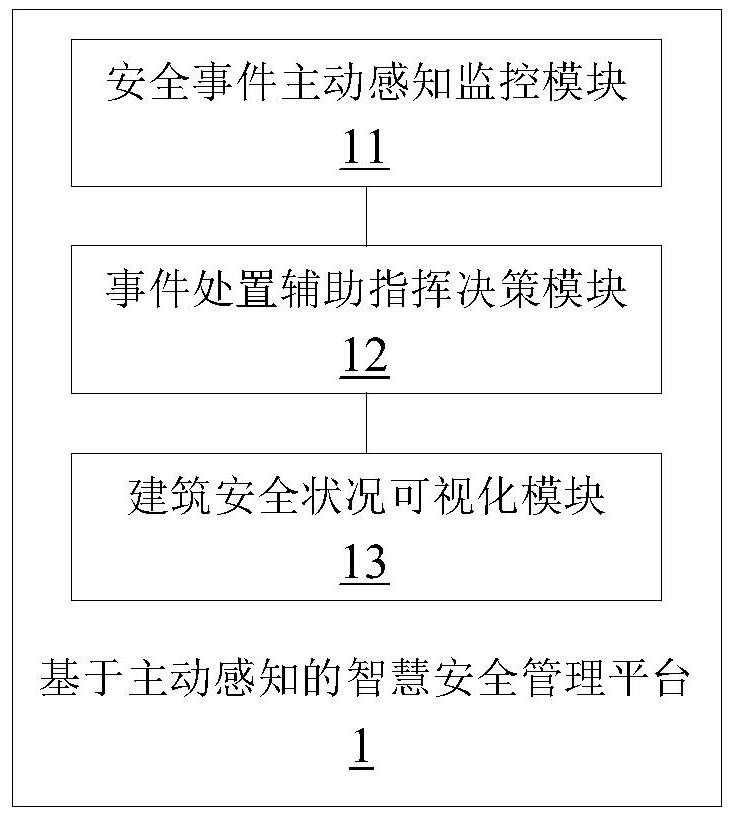

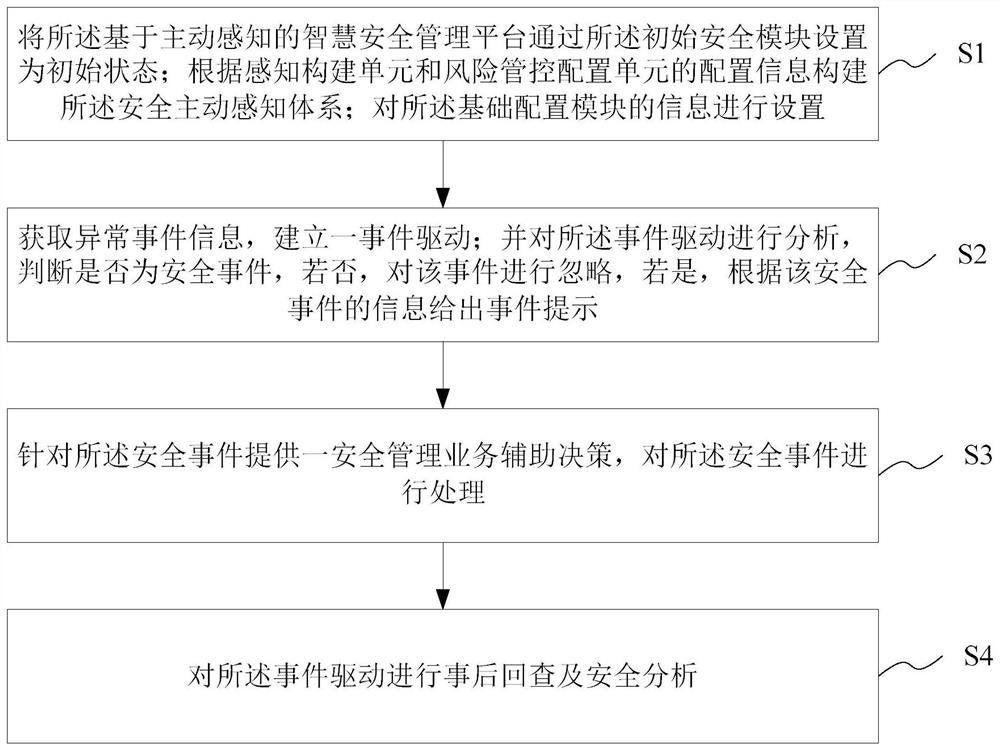

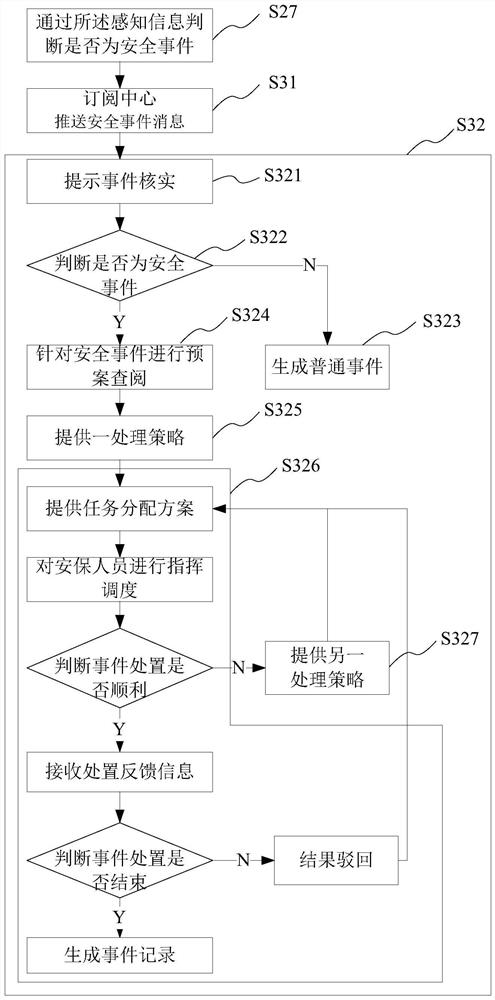

Intelligent safety management platform and method based on active perception, medium and equipment

Status: Inactive | Publication: CN111754054A | Tags: Reduce labor cost consumption, Scientific and efficient management, Resources, Active perception, Statistical analysis

The invention provides an intelligent safety management platform and method based on active perception, together with a storage medium and a device. The platform comprises: a security-event active perception monitoring module, which obtains abnormal event information, creates an event driver, analyzes it, and judges whether it represents a security event, ignoring it if not and issuing an event prompt based on the security event's information if so; an event-handling auxiliary command and decision module, which provides decision support for the security management service corresponding to the event and handles it; and a building-safety visualization module, which performs after-event review and statistical analysis of event drivers. By actively sensing security events, the platform addresses the need for different security strategies in different scenarios, helping security personnel manage buildings more scientifically and efficiently and providing a more user-centered security management service.

Owner:上海云思智慧信息技术有限公司 (Shanghai Yunsi Smart Information Technology Co., Ltd.)

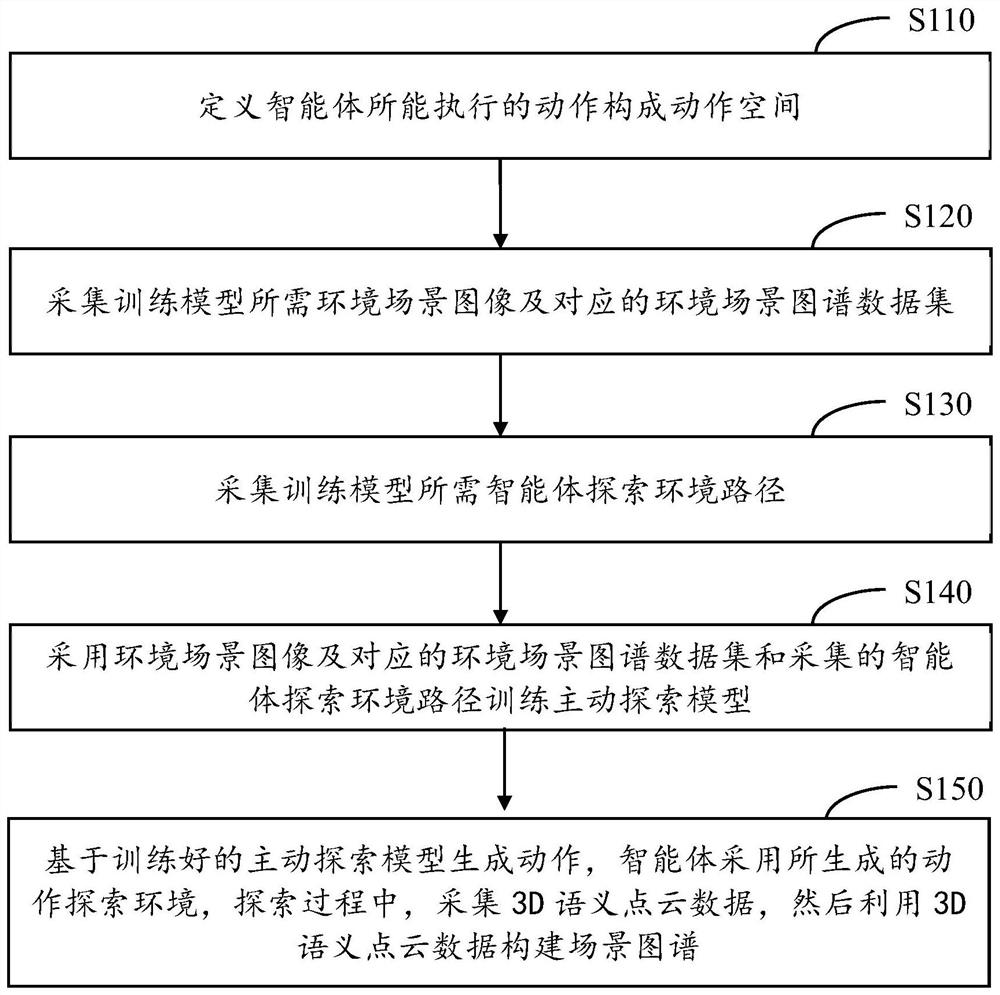

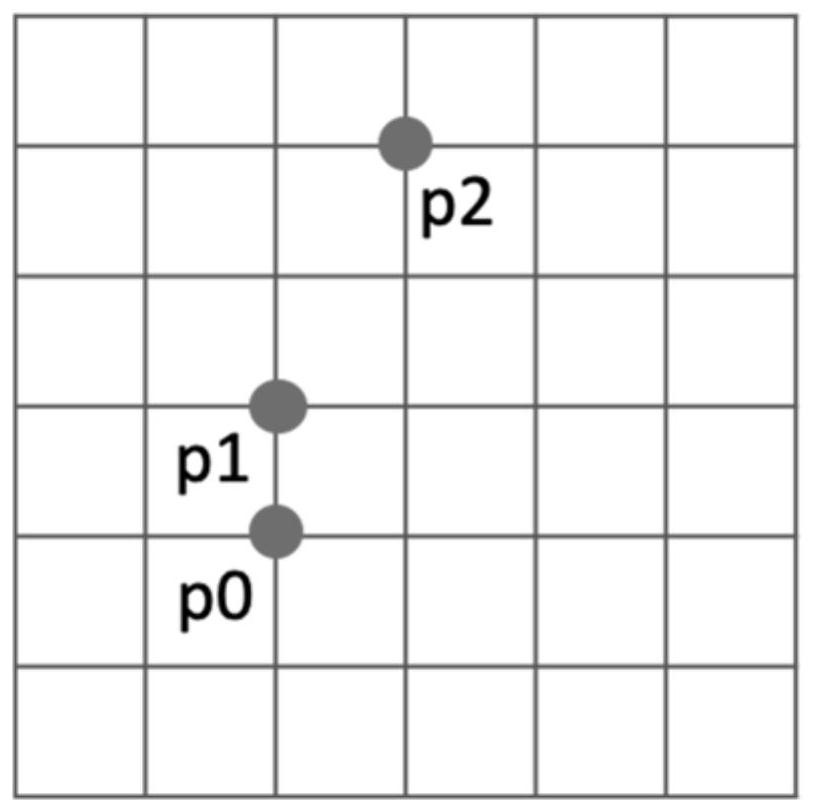

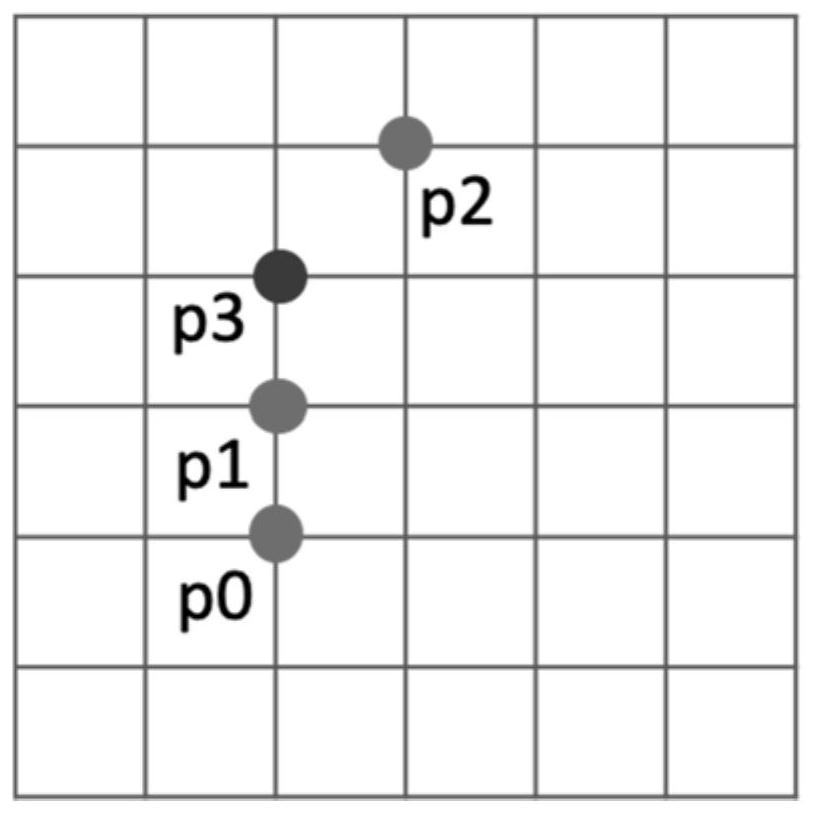

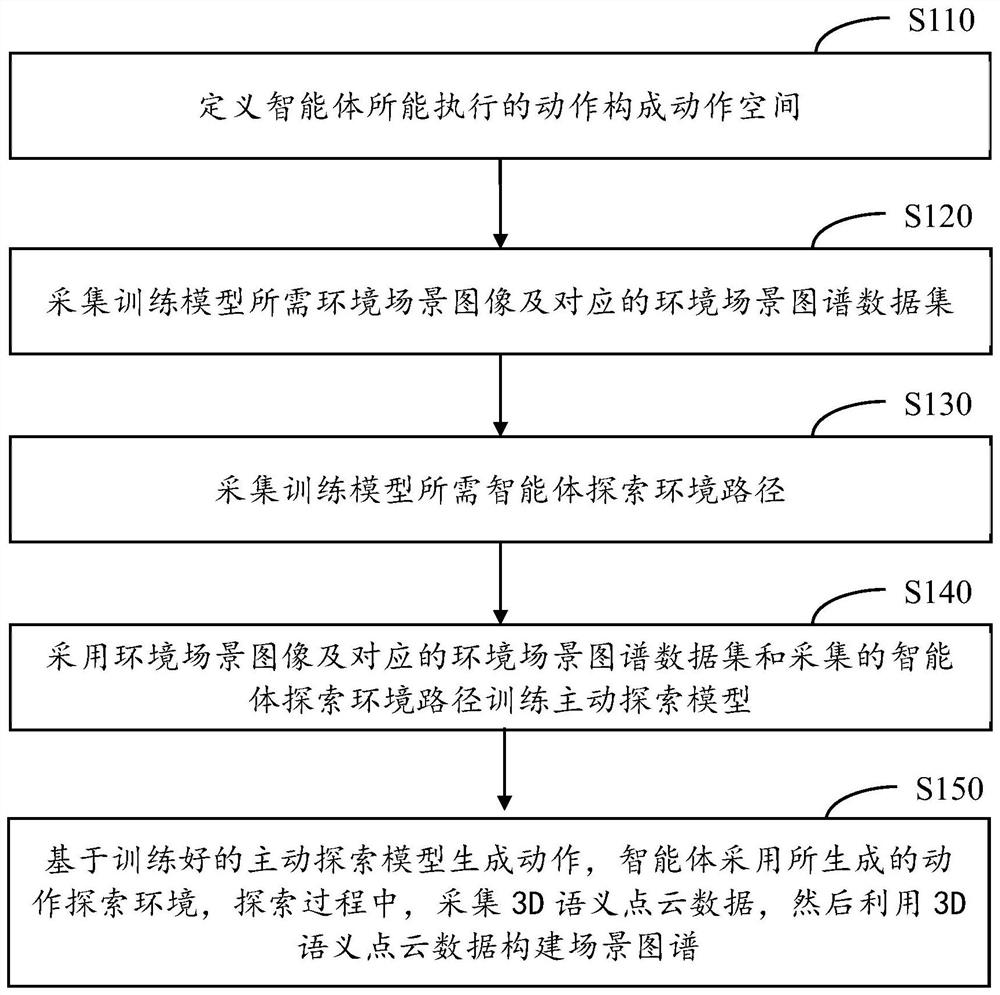

Method and device for actively constructing environment scene map by intelligent agent and exploration method

Status: Active | Publication: CN113111192A | Tags: Overcome limitations, Neural architectures, Neural learning methods, Data set, Active perception

The invention provides a method by which an intelligent agent actively constructs an environment scene map from visual information, an environment exploration method, and an intelligent device. The method comprises: collecting the environment scene images and the corresponding environment scene map dataset needed to train the model; collecting the agent exploration paths needed to train the model; training an active exploration model on the environment scene images, the corresponding map dataset, and the collected exploration paths; and generating actions with the trained active exploration model, with which the agent explores the environment, obtaining 3D semantic point cloud data during exploration and using it to construct the environment scene map. The method overcomes the limitation that traditional computer vision tasks can only perceive the environment passively: by exploiting the agent's ability to explore actively and combining perception with motion, it achieves active perception, active exploration, and active construction of environment scene maps, and it can be applied to a variety of vision tasks.

Owner:TSINGHUA UNIV
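
The final step of this method, building a scene map from the 3D semantic point clouds gathered while exploring, can be illustrated with a simple semantic voxel map. This sketch assumes points arrive already labeled as `(x, y, z, label)` tuples and fuses them by majority vote per voxel; the patent does not state its map representation, the learned active exploration model is not reproduced, and `SemanticVoxelMap` is a hypothetical name.

```python
import math
from collections import Counter, defaultdict
from typing import DefaultDict, Iterable, Optional, Tuple

Point = Tuple[float, float, float, str]  # (x, y, z, semantic label)
Voxel = Tuple[int, int, int]


class SemanticVoxelMap:
    """Fuses labeled 3D points into a voxel grid by per-voxel majority vote."""

    def __init__(self, voxel_size: float = 0.1) -> None:
        self.voxel_size = voxel_size
        self._votes: DefaultDict[Voxel, Counter] = defaultdict(Counter)

    def _voxel_of(self, x: float, y: float, z: float) -> Voxel:
        s = self.voxel_size
        return (math.floor(x / s), math.floor(y / s), math.floor(z / s))

    def integrate(self, points: Iterable[Point]) -> None:
        """Add one observation, e.g. a point cloud captured after an exploration step."""
        for x, y, z, label in points:
            self._votes[self._voxel_of(x, y, z)][label] += 1

    def label_at(self, x: float, y: float, z: float) -> Optional[str]:
        """Most frequently observed label in the voxel containing (x, y, z)."""
        votes = self._votes.get(self._voxel_of(x, y, z))  # .get() does not create entries
        return votes.most_common(1)[0][0] if votes else None

    def __len__(self) -> int:
        return len(self._votes)


m = SemanticVoxelMap(voxel_size=0.5)
m.integrate([(0.1, 0.1, 0.1, "chair"), (0.2, 0.3, 0.1, "chair"), (0.4, 0.4, 0.4, "table")])
m.integrate([(1.2, 0.0, 0.0, "wall")])
print(len(m), m.label_at(0.3, 0.3, 0.3), m.label_at(1.1, 0.2, 0.3))  # 2 chair wall
```

Majority voting lets later observations from new viewpoints correct earlier mislabeled points, which is one reason a voxel representation is a common choice for incremental semantic mapping.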

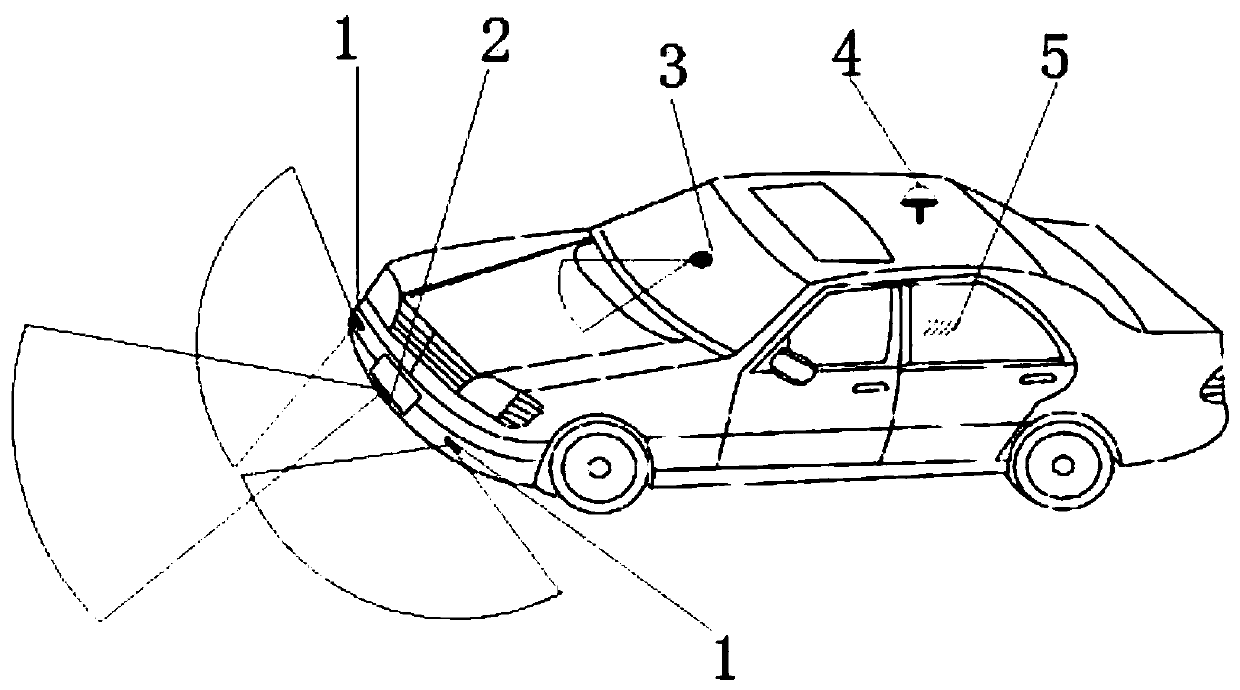

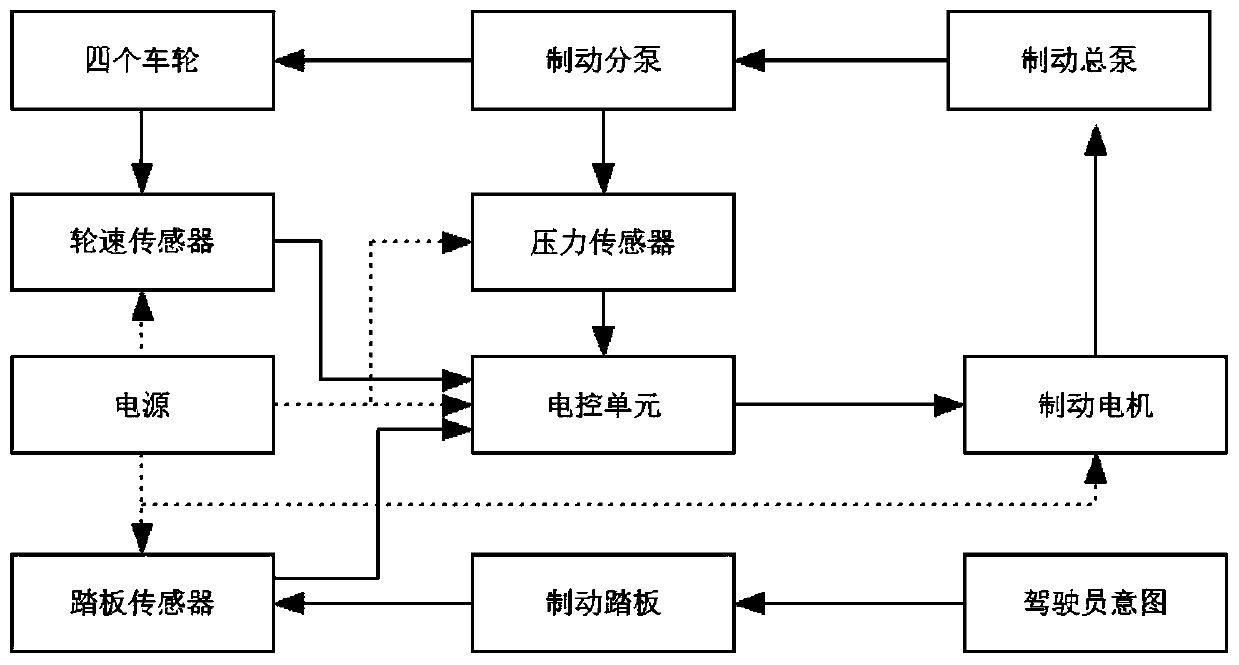

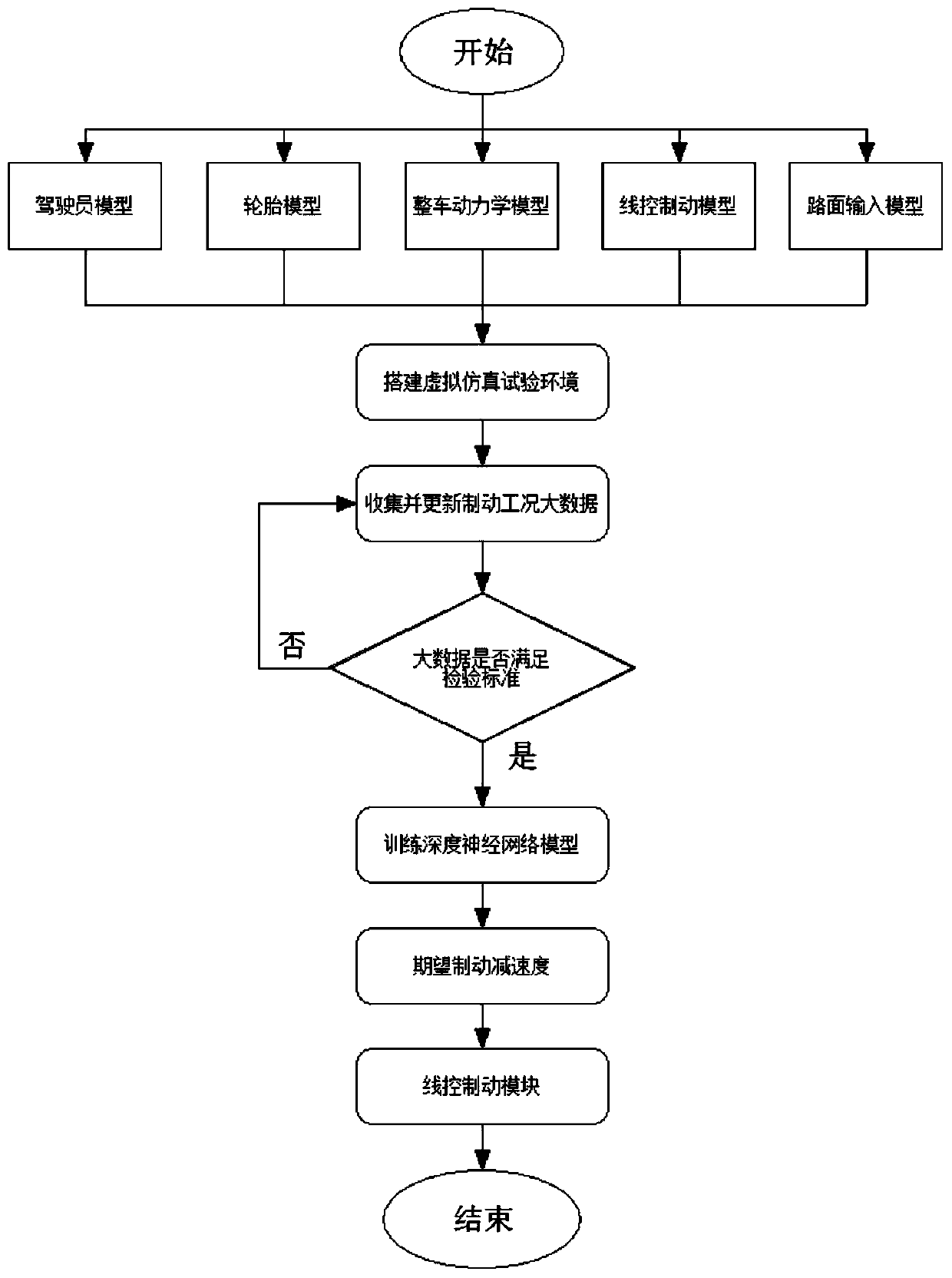

Man-machine coupled longitudinal collision avoidance control method

The invention relates to a man-machine coupled longitudinal collision avoidance control method. The method involves a brake-by-wire module, an active sensing module and an anthropomorphic control module; the anthropomorphic control module comprises a driver model and a deep neural network anthropomorphic decision-making controller. The active sensing module obtains real-time traffic conditions and feeds them to the driver model, which outputs a desired braking deceleration. Based on baseline experimental data from drivers and on the active sensing module, the deep neural network anthropomorphic decision-making controller uses generative adversarial network technology to generate a large amount of experimental data; the deep neural network is trained on this data to produce a braking collision avoidance controller, whose output is sent to the brake-by-wire module to carry out braking collision avoidance. The method effectively addresses the narrow range of application and harsh control behavior of existing vehicle collision avoidance controllers, and improves the adaptability of the braking collision avoidance system and the comfort of the driver and passengers.

Owner:SOUTHEAST UNIV
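
The abstract says the driver model maps real-time traffic conditions to a desired braking deceleration but does not give the model. As a stand-in only, a constant-deceleration kinematic rule shows what such a mapping computes: to cancel a closing speed Δv within the available gap d minus a safety margin d0, the required deceleration is a = Δv² / (2(d - d0)). The margin, the deceleration cap, the constant-speed lead vehicle and the function name are all assumptions; the patent's learned, GAN-augmented controller is not reproduced here.

```python
def desired_deceleration(
    gap_m: float,
    ego_speed_mps: float,
    lead_speed_mps: float,
    margin_m: float = 2.0,        # assumed standstill safety margin
    max_decel_mps2: float = 8.0,  # assumed physical braking limit
) -> float:
    """Kinematic stand-in for a driver model: braking needed to cancel the closing speed.

    Assumes the lead vehicle holds a constant speed.
    """
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0.0:
        return 0.0  # not closing in: no braking required
    usable_gap = gap_m - margin_m
    if usable_gap <= 0.0:
        return max_decel_mps2  # already inside the margin: brake as hard as allowed
    # v^2 = 2 a s  =>  a = v^2 / (2 s), capped at the braking limit
    return min(closing_speed ** 2 / (2.0 * usable_gap), max_decel_mps2)


print(desired_deceleration(gap_m=27.0, ego_speed_mps=20.0, lead_speed_mps=10.0))  # 2.0
```

A learned anthropomorphic controller would replace this fixed rule with a mapping fitted to how real drivers brake, which is what the abstract credits for improved comfort.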

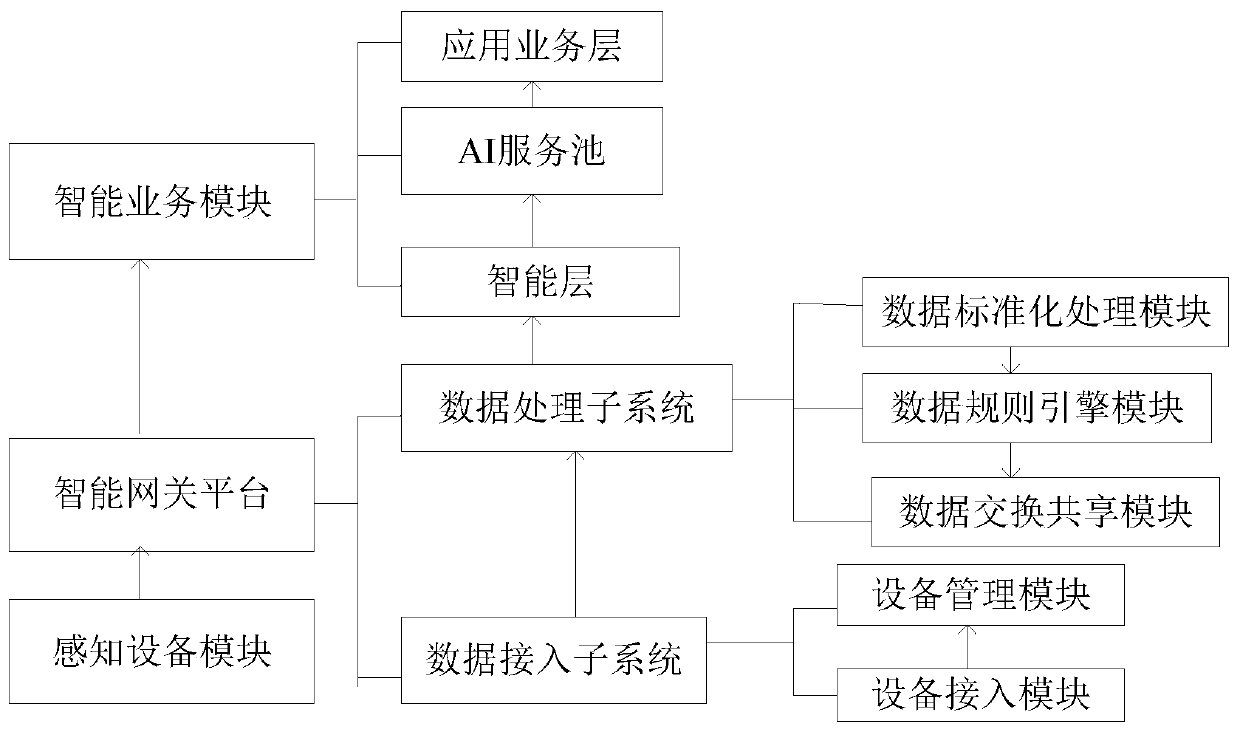

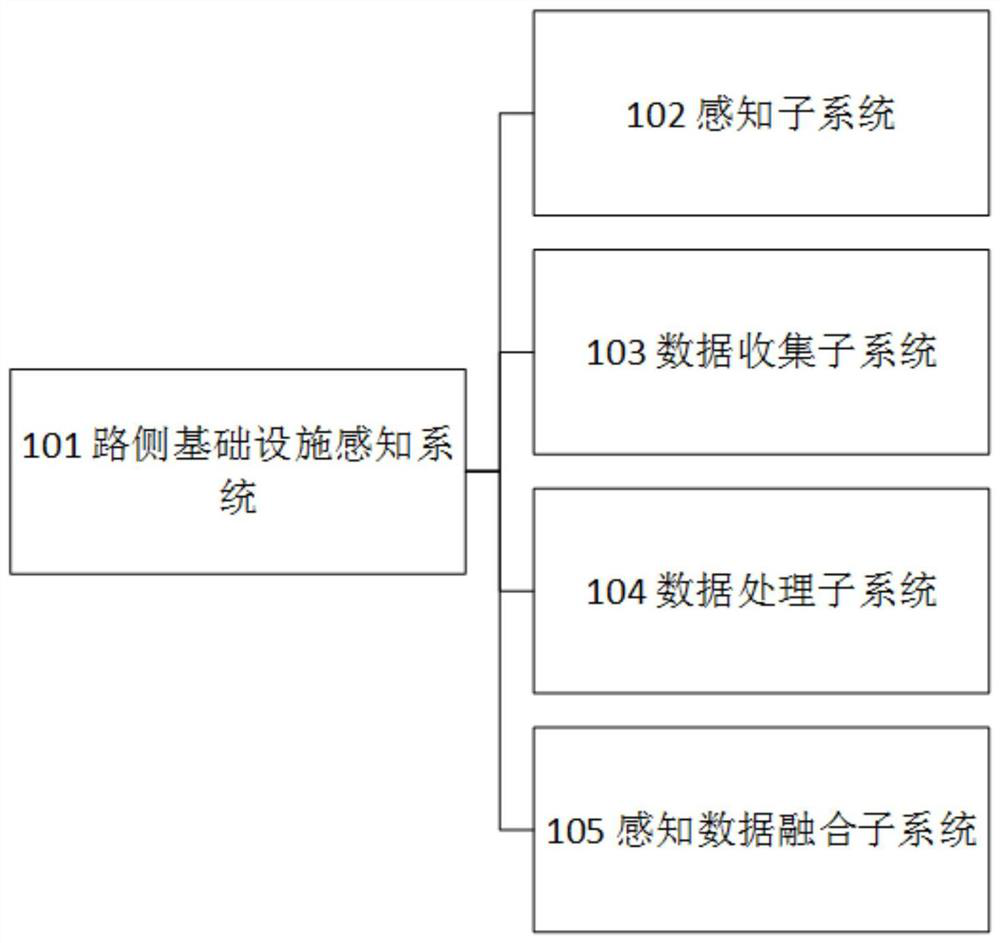

An active perception system for vehicle-road cooperative automatic driving

Active | CN111216731B | Reduce complexity | Fast perception | Road vehicles traffic control | Sensing data | Active perception

The invention discloses an active perception system for vehicle-road cooperative automatic driving, which includes a roadside infrastructure perception system that provides perception data to an automated networked traffic system. The roadside infrastructure perception system includes a perception subsystem, a data collection subsystem, a data processing subsystem and a data fusion subsystem, connected in sequence: the perception subsystem provides different sensing functions at different perception points along the road; the data collection subsystem collects the sensing data; the data processing subsystem processes it; and the data fusion subsystem fuses the processed sensing data and sends it to the automated networked traffic system over a wireless or wired network. The invention effectively reduces the complexity of the perception system and achieves fast, accurate and comprehensive perception of vehicle and environment information.

Owner:上海丰豹商务咨询有限公司 (Shanghai Fengbao Business Consulting Co., Ltd.)
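
The four subsystems connected in sequence can be sketched as a chain of functions: collection gathers detections from each roadside perception point, processing filters them, fusion merges reports of the same object, and the result is handed to a transport. All names, the region-of-interest filter and the position-averaging fusion are illustrative assumptions; the abstract does not say how each stage works, and association of detections to object IDs is taken as given.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List, Tuple


@dataclass
class Detection:
    sensor_id: str
    object_id: str  # assumed: detections already associated to objects
    x: float        # metres along the road
    y: float        # metres across the road


def collect(perception_points: Dict[str, List[Detection]]) -> List[Detection]:
    """Data collection: gather detections from every roadside perception point."""
    return [d for dets in perception_points.values() for d in dets]


def process(dets: List[Detection], x_range=(0.0, 200.0), y_range=(-20.0, 20.0)) -> List[Detection]:
    """Data processing: keep only detections inside an assumed region of interest."""
    return [d for d in dets
            if x_range[0] <= d.x <= x_range[1] and y_range[0] <= d.y <= y_range[1]]


def fuse(dets: List[Detection]) -> Dict[str, Tuple[float, float]]:
    """Data fusion: average the positions reported for each object by different sensors."""
    grouped: Dict[str, List[Detection]] = {}
    for d in dets:
        grouped.setdefault(d.object_id, []).append(d)
    return {oid: (mean(d.x for d in ds), mean(d.y for d in ds)) for oid, ds in grouped.items()}


def send(fused: Dict[str, Tuple[float, float]], transport: Callable[[dict], None]) -> None:
    """Hand the fused result to a wired or wireless link (any callable here)."""
    transport(fused)


points = {
    "camera-1": [Detection("camera-1", "car-7", 50.0, 1.0)],
    "radar-1": [Detection("radar-1", "car-7", 52.0, 3.0),
                Detection("radar-1", "truck-2", 250.0, 0.0)],  # outside the ROI
}
outbox: list = []
send(fuse(process(collect(points))), outbox.append)
print(outbox)  # [{'car-7': (51.0, 2.0)}]
```

Keeping each stage a separate function mirrors the sequential subsystem layout, so any stage (for example, the simple averaging in `fuse`) can be swapped for a more capable method without touching the others.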

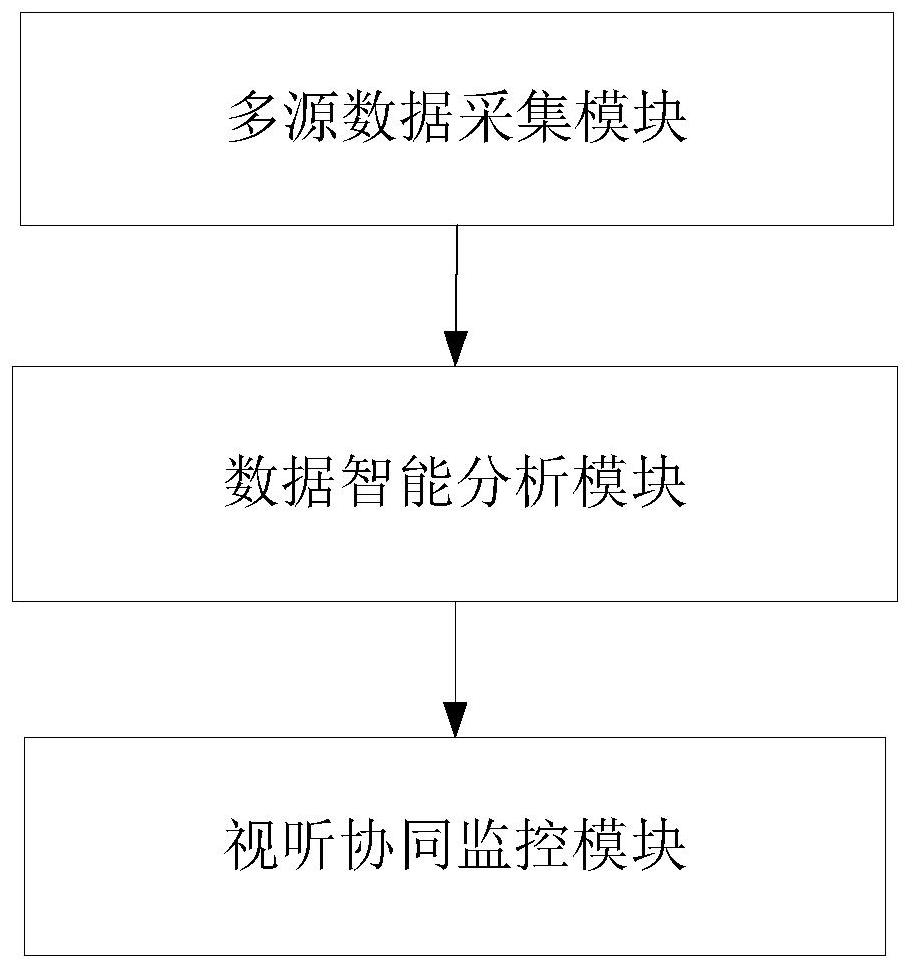

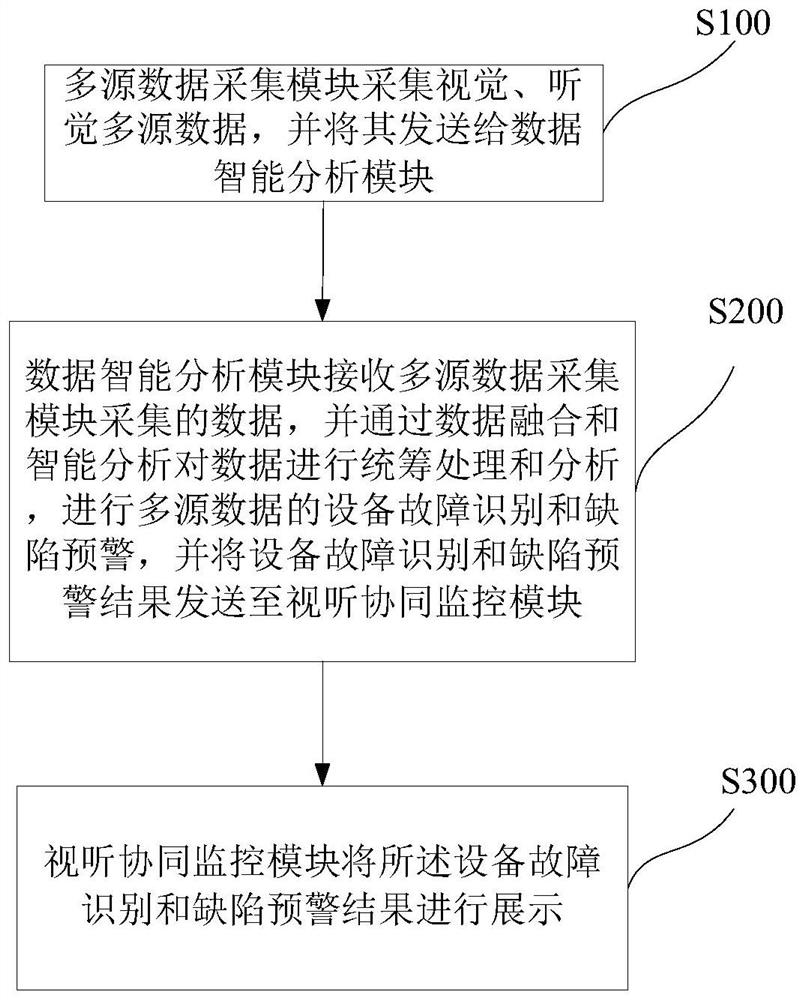

Visual and auditory collaborative power equipment inspection system and method

Pending | CN114093145A | Improve the active perception of status | Enhance attribute synergy cognition | Measurement devices | Alarms | Auditory sense | Active perception

The invention discloses a visual and auditory collaborative power equipment inspection system and method. The inspection system comprises a multi-source data acquisition module, an intelligent data analysis module and an audio-visual collaborative monitoring module. The multi-source data acquisition module acquires visual and auditory multi-source data and sends it to the intelligent data analysis module; the intelligent data analysis module processes and analyzes the received data as a whole through data fusion and intelligent analysis, performs equipment fault identification and defect early warning on the multi-source data, and sends the results to the audio-visual collaborative monitoring module, which displays them. By making full use of the multi-source data monitored by visual, auditory and other sensors, the system and method comprehensively improve active perception of power equipment status and collaborative cognition of its attributes, and improve the efficiency and accuracy of power equipment inspection.

Owner:XUJI GRP
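
The fusion and early-warning step could look like the following late-fusion sketch, which combines an image-based and a sound-based anomaly score per device and maps the result to three states. The scores, weights, thresholds and names are assumptions for illustration; the abstract does not specify the fusion or recognition models.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    equipment_id: str
    visual_score: float  # assumed 0..1 anomaly score from an image model
    audio_score: float   # assumed 0..1 anomaly score from an acoustic model


def fused_score(r: Reading, w_visual: float = 0.6, w_audio: float = 0.4) -> float:
    """Weighted late fusion of the two modalities (weights are assumptions)."""
    return w_visual * r.visual_score + w_audio * r.audio_score


def assess(r: Reading, warn_at: float = 0.4, fault_at: float = 0.7) -> str:
    """Map the fused score to fault / early warning / normal."""
    score = fused_score(r)
    if score >= fault_at:
        return "fault"
    if score >= warn_at:
        return "early_warning"
    return "normal"


for reading in (Reading("transformer-1", 0.9, 0.9),
                Reading("transformer-2", 0.2, 0.9),  # sounds wrong, looks fine
                Reading("breaker-3", 0.1, 0.1)):
    print(reading.equipment_id, assess(reading))
```

The second reading shows the point of combining senses: an acoustic anomaly alone is enough to raise an early warning even when the image score is low.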

Active sensing method based on spectral correlation for cognitive radio systems

Active | US9210016B2 | Improve performance | Maintain quality | Spectral gaps assessment | Pilot signal allocation | Quality of service | Frequency spectrum

A cognitive radio (CR) signal structure based on spectral correlation is designed for active sensing. In this signal structure, the known pilots used by the primary users (PUs) are duplicated and appropriately reallocated within the CR transmission signal. With this structure, the signal received by spectrum sensors becomes correlated across subcarriers when a PU reoccupies the band while the CR transmission is active, so PU activity can be detected easily by computing the spectral correlation function. Compared with the traditional cyclostationary feature detection scheme, this method enhances active sensing performance while maintaining the service quality of the CR system: it achieves better detection performance in the same detection time, reduces sensing time (to about 1/10 of the traditional sensing time), and still performs satisfactorily at low SNR and SINR.

Owner:NATIONAL TSING HUA UNIVERSITY
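
The detection statistic at the heart of this scheme can be illustrated with a normalized cross-correlation between the received values on two subcarriers across several OFDM symbols: close to 1 when the subcarriers carry correlated content, as the duplicated pilots produce when a PU reappears, and close to 0 otherwise. This is a simplified stand-in under stated assumptions: the subcarrier pairing, the detection threshold and the function names are hypothetical, and the patent's full spectral correlation function is not reproduced.

```python
import math
from typing import Sequence


def subcarrier_correlation(xk: Sequence[complex], xm: Sequence[complex]) -> float:
    """Normalized magnitude of sum(X_k * conj(X_m)) over OFDM symbols, in [0, 1]."""
    if len(xk) != len(xm) or not xk:
        raise ValueError("need two equal-length, non-empty symbol sequences")
    cross = sum(a * complex(b).conjugate() for a, b in zip(xk, xm))
    energy_k = sum(abs(a) ** 2 for a in xk)
    energy_m = sum(abs(b) ** 2 for b in xm)
    if energy_k == 0 or energy_m == 0:
        return 0.0
    return abs(cross) / math.sqrt(energy_k * energy_m)


def pu_detected(xk: Sequence[complex], xm: Sequence[complex], threshold: float = 0.5) -> bool:
    """Declare PU activity when the correlation reaches an assumed threshold."""
    return subcarrier_correlation(xk, xm) >= threshold


# Correlated pair (second subcarrier repeats the first, scaled) vs. an uncorrelated pair.
pilot = [1, 1j, -1, -1j]
print(subcarrier_correlation(pilot, [2 * p for p in pilot]))  # 1.0
print(pu_detected([1, 1, 1, 1], [1, -1, 1, -1]))              # False
```

Normalizing by both energies makes the statistic insensitive to received power, so a single threshold can be used across channel conditions; choosing that threshold for a target false-alarm rate is left open here.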

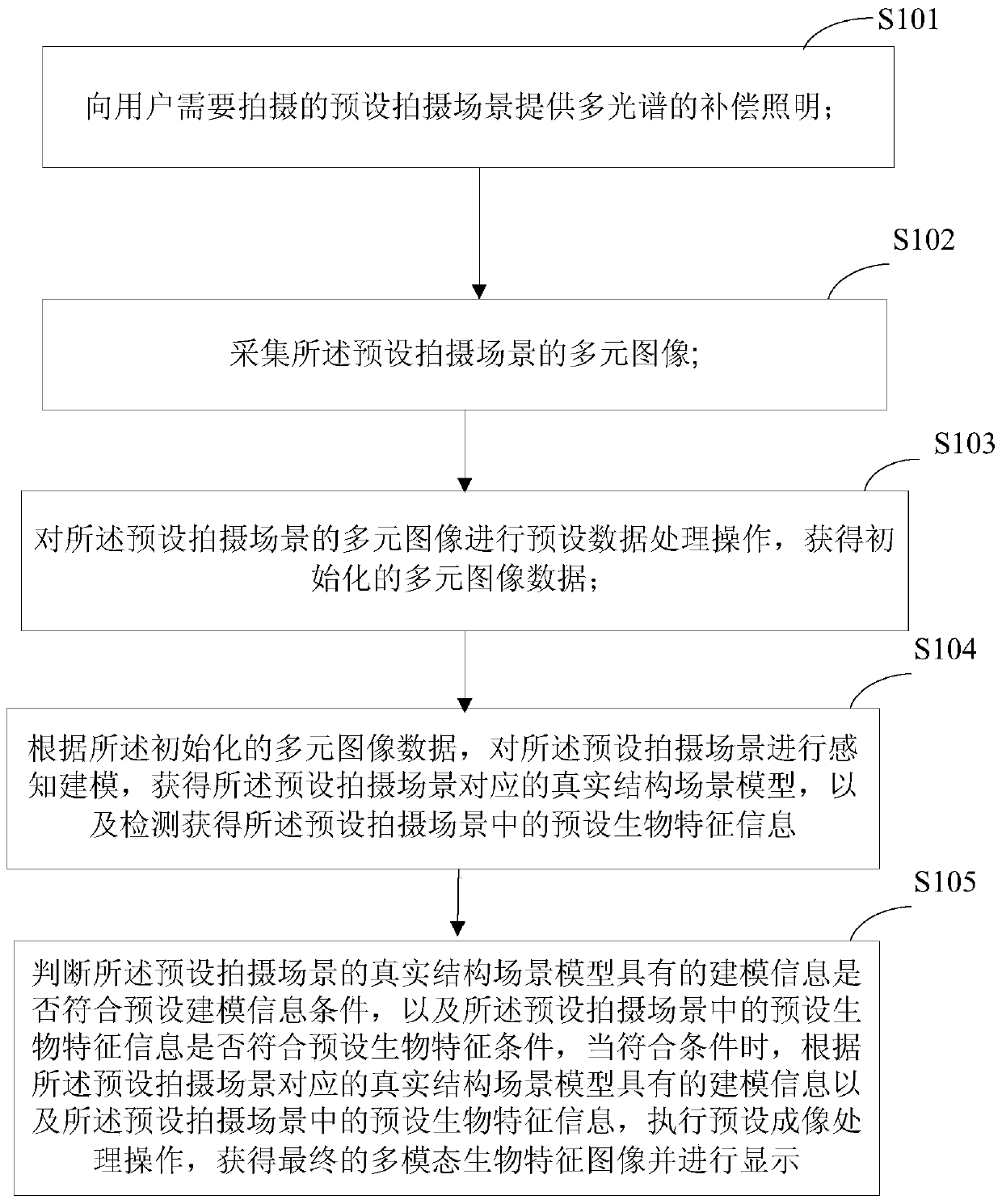

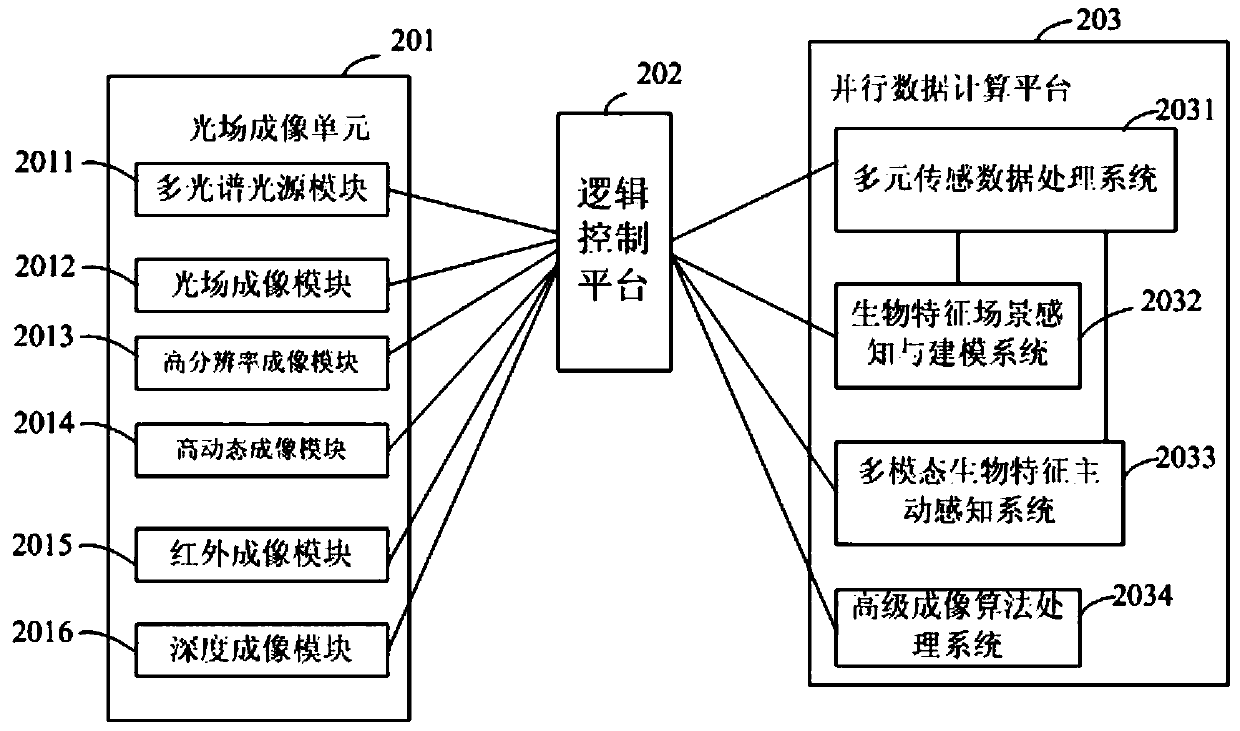

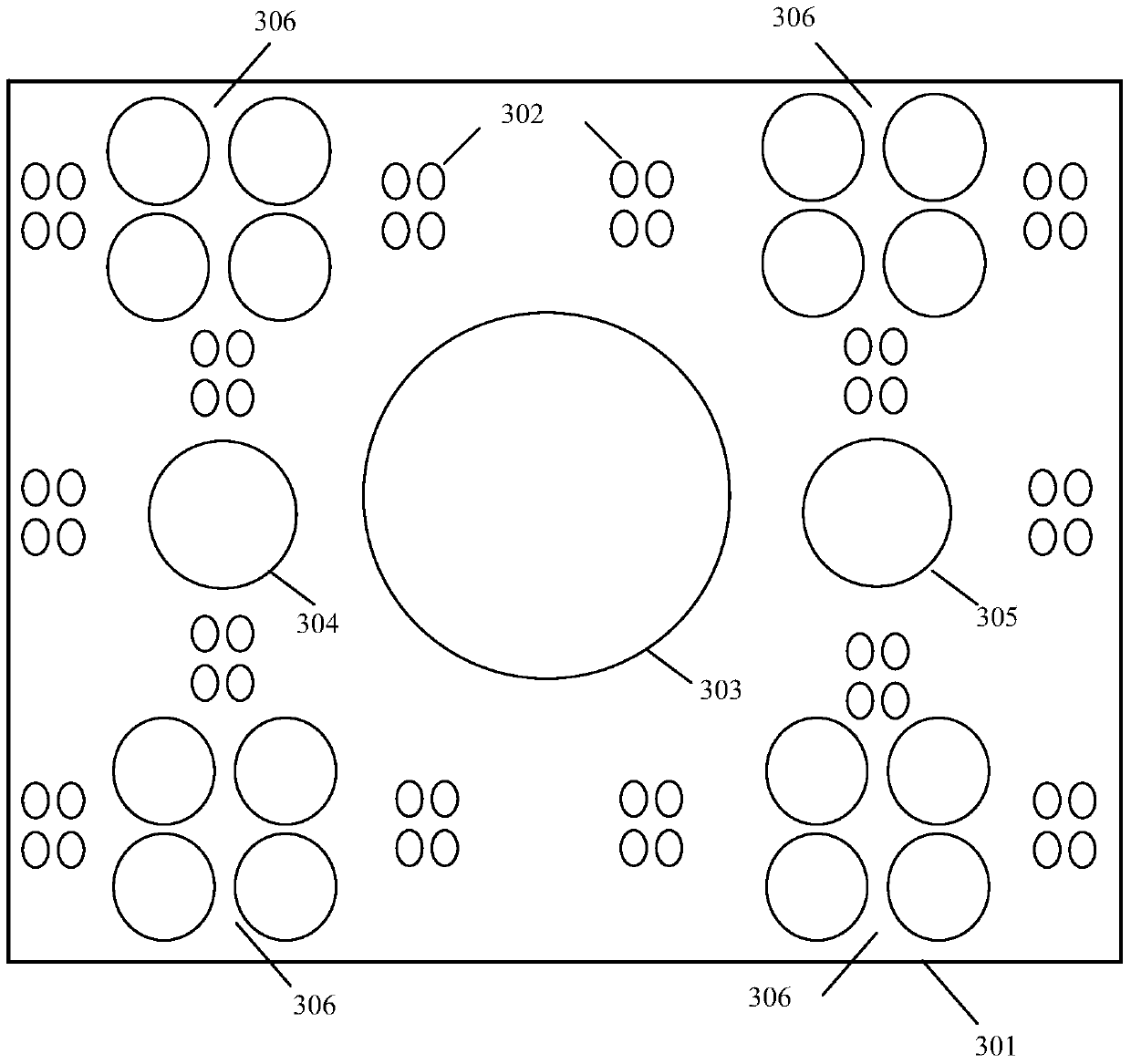

A method and device for acquiring multimodal biometric images in complex scenes

Active | CN105574525B | Easy to use | Efficient acquisition | Character and pattern recognition | Pattern recognition | Data processing system

The invention discloses a multi-modal biometric image acquisition device for complex scenes, comprising an active multi-component imaging unit, a logic control platform and a parallel data computing platform. The logic control platform is connected to the active multi-component imaging unit, and the parallel data computing platform is connected to the logic control platform. The parallel data computing platform specifically includes a multi-sensing data processing system, a biometric scene perception and modeling system, a multi-modal biometric active perception system and an advanced imaging algorithm processing system. The invention also discloses a corresponding method for acquiring multi-modal biometric images in complex scenes. The method and device can perform multi-modal recognition of the biometric features of multiple users under realistic complex scene conditions, meeting the need for identity recognition of multiple users in complex imaging scenes and enabling effective acquisition of iris, face and gait features over a wide range.

Owner:TIANJIN IRISTAR TECH LTD

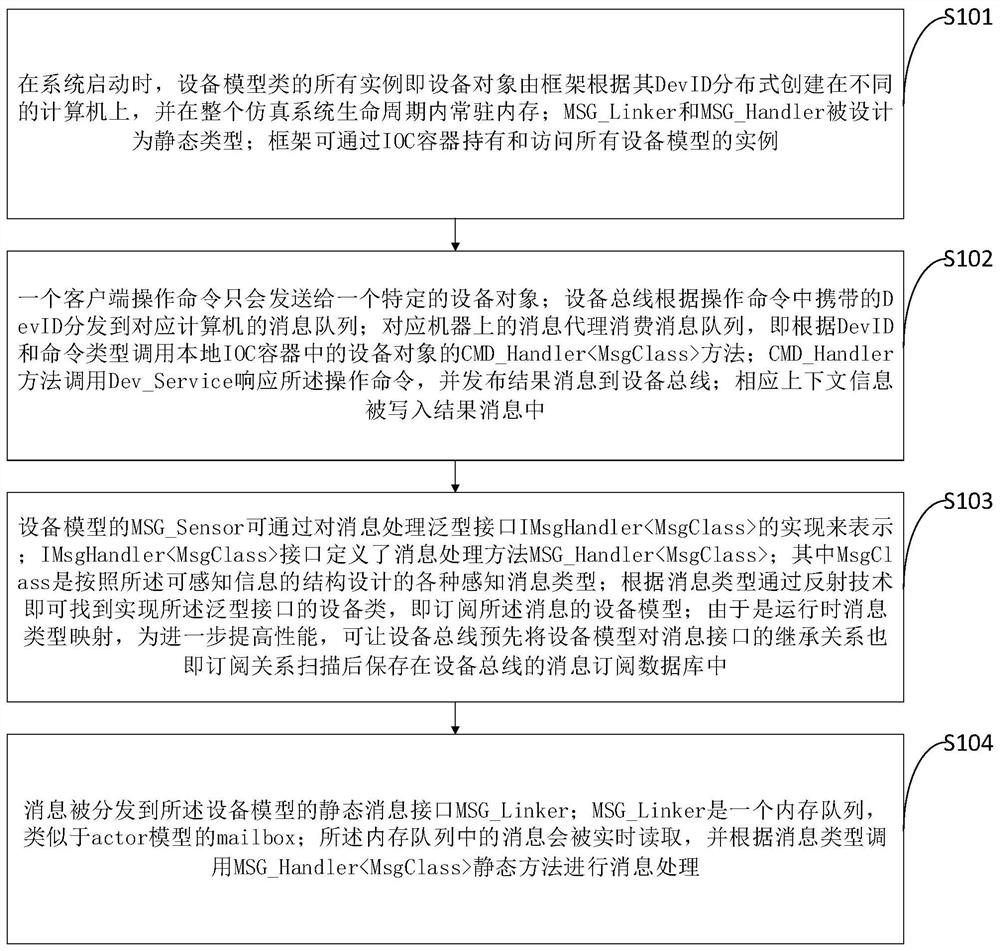

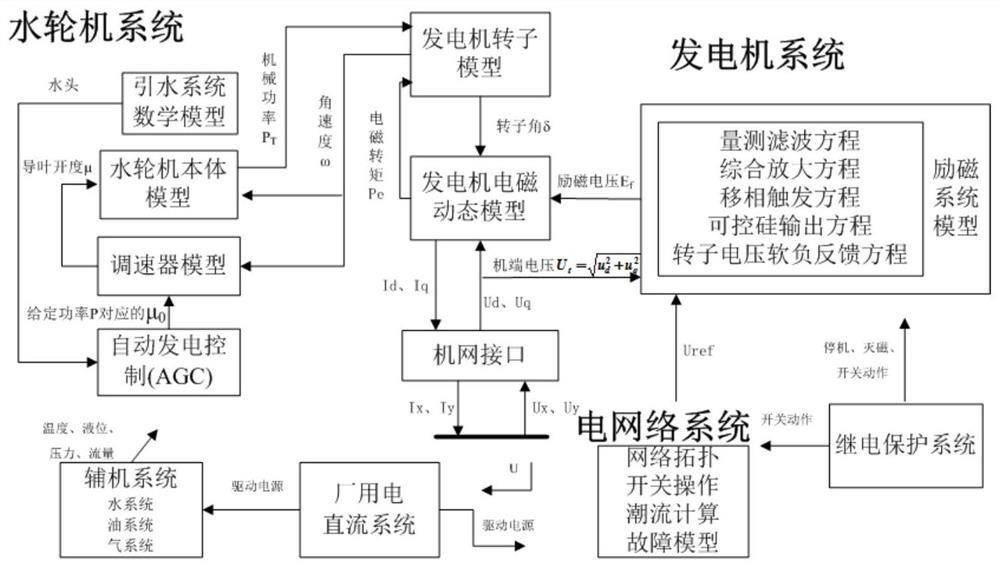

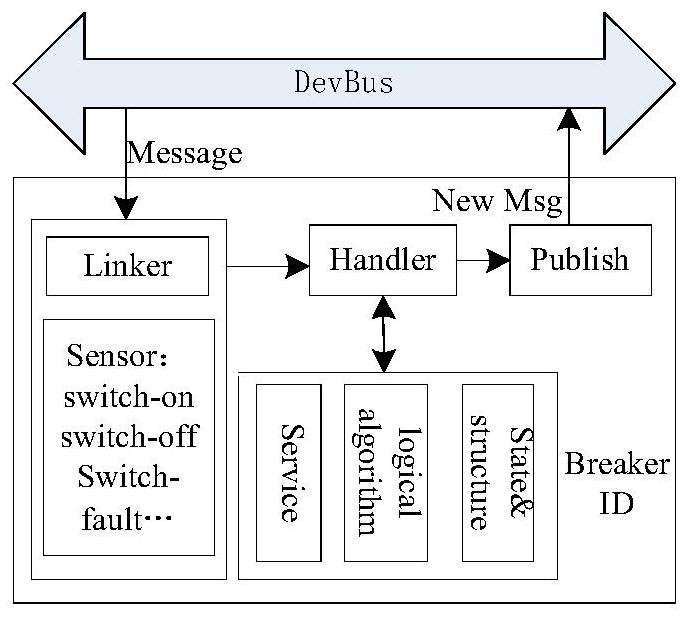

A hydropower station simulation method and simulation system based on active perception

Active | CN110161881B | Improve refinement | Facilitates loose coupling | Simulator control | Service composition | Active perception

The invention belongs to the technical field of hydropower station simulation control and discloses a hydropower station simulation method and simulation system based on active perception. Hydropower station equipment is used as the organizational unit for decoupling and for simulation services, and the intelligent perception and active services of hydropower equipment serve as the main thread driving the construction of the models and the simulation process. By rationally combining the service composition and interaction ideas of SOA (service-oriented architecture) and EDA (event-driven architecture) and integrating them seamlessly into the modeling objects of the hydropower environment, namely the simulation models of intelligent hydropower equipment, on-demand distribution of device perception information and event-driven service collaboration are achieved, completing the construction of the entire hydropower station simulation operating environment. Because the modeling method uses equipment as the basic unit of system decoupling and model organization, it better matches the operating principles of a real hydropower station, gives a clearer and more natural division of responsibilities, and is more conducive to refined modeling, loose coupling, reusability and scalability of models, as well as mass customization of hydropower station simulation systems.

Owner:CHINA THREE GORGES UNIV
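
The event-driven side of this approach (equipment models publish their perceived state, and services subscribe only to the information they need) can be sketched with a minimal in-process publish/subscribe bus. This illustrates the general EDA pattern, not the patent's implementation; `EventBus`, `EquipmentModel` and the `<device>/state` topic scheme are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[str, Dict[str, Any]], None]


class EventBus:
    """Minimal publish/subscribe bus: perception information goes only to subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: Dict[str, Any]) -> int:
        """Deliver payload to every handler of topic; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(topic, payload)
        return len(handlers)


class EquipmentModel:
    """A simulated device (e.g. a generating unit) that publishes state changes as events."""

    def __init__(self, device_id: str, bus: EventBus) -> None:
        self.device_id = device_id
        self.bus = bus
        self.state: Dict[str, Any] = {}

    def update(self, **changes: Any) -> None:
        self.state.update(changes)
        self.bus.publish(f"{self.device_id}/state", dict(self.state))


bus = EventBus()
load_log: List[float] = []
bus.subscribe("unit-1/state", lambda topic, s: load_log.append(s["load_mw"]))
unit = EquipmentModel("unit-1", bus)
unit.update(load_mw=120.0)
unit.update(load_mw=150.0, gate_opening=0.8)
print(load_log)  # [120.0, 150.0]
```

Because each device model only publishes and never calls services directly, devices and services stay loosely coupled, which is the property the abstract attributes to using equipment as the unit of decoupling.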

Intelligent control method for visual tracking

Inactive | CN100342388C | Easy to use | Really easy to use | Image enhancement | Character and pattern recognition | Active perception | Computer pattern recognition

This invention is a visual tracking control method that uses computer pattern recognition to track the movement of the eye's gaze point in real time. The method tracks the gaze point by building a view-angle boundary proportion model as a simulated data sample. With this method, a computer can automatically acquire a person's command information through active perception.

Owner:万众一 (Wan Zhongyi)
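
One plausible reading of a "view-angle boundary proportion model" is a calibration in which the user looks at the screen edges, the pupil positions at those boundaries are recorded, and later pupil positions are mapped proportionally between them. The sketch below implements that interpretation; it is an assumption, not the patent's documented method, and `Calibration` and `gaze_to_screen` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Calibration:
    """Pupil-centre coordinates (camera pixels) recorded while gazing at each screen edge."""
    left: float
    right: float
    top: float
    bottom: float


def gaze_to_screen(px: float, py: float, cal: Calibration,
                   width: int, height: int) -> Tuple[float, float]:
    """Map a pupil position to screen coordinates by its proportion between the boundaries."""
    u = (px - cal.left) / (cal.right - cal.left)
    v = (py - cal.top) / (cal.bottom - cal.top)
    u = min(max(u, 0.0), 1.0)  # clamp gazes slightly beyond the calibrated edges
    v = min(max(v, 0.0), 1.0)
    return (u * width, v * height)


cal = Calibration(left=100.0, right=200.0, top=50.0, bottom=100.0)
print(gaze_to_screen(150.0, 75.0, cal, 1920, 1080))  # (960.0, 540.0)
```

A linear proportion is the simplest possible model; real eye trackers usually need a nonlinear calibration because pupil displacement is not exactly proportional to gaze angle.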

Method, device and exploration method for intelligent agent to actively construct environmental scene map

Active | CN113111192B | Overcome limitations | Neural architectures | Neural learning methods | Data set | Active perception

Provided are a method for an agent to actively construct an environmental scene map based on visual information, an environment exploration method, and an intelligent device. The method includes: collecting the environmental scene images and the corresponding environmental scene map data set required for training the model; collecting the agent's environment exploration paths required for training the model; training the active exploration model using the environmental scene images, the corresponding scene map data set and the collected exploration paths; and generating actions with the trained active exploration model, with which the agent explores the environment to obtain 3D semantic point cloud data, and then constructing an environmental scene map from the 3D semantic point cloud data. The invention overcomes the limitation that traditional computer vision tasks could only perceive the environment passively; by exploiting the agent's capacity for active exploration and combining perception with movement, it achieves active perception, active exploration of the environment and active construction of a scene map of the environment, and it can be applied to a variety of vision tasks.

Owner:TSINGHUA UNIV

© 2025 PatSnap. All rights reserved.