Task load scheduling method, apparatus, device, and readable storage medium

A task load scheduling technology, applied in the field of artificial intelligence, that addresses problems such as low task processing efficiency, wasted computing resources, and the inability to fully utilize processor computing resources.

Examples

Embodiment 1

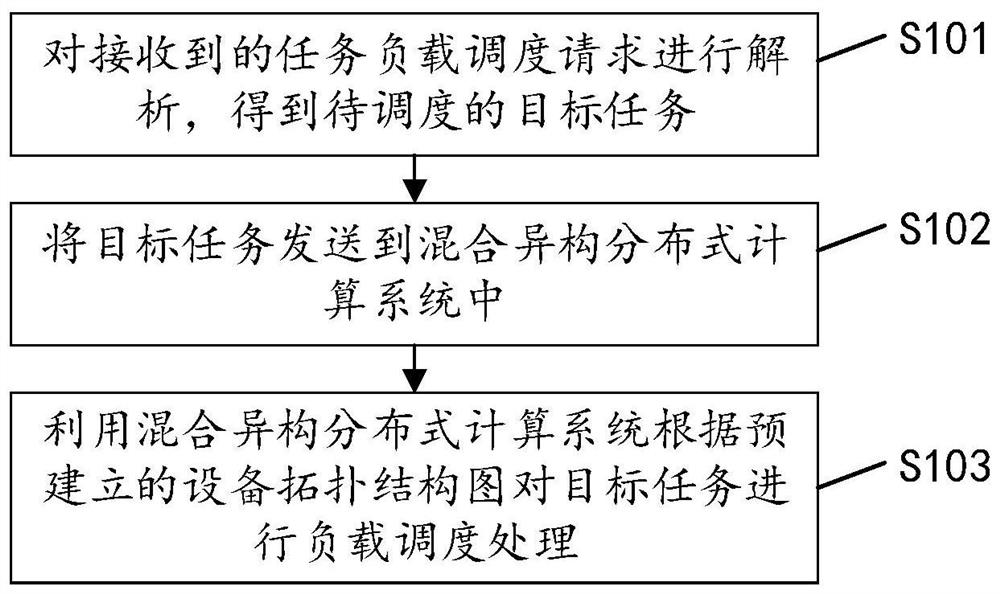

[0054] Referring to Figure 1, which is an implementation flowchart of the task load scheduling method in an embodiment of the present invention, the method may include the following steps:

[0055] S101: Parse the received task load scheduling request to obtain the target task to be scheduled.

[0056] When a deep learning network model training task needs to be processed, a task load scheduling request that includes the target task to be scheduled is sent to the task load scheduling center. The task load scheduling center receives the request and parses it to obtain the target task to be scheduled.
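Step S101 can be illustrated with a minimal sketch. The source does not specify the request format, so the JSON encoding, the `target_task` key, and the `TargetTask` fields below are all hypothetical choices made only for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class TargetTask:
    # Hypothetical fields; the source only states that the request
    # includes the target task to be scheduled.
    name: str
    payload: dict


def parse_scheduling_request(raw_request: str) -> TargetTask:
    """Parse a task load scheduling request and return its target task (S101)."""
    request = json.loads(raw_request)
    if "target_task" not in request:
        raise ValueError("scheduling request does not contain a target task")
    task = request["target_task"]
    return TargetTask(name=task["name"], payload=task.get("payload", {}))


# Example request for a deep learning training task (illustrative values).
raw = json.dumps({"target_task": {"name": "train-model", "payload": {"epochs": 3}}})
task = parse_scheduling_request(raw)
print(task.name)
```

A request without a target task is rejected early, so a malformed request never reaches the computing system.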

[0057] S102: Send the target task to the hybrid heterogeneous distributed computing system.

[0058] Here, the hybrid heterogeneous distributed computing system includes multiple computing devices with different computing architectures.
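As a rough sketch of step S102, the system can be modeled as a collection of devices that must span more than one computing architecture. The class names, the `submit` method, and the architecture labels ("CPU", "GPU") are assumptions for illustration; the source does not name specific architectures here, and how the system processes the task afterwards is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputingDevice:
    device_id: str
    architecture: str  # illustrative labels such as "CPU" or "GPU"


class HybridHeterogeneousSystem:
    """Hypothetical model of a system whose devices differ in architecture."""

    def __init__(self, devices):
        architectures = {device.architecture for device in devices}
        if len(architectures) < 2:
            raise ValueError("a hybrid heterogeneous system needs at least two architectures")
        self.devices = list(devices)
        self.pending_tasks = []

    def submit(self, task):
        """Accept a target task sent by the scheduling center (S102)."""
        self.pending_tasks.append(task)
        return len(self.pending_tasks)


system = HybridHeterogeneousSystem(
    [ComputingDevice("dev-a", "CPU"), ComputingDevice("dev-b", "GPU")]
)
queue_length = system.submit("train-model")
print(queue_length)
```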

[0059] A hybrid heterogeneous distr...

Embodiment 2

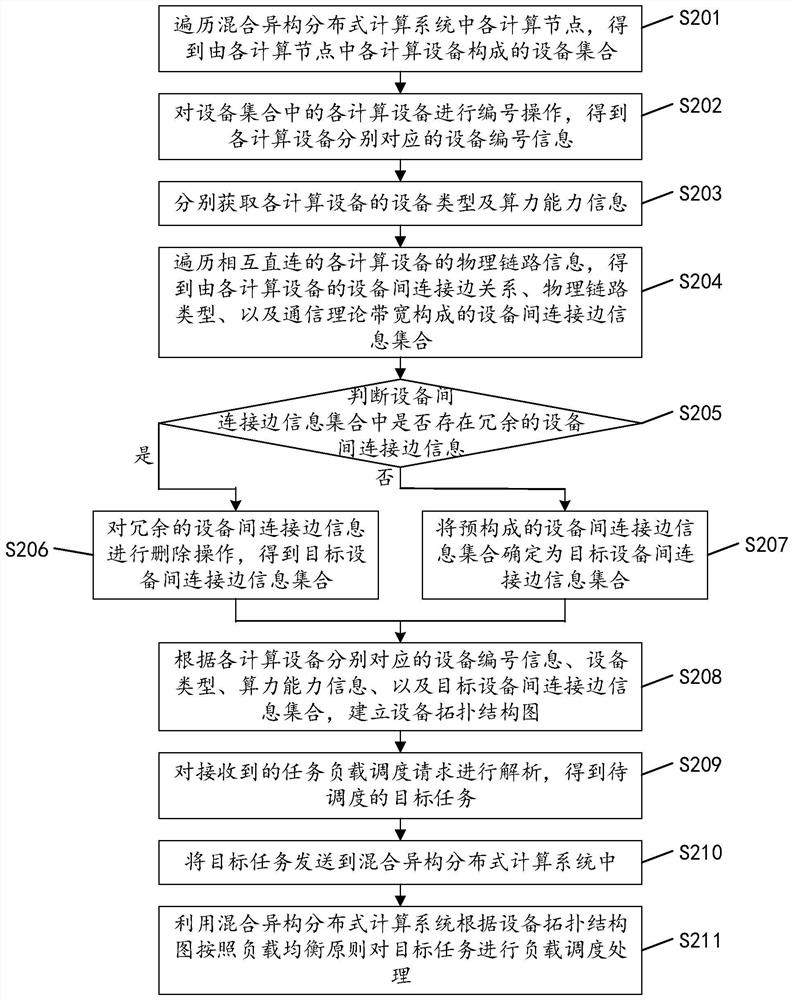

[0065] Referring to Figure 2, which is another implementation flowchart of the task load scheduling method in an embodiment of the present invention, the method may include the following steps:

[0066] S201: Traverse each computing node in the hybrid heterogeneous distributed computing system to obtain a device set composed of the computing devices in each computing node.

[0067] The hybrid heterogeneous distributed computing system includes multiple computing nodes, and each computing node includes at least one computing device, Device_Num. Each computing node in the system is traversed to obtain a device set composed of the computing devices in every node.
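The traversal in S201 might look like the following sketch. The `ComputingNode` and `ComputingDevice` types, their fields, and the node names are hypothetical; the only constraints taken from the text are that the system has multiple nodes and that each node holds at least one device.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ComputingDevice:
    node_name: str
    local_index: int
    architecture: str  # illustrative label, not specified by the source


@dataclass
class ComputingNode:
    name: str
    devices: list = field(default_factory=list)


def collect_device_set(nodes):
    """Traverse every node and gather its devices into one flat list (S201)."""
    device_set = []
    for node in nodes:
        if not node.devices:
            # The text requires at least one computing device per node.
            raise ValueError(f"node {node.name!r} has no computing device")
        device_set.extend(node.devices)
    return device_set


nodes = [
    ComputingNode("node-0", [ComputingDevice("node-0", 0, "CPU"),
                             ComputingDevice("node-0", 1, "GPU")]),
    ComputingNode("node-1", [ComputingDevice("node-1", 0, "GPU")]),
]
device_set = collect_device_set(nodes)
print(len(device_set))
```

A list is used instead of a Python `set` so that the traversal order is preserved for any later step that depends on it.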

[0068] S202: Perform a numbering operation on each computing device in the device set to obtain device number information corresponding to each computing device.

[0069] After obtaining the device set composed of each computing device in each computing node, perform...
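One simple way to realize the numbering operation in S202 is sketched below. The numbering scheme is not visible in the text, so consecutive integers starting at 0 are an assumption, as are the `number_devices` helper and the `(node name, local index)` tuples used to represent devices.

```python
def number_devices(device_set):
    """Assign a number to each device in traversal order (S202).

    Assumption: consecutive integers starting at 0; the source does not
    state the actual numbering scheme.
    """
    return {number: device for number, device in enumerate(device_set)}


# Devices represented as (node name, index within node); illustrative values.
device_set = [("node-0", 0), ("node-0", 1), ("node-1", 0)]
device_numbers = number_devices(device_set)

for number, device in device_numbers.items():
    print(number, device)
```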
