Human body action recognition method and device based on multi-modal feature fusion

A human action recognition technique based on multi-modal feature fusion, applied in the field of action recognition to address problems such as unsatisfactory recognition results.



Examples

Embodiment 1

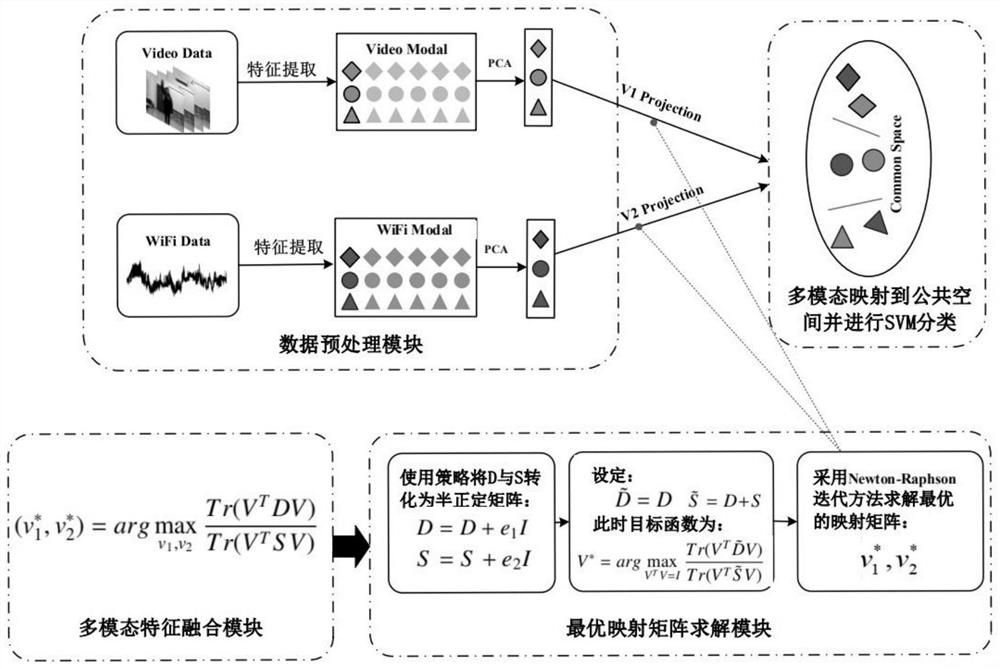

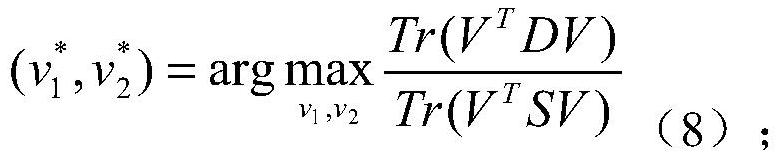

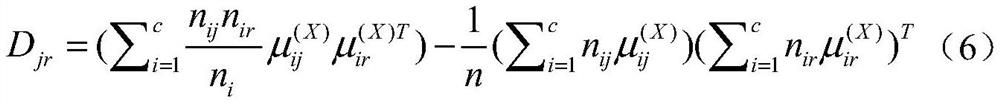

[0066] This embodiment provides a human action recognition method based on multi-modal feature fusion. The method fuses the CSI features of WiFi signals with video features, maps the two kinds of features into the same common space for discriminant analysis, and finally identifies the category of the human action. As shown in Figure 1, a data preprocessing module first converts the video and WiFi data of the multi-modal data set into numerical form. A multi-modal feature fusion model is then constructed, which defines the objective function for the mapping matrix, and the model is solved for the globally optimal mapping matrix. Finally, the input multi-modal samples are mapped into the common space using the mapping matrix, and SVM classification is performed to obtain the final classification result. The method includes the following steps:

[0067] Step 1, data set preprocessing: the Vi-Wi15 data set includes video information and the CSI information data of the...
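As a rough illustration of statistical CSI feature extraction, the sketch below summarises a window of complex CSI samples with per-subcarrier amplitude statistics. The excerpt does not say which statistics are used (paragraph [0142] only mentions "a standard statistical algorithm"), so the choice of mean, standard deviation, minimum, and maximum is an assumption, as are the function name `csi_statistical_features` and the `(time, subcarriers)` array layout.

```python
import numpy as np


def csi_statistical_features(csi):
    """Summarise a CSI window of shape (time, subcarriers) as one feature vector.

    The four statistics below are an assumption for illustration only;
    the source does not name the statistics it uses.
    """
    amplitude = np.abs(np.asarray(csi))  # complex CSI -> amplitude
    return np.concatenate([
        amplitude.mean(axis=0),  # average amplitude per subcarrier
        amplitude.std(axis=0),   # population standard deviation per subcarrier
        amplitude.min(axis=0),   # minimum amplitude per subcarrier
        amplitude.max(axis=0),   # maximum amplitude per subcarrier
    ])


# Tiny hand-made window: 2 time steps, 2 subcarriers (not real CSI data).
window = np.array([[3 + 4j, 1 + 0j],
                   [0 + 0j, 1 + 0j]])
features = csi_statistical_features(window)
print(features.shape)
```

Each window yields a fixed-length vector regardless of its duration, which is what lets the CSI modality be paired with a fixed-length video feature vector later on.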

Embodiment 2

[0141] This embodiment provides a human action recognition device based on multimodal feature fusion, including:

[0142] The data set preprocessing unit is used to extract the video features from the Vi-Wi15 data set with a convolutional neural network, and to extract the CSI features of the WiFi signal from the Vi-Wi15 data set with a standard statistical algorithm;

[0143] The multimodal feature fusion model construction unit is used to treat the obtained video features and the CSI features of the WiFi signal as two modalities, establish a multimodal feature fusion model, and define an objective function for solving the mapping matrix;

[0144] The mapping matrix solving unit is used to solve the objective function and obtain the globally optimal solution of the mapping matrix in the multimodal feature fusion model;

[0145] The action recognition unit is used to obtain the global optimal solution about the mapping matrix, and then p...
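The flow through these units (features per modality, one mapping matrix per modality, projection into a common space, classification) can be sketched on toy data. This is not the patent's model: the objective function is not shown in this excerpt, so ridge least-squares regression onto one-hot class targets is swapped in as a stand-in for learning the mapping matrices, and a simple argmax replaces the SVM classifier to keep the sketch dependent on NumPy only. The data is synthetic, and every name (`make_toy_modalities`, `fit_mapping`, `predict`) is hypothetical.

```python
import numpy as np


def make_toy_modalities(n_per_class=30, n_classes=3, d_video=8, d_csi=5, seed=0):
    """Synthetic stand-ins for video and CSI feature matrices (not real data)."""
    rng = np.random.default_rng(seed)
    labels = np.repeat(np.arange(n_classes), n_per_class)
    # Each class gets its own mean direction in each modality.
    video_means = 3.0 * np.eye(n_classes, d_video)
    csi_means = 3.0 * np.eye(n_classes, d_csi)
    video = video_means[labels] + 0.1 * rng.normal(size=(labels.size, d_video))
    csi = csi_means[labels] + 0.1 * rng.normal(size=(labels.size, d_csi))
    return video, csi, labels


def fit_mapping(features, targets, reg=1e-2):
    """Ridge least-squares mapping W so that features @ W approximates targets."""
    d = features.shape[1]
    gram = features.T @ features + reg * np.eye(d)
    return np.linalg.solve(gram, features.T @ targets)


def predict(video, csi, w_video, w_csi):
    """Map both modalities into the common space, fuse by summing, take argmax."""
    common = video @ w_video + csi @ w_csi
    return common.argmax(axis=1)


n_classes = 3
video_tr, csi_tr, y_tr = make_toy_modalities(seed=0)
video_te, csi_te, y_te = make_toy_modalities(seed=1)

one_hot = np.eye(n_classes)[y_tr]          # common space indexed by class
w_video = fit_mapping(video_tr, one_hot)   # mapping matrix for video, shape (8, 3)
w_csi = fit_mapping(csi_tr, one_hot)       # mapping matrix for CSI, shape (5, 3)

accuracy = float((predict(video_te, csi_te, w_video, w_csi) == y_te).mean())
print(f"toy accuracy: {accuracy:.2f}")
```

The point of the sketch is the shape of the computation: two feature matrices of different dimensionality end up in one shared space where a single classifier can act on them. In a closer reproduction, the projected features would be passed to an SVM, as the embodiment describes.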
