Point cloud three-dimensional reconstruction method based on RGB data and generative adversarial network

A technique in the research field of point cloud data processing that combines three-dimensional reconstruction with RGB images. It addresses the complex operation and coarse models of existing methods that generate point clouds and perform three-dimensional reconstruction from image sequences, and it offers convenient processing, low hardware requirements, and convenient data collection.

Embodiment Construction

[0038] The present invention is further described below with reference to the embodiments and the accompanying drawings:

[0039] Technical scheme of the embodiment

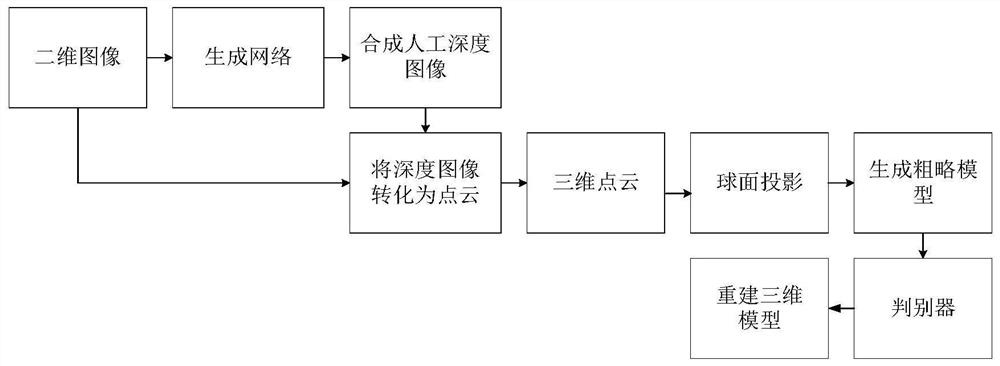

[0040] Step 1: Create a depth image via a generative network.

[0041] To convert RGB images into the corresponding depth images, the generator part of the GAN uses a modified pix2pixHD, which produces high-quality synthetic depth images from RGB images while reducing computer hardware requirements.

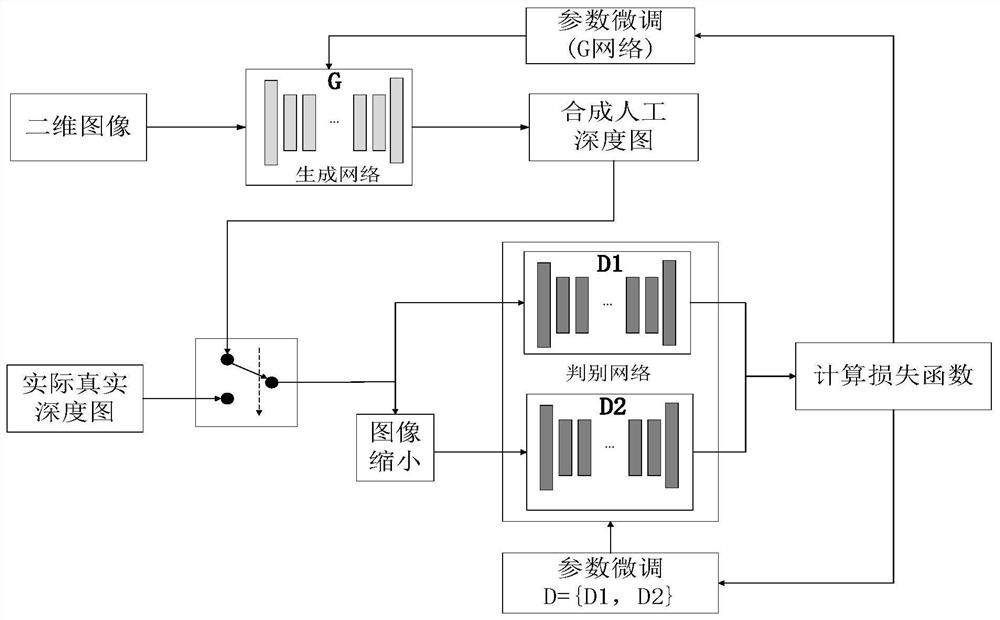

[0042] A single global generator is used for the pix2pixHD model. The generator G consists of three components: a convolutional front-end, a set of residual blocks, and a transposed convolutional back-end. The discriminator D is split into two sub-discriminators, D1 and D2. D1 processes the full-resolution synthetic images produced by the generator, while D2 processes the half-scale synthetic images. Therefore, discriminator D1 provides a global view of the depth image to guide ge...
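The two-scale discriminator input described in [0042] can be illustrated with a minimal sketch. It only shows how one synthetic depth image might be turned into the full-resolution input for D1 and the half-scale input for D2. The 2x2 average pooling, the names `half_scale` and `discriminator_inputs`, and the sample values are assumptions made for illustration; the source states only that D2 receives half-scale images and does not specify the downsampling operator.

```python
from typing import Dict, List

# A single-channel depth image stored as rows of floats (illustrative type).
Image = List[List[float]]


def half_scale(img: Image) -> Image:
    """Downsample by 2x2 average pooling.

    Assumption: the source says only "half-scale"; average pooling is one
    common choice and is used here purely for illustration.
    """
    # Ignore a trailing odd row or column so every 2x2 window is complete.
    h = len(img) // 2 * 2
    w = len(img[0]) // 2 * 2
    return [
        [
            (img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]


def discriminator_inputs(synthetic_depth: Image) -> Dict[str, Image]:
    """Return the image each sub-discriminator would see (hypothetical helper)."""
    return {
        "D1": synthetic_depth,              # full-resolution synthetic image
        "D2": half_scale(synthetic_depth),  # half-scale synthetic image
    }


# Made-up 4x4 depth values standing in for a generator output.
depth = [
    [1.0, 3.0, 5.0, 7.0],
    [1.0, 3.0, 5.0, 7.0],
    [2.0, 2.0, 4.0, 4.0],
    [2.0, 2.0, 4.0, 4.0],
]
inputs = discriminator_inputs(depth)
```

In a real training loop, each sub-discriminator would be a convolutional network scoring its own input; the sketch covers only the input preparation step.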
