Mass computing node resource monitoring and management method for high-performance computer

A technology for monitoring compute nodes and their resources, applied in computing, resource allocation, program control design, and related fields. It addresses degraded system performance, increased load on the control node, and an increased width of the communication tree, and thereby improves system performance and reduces load.


Embodiment Construction

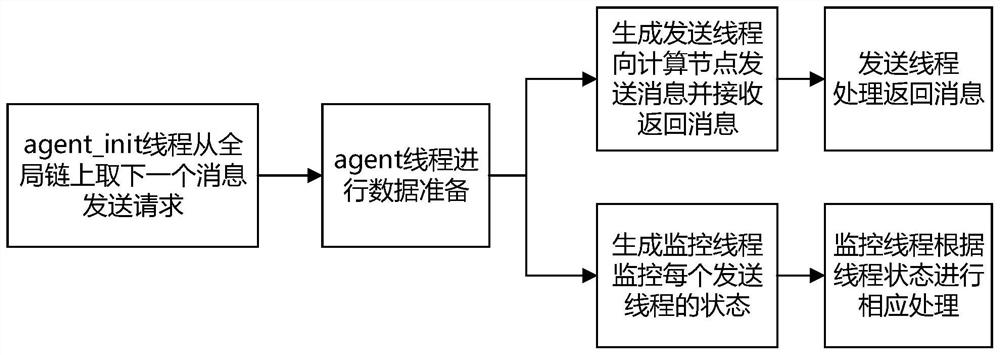

[0055] First, this embodiment describes an implementation in which the control node alone completes the entire process of handling and sending requests. When the number of nodes is large, this places a heavy load on the control node. The overall process is shown in figure 1, and the specific workflow is as follows:

[0056] In the first step, as long as the total number of related threads does not exceed the thread upper limit, the control thread (agent_init thread) repeatedly removes a message-sending request from the request chain and creates a worker thread (agent thread) to process it;
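The dispatch rule in the first step can be illustrated with a minimal Python sketch. It assumes the request chain can be modeled as a `collections.deque`, that the thread upper limit can be enforced with a bounded semaphore, and that a finite batch of requests is drained rather than polled forever. `AgentDispatcher`, `max_threads`, and the placeholder work inside `agent` are hypothetical; only the `agent_init`/`agent` roles come from the text.

```python
import collections
import threading
import time


class AgentDispatcher:
    """Hypothetical control-node dispatcher: one agent_init loop, bounded agent workers."""

    def __init__(self, max_threads):
        self.slots = threading.BoundedSemaphore(max_threads)  # assumed thread upper limit
        self.lock = threading.Lock()
        self.active = 0   # agent threads currently running
        self.peak = 0     # highest concurrency observed
        self.results = []

    def agent(self, request):
        """Worker (agent) thread: handle one message-sending request."""
        with self.lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        try:
            time.sleep(0.01)  # placeholder for data preparation and sending
            with self.lock:
                self.results.append(f"sent:{request}")
        finally:
            with self.lock:
                self.active -= 1
            self.slots.release()  # free a slot so agent_init can start another worker

    def agent_init(self, requests):
        """Control (agent_init) loop: dequeue requests without exceeding the limit."""
        workers = []
        while requests:
            self.slots.acquire()  # blocks while max_threads agents are already running
            request = requests.popleft()
            worker = threading.Thread(target=self.agent, args=(request,))
            worker.start()
            workers.append(worker)
        for worker in workers:
            worker.join()
        return self.results


if __name__ == "__main__":
    dispatcher = AgentDispatcher(max_threads=4)
    out = dispatcher.agent_init(collections.deque(range(10)))
    print(len(out), dispatcher.peak <= 4)  # 10 True
```

A semaphore makes the control thread block at the limit instead of busy-waiting; the text itself does not say how the limit is enforced.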

[0057] The second step is data preparation by the worker thread. This mainly consists of deciding whether the message is sent over a star structure or a tree structure; if a tree structure is used, the target nodes are also divided into groups;
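The data-preparation decision in the second step might look like the following Python sketch. The text does not state how the star/tree choice is made or how target nodes are grouped, so the node-count threshold (`star_threshold`), the fan-out (`tree_width`), the contiguous near-equal grouping, and the names `SendPlan`, `split_into_groups`, and `prepare_send` are all assumptions for illustration. In tree mode, an arrangement assumed here (not stated in the text) is that the first node of each group forwards the message to the rest of its group.

```python
from dataclasses import dataclass, field


@dataclass
class SendPlan:
    mode: str                                   # "star" or "tree"
    groups: list = field(default_factory=list)  # node lists, one per branch


def split_into_groups(nodes, width):
    """Split nodes into at most `width` contiguous groups of near-equal size."""
    if not nodes:
        return []
    n_groups = min(width, len(nodes))
    base, extra = divmod(len(nodes), n_groups)
    groups, start = [], 0
    for i in range(n_groups):
        size = base + (1 if i < extra else 0)  # first `extra` groups get one more node
        groups.append(nodes[start:start + size])
        start += size
    return groups


def prepare_send(nodes, star_threshold=8, tree_width=4):
    """Hypothetical rule: star for small target sets, grouped tree otherwise."""
    if len(nodes) <= star_threshold:
        return SendPlan("star", [[n] for n in nodes])  # one direct send per node
    return SendPlan("tree", split_into_groups(nodes, tree_width))


if __name__ == "__main__":
    plan = prepare_send([f"cn{i}" for i in range(10)])
    print(plan.mode, [len(g) for g in plan.groups])  # tree [3, 3, 2, 2]
```

With two target nodes, `prepare_send(["a", "b"])` falls back to a star with one direct connection per node.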

[0058] The third step is for...
