Multi-sensor fusion intelligent parking system and method

A multi-sensor fusion intelligent parking technology built on a sensor subsystem, applicable to driving systems, intelligent parking systems, parking space detection, and autonomous parking path planning. It addresses problems such as support for only a single parking scenario, inability to detect the types of obstacles, and low parking space detection accuracy, and achieves a wide recognition range.


Examples

Embodiment 1

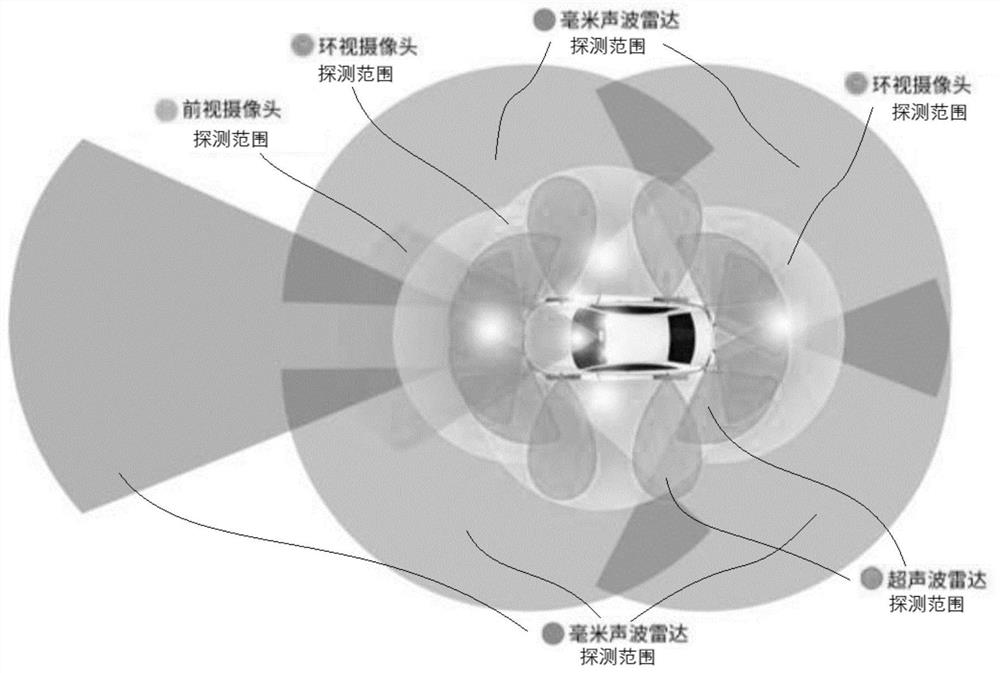

[0070] As shown in Figure 1, the sensor subsystem (unit) includes a (high-definition) camera and a radar positioning detection component. The (high-definition) camera includes a front-view camera and multiple surround-view (fisheye / wide-angle) cameras; the radar positioning detection component includes multiple ultrasonic radars and multiple millimeter-wave (mmWave) radars.

[0071] 1. Sensor unit (subsystem):

[0072] The sensor unit includes: four fisheye (wide-angle) cameras on the front, rear, left, and right sides of the vehicle and a front-view camera at the front of the vehicle; a total of 8 ultrasonic ranging sensors, 4 on the two sides of the vehicle and 4 at the front and rear; and four millimeter-wave radars at the four corners of the car body together with a forward-facing main millimeter-wave radar. Among them, the installation positions of the four came...
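The sensor layout in paragraph [0072] can be written down as a small configuration, which makes the sensor counts easy to check. This is a minimal Python sketch; the `Sensor` type, the position labels, the split of the ultrasonic sensors (two per side, two per end), and the count of one forward-facing main radar are assumptions for illustration, not details taken from the patent.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    kind: str      # "fisheye_camera", "front_camera", "ultrasonic", "mmwave_radar"
    position: str  # hypothetical mounting-location label


def build_sensor_suite() -> list[Sensor]:
    """Sensor layout following paragraph [0072]; position labels are illustrative."""
    # Four surround-view fisheye cameras plus one front-view camera.
    suite = [Sensor("fisheye_camera", side) for side in ("front", "rear", "left", "right")]
    suite.append(Sensor("front_camera", "front"))
    # 4 ultrasonic sensors on the two sides (assumed 2 per side) ...
    suite += [Sensor("ultrasonic", f"{side}_{i}") for side in ("left", "right") for i in range(2)]
    # ... and 4 at the front and rear (assumed 2 per end): 8 in total.
    suite += [Sensor("ultrasonic", f"{end}_{i}") for end in ("front", "rear") for i in range(2)]
    # Four corner mmWave radars plus a forward-facing main radar (count of 1 is assumed).
    corners = ("front_left", "front_right", "rear_left", "rear_right")
    suite += [Sensor("mmwave_radar", corner) for corner in corners]
    suite.append(Sensor("mmwave_radar", "front_main"))
    return suite


counts = Counter(s.kind for s in build_sensor_suite())
print(dict(counts))
```

Keeping the layout as data rather than hard-coding it lets the same processing code be reused if a vehicle variant adds or drops sensors.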

Embodiment 2

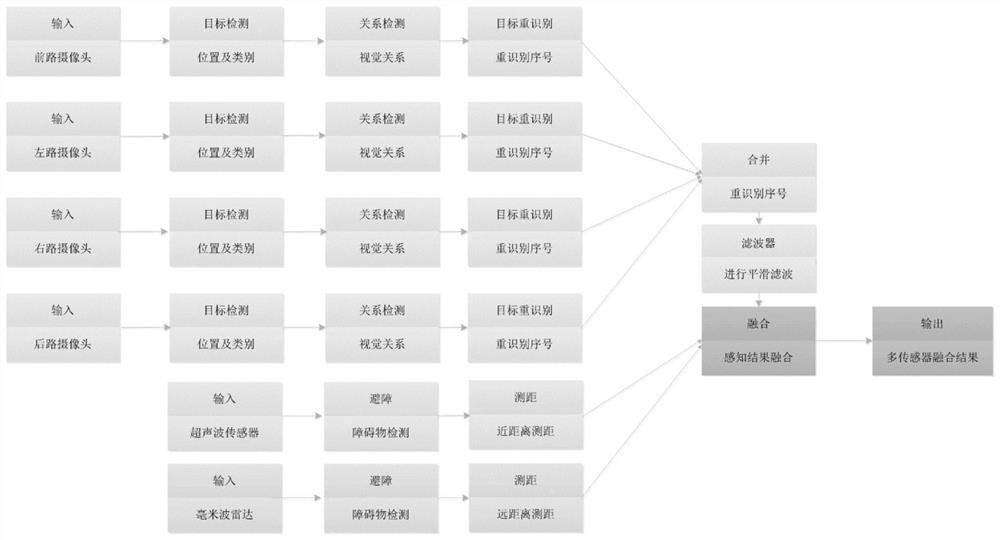

[0125] The information processing subsystem also includes an original target detection component and a target fusion detection component. The original target detection component includes a graphic (image) target detection module, a relationship detection module, an ID number tagging module, a tag merging module, and a tag filtering (preprocessing) module; the target fusion detection component includes a point cloud target detection module, a multi-sensor fusion module, and a path planning module.
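The excerpt does not specify how the multi-sensor fusion module works. One common way to combine camera detections (which carry an obstacle category) with ultrasonic and mmWave point-cloud targets (which carry accurate range) is gated nearest-neighbour association, sketched below in Python. The class names, the 0.5 m gate, and the rule of taking position from the range sensor and category from the camera are all assumptions; this is an illustrative substitute, not the patent's fusion algorithm.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraTarget:
    category: str  # class label from the image detector
    x: float       # position in the vehicle frame, metres
    y: float


@dataclass
class PointCloudTarget:
    x: float  # centroid of an ultrasonic / mmWave return cluster, metres
    y: float


@dataclass
class FusedTarget:
    category: str
    x: float
    y: float
    range_confirmed: bool  # True if a point-cloud target supports this target


def fuse(cams: list[CameraTarget], clouds: list[PointCloudTarget],
         gate_m: float = 0.5) -> list[FusedTarget]:
    """Greedy gated nearest-neighbour association of camera and point-cloud targets."""
    unused = list(range(len(clouds)))
    fused: list[FusedTarget] = []
    for cam in cams:
        best, best_d = None, gate_m
        for j in unused:
            d = math.hypot(cam.x - clouds[j].x, cam.y - clouds[j].y)
            if d <= best_d:
                best, best_d = j, d
        if best is None:
            # No range return inside the gate: keep the camera-only estimate.
            fused.append(FusedTarget(cam.category, cam.x, cam.y, False))
        else:
            unused.remove(best)
            # Assumption: range sensors localise better, cameras classify better.
            fused.append(FusedTarget(cam.category, clouds[best].x, clouds[best].y, True))
    # Unmatched range returns are still obstacles, just of unknown category.
    fused += [FusedTarget("unknown", clouds[j].x, clouds[j].y, True) for j in unused]
    return fused


cams = [CameraTarget("pedestrian", 2.0, 1.0), CameraTarget("cone", 5.0, -1.0)]
clouds = [PointCloudTarget(2.1, 1.1), PointCloudTarget(8.0, 0.0)]
for target in fuse(cams, clouds):
    print(target)
```

Greedy association depends on the order of the camera targets; a production system would more likely solve a global assignment (for example with the Hungarian algorithm) and track targets across frames.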

[0126] As shown in Figure 2:

[0127] (1) Obtain the fisheye images collected by at least four fisheye cameras on the front, rear, left, and right sides of the vehicle, and the point cloud data from the ultrasonic sensors and millimeter-wave radars;

[0128] (2) The graphic target detection module feeds the fisheye images collected by the cameras into a convolutional neural network, and the ID number tagging module uses the target detection algorithm to obtain the category and position ...
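Steps (1) and (2) are truncated here, but the module names in [0125] suggest a chain of ID tagging, tag merging, and tag filtering after image detection. The following Python sketch shows one hypothetical way such a chain could work on detections from the four fisheye cameras: every detection gets an ID number, same-category detections from overlapping cameras that land close together are merged, and low-confidence tags are dropped. `Detection`, `tag_ids`, `merge_tags`, `filter_tags`, the 0.3 m merge distance, and the 0.5 score threshold are illustrative assumptions, and detections are assumed to be already projected onto the ground plane in the vehicle frame.

```python
import math
from dataclasses import dataclass
from itertools import count


@dataclass
class Detection:
    camera: str       # which fisheye camera produced the detection
    category: str     # class label from the CNN detector
    x: float          # ground-plane position in the vehicle frame, metres (assumed projected)
    y: float
    score: float      # detector confidence in [0, 1]
    tag_id: int = -1  # filled in by tag_ids()


def tag_ids(dets: list[Detection]) -> list[Detection]:
    """Give each raw detection a unique ID number (mutates and returns the list)."""
    ids = count()
    for d in dets:
        d.tag_id = next(ids)
    return dets


def merge_tags(dets: list[Detection], merge_dist_m: float = 0.3) -> list[Detection]:
    """Merge same-category detections seen by overlapping cameras, keeping the highest score."""
    merged: list[Detection] = []
    for d in sorted(dets, key=lambda det: det.score, reverse=True):
        duplicate = any(
            m.category == d.category and math.hypot(m.x - d.x, m.y - d.y) < merge_dist_m
            for m in merged
        )
        if not duplicate:
            merged.append(d)
    return merged


def filter_tags(dets: list[Detection], min_score: float = 0.5) -> list[Detection]:
    """Drop low-confidence tags before they reach the fusion stage."""
    return [d for d in dets if d.score >= min_score]


raw = [
    Detection("front", "car", 3.0, 1.0, 0.9),
    Detection("left", "car", 3.1, 1.1, 0.8),    # same car seen by the overlapping left camera
    Detection("rear", "cone", -4.0, 0.0, 0.3),  # weak detection
]
out = filter_tags(merge_tags(tag_ids(raw)))
print([(d.tag_id, d.camera, d.category) for d in out])
```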

Embodiment 3

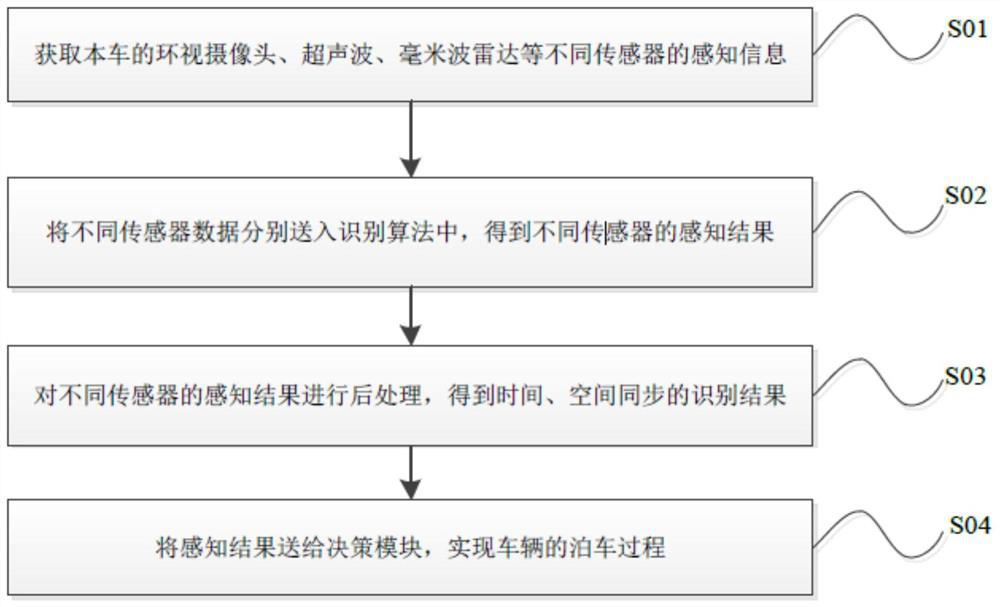

[0138] As shown in Figure 3, the steps of the multi-sensor fusion intelligent parking method are as follows:

[0139] S01: Obtain perception information from the vehicle's different sensors, such as the surround-view cameras, ultrasonic sensors, and millimeter-wave radars;

[0140] S02: Feed each sensor's data into its corresponding recognition algorithm to obtain per-sensor perception results;

[0141] S03: Post-process the per-sensor perception results to obtain temporally and spatially synchronized recognition results;

[0142] S04: Send the synchronized perception results to the decision-making module to carry out the vehicle's parking maneuver.
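Step S03 names time and space synchronization without detailing it. A minimal Python sketch, assuming each sensor has a known 2-D mounting pose and timestamped outputs: spatial synchronization transforms sensor-frame points into the common vehicle frame (x forward, y left), and temporal synchronization picks the measurement nearest a reference timestamp, such as the camera frame time, within a tolerance. `Extrinsic`, `to_vehicle_frame`, `nearest_in_time`, the 50 ms tolerance, and the calibration values are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class Extrinsic:
    """2-D mounting pose of a sensor in the vehicle frame (hypothetical calibration)."""
    tx: float   # metres forward of the vehicle origin
    ty: float   # metres to the left of the vehicle origin
    yaw: float  # radians, counter-clockwise from the vehicle's forward axis


def to_vehicle_frame(px: float, py: float, ext: Extrinsic) -> tuple[float, float]:
    """Spatial sync: rotate a sensor-frame point by the mounting yaw, then translate it."""
    c, s = math.cos(ext.yaw), math.sin(ext.yaw)
    return (ext.tx + c * px - s * py, ext.ty + s * px + c * py)


def nearest_in_time(stamped: list[tuple[float, Any]], t_ref: float,
                    tolerance_s: float = 0.05) -> Optional[Any]:
    """Temporal sync: return the measurement closest to t_ref, or None if none is close enough."""
    if not stamped:
        return None
    t, value = min(stamped, key=lambda tv: abs(tv[0] - t_ref))
    return value if abs(t - t_ref) <= tolerance_s else None


# A hypothetical corner radar mounted 3.6 m forward, 0.8 m left, facing left (yaw = 90 degrees).
radar = Extrinsic(tx=3.6, ty=0.8, yaw=math.pi / 2)
print(to_vehicle_frame(1.0, 0.0, radar))  # a return 1 m straight ahead of the radar

# Radar frames at 0, 40 and 100 ms, matched to a camera frame captured at 50 ms.
radar_frames = [(0.00, "frame_a"), (0.04, "frame_b"), (0.10, "frame_c")]
print(nearest_in_time(radar_frames, t_ref=0.05))
```

Nearest-timestamp matching is the simplest option; interpolating between the two bracketing measurements, or compensating for the vehicle's own motion between timestamps, can be more accurate.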
