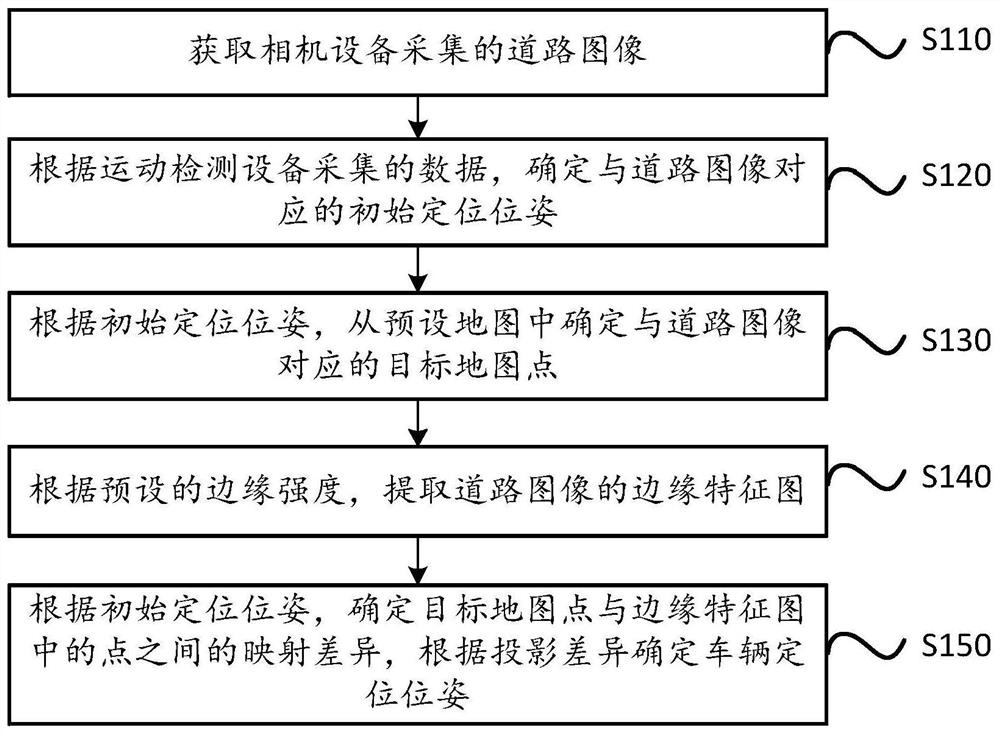

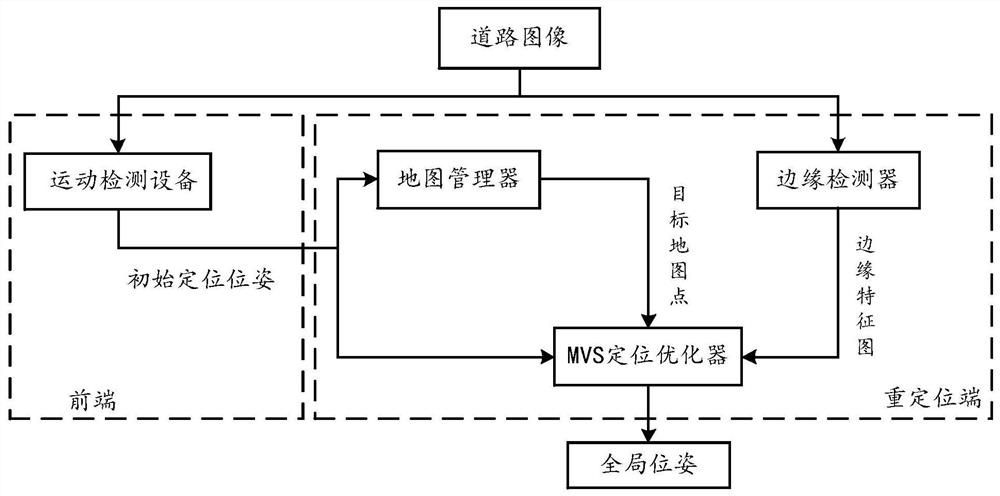

Vision-based vehicle positioning method and device and vehicle-mounted terminal

A vision-based vehicle positioning technology, applied in image data processing, instruments, and related fields, that addresses problems such as the inability to perform visual positioning, low visual positioning effectiveness, and difficulties with high-precision maps.



Examples

Embodiment approach 1

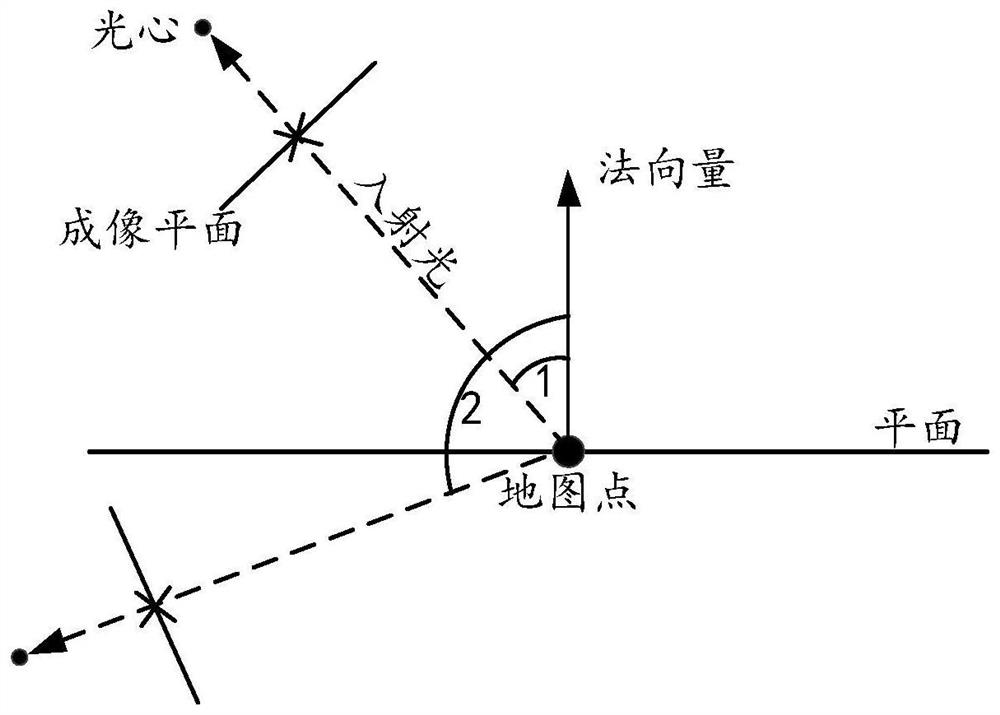

[0155] Embodiment 1: The transformation matrix between the world coordinate system and the camera coordinate system is determined according to the value of the estimated pose. According to the transformation matrix and the projection relationship between the camera coordinate system and the image coordinate system, the target map point is mapped to the image coordinate system to obtain the first mapped position of the target map point. The projection difference between the first mapped position and the position of the point in the edge feature map in the image coordinate system is then calculated.

[0156] Wherein, the camera coordinate system is the three-dimensional coordinate system where the camera device is located, and the image coordinate system is the coordinate system where the road image is located. The estimated pose is the pose of the vehicle in the world coordinate system. According to the value of the estimated pose, the transformation matrix between the w...
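The mapping in Embodiment 1 can be illustrated with a minimal sketch. It is not the patent's implementation, and several things in it are assumptions: the function names are hypothetical, the world-to-camera transform is passed in directly as a rotation `R_wc` and translation `t_wc` (that is, the camera frame is taken to coincide with the vehicle frame of the estimated pose), the projection relationship is a plain pinhole model with intrinsics `(fx, fy, cx, cy)`, and the "projection difference" is taken to be the mean distance from each projected map point to its nearest edge pixel.

```python
import math

def world_to_camera(point_w, R_wc, t_wc):
    """Apply the assumed world-to-camera transform p_c = R_wc * p_w + t_wc."""
    return tuple(
        sum(R_wc[i][j] * point_w[j] for j in range(3)) + t_wc[i]
        for i in range(3)
    )

def project(point_c, fx, fy, cx, cy):
    """Pinhole projection from camera coordinates to image (pixel) coordinates."""
    x, y, z = point_c
    if z <= 0:
        return None  # point is behind the camera and has no valid projection
    return (fx * x / z + cx, fy * y / z + cy)

def projection_difference(map_points_w, edge_pixels, R_wc, t_wc, intrinsics):
    """Mean distance from each projected map point to its nearest edge pixel.

    The nearest-pixel mean is an illustrative choice of difference measure.
    """
    dists = []
    for p_w in map_points_w:
        uv = project(world_to_camera(p_w, R_wc, t_wc), *intrinsics)
        if uv is None:
            continue
        dists.append(min(math.dist(uv, e) for e in edge_pixels))
    return sum(dists) / len(dists) if dists else float("inf")

# Toy example: identity pose, two map points 10 units in front of the camera.
R_identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t_zero = (0.0, 0.0, 0.0)
intrinsics = (100.0, 100.0, 50.0, 50.0)  # fx, fy, cx, cy (made-up values)
map_points = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0)]
edge_pixels = [(50.0, 50.0), (60.0, 53.0)]
print(projection_difference(map_points, edge_pixels, R_identity, t_zero, intrinsics))  # 1.5
```

In a pose estimation loop, a value like this would be recomputed for each candidate pose, with smaller values indicating better agreement between the map and the edge feature map.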

Embodiment approach 2

[0160] Embodiment 2: The transformation matrix between the world coordinate system and the camera coordinate system is determined according to the value of the estimated pose. According to the transformation matrix and the projection relationship between the camera coordinate system and the image coordinate system, the points of the edge feature map are mapped to the world coordinate system to obtain the second mapped position of each point in the edge feature map. The projection difference between the second mapped position and the position of the target map point in the world coordinate system is then calculated.

[0161] To sum up, in this embodiment, according to the mutual conversion relationship between the world coordinate system, the camera coordinate system and the image coordinate system, the position information of the target map point can be mapped to the image coordinate system, or the point in the edge feature map can be mapped to the world coordinate system, spec...
