GPU scheduling method and system based on asynchronous data transmission

A GPU scheduling method based on asynchronous data transmission, in the field of GPU scheduling. It addresses problems such as high latency and low throughput, and its stated effects are smoothing delay variation, maintaining throughput while keeping latency low, and expanding delay time.


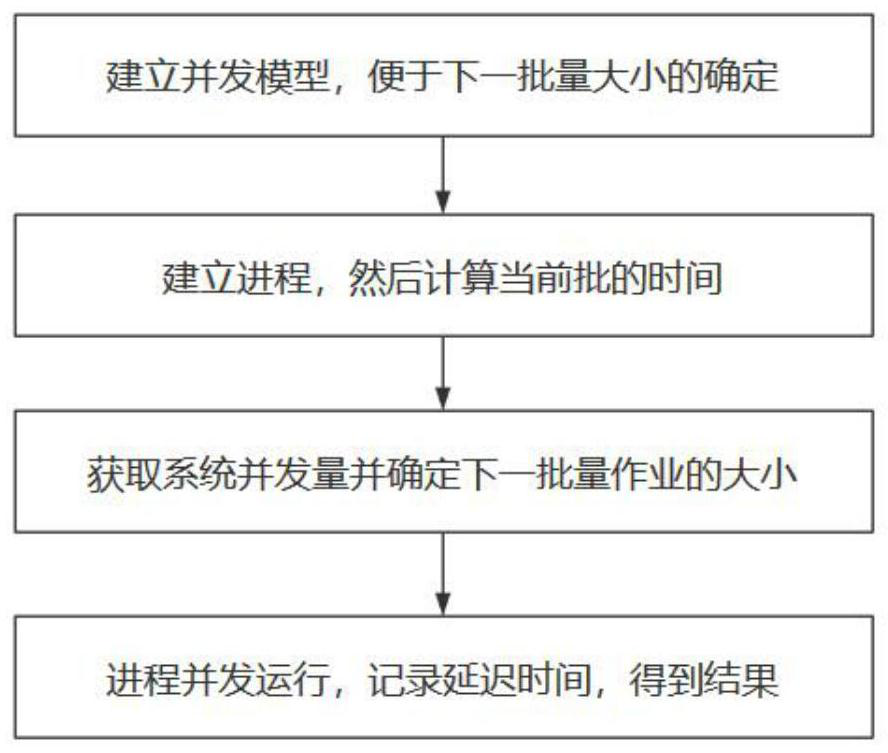

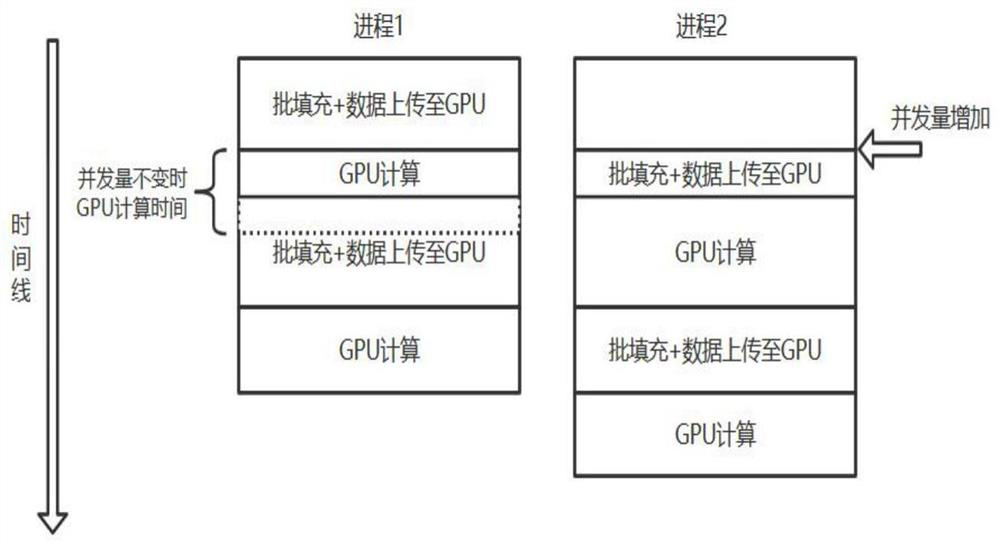

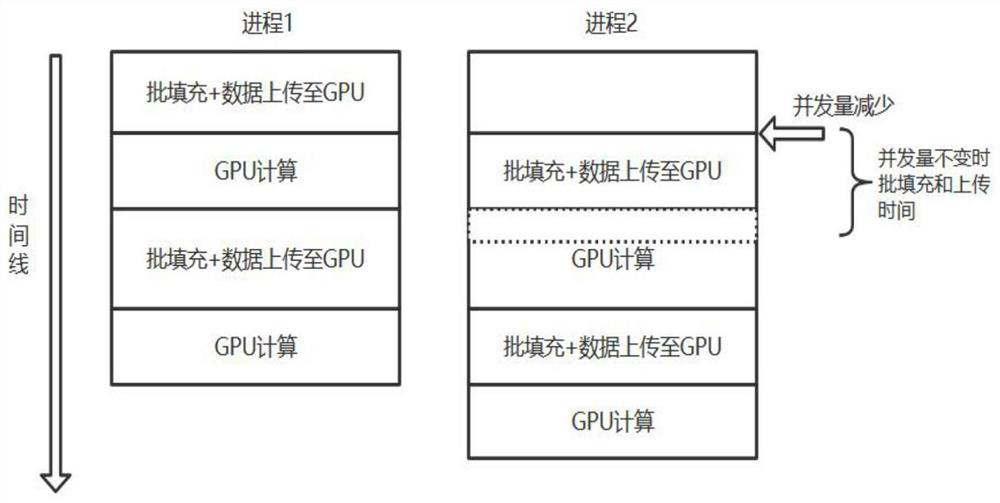

[0058] After investigating the characteristics of the GPU, the inventor found that the GPU supports asynchronous execution of data transmission and computation. Consequently, during deep learning inference, CPU-to-GPU data transmission and GPU computation can proceed asynchronously; that is, hiding data transmission behind computation greatly reduces the final delay time. Meanwhile, existing methods for deep learning inference strive to achieve high throughput and low latency simultaneously, but in practice the two are difficult to achieve together. Therefore, the present invention proposes a quantitative model with concurrency as the independent variable and system throughput and delay as the dependent variables. Based on this model, a scheduling algorithm that uses two processes to hide data transmission delay is implemented to improve system performance. The present invention can calculate and determine the next batch size according to the batch job infor...
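The idea of hiding transfer delay behind computation, and of trading throughput against latency when picking a batch size, can be illustrated with a small cost model. This is a minimal sketch under assumptions that are not in the source: one copy worker and one compute worker stand in for the "two processes", the device has enough buffers that copies never stall, per-batch costs are linear in batch size, and a fixed latency budget drives the batch-size choice. The names `Batch`, `linear_cost`, and `choose_batch_size` are hypothetical; this is not the patented model or algorithm, whose details are truncated above.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence


@dataclass
class Batch:
    transfer_ms: float  # CPU-to-GPU copy time for this batch
    compute_ms: float   # GPU computation time for this batch


def serial_makespan(batches: List[Batch]) -> float:
    """Copy, then compute, one batch at a time: nothing overlaps."""
    return sum(b.transfer_ms + b.compute_ms for b in batches)


def pipelined_finish_times(batches: List[Batch]) -> List[float]:
    """Two-stage pipeline: a copy worker and a compute worker run concurrently.

    Batch i's copy starts as soon as batch i-1's copy ends; its compute starts
    once both its own copy and batch i-1's compute have finished.
    Assumption: enough device buffers exist that the copy worker never waits.
    """
    copy_end = 0.0
    compute_end = 0.0
    finish: List[float] = []
    for b in batches:
        copy_end += b.transfer_ms
        compute_end = max(copy_end, compute_end) + b.compute_ms
        finish.append(compute_end)
    return finish


def linear_cost(fixed_ms: float, per_item_ms: float) -> Callable[[int], float]:
    """Hypothetical cost model: a fixed overhead plus a per-item cost."""
    return lambda batch_size: fixed_ms + per_item_ms * batch_size


def choose_batch_size(
    candidates: Sequence[int],
    transfer: Callable[[int], float],
    compute: Callable[[int], float],
    latency_budget_ms: float,
) -> Optional[int]:
    """Pick the candidate with the highest pipelined throughput whose
    single-batch latency (copy + compute, queueing ignored) fits the budget."""
    best: Optional[int] = None
    best_tput = 0.0
    for b in candidates:
        t, c = transfer(b), compute(b)
        if t + c > latency_budget_ms:
            continue  # too slow for the latency target
        # In steady state the slower stage bounds the pipeline rate.
        tput = b / max(t, c) * 1000.0  # items per second
        if tput > best_tput:
            best, best_tput = b, tput
    return best
```

For three identical batches with a 4 ms copy and a 6 ms compute, `serial_makespan` gives 30 ms while the last pipelined batch finishes at 22 ms, because each copy after the first overlaps the previous computation. With `linear_cost(1.0, 0.5)` for transfer, `linear_cost(2.0, 0.25)` for compute, and a 20 ms budget, `choose_batch_size([1, 2, 4, 8, 16, 32], ...)` returns 16: batch 32 would raise throughput further but its 27 ms latency exceeds the budget, which is the throughput/latency tension the paragraph describes.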
