Feature map up-sampling method, terminal and storage medium

A feature map up-sampling method, terminal and storage medium in the field of image processing, which addresses the lack of semantic information in features and improves performance.

Examples

Embodiment 1

[0034] The feature map up-sampling method provided by the present invention may be applied in a terminal, which performs up-sampling of a feature map using this method. The terminal may be, but is not limited to, a computer of any kind, a mobile phone, a tablet computer, a vehicle-mounted computer, or a portable wearable device.

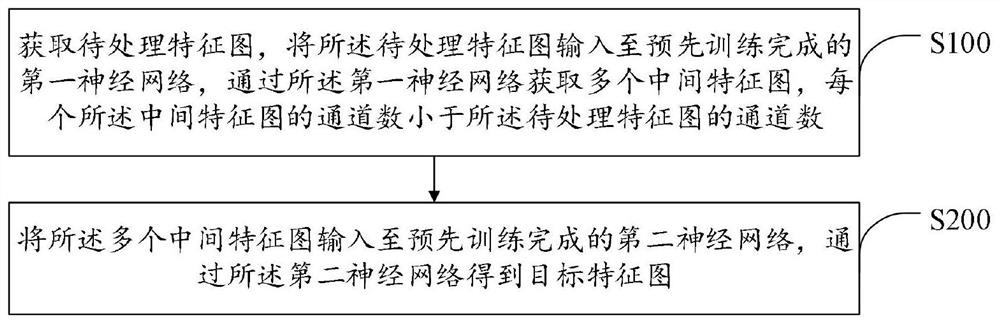

[0035] As shown in Figure 1, in an embodiment of the feature map up-sampling method, the steps are as follows:

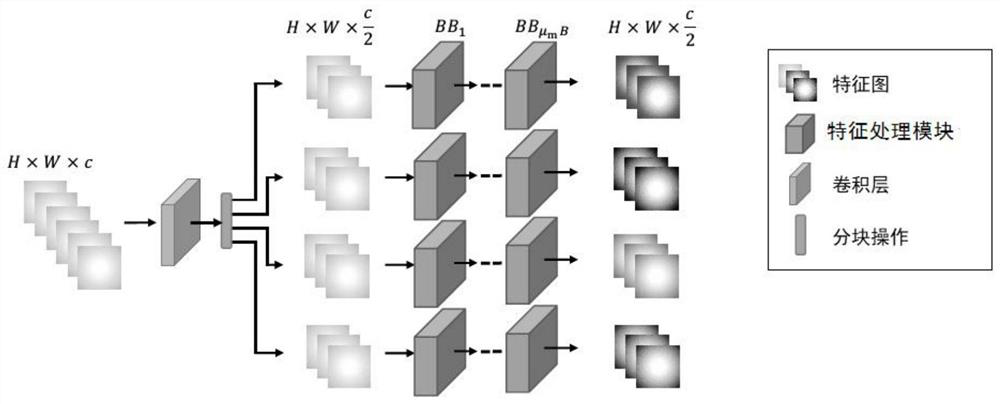

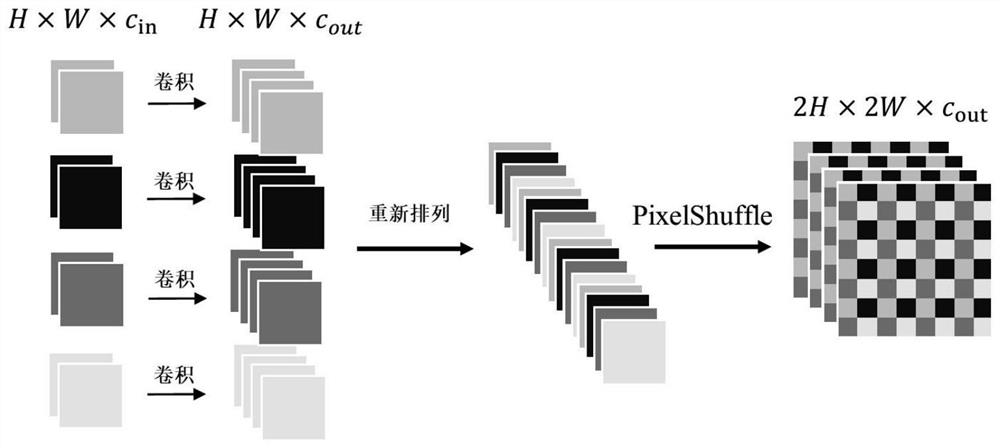

[0036] S100. Obtain a feature map to be processed, input the feature map to be processed into a pre-trained first neural network, and obtain multiple intermediate feature maps through the first neural network.
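Step S100 can be illustrated with a minimal sketch. This section does not describe the structure of the pre-trained first neural network, so the sketch assumes, purely for illustration, that it is a bank of 1x1 convolutions, each producing one intermediate feature map as a weighted sum of the input channels plus a bias. The function name `first_network` and the weight values are hypothetical placeholders, not trained parameters.

```python
from typing import List

# Feature maps are stored channel-first: fmap[c][y][x].
FeatureMap = List[List[List[float]]]
Plane = List[List[float]]  # one intermediate map, plane[y][x]


def first_network(fmap: FeatureMap,
                  weights: List[List[float]],
                  biases: List[float]) -> List[Plane]:
    """Hypothetical stand-in for the pre-trained first neural network.

    Each intermediate map k is a 1x1 convolution of the input:
    out_k[y][x] = biases[k] + sum_c weights[k][c] * fmap[c][y][x].
    A real first network would be learned and is likely deeper.
    """
    channels = len(fmap)
    height, width = len(fmap[0]), len(fmap[0][0])
    intermediates: List[Plane] = []
    for w_k, b_k in zip(weights, biases):
        if len(w_k) != channels:
            raise ValueError("each weight row needs one entry per input channel")
        plane = [[b_k + sum(w_k[c] * fmap[c][y][x] for c in range(channels))
                  for x in range(width)]
                 for y in range(height)]
        intermediates.append(plane)
    return intermediates


# Example: a 2-channel 2x2 feature map turned into 4 intermediate maps.
fmap = [
    [[1.0, 2.0], [3.0, 4.0]],  # channel 0
    [[0.5, 0.5], [0.5, 0.5]],  # channel 1
]
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -1.0]]  # placeholders
biases = [0.0, 0.0, 0.0, 0.0]
maps = first_network(fmap, weights, biases)
print(len(maps))  # 4
print(maps[2])    # [[1.5, 2.5], [3.5, 4.5]]
```

The 1x1 convolution is chosen only because it is the simplest operation that maps a multi-channel input to several same-sized outputs; any learned network with that input/output shape would fit the description of S100 equally well.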

[0037] Specifically, the feature map up-sampling method provided in this embodiment can be implemented, as part of an image processing task, by the up-sampling module of the neural network that performs that task. The image processing task includes bu...
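Running the method as an up-sampling module inside a larger image-processing network can be sketched as follows. The remaining steps of the method are not reproduced in this section, so the sketch assumes one common way of turning r squared intermediate maps into a single map r times larger: sub-pixel (pixel-shuffle) rearrangement. This is a swapped-in technique for illustration, not necessarily the combination the invention uses. `UpsampleModule`, `run_network` and `scaled_copies` are hypothetical names.

```python
from typing import Callable, List

Plane = List[List[float]]  # single-channel map, plane[y][x]


def pixel_shuffle(maps: List[Plane], r: int) -> Plane:
    """Interleave r*r maps of size HxW into one map of size (H*r)x(W*r).

    Output pixel (y*r + i, x*r + j) is taken from map i*r + j at (y, x).
    """
    if len(maps) != r * r:
        raise ValueError(f"expected {r * r} maps, got {len(maps)}")
    height, width = len(maps[0]), len(maps[0][0])
    out = [[0.0] * (width * r) for _ in range(height * r)]
    for y in range(height):
        for x in range(width):
            for i in range(r):
                for j in range(r):
                    out[y * r + i][x * r + j] = maps[i * r + j][y][x]
    return out


class UpsampleModule:
    """Hypothetical up-sampling stage that plugs into a larger network.

    make_intermediates stands in for the first neural network; the pixel
    shuffle is an assumed way of combining its outputs.
    """

    def __init__(self, make_intermediates: Callable[[Plane], List[Plane]], r: int = 2):
        self.make_intermediates = make_intermediates
        self.r = r

    def __call__(self, plane: Plane) -> Plane:
        return pixel_shuffle(self.make_intermediates(plane), self.r)


def run_network(stages: List[Callable[[Plane], Plane]], plane: Plane) -> Plane:
    """Apply each stage of the (hypothetical) image-processing network in order."""
    for stage in stages:
        plane = stage(plane)
    return plane


def scaled_copies(plane: Plane) -> List[Plane]:
    """Placeholder "first network": four scaled copies of the input."""
    return [[[s * v for v in row] for row in plane] for s in (1.0, 2.0, 3.0, 4.0)]


def backbone(plane: Plane) -> Plane:
    """Placeholder for the layers that precede the up-sampling module."""
    return plane


upsample = UpsampleModule(scaled_copies, r=2)
result = run_network([backbone, upsample], [[1.0, 10.0]])
print(result)  # [[1.0, 2.0, 10.0, 20.0], [3.0, 4.0, 30.0, 40.0]]
```

Packaging the step as a callable stage means, under these assumptions, that it can stand in for a fixed interpolation layer without changing the stages around it.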

Embodiment 2

[0076] Based on the above embodiments, the present invention also provides a corresponding terminal. As shown in Figure 4, the terminal includes a processor 10 and a memory 20. It should be understood that Figure 4 shows only some components of the terminal; implementing all of the illustrated components is not required, and more or fewer components may be implemented instead.

[0077] In some embodiments, the memory 20 may be an internal storage unit of the terminal, such as a hard disk or internal memory of the terminal. In other embodiments, the memory 20 may be an external storage device of the terminal, such as a plug-in hard disk, a Smart Media Card (SMC), a Secure Digital (SD) card, or a flash card provided on the terminal. Further, the memory 20 may include both an internal storage unit and an external storage device of the terminal. The memory 20 is used to store application sof...

Embodiment 3

[0080] The present invention also provides a storage medium storing one or more programs, which can be executed by one or more processors to implement the steps of the feature map up-sampling method described above.
