Method for improving the realism of marine scene simulation images

A scene simulation and realism technology, applied in neural network learning methods, image enhancement, image analysis, and related fields, that addresses the problem of sample scarcity.

Embodiment

[0066] 1. Prepare the datasets

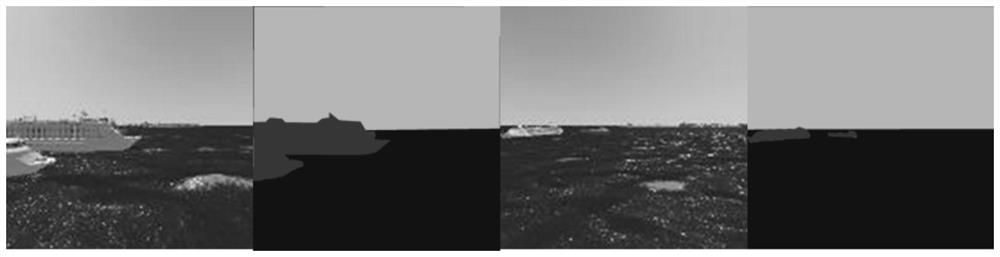

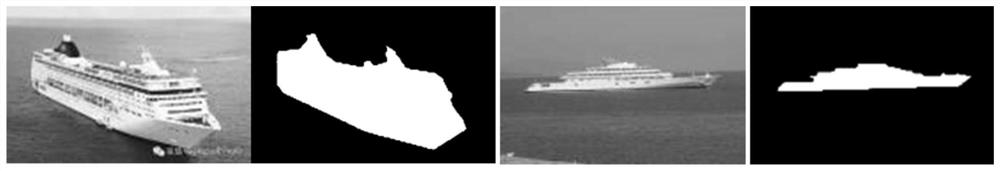

[0067] This method requires three datasets: (1) Train_CG, a dataset of simulated sea scene images with labels that divide each image into three regions: sky, sea surface, and foreground target; (2) Train_real, a dataset of real sea scene photos with labels that divide each photo into foreground and background; and (3) Train_sea, a dataset of sea surface photos that contain no targets.
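The label requirements above can be made concrete as per-dataset label schemes. The sketch below is a minimal illustration, not the patent's data format: the integer codes in `CGLabel` and `RealLabel`, the `ALLOWED` table, and the helper `check_mask` are hypothetical names chosen here, and masks are assumed to be plain nested lists of per-pixel label values.

```python
from enum import IntEnum


class CGLabel(IntEnum):
    """Hypothetical label codes for Train_CG (sky / sea surface / foreground target)."""
    SKY = 0
    SEA = 1
    TARGET = 2


class RealLabel(IntEnum):
    """Hypothetical label codes for Train_real (background / foreground)."""
    BACKGROUND = 0
    FOREGROUND = 1


# Allowed label values per labelled dataset; Train_sea carries no labels in the source.
ALLOWED = {
    "Train_CG": {int(v) for v in CGLabel},
    "Train_real": {int(v) for v in RealLabel},
}


def check_mask(dataset: str, mask: list[list[int]]) -> bool:
    """Return True if every pixel of `mask` uses a label defined for `dataset`."""
    allowed = ALLOWED[dataset]
    return all(value in allowed for row in mask for value in row)


print(check_mask("Train_CG", [[0, 0], [1, 2]]))  # True
print(check_mask("Train_real", [[0, 2]]))         # False: 2 is not a Train_real label
```

Checking label masks up front is one way to catch a Train_real mask that accidentally uses the three-region Train_CG scheme.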

[0068] 2. Randomly select a sample image from Train_sea and segment it with a region growing algorithm.
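The source does not specify the seeds or the similarity criterion used for region growing. As a minimal sketch, assuming a grayscale image stored as a nested list, 4-connectivity, a fixed intensity tolerance relative to the seed pixel, and that the sky touches the top row and the sea the bottom row of a Train_sea photo, the step could look like this (`region_grow` and `segment_sky_sea` are hypothetical helpers):

```python
from collections import deque


def region_grow(img, seed, threshold):
    """Grow a region from `seed` over 4-connected pixels whose intensity differs
    from the seed intensity by at most `threshold`. Returns a 0/1 mask."""
    h, w = len(img), len(img[0])
    sr, sc = seed
    seed_val = img[sr][sc]
    mask = [[0] * w for _ in range(h)]
    mask[sr][sc] = 1
    queue = deque([seed])
    while queue:  # breadth-first expansion of the region
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w and not mask[nr][nc]
                    and abs(img[nr][nc] - seed_val) <= threshold):
                mask[nr][nc] = 1
                queue.append((nr, nc))
    return mask


def segment_sky_sea(img, threshold=20):
    """Assumed seeding: sky seed at the top-centre pixel, sea seed at the
    bottom-centre pixel. Returns 0 = sky, 1 = sea, -1 = unassigned
    (sky wins where both regions reach the same pixel)."""
    h, w = len(img), len(img[0])
    sky = region_grow(img, (0, w // 2), threshold)
    sea = region_grow(img, (h - 1, w // 2), threshold)
    return [[0 if sky[r][c] else 1 if sea[r][c] else -1 for c in range(w)]
            for r in range(h)]


img = [
    [200, 200, 200],
    [200, 205, 200],
    [50, 55, 50],
    [50, 50, 50],
]
print(segment_sky_sea(img))  # [[0, 0, 0], [0, 0, 0], [1, 1, 1], [1, 1, 1]]
```

Growing two regions from opposite image edges is only one way to obtain a sky/sea split; textured water or hazy horizons would likely need adaptive tolerances or several seeds.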

[0069] 3. Detect the sea-sky line (the horizon) using the semantic labels of Train_CG and the segmentation result of Train_sea.

[0070] Randomly select one image each from Train_CG and Train_sea, repeatedly sample the contact points between the sea surface and the sky in the two segmentation maps to obtain a set of sample points, and remove the...
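A minimal sketch of sampling sky/sea contact points from a label map, applicable to both the Train_CG labels and the Train_sea segmentation. The label values `SKY = 0` and `SEA = 1` and the helper names are assumptions. The source text is truncated before it says how the sampled points become a sea-sky line, so the least-squares straight-line fit in `fit_line` is purely an illustrative assumption, and no point-removal step is attempted.

```python
import random

SKY, SEA = 0, 1  # hypothetical label values


def sample_boundary_points(labels, n_samples, rng):
    """Pick up to `n_samples` random columns; in each, return the first place
    where a sky pixel sits directly above a sea pixel, as (row, col).
    Columns with no such transition are skipped."""
    h, w = len(labels), len(labels[0])
    points = []
    for c in rng.sample(range(w), min(n_samples, w)):
        for r in range(h - 1):
            if labels[r][c] == SKY and labels[r + 1][c] == SEA:
                points.append((r + 0.5, c))  # boundary lies between rows r and r+1
                break
    return points


def fit_line(points):
    """Assumed step: least-squares fit of row = a * col + b through (row, col) points."""
    if len({c for _, c in points}) < 2:
        raise ValueError("need points from at least two distinct columns")
    n = len(points)
    xs = [c for _, c in points]
    ys = [r for r, _ in points]
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, my - a * mx


# Sloped toy horizon: in column c, rows 0..c are sky and the rest is sea.
labels = [[SKY if r <= c else SEA for c in range(4)] for r in range(5)]
points = sample_boundary_points(labels, 4, random.Random(0))
print(fit_line(points))  # (1.0, 0.5)
```

Running the same sampler on both maps yields two point sets whose fitted lines could then be compared; how the patent actually uses them is not recoverable from the truncated text.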
