A parallelization-based brain-like simulation compilation acceleration method

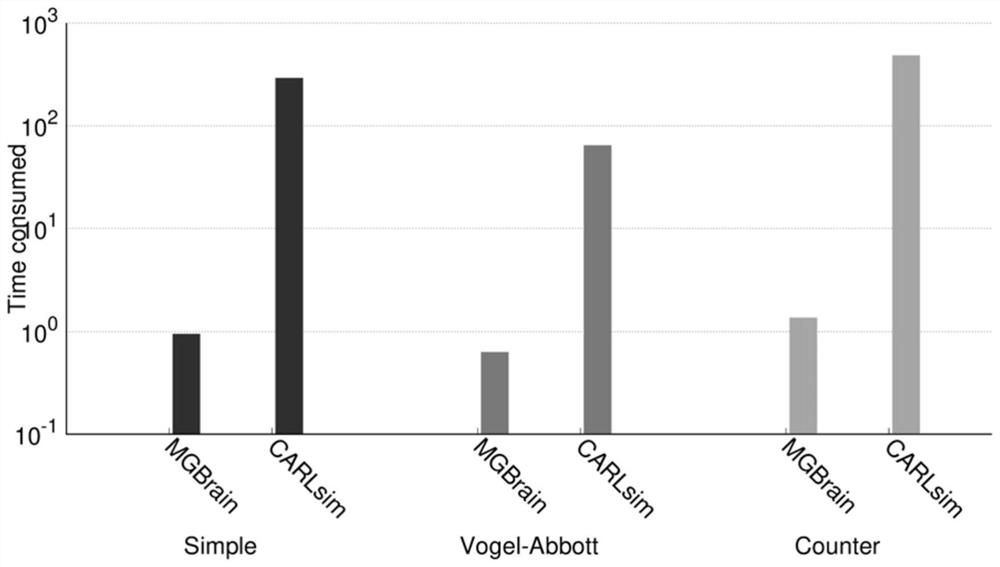

A technology based on neurons and neuron groups, applied in the field of neural network simulation. It addresses long compilation times and the resulting poor user experience, achieving faster, more efficient compilation and a better user experience.

Examples

Embodiment 1

[0034] A method for accelerating brain-inspired simulation compilation based on parallelization, comprising the following steps:

[0035] S1. When constructing a neural network, create several groups, each containing millions of neurons;

[0036] S2. Construct neuron arrays in parallel according to the neuron groups;

[0037] S3. Construct synapse arrays and the mapping relationship between neurons and synapse arrays in parallel according to the connections between groups.
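Steps S1 to S3 can be illustrated with a minimal Python sketch. It is not the patented implementation: the `Group` class, the function names, the all-to-all connection rule, and the presynaptic-neuron-to-synapse-index mapping are all assumptions made for illustration, and the group sizes are tiny rather than millions. The idea shown is that a prefix sum over group sizes gives each group a disjoint index range, so each group (and each connection between groups) can be built as an independent task.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from itertools import accumulate


@dataclass(frozen=True)
class Group:
    """Hypothetical group: neurons sharing one model and one attribute set."""
    name: str
    model: str
    size: int


def build_group_neurons(group, offset):
    # A group's slice of the global neuron array depends only on the group
    # and its starting offset, so groups can be built independently.
    return [(offset + i, group.model) for i in range(group.size)]


def build_neuron_array(groups, pool):
    # S2: an exclusive prefix sum assigns each group a disjoint index range.
    offsets = [0, *accumulate(g.size for g in groups)][:-1]
    chunks = pool.map(build_group_neurons, groups, offsets)
    neurons = [n for chunk in chunks for n in chunk]
    return neurons, dict(zip((g.name for g in groups), offsets))


def build_connection_synapses(conn, by_name, offsets):
    # Assumed rule: every source neuron connects to every target neuron.
    src, dst = conn
    s_off, d_off = offsets[src], offsets[dst]
    return [(s_off + i, d_off + j)
            for i in range(by_name[src].size)
            for j in range(by_name[dst].size)]


def build_synapse_array(groups, offsets, connections, pool):
    # S3: one task per group-to-group connection.
    by_name = {g.name: g for g in groups}
    chunks = pool.map(
        lambda c: build_connection_synapses(c, by_name, offsets), connections)
    synapses = [s for chunk in chunks for s in chunk]
    # Assumed mapping: presynaptic neuron id -> indices into the synapse array.
    # Built sequentially here to keep the sketch short.
    outgoing = {}
    for idx, (pre, _post) in enumerate(synapses):
        outgoing.setdefault(pre, []).append(idx)
    return synapses, outgoing


# S1: create groups (tiny sizes for illustration) and connections between them.
groups = [Group("exc", "LIF", 4), Group("inh", "LIF", 2)]
connections = [("exc", "inh"), ("inh", "exc")]

with ThreadPoolExecutor() as pool:
    neurons, offsets = build_neuron_array(groups, pool)
    synapses, outgoing = build_synapse_array(groups, offsets, connections, pool)
```

In CPython, threads running pure-Python code do not execute in parallel because of the global interpreter lock, so this sketch shows the task decomposition rather than a real speedup; a process pool or a native-threaded implementation would be needed for that.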

[0038] At present, many mainstream brain-inspired simulation frameworks let users input neuron data in the form of neuron groups and establish synaptic connections between neuron groups in a user-specified way. A group is a set of neuron nodes with the same model and the same attributes. When constructing a neural network, several groups are created, and each group contains millions of neurons. In step S2, because all Neuro...
