Real-time video face key point detection method based on deep learning

A face key point detection technology for real-time video, applied in neural learning methods, instruments, biological neural network models, etc., that addresses problems such as the inability to use global inter-frame information, poor real-time performance, and poor detection accuracy for large face poses.


Embodiment Construction

[0027] The present invention will be further described below in conjunction with the accompanying drawings, but the protection scope of the present invention is not limited thereto.

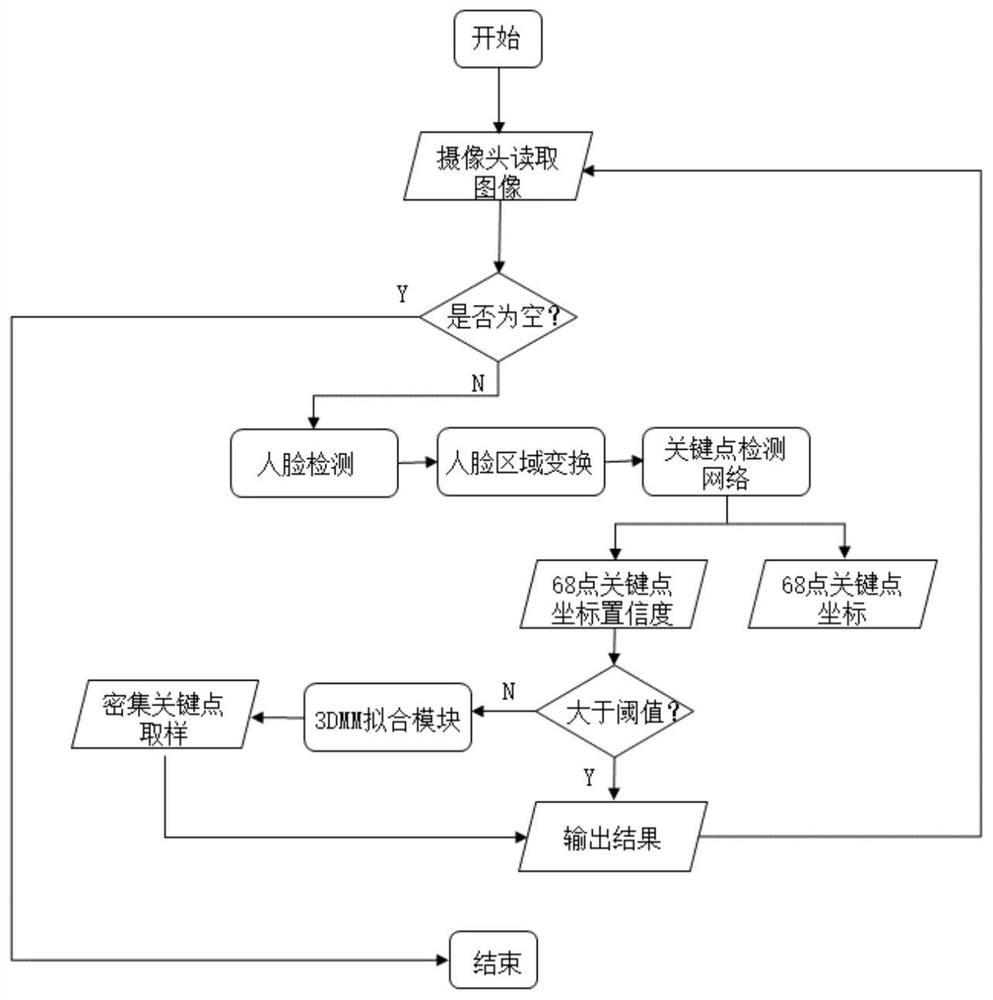

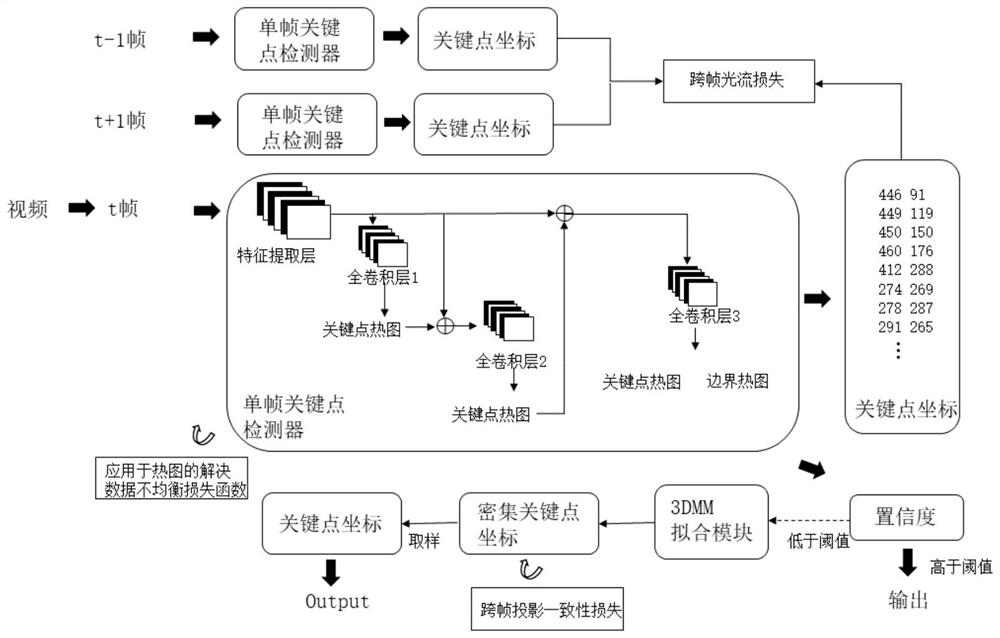

[0028] The deep-learning-based method of the present invention for detecting face key points in real-time video mainly includes the following steps: constructing a convolutional neural network for key point detection, single-frame model training, cross-frame smoothing training, and frame-by-frame detection.

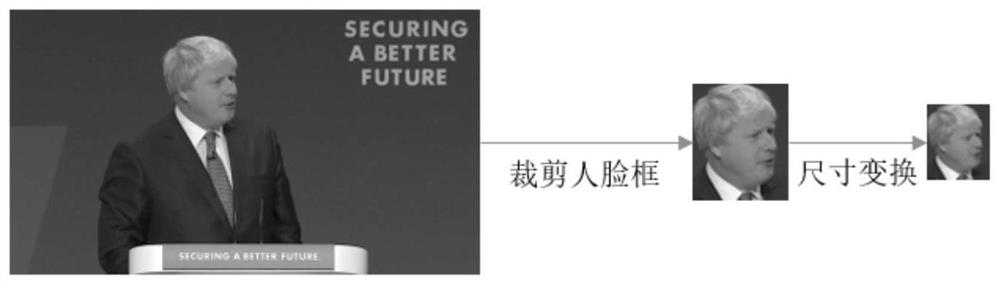

[0029] In constructing the key point detection convolutional network, the SAN network is taken as the basis: ordinary convolutions are replaced with depthwise separable convolutions to make the network structure lightweight, a boundary heat map subtask is added to improve the detection accuracy of the model, and a loss function is used to address the imbalanced distribution of face pose samples;
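To illustrate why replacing ordinary convolutions with depthwise separable convolutions makes a network lighter, the following minimal sketch compares the weight counts of the two layer types. The layer sizes (`c_in`, `c_out`, `k`) and the function names are hypothetical, chosen only for illustration; they are not taken from the SAN network or from this method.

```python
def standard_conv_params(c_in, c_out, k):
    """Weight count of an ordinary k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weight count of a depthwise k x k convolution followed by a
    1 x 1 pointwise convolution (bias omitted)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes, assumed only for this example.
c_in, c_out, k = 64, 128, 3

standard = standard_conv_params(c_in, c_out, k)
separable = depthwise_separable_params(c_in, c_out, k)
ratio = separable / standard  # equals 1/c_out + 1/k**2

print(f"ordinary: {standard} weights")
print(f"depthwise separable: {separable} weights")
print(f"ratio: {ratio:.3f}")
```

For these assumed sizes, the ordinary convolution has 73,728 weights and the depthwise separable version has 8,768, roughly 12% of the original. The actual saving in any given network depends on its channel counts and kernel sizes.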

[0030] Single-frame model training is performed, including the following steps:

[0031] Read the training samples in...
