Design method of lightweight convolution accelerator based on FPGA

A convolution accelerator technology, applied in neural network learning methods, neural network architectures, biological neural network models, etc., that addresses problems such as high power consumption and large size and improves computing power.



Embodiment Construction

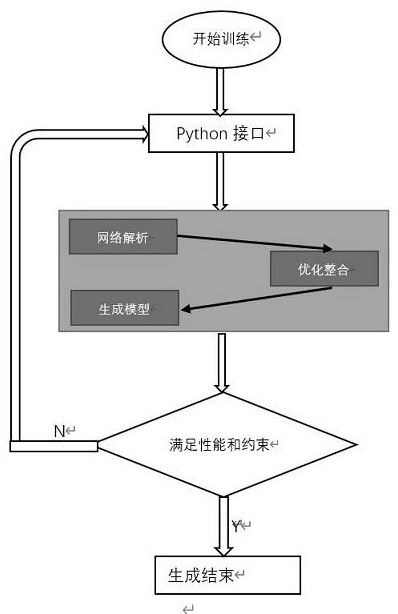

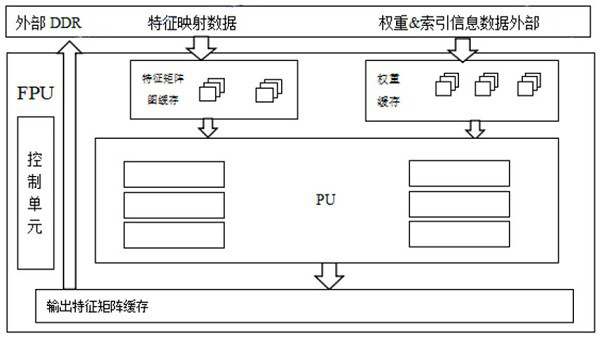

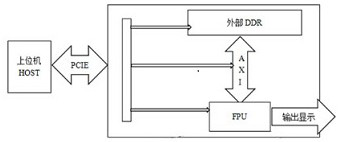

[0018] The present invention provides an FPGA-based convolution acceleration method. First, pre-training is performed on the existing data. If the network parameters exceed the storage limit, they are sparsified through parameter compression, and the compressed data are encoded so that they can be retrieved by index. Each type of convolutional layer is given its own dedicated design and optimization.
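The text does not say which compression or index encoding is used. As a minimal sketch, assuming magnitude pruning followed by a compressed sparse row (CSR) encoding, the survivors of a 2-D weight matrix can be stored as `(values, col_idx, row_ptr)` and fetched by index. The function names and the `keep_ratio` parameter are hypothetical.

```python
from typing import List, Tuple


def prune_and_encode(weights: List[List[float]], keep_ratio: float
                     ) -> Tuple[List[float], List[int], List[int]]:
    """Hypothetical: keep roughly the largest-magnitude `keep_ratio` fraction
    of weights and encode them in CSR form (values, column indices, row pointers)."""
    mags = sorted((abs(v) for row in weights for v in row), reverse=True)
    k = max(1, round(keep_ratio * len(mags)))
    threshold = mags[k - 1]  # k-th largest magnitude; ties may keep a few extra
    values: List[float] = []
    col_idx: List[int] = []
    row_ptr: List[int] = [0]
    for row in weights:
        for c, v in enumerate(row):
            if v != 0 and abs(v) >= threshold:  # exact zeros are never stored
                values.append(v)
                col_idx.append(c)
        row_ptr.append(len(values))  # end of this row's entries in `values`
    return values, col_idx, row_ptr


def decode(values: List[float], col_idx: List[int], row_ptr: List[int],
           n_cols: int) -> List[List[float]]:
    """Rebuild the dense (pruned) matrix by following the index encoding."""
    dense = []
    for r in range(len(row_ptr) - 1):
        row = [0.0] * n_cols
        for i in range(row_ptr[r], row_ptr[r + 1]):
            row[col_idx[i]] = values[i]
        dense.append(row)
    return dense


w = [[0.9, -0.05, 0.0], [0.02, -0.7, 0.4]]
vals, cols, ptr = prune_and_encode(w, keep_ratio=0.5)
print(vals, cols, ptr)  # [0.9, -0.7, 0.4] [0, 1, 2] [0, 1, 3]
```

CSR is chosen here only because its row pointers let a hardware reader stream one output row's weights contiguously; other sparse formats would serve the same purpose.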

[0019] This makes data transfer and computation more convenient while the neural network is running. The specific implementation steps are as follows:

[0020] Step 01. Model initialization: a general-purpose processor parses the neural network configuration information and weight data and writes them into the cache RAM. After the model is initialized, normalization is performed so that all weight values follow a normal distribution within the range 0 to 1;
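A host-side sketch of Step 01 follows. The configuration format (`name in_ch out_ch kernel` per line), the flat list standing in for the cache RAM, and the use of global min-max scaling are all assumptions; the text only states that the weights end up in the range 0 to 1. Min-max scaling is an affine map, so it keeps the shape of an approximately normal weight distribution while moving it into [0, 1].

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class LayerConfig:
    name: str
    in_ch: int
    out_ch: int
    kernel: int

    @property
    def n_weights(self) -> int:
        return self.out_ch * self.in_ch * self.kernel * self.kernel


def parse_config(text: str) -> List[LayerConfig]:
    """Parse a hypothetical 'name in_ch out_ch kernel' line format."""
    layers = []
    for line in text.strip().splitlines():
        name, in_ch, out_ch, k = line.split()
        layers.append(LayerConfig(name, int(in_ch), int(out_ch), int(k)))
    return layers


def min_max_normalize(ws: List[float]) -> List[float]:
    """Affinely rescale all weights into [0, 1] (assumed normalization)."""
    lo, hi = min(ws), max(ws)
    if hi == lo:
        return [0.0 for _ in ws]
    return [(w - lo) / (hi - lo) for w in ws]


def load_into_cache(layers: List[LayerConfig], weights: List[float]
                    ) -> Tuple[List[float], Dict[str, int]]:
    """Write all weights into one flat buffer (stand-in for the cache RAM),
    record each layer's start offset, then normalize the buffer."""
    expected = sum(layer.n_weights for layer in layers)
    if len(weights) != expected:
        raise ValueError(f"expected {expected} weights, got {len(weights)}")
    offsets, pos = {}, 0
    for layer in layers:
        offsets[layer.name] = pos
        pos += layer.n_weights
    return min_max_normalize(list(weights)), offsets


layers = parse_config("conv1 1 2 1\nconv2 2 1 1")
cache, offsets = load_into_cache(layers, [-1.0, 0.0, 1.0, 3.0])
print(cache, offsets)  # [0.0, 0.25, 0.5, 1.0] {'conv1': 0, 'conv2': 2}
```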

[0021] Step 02. For the external storage access restriction arising in step 01, based on space exploration, de...
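Step 02 is cut off in this excerpt, so the following sketch shows only one common form of space exploration for limited external memory access; it is an assumption, not the patented procedure. It enumerates output/input channel tile sizes `(tm, tn)` for a stride-1 convolution layer, discards tilings whose on-chip buffers exceed a hypothetical `buffer_words` budget, and keeps the one with the least off-chip traffic, breaking ties toward more parallelism (`tm * tn`).

```python
import math
from typing import Optional, Tuple


def explore_tiles(M: int, N: int, R: int, C: int, K: int,
                  buffer_words: int) -> Optional[Tuple[int, int, int]]:
    """Hypothetical tiling search for one conv layer.

    M/N: output/input channels, R x C: output size, K: kernel size (stride 1).
    Returns (tm, tn, off_chip_words) or None if nothing fits the buffer.
    """
    in_spatial = (R + K - 1) * (C + K - 1)  # input window for a full R x C output
    best, best_key = None, None
    for tm in range(1, M + 1):
        for tn in range(1, N + 1):
            # Input tile + weight tile + output tile must all fit on chip.
            buf = tn * in_spatial + tm * tn * K * K + tm * R * C
            if buf > buffer_words:
                continue
            # Loop order: output-channel tiles outer, input-channel tiles inner.
            # Inputs are re-read once per output tile; weights and outputs move once.
            traffic = (math.ceil(M / tm) * N * in_spatial
                       + M * N * K * K + M * R * C)
            key = (traffic, -(tm * tn))  # less traffic first, then more parallelism
            if best_key is None or key < best_key:
                best_key, best = key, (tm, tn, traffic)
    return best


print(explore_tiles(M=4, N=2, R=2, C=2, K=1, buffer_words=20))  # (2, 2, 40)
```

The traffic model ignores partial-tile edge effects and burst granularity; a real exploration would also weigh DSP usage and achievable clock frequency.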
