Neural network operation device and method

This neural network computing device and method, applied in the information field, addresses problems such as insufficient memory access bandwidth, constrained computing resources, and high power consumption. Its stated effects are ensuring the correctness and efficiency of operations, remedying insufficient computing performance, and reducing data volume.


Example 1

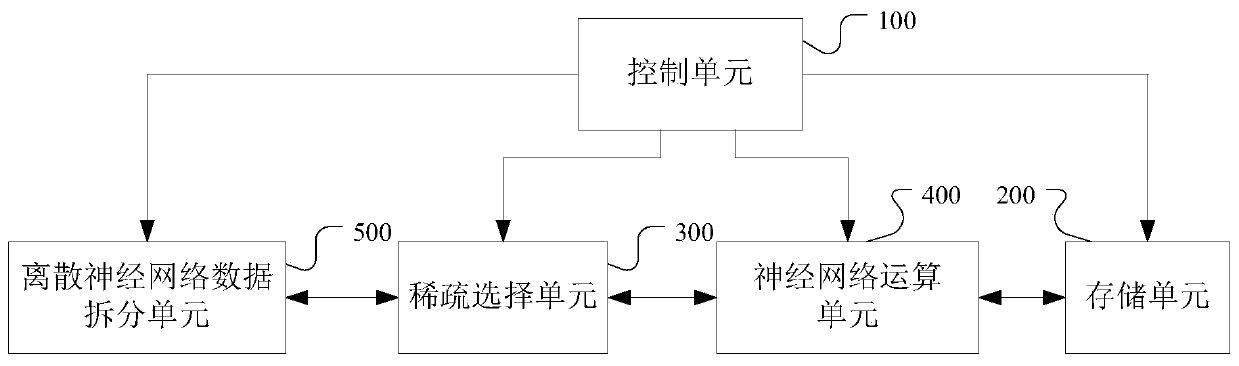

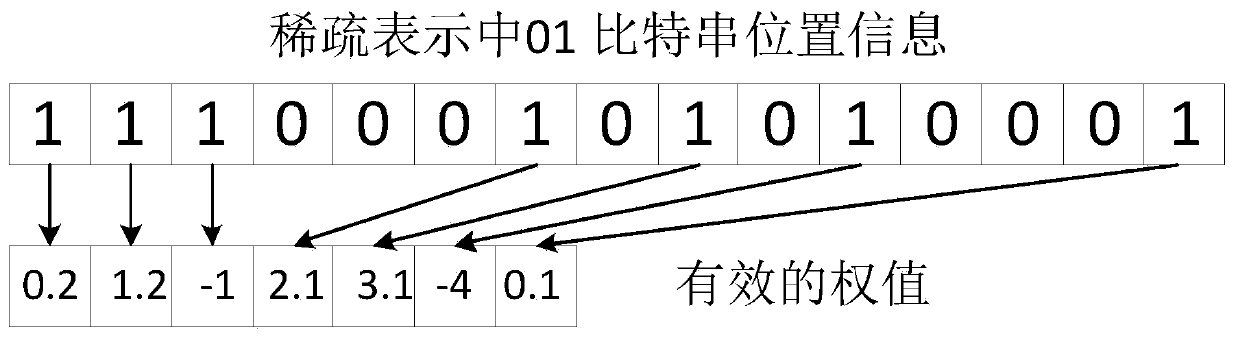

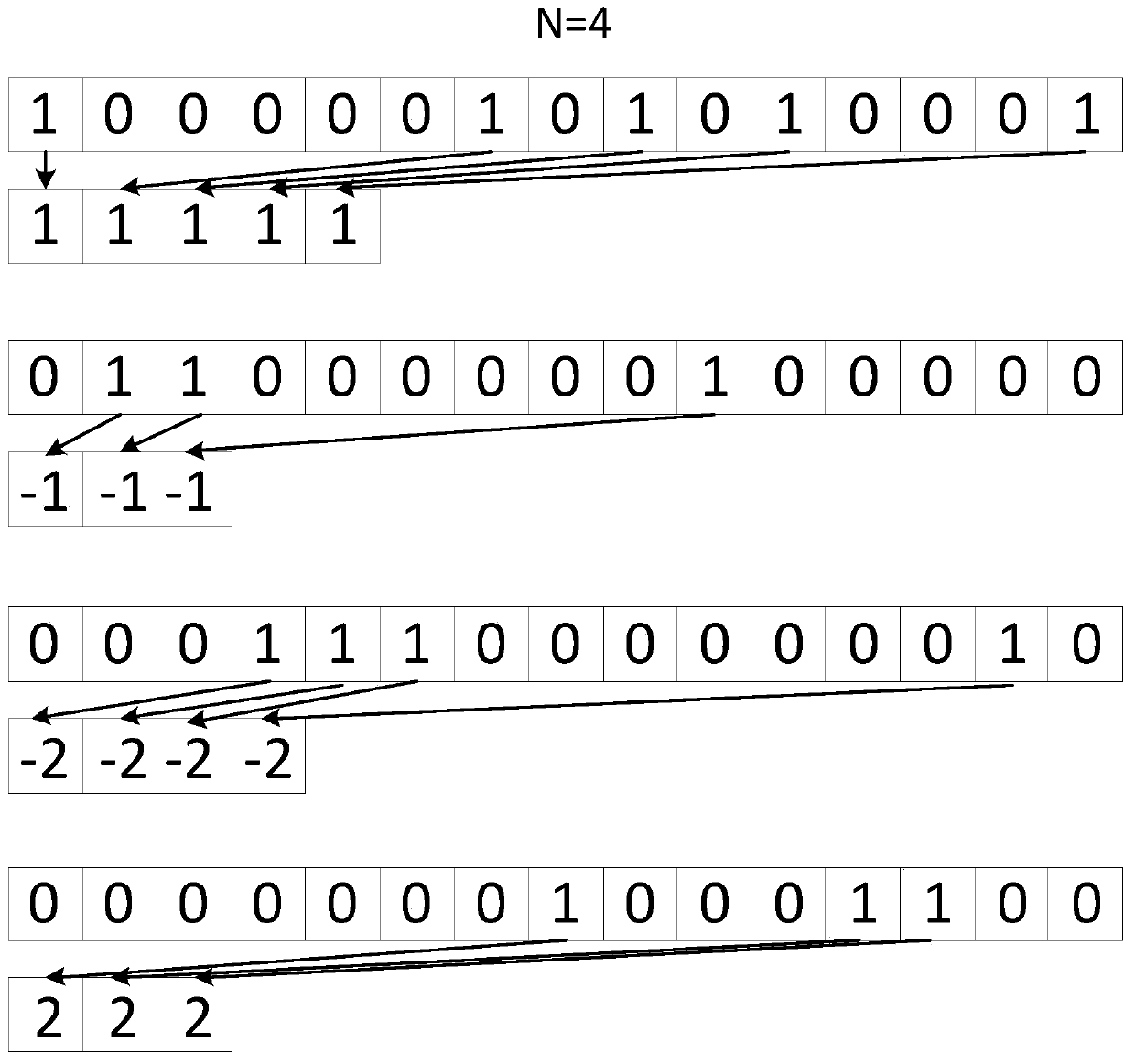

[0044] In a first exemplary embodiment of the present disclosure, a neural network computing device is provided. Referring to Figure 1, the neural network computing device in this embodiment includes a control unit 100, a storage unit 200, a sparse selection unit 300, and a neural network operation unit 400. The storage unit 200 stores neural network data. The control unit 100 generates microinstructions corresponding respectively to the sparse selection unit and the neural network operation unit, and sends each microinstruction to the corresponding unit. The sparse selection unit 300, according to the microinstruction issued to it by the control unit and the position information represented by the sparse data therein, selects from the neural network data stored in the storage unit 200 the neural network data corresponding to the effective weights to participate in the operation...
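The selection step described above can be illustrated with a minimal sketch. It assumes the sparse data is a bitmask in which 1 marks the position of an effective weight; the source does not specify the encoding. The function names `sparse_select` and `sparse_dot` are hypothetical and do not come from the disclosure.

```python
from typing import List, Sequence


def sparse_select(neurons: Sequence[float], mask: Sequence[int]) -> List[float]:
    """Keep only the input neurons whose position is marked as effective.

    Assumption: `mask` is a per-position bitmask (1 = effective weight).
    """
    if len(neurons) != len(mask):
        raise ValueError("neurons and mask must have the same length")
    return [n for n, m in zip(neurons, mask) if m]


def sparse_dot(neurons: Sequence[float],
               effective_weights: Sequence[float],
               mask: Sequence[int]) -> float:
    """Multiply-accumulate over the selected neurons only.

    Assumption: `effective_weights` stores just the effective weights,
    in the same order as the 1-bits of `mask`.
    """
    selected = sparse_select(neurons, mask)
    if len(selected) != len(effective_weights):
        raise ValueError("mask does not match the number of effective weights")
    return sum(n * w for n, w in zip(selected, effective_weights))


if __name__ == "__main__":
    neurons = [1.0, 2.0, 3.0, 4.0]
    mask = [1, 0, 0, 1]            # positions 0 and 3 carry effective weights
    weights = [0.5, 2.0]           # compact storage: effective weights only
    print(sparse_select(neurons, mask))
    print(sparse_dot(neurons, weights, mask))
```

In this sketch only the selected neurons reach the multiply-accumulate step, which is how the selection reduces the data volume passed to the operation unit.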

Example 3

[0069] In a third exemplary embodiment of the present disclosure, a neural network computing device is provided. The neural network computing device in this embodiment differs from that of the second embodiment in that a dependency processing function is added to the control unit 100.

[0070] Referring to Figure 6, according to an embodiment of the present disclosure, the control unit 100 includes: an instruction cache module 110, configured to store the neural network instructions to be executed, each including address information of the neural network data to be processed; an instruction fetch module 120, used to obtain neural network instructions from the instruction cache module; a decoding module 130, used to decode the neural network instructions to obtain microinstructions corresponding respectively to the storage unit 200, the sparse selection unit 300 and the neural network operation unit 400, Include the address inf...

Example 5

[0083] Based on the neural network computing device of the third embodiment, the present disclosure further provides a method for processing sparse neural network data, which performs sparse neural network computing according to computing instructions. As shown in Figure 8, the method for processing sparse neural network data in this embodiment includes:

[0084] Step S801, the instruction fetching module fetches the neural network instruction from the instruction cache module, and sends the neural network instruction to the decoding module;

[0085] Step S802, the decoding module decodes the neural network instruction to obtain microinstructions respectively corresponding to the storage unit, the sparse selection unit and the neural network operation unit, and sends each microinstruction to the instruction queue;

[0086] Step S803, obtaining the neural network operation code and the neural network operation operand of the microinstruction from the scalar register file, ...
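Steps S801 and S802 above can be sketched as a small fetch-and-decode front end. This is an illustration under assumptions, not the disclosed implementation: the instruction fields (`opcode`, `address`), the unit names, and all class and function names are hypothetical. Step S803 and later steps are truncated in the source and are not modeled here.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass(frozen=True)
class NeuralNetworkInstruction:
    opcode: str    # hypothetical field: operation to perform
    address: int   # hypothetical field: address of the data to process


@dataclass(frozen=True)
class MicroInstruction:
    target_unit: str
    opcode: str
    address: int


# The three units that each receive a microinstruction after decoding.
TARGET_UNITS = ("storage_unit", "sparse_selection_unit", "operation_unit")


def fetch(instruction_cache: Deque[NeuralNetworkInstruction]) -> NeuralNetworkInstruction:
    """S801: take the next instruction from the instruction cache."""
    return instruction_cache.popleft()


def decode(instr: NeuralNetworkInstruction) -> List[MicroInstruction]:
    """S802: produce one microinstruction per target unit."""
    return [MicroInstruction(unit, instr.opcode, instr.address)
            for unit in TARGET_UNITS]


def run_front_end(instruction_cache: Deque[NeuralNetworkInstruction]) -> Deque[MicroInstruction]:
    """Fetch and decode until the cache is empty, filling the instruction queue."""
    instruction_queue: Deque[MicroInstruction] = deque()
    while instruction_cache:
        instruction_queue.extend(decode(fetch(instruction_cache)))
    return instruction_queue


if __name__ == "__main__":
    cache = deque([NeuralNetworkInstruction("sparse_mac", 0x100)])
    for micro in run_front_end(cache):
        print(micro)
```

A plain first-in, first-out queue is used here for simplicity; the dependency processing that the third embodiment adds to the control unit is not represented.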
