Complex environment target segmentation method and device based on multi-module convolutional neural network

A convolutional neural network and object segmentation technology, applied to object segmentation in complex environments. It addresses problems such as difficult image correction, occlusion, and the limited applicability of existing segmentation techniques, with the aim of improving segmentation robustness while ensuring accuracy and real-time performance.

Examples

Embodiment 1

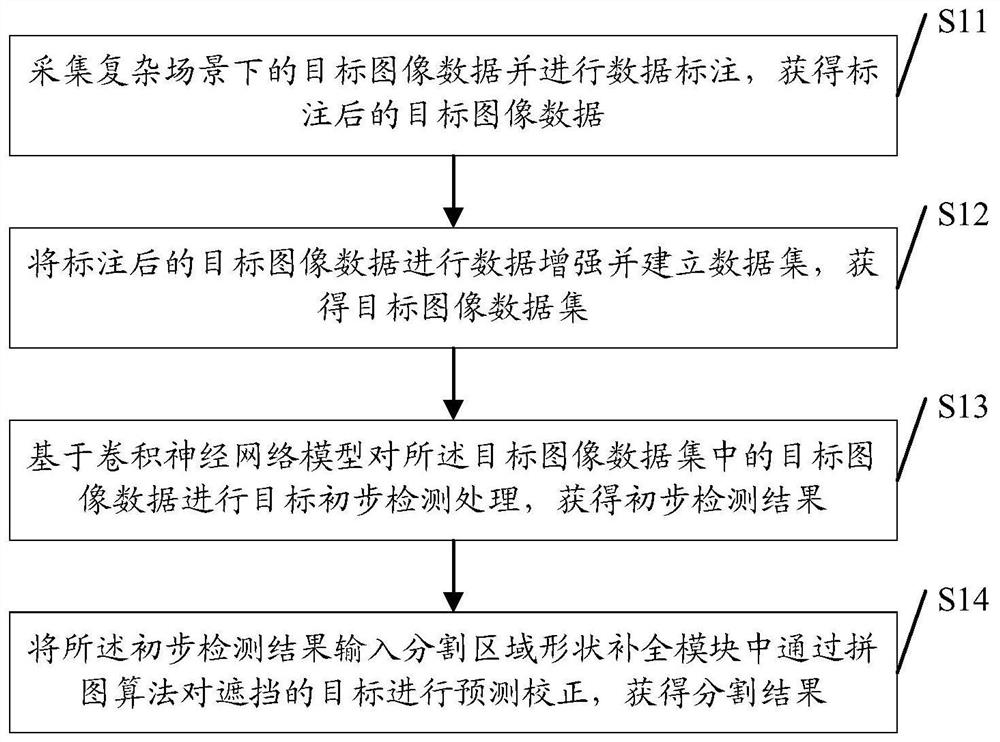

[0046] See Figure 1, which is a schematic flow chart of a complex environment target segmentation method based on a multi-module convolutional neural network in an embodiment of the present invention.

[0047] As shown in Figure 1, a complex environment target segmentation method based on a multi-module convolutional neural network includes:

[0048] S11: Collect target image data in complex scenes and label it to obtain labeled target image data;

[0049] In a specific implementation of the present invention, collecting target image data in complex scenes and labeling it to obtain labeled target image data includes: collecting target image data in different regions and in different scenes; and labeling that data with the image annotation tool Labelme to obtain the labeled target image data.
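The text does not say how the Labelme annotations are turned into training targets. As a minimal sketch, assuming polygon annotations in Labelme's JSON layout (`shapes`, `label`, `points`, `shape_type`, `imageHeight`, `imageWidth`), the following converts one annotation into a per-pixel class mask. The `class_ids` mapping, the `target` label, and the tiny example annotation are hypothetical and are not taken from the patent.

```python
import json


def point_in_polygon(x, y, polygon):
    """Even-odd ray casting test for point (x, y) against a list of (px, py) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge meets that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def labelme_to_mask(annotation, class_ids):
    """Rasterize polygon shapes into a 2D list of class ids (0 = background)."""
    height = annotation["imageHeight"]
    width = annotation["imageWidth"]
    mask = [[0] * width for _ in range(height)]
    for shape in annotation["shapes"]:
        # This sketch handles polygons only; other shape types are skipped.
        if shape.get("shape_type", "polygon") != "polygon":
            continue
        class_id = class_ids[shape["label"]]  # hypothetical label-to-id mapping
        polygon = [(float(px), float(py)) for px, py in shape["points"]]
        for row in range(height):
            for col in range(width):
                # Test the pixel center against the polygon.
                if point_in_polygon(col + 0.5, row + 0.5, polygon):
                    mask[row][col] = class_id
    return mask


# Hypothetical annotation: one rectangular "target" region in a 6x4 image.
example = json.loads("""
{
  "imageHeight": 4,
  "imageWidth": 6,
  "shapes": [
    {"label": "target", "shape_type": "polygon",
     "points": [[1, 1], [5, 1], [5, 3], [1, 3]]}
  ]
}
""")
mask = labelme_to_mask(example, {"target": 1})
for row in mask:
    print(row)
```

The pure-Python rasterization is chosen only to keep the sketch dependency-free; for real images an image library's polygon fill would be far faster.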

[0050]Specifically, in the embodiment of the p...

Embodiment 2

[0068] See Figure 2, which is a schematic diagram of the structure of the complex environment target segmentation device based on a multi-module convolutional neural network in an embodiment of the present invention.

[0069] As shown in Figure 2, a complex environment target segmentation device based on a multi-module convolutional neural network comprises:

[0070] Labeling module 21: used to collect target image data in complex scenes and label it to obtain labeled target image data;

[0071] In a specific implementation of the present invention, collecting target image data in complex scenes and labeling it to obtain labeled target image data includes: collecting target image data in different regions and in different scenes; and labeling that data with the image annotation tool Labelme to obtain the labeled target...
