Point cloud recognition and segmentation method, device and system for the workpiece most suitable for grabbing, based on two-dimensional target detection

A point cloud recognition and segmentation technique based on target detection, applicable to character and pattern recognition, image analysis, computer components and related fields. It addresses three problems: disordered workpieces are difficult to identify and segment rapidly, the amount of computation is large, and the recognition results cannot be applied directly to pose estimation.



Examples

Embodiment 1

[0052] In this embodiment, a structured light camera is built from a DLP4500 projector (resolution 912×1140) and two Daheng Mercury industrial cameras (resolution 2448×2048). The computer is configured with an Intel Core i5-7400 CPU and an NVIDIA GeForce GTX 1050 graphics card. The disordered workpieces are small workpieces stacked randomly at the loading station of a manufacturing production line.

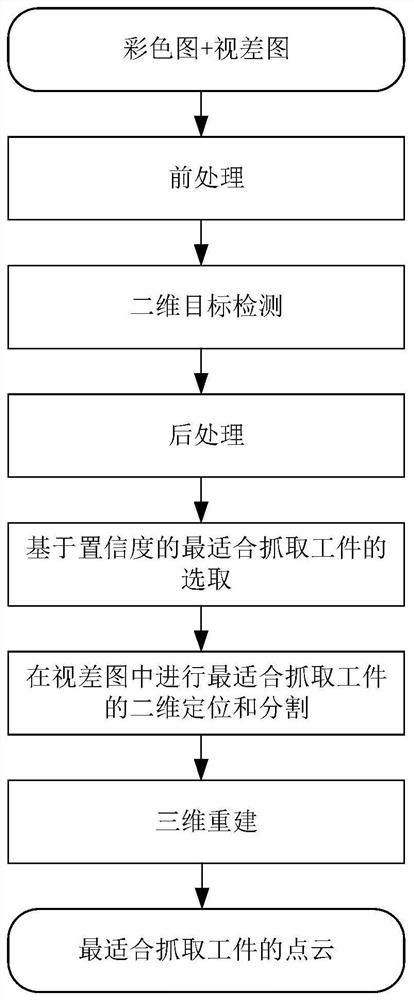

[0053] An embodiment of the present invention provides a point cloud recognition and segmentation method, based on two-dimensional target detection, for the workpiece most suitable for grabbing. As shown in Figures 1 and 2, the method specifically includes the following steps:

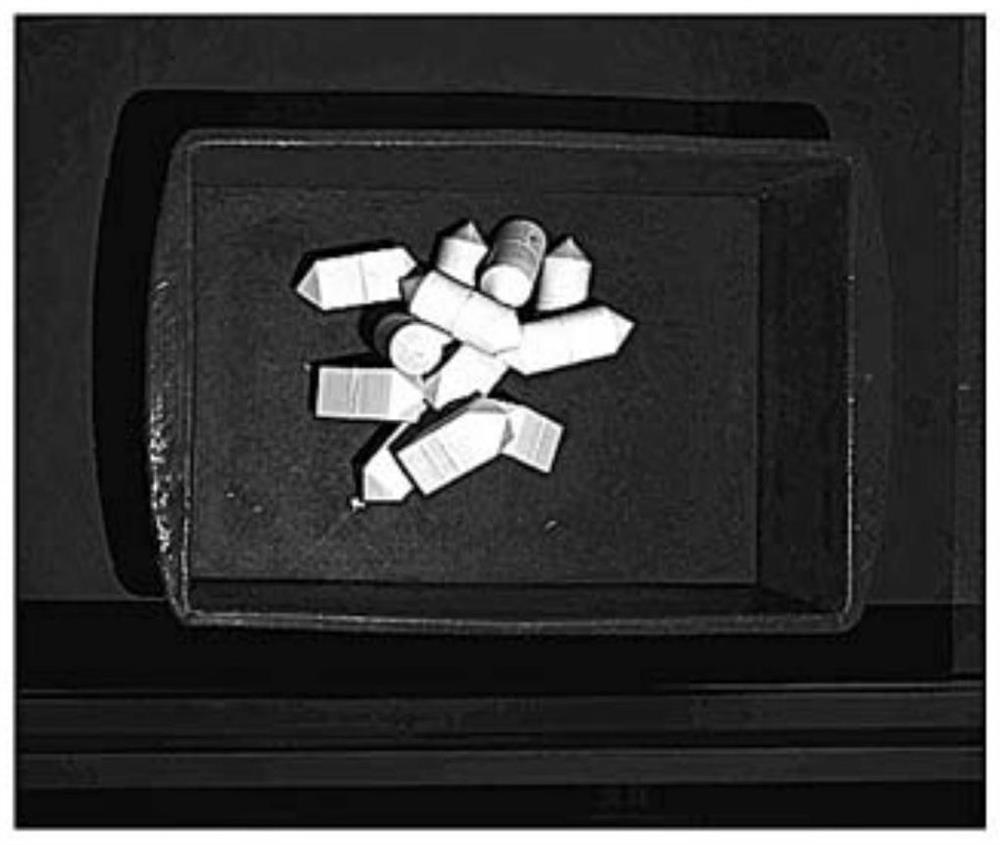

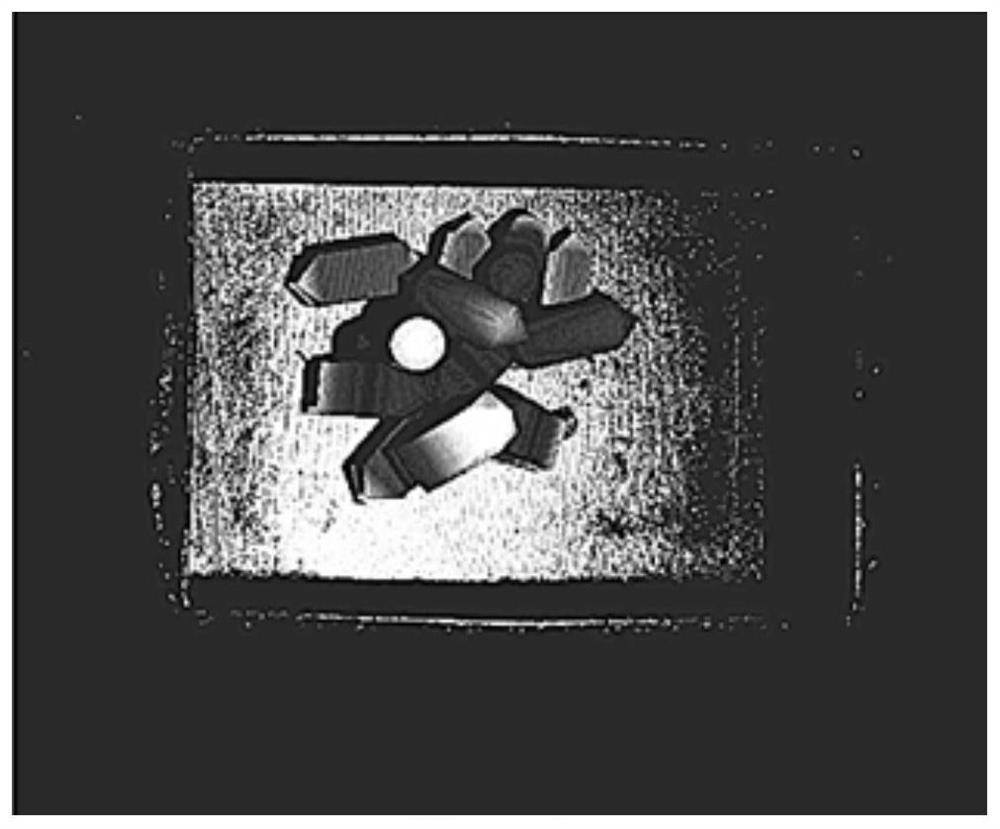

[0054] Step 1: input the color image (resolution 2448×2048) and the disparity image (resolution 2448×2048) of the disordered workpieces generated by the binocular structured light camera (see Figure 2(a) and Figure 2(b)), and pre-p...
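For context on how a disparity image relates to 3D geometry: in a rectified binocular setup, the standard pinhole triangulation relation below converts the disparity $d$ at pixel $(u, v)$ into a 3D point. This relation is assumed here for illustration; the source does not state its reconstruction formula, and the focal length $f$ (in pixels), baseline $B$ and principal point $(c_x, c_y)$ are illustrative symbols not defined in the source.

$$
Z = \frac{f\,B}{d}, \qquad X = \frac{(u - c_x)\,Z}{f}, \qquad Y = \frac{(v - c_y)\,Z}{f}
$$

For example, with hypothetical values $f = 2000$ px, $B = 0.1$ m and $d = 64$ px, $Z = 2000 \times 0.1 / 64 = 3.125$ m. Larger disparities correspond to points closer to the camera.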

Embodiment 2

[0066] Based on the same inventive concept as Embodiment 1, this embodiment of the present invention provides a point cloud recognition and segmentation device, based on two-dimensional target detection, for the workpiece most suitable for grabbing, comprising:

[0067] The detection unit is used to perform two-dimensional target detection on the color image and to output, from the detection result, the confidence and bounding-box position of every detected workpiece;

[0068] The selection unit is used to select the workpiece with the highest confidence as the workpiece most suitable for grabbing;

[0069] The determination unit is used to determine and segment, on the disparity map, the area covered by the bounding box of the workpiece with the highest confidence, according to that bounding box's position parameters, thereby realizing two-dimensional positioning and segmentation of the workpiece;

[0070] The generation unit is used to perform 3D reconstruction on th...
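The detection, selection and determination units described in [0067] to [0069] can be sketched in a few lines of Python with NumPy. This is a minimal illustration under stated assumptions, not the patented implementation: the detector itself is not modeled (its output is represented by a hypothetical `Detection` record), bounding boxes are assumed to be pixel coordinates `(x_min, y_min, x_max, y_max)` with exclusive upper bounds, and because [0070] is truncated, the hypothetical `reproject` function stands in for the 3D reconstruction step using the standard rectified-stereo relation Z = f·B/d with made-up calibration values `f`, `baseline`, `cx` and `cy`.

```python
from dataclasses import dataclass
from typing import List, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # assumed (x_min, y_min, x_max, y_max), max bounds exclusive


@dataclass
class Detection:
    """Hypothetical record for one 2D detection: confidence score and pixel bounding box."""
    confidence: float
    box: Box


def select_best(detections: List[Detection]) -> Detection:
    """Selection unit: return the detection with the highest confidence."""
    if not detections:
        raise ValueError("no workpiece detected")
    return max(detections, key=lambda det: det.confidence)


def crop_disparity(disparity: np.ndarray, box: Box) -> Tuple[np.ndarray, Tuple[int, int]]:
    """Determination unit: cut the region covered by the box out of the disparity map.

    The box is clipped to the image bounds. Returns the cropped patch and the
    (x, y) pixel offset of its top-left corner in the full image.
    """
    height, width = disparity.shape[:2]
    x0, y0, x1, y1 = box
    x0, y0 = max(0, x0), max(0, y0)
    x1, y1 = min(width, x1), min(height, y1)
    return disparity[y0:y1, x0:x1], (x0, y0)


def reproject(patch: np.ndarray, offset: Tuple[int, int],
              f: float, baseline: float, cx: float, cy: float) -> np.ndarray:
    """Hypothetical 3D reconstruction: rectified pinhole stereo, Z = f * B / d.

    Pixels with non-positive disparity are treated as unmatched and skipped.
    Returns an (N, 3) array of X, Y, Z coordinates in the units of `baseline`.
    """
    x0, y0 = offset
    rows, cols = np.nonzero(patch > 0)           # valid pixels inside the patch
    d = patch[rows, cols].astype(np.float64)
    z = f * baseline / d
    x = (cols + x0 - cx) * z / f                 # shift back to full-image pixel coordinates
    y = (rows + y0 - cy) * z / f
    return np.stack([x, y, z], axis=1)


if __name__ == "__main__":
    # Synthetic example: two detections on a 2448x2048 disparity map (values are made up).
    detections = [Detection(0.62, (10, 10, 40, 40)), Detection(0.91, (100, 50, 140, 90))]
    disparity = np.zeros((2048, 2448), dtype=np.float32)
    disparity[60:70, 110:120] = 64.0             # a small patch of valid disparity

    best = select_best(detections)
    patch, offset = crop_disparity(disparity, best.box)
    cloud = reproject(patch, offset, f=2000.0, baseline=0.1, cx=1224.0, cy=1024.0)
    print(best.confidence, patch.shape, cloud.shape)  # prints: 0.91 (40, 40) (100, 3)
```

Cropping the disparity map before reconstruction means only the pixels inside the selected box are converted to 3D points, which fits the stated goal of reducing computation. Skipping pixels with non-positive disparity is an assumed convention for unmatched pixels; a real system would use whatever invalid-value marker its stereo matcher produces.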

Embodiment 3

[0073] An embodiment of the present invention provides a point cloud recognition and segmentation system, based on two-dimensional target detection, for the workpiece most suitable for grabbing, comprising a storage medium and a processor;

[0074] The storage medium is used to store instructions;

[0075] The processor is configured to operate according to the instructions to perform the method of Embodiment 1.
