Fused reality interaction system and method

An interactive system and reality technology, applied in the field of virtual reality, that can solve problems such as limits on experience activities, a limited field of view, and the inability to view real targets directly.

Examples

Embodiment 1

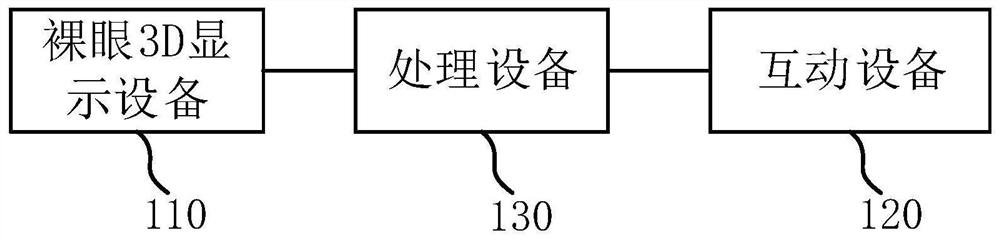

[0021] Figure 1 is a schematic structural diagram of a fused reality interactive system provided by Embodiment 1 of the present invention. As shown in Figure 1, the fused reality interactive system includes a naked-eye 3D display device 110, an interaction device 120 and a processing device 130.

[0022] The naked-eye 3D display device 110 is configured to acquire and display a fused reality image.

[0023] The interaction device 120 is configured to collect a physical image of the physical object and/or interaction input data of the physical object.

[0024] The processing device 130 is configured to acquire the virtual image, the physical image and/or the interaction input data respectively, and to map the adjusted virtual image and the physical image into a fusion coordinate system according to the interaction input data, so as to form the fused reality image and provide it to the naked-eye 3D display device.

[0025] The physical object may be the user ...
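
The data flow among the three devices of Embodiment 1 can be sketched as follows. This is a minimal Python sketch, not the patented implementation: images are assumed to be lists of 3D points, the virtual, physical and fusion coordinate systems are assumed to coincide, and the class names and the "offset" input key are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]
InputData = Dict[str, Tuple[float, float, float]]


@dataclass
class Frame:
    """Hypothetical image representation: 3D points plus a tag naming their source."""
    points: List[Point]
    source: str  # "virtual", "physical" or "fused"


@dataclass
class InteractionDevice:
    """Stand-in for interaction device 120: returns a captured physical image and optional input data."""
    captured: Frame
    pending_input: Optional[InputData] = None

    def collect(self) -> Tuple[Frame, Optional[InputData]]:
        return self.captured, self.pending_input


@dataclass
class NakedEye3DDisplay:
    """Stand-in for display device 110: records every fused frame it receives."""
    shown: List[Frame] = field(default_factory=list)

    def display(self, frame: Frame) -> None:
        self.shown.append(frame)


class ProcessingDevice:
    """Stand-in for processing device 130.

    Assumes all coordinate systems coincide, so the only adjustment is a
    hypothetical "offset" taken from the interaction input data.
    """

    def fuse(self, virtual: Frame, physical: Frame, user_input: Optional[InputData]) -> Frame:
        dx, dy, dz = (user_input or {}).get("offset", (0.0, 0.0, 0.0))
        adjusted = [(x + dx, y + dy, z + dz) for x, y, z in virtual.points]
        # Overlay: adjusted virtual points first, then physical points, in one fused frame.
        return Frame(points=adjusted + list(physical.points), source="fused")


def run_once(virtual: Frame, interaction: InteractionDevice,
             processor: ProcessingDevice, display: NakedEye3DDisplay) -> Frame:
    """One pass of the data flow: device 120 -> device 130 -> device 110."""
    physical, user_input = interaction.collect()
    fused = processor.fuse(virtual, physical, user_input)
    display.display(fused)
    return fused
```

With an offset of (0.5, 0.0, 0.0) in the input data, a virtual point at (0.0, 0.0, 1.0) is shifted to (0.5, 0.0, 1.0) before being overlaid with the captured physical points. Keeping each device behind its own small class mirrors the split between display device 110, interaction device 120 and processing device 130.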

Embodiment 2

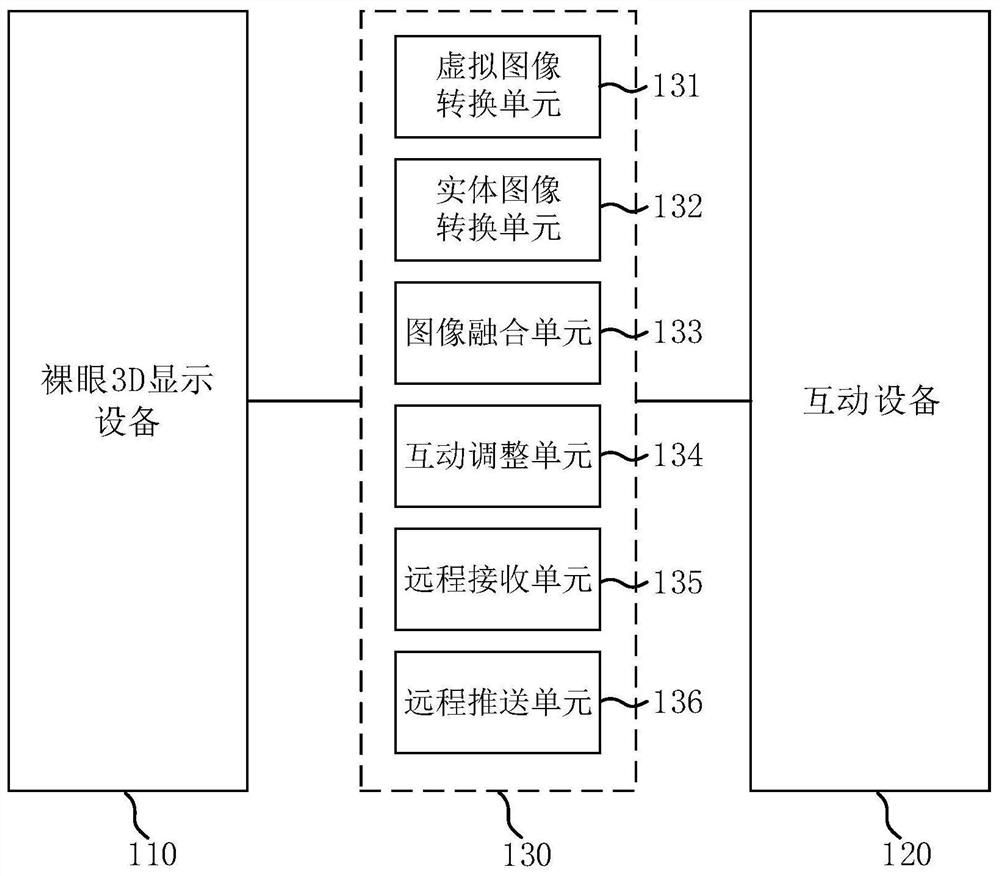

[0041] Figure 2 is a schematic structural diagram of a fused reality interactive system in Embodiment 2 of the present invention; this embodiment is refined on the basis of the foregoing embodiments. As shown in Figure 2, the fused reality interactive system includes a naked-eye 3D display device 110, an interaction device 120 and a processing device 130.

[0042] Optionally, the processing device 130 includes:

[0043] The virtual image conversion unit 131 is configured to acquire a virtual image and convert it from a virtual coordinate system to a fusion coordinate system according to a virtual fusion transformation relationship;

[0044] The entity image conversion unit 132 is configured to acquire the entity image and convert it from an entity coordinate system to the fusion coordinate system according to an entity fusion transformation relationship;

[0045] The image fusion unit 133 is configured to superimpose the con...
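
The conversions performed by units 131 and 132 can be illustrated with 4x4 homogeneous transformation matrices. The sketch below assumes that each fusion transformation relationship is a matrix of this kind and that images are represented as 3D points; the helper names and the example relationships (a virtual scene modelled in centimetres, a camera frame 1.2 m above the fusion origin) are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix4 = List[List[float]]
Point = Tuple[float, float, float]


def translation(tx: float, ty: float, tz: float) -> Matrix4:
    """4x4 homogeneous translation matrix."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def uniform_scale(s: float) -> Matrix4:
    """4x4 homogeneous uniform scaling matrix (e.g. for a unit conversion)."""
    return [[s, 0.0, 0.0, 0.0],
            [0.0, s, 0.0, 0.0],
            [0.0, 0.0, s, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def compose(a: Matrix4, b: Matrix4) -> Matrix4:
    """Matrix product a @ b: applying the result applies b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def to_fusion(points: Sequence[Point], transform: Matrix4) -> List[Point]:
    """Map points from a source coordinate system into the fusion coordinate system."""
    mapped = []
    for x, y, z in points:
        h = (x, y, z, 1.0)  # homogeneous coordinates
        fx, fy, fz, w = (sum(transform[r][c] * h[c] for c in range(4)) for r in range(4))
        mapped.append((fx / w, fy / w, fz / w))
    return mapped


# Hypothetical relationships: the virtual scene is in centimetres with its origin 0.5 m
# in front of the fusion origin; the entity (camera) frame sits 1.2 m above it.
VIRTUAL_TO_FUSION = compose(translation(0.0, 0.0, 0.5), uniform_scale(0.01))
ENTITY_TO_FUSION = translation(0.0, 1.2, 0.0)
```

Expressing the relationships as matrices lets a unit-conversion scale and a positional offset be folded into a single transform per source, so each image needs only one pass to reach the fusion coordinate system.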

Embodiment 3

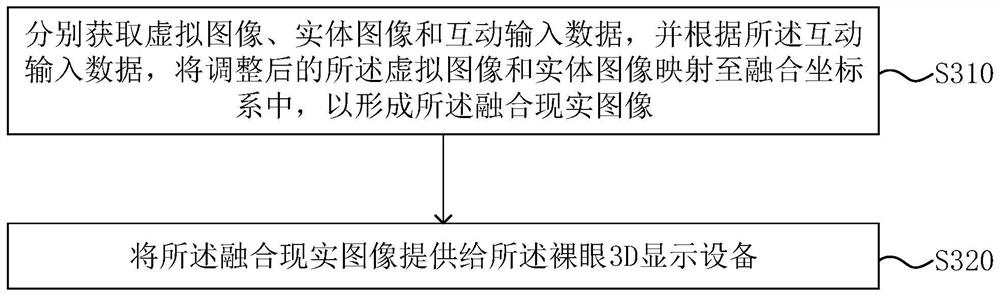

[0059] Figure 3A is a flowchart of a fused reality interaction method provided by Embodiment 3 of the present invention. This embodiment is applicable to interaction scenarios in virtual reality, augmented reality or fused reality systems. The method can be executed by the fused reality interaction system in the embodiments of the present invention, and the system can be implemented in software and/or hardware. As shown in Figure 3A, the method specifically includes the following steps:

[0060] Step 310: acquire the virtual image, the physical image and the interactive input data respectively, and map the adjusted virtual image and the physical image into a fusion coordinate system according to the interactive input data, so as to form the fused reality image.

[0061] Entity images can be obtained through the interaction device, virtual images can be obtained from the model image data of any software platform or software engine, and fused reality images are processed by processi...
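
Step 310 can be sketched as follows, assuming the interactive input data carries a scale factor and a translation for the virtual image, and that each source coordinate system differs from the fusion coordinate system only by an offset. The key names "scale" and "move" and all function names are hypothetical; the point illustrated is the order of operations, in which the virtual image is adjusted according to the input data before both images are mapped into the fusion coordinate system.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def adjust_virtual(points: Sequence[Point], user_input: Dict) -> List[Point]:
    """Hypothetical adjustment driven by interactive input data.

    Supports two illustrative keys: "scale" (uniform scale about the virtual origin)
    and "move" (a translation applied after scaling).
    """
    s = float(user_input.get("scale", 1.0))
    dx, dy, dz = user_input.get("move", (0.0, 0.0, 0.0))
    return [(s * x + dx, s * y + dy, s * z + dz) for x, y, z in points]


def map_to_fusion(points: Sequence[Point], origin: Point) -> List[Point]:
    """Hypothetical mapping: the fusion frame equals the source frame shifted by `origin`."""
    ox, oy, oz = origin
    return [(x + ox, y + oy, z + oz) for x, y, z in points]


def step_310(virtual: Sequence[Point], physical: Sequence[Point], user_input: Dict,
             virtual_origin: Point, physical_origin: Point) -> Dict[str, List[Point]]:
    """Adjust the virtual image first, then map both images into the fusion frame."""
    adjusted = adjust_virtual(virtual, user_input)
    return {
        "virtual": map_to_fusion(adjusted, virtual_origin),
        "physical": map_to_fusion(physical, physical_origin),
    }
```

For example, a scale of 2.0 and a move of (0.0, 0.5, 0.0) take a virtual point at (1.0, 0.0, 0.0) to (2.0, 0.5, 0.0), which a virtual origin of (0.0, 0.0, 1.0) then maps to (2.0, 0.5, 1.0) in the fusion coordinate system.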
