Task hierarchy scheduling method and device based on execution time prediction

The technology concerns execution time prediction and task scheduling. It applies to fields such as multiprogramming arrangements, program initiation/switching, and program control design, and addresses problems such as load balancing of computing resources.


Examples

Embodiment 1

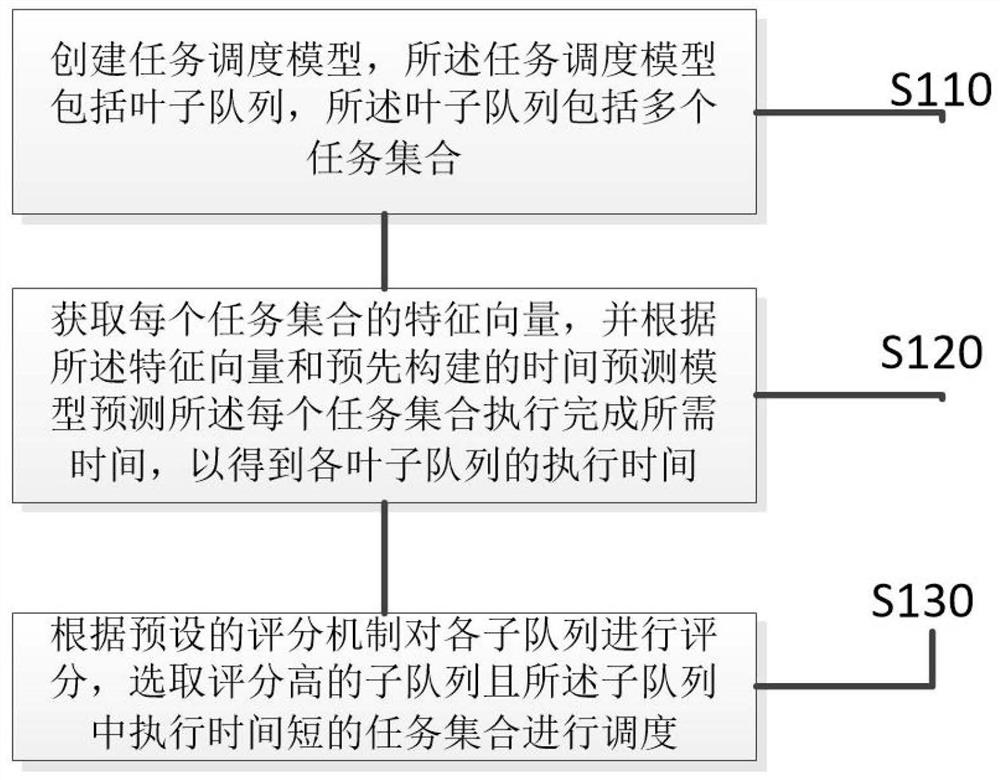

[0050] As shown in Figure 1, a task-level scheduling method based on execution time prediction includes the following steps:

[0051] S110. Create a task scheduling model that includes a leaf queue, where the leaf queue includes a plurality of task sets;

[0052] S120. Obtain the feature vector of each task set, and predict the execution time required for each task set from the feature vector and a pre-built time prediction model, so as to obtain the execution time of each sub-queue;

[0053] S130. Score each sub-queue according to a preset scoring mechanism, then select a sub-queue with a high score and, within that sub-queue, a task set with a short execution time for scheduling.
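Steps S110 to S130 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the names `TaskSet`, `LeafQueue`, `predict_time`, `score`, and `schedule_next` are hypothetical, `predict_time` is only a stand-in for the pre-built time prediction model, and the scoring rule (priority minus a small penalty for pending work) is invented for illustration because the text only says the mechanism is preset.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSet:
    name: str
    features: list              # feature vector of the task set
    predicted_time: float = 0.0


@dataclass
class LeafQueue:
    name: str
    priority: int               # hypothetical input to the scoring mechanism
    task_sets: list = field(default_factory=list)


def predict_time(features):
    """Stand-in for the pre-built time prediction model (assumption)."""
    return float(sum(features))


def score(queue):
    """Hypothetical scoring rule: favour priority, penalise pending work."""
    pending = sum(ts.predicted_time for ts in queue.task_sets)
    return queue.priority - 0.01 * pending


def schedule_next(queues):
    # S120: predict the execution time of every task set.
    for q in queues:
        for ts in q.task_sets:
            ts.predicted_time = predict_time(ts.features)
    # S130: highest-scoring non-empty queue, then its shortest task set.
    candidates = [q for q in queues if q.task_sets]
    if not candidates:
        return None
    best = max(candidates, key=score)
    chosen = min(best.task_sets, key=lambda ts: ts.predicted_time)
    best.task_sets.remove(chosen)
    return best.name, chosen.name


# S110: a model with two leaf queues, each holding task sets.
demo_queues = [
    LeafQueue("a", 1, [TaskSet("a1", [5, 5])]),
    LeafQueue("b", 2, [TaskSet("b1", [3, 4]), TaskSet("b2", [1, 1])]),
]
print(schedule_next(demo_queues))  # ('b', 'b2')
```

Choosing the shortest task set inside the selected queue is a shortest-job-first policy applied per queue; the queue-level score decides which queue gets served first.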

[0054] Embodiment 1 establishes a multi-layer scheduling model according to the queue priorities and queue resource limits set by the user. The whole model consists of multiple non-leaf queues and leaf queues. The task set submi...

Embodiment 2

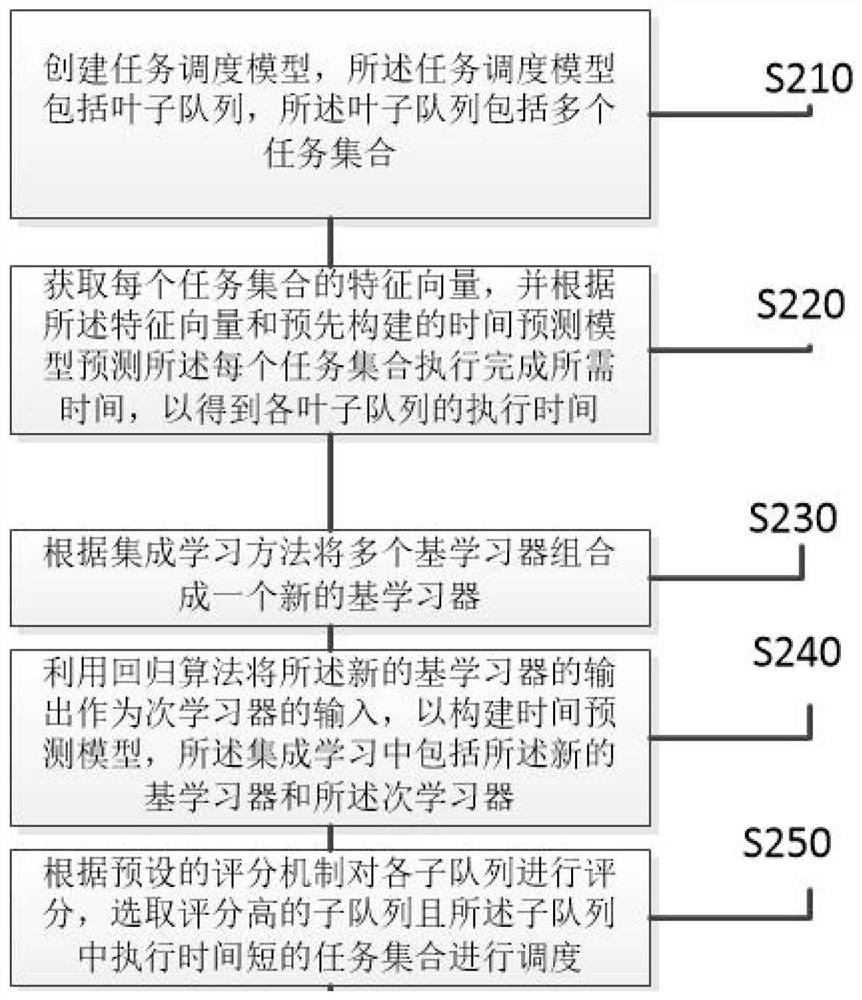

[0058] As shown in Figure 2, a task-level scheduling method based on execution time prediction includes:

[0059] S210. Create a task scheduling model, where the task scheduling model includes a leaf queue, and the leaf queue includes multiple task sets;

[0060] S220. Acquire the feature vector of each task set, and predict the execution time required for each task set according to the feature vector and the pre-built time prediction model, so as to obtain the execution time of each leaf queue;

[0061] S230. Combine multiple base learners into a new base learner according to an ensemble learning method;

[0062] S240. Using a regression algorithm, feed the output of the new base learner to the secondary learner as its input to construct the time prediction model; the ensemble consists of the new base learner and the secondary learner;

[0063] S250. Score each sub-queue according to a preset scoring mechanism, and select a sub-queue with a high score and a...
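Steps S230 and S240 describe a stacking-style ensemble. The sketch below is one possible reading in pure Python, under several assumptions: the base learners are single-feature least-squares fits, they are combined by averaging, and the secondary learner is a one-variable linear regression. None of these choices is stated in the text, and the names `fit_line`, `FeatureRegressor`, and `StackedTimePredictor` are hypothetical. For brevity the secondary learner is trained on in-sample base predictions; stacking in practice usually trains it on held-out predictions to limit overfitting.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = a * x + b on a single variable."""
    mx, my = mean(xs), mean(ys)
    var = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var if var else 0.0
    return a, my - a * mx


class FeatureRegressor:
    """Hypothetical base learner: linear fit on one feature column."""

    def __init__(self, col):
        self.col = col

    def fit(self, X, y):
        self.a, self.b = fit_line([row[self.col] for row in X], y)
        return self

    def predict(self, X):
        return [self.a * row[self.col] + self.b for row in X]


class StackedTimePredictor:
    """Base learners combined by averaging, then a regression on top."""

    def __init__(self, base_learners):
        self.base_learners = base_learners

    def _combined(self, X):
        # S230 (assumed form): the "new base learner" averages base outputs.
        preds = [b.predict(X) for b in self.base_learners]
        return [mean(p) for p in zip(*preds)]

    def fit(self, X, y):
        for b in self.base_learners:
            b.fit(X, y)
        # S240: the secondary learner regresses the target on the combined output.
        self.a, self.b = fit_line(self._combined(X), y)
        return self

    def predict(self, X):
        return [self.a * z + self.b for z in self._combined(X)]


# Toy data: feature vectors of task sets and their observed execution times.
X_train = [[1, 5], [2, 5], [3, 5], [4, 5]]
y_train = [3, 5, 7, 9]
model = StackedTimePredictor([FeatureRegressor(0), FeatureRegressor(1)])
model.fit(X_train, y_train)
print(model.predict([[5, 5]]))
```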

Embodiment 3

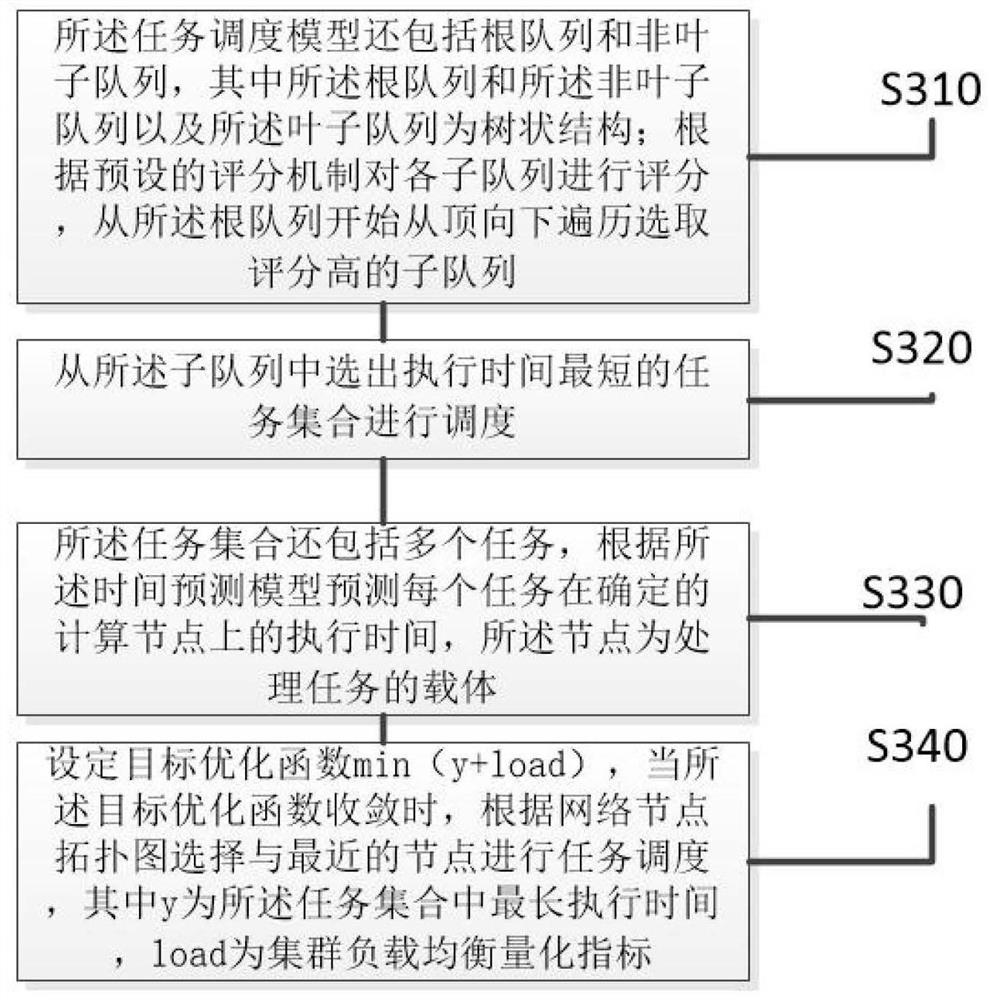

[0067] As shown in Figure 3, a task-level scheduling method based on execution time prediction includes:

[0068] S310. The task scheduling model further includes a root queue and non-leaf queues, where the root queue, the non-leaf queues, and the leaf queues form a tree structure; each sub-queue is scored according to a preset scoring mechanism, and the tree is traversed from top to bottom, starting from the root queue, to select sub-queues with high scores;

[0069] S320. Select a task set with the shortest execution time from the sub-queue for scheduling;

[0070] S330. The task set further includes a plurality of tasks, and the execution time of each task on a given computing node is predicted by the time prediction model, where a node is the carrier that processes tasks;

[0071] S340. Set the target optimization function min(y+load). When the target optimization function converges, select and perform task scheduling with the nearest node according to the network...
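The traversal in S310 and S320 and the objective in S340 can be illustrated with a short Python sketch. It rests on assumptions: queue scores are taken as already computed by the preset scoring mechanism, `y` is read as the predicted execution time of a task on a node, and `load` as that node's current load. The names `Queue`, `pick_leaf`, `pick_task_set`, and `pick_node` are hypothetical. The nearest-node selection mentioned in S340 is left out because its description is cut off in this text.

```python
from dataclasses import dataclass, field


@dataclass
class Queue:
    name: str
    score: float                                   # assumed precomputed
    children: list = field(default_factory=list)   # empty for a leaf queue
    task_sets: list = field(default_factory=list)  # (name, predicted_time) pairs


def pick_leaf(root):
    """S310: descend from the root, following the highest-scoring child."""
    node = root
    while node.children:
        node = max(node.children, key=lambda q: q.score)
    return node


def pick_task_set(leaf):
    """S320: task set with the shortest predicted execution time.

    Raises ValueError if the leaf holds no task sets.
    """
    return min(leaf.task_sets, key=lambda ts: ts[1])


def pick_node(predicted, load):
    """S340 (partial): the node that minimises y + load."""
    return min(predicted, key=lambda n: predicted[n] + load[n])


root = Queue("root", 1.0, children=[
    Queue("A", 0.4, children=[Queue("A1", 0.9, task_sets=[("a", 1.0)])]),
    Queue("B", 0.7, children=[
        Queue("B1", 0.2, task_sets=[("b", 2.0)]),
        Queue("B2", 0.5, task_sets=[("t1", 8.0), ("t2", 3.0)]),
    ]),
])
leaf = pick_leaf(root)
print(leaf.name, pick_task_set(leaf))  # B2 ('t2', 3.0)
print(pick_node({"n1": 4.0, "n2": 2.5}, {"n1": 0.5, "n2": 3.0}))  # n1
```

The descent is greedy: leaf A1 has the highest individual score, but it is never reached because its parent A scores lower than B at the first level.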

