Convolutional neural network model compression method and device

A convolutional neural network compression technology, applied in biological neural network models, neural learning methods, neural architectures, etc. It addresses problems such as limited scalability, large accuracy loss after compression, and low pruning efficiency, as well as the huge number of parameters and amount of calculation in such models, improving accuracy while ensuring the compression rate.


Embodiment

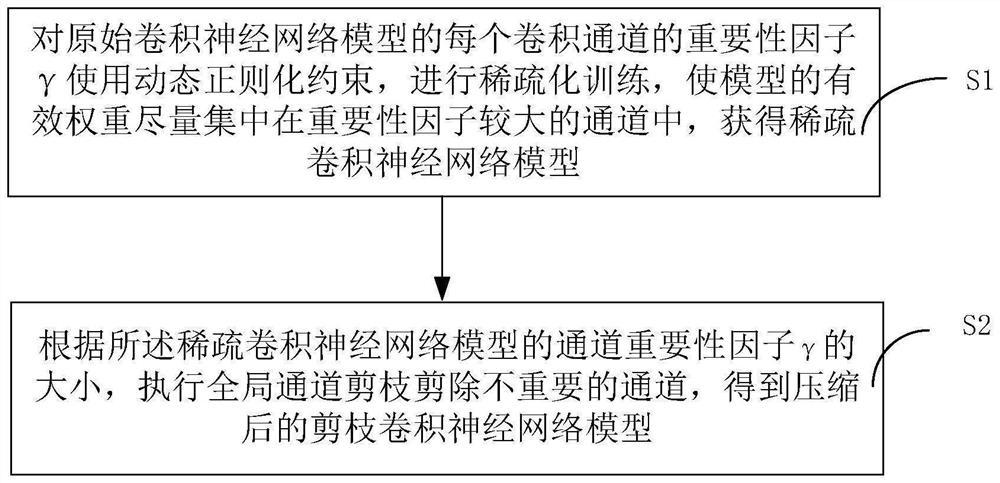

[0088] In this embodiment, a compression method of a convolutional neural network model includes the following steps:

[0089] Step 1. Apply dynamic regularization constraints to the importance factor of each convolutional channel of the original convolutional neural network model and perform sparse training, so that the effective weights of the model are concentrated as far as possible in the channels with larger importance factors, yielding a sparse convolutional neural network model;

[0090] In this embodiment, before step 1 is performed, the original convolutional neural network model may be obtained by training with a target detection algorithm. In particular, the present invention does not limit the target detection algorithm used to obtain the original convolutional neural network model, nor does it limit the application scenarios of that model; that is, the compression method of the above-mentioned convolutiona...
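The sparse training of step 1 can be illustrated with a minimal sketch. The excerpt does not define the importance factor or the form of the dynamic regularization, so everything here is an assumption: the importance factor is taken to be one scalar per channel, the constraint is taken to be an L1 penalty applied as a proximal (soft-thresholding) step, and the names `dynamic_lambda`, `soft_threshold`, and `sparse_step`, together with the linear ramp schedule, are hypothetical.

```python
def dynamic_lambda(epoch, total_epochs, lam_max=1e-2):
    """Hypothetical schedule (assumption): ramp the L1 coefficient
    linearly from 0 at the first epoch to lam_max at the last."""
    return lam_max * epoch / max(1, total_epochs - 1)


def soft_threshold(x, t):
    """Proximal operator of t * |x|: shrink x toward zero by t,
    clamping to exactly 0.0 when |x| <= t."""
    if x > t:
        return x - t
    if x < -t:
        return x + t
    return 0.0


def sparse_step(factors, task_grads, lr, lam):
    """One proximal-gradient step on the per-channel importance factors.

    factors    : current importance factor of each channel (assumed scalar)
    task_grads : gradient of the task loss w.r.t. each factor
    lr         : learning rate
    lam        : current L1 coefficient, e.g. from dynamic_lambda
    """
    return [
        soft_threshold(f - lr * g, lr * lam)
        for f, g in zip(factors, task_grads)
    ]


if __name__ == "__main__":
    # Toy run: zero task gradients, so only the penalty acts on the factors.
    factors = [1.0, 0.5, 0.002, -0.001]
    total_epochs = 5
    for epoch in range(total_epochs):
        lam = dynamic_lambda(epoch, total_epochs, lam_max=0.05)
        factors = sparse_step(factors, [0.0] * len(factors), lr=0.1, lam=lam)
    print(factors)
```

In this sketch, channels whose factors are driven to exactly zero are the natural candidates for removal in a later pruning step, while channels with larger factors retain most of their magnitude. In a real model the task gradients would come from backpropagation rather than being fixed at zero.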
