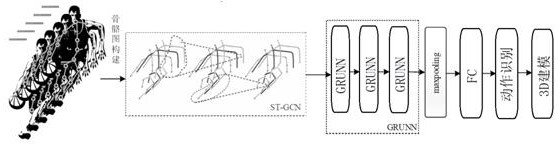

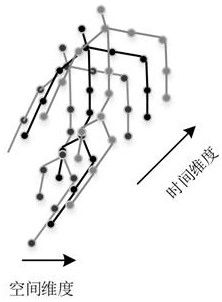

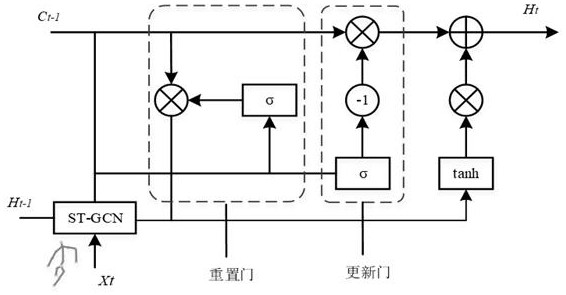

Human behavior recognition system based on a graph convolutional neural network

This technology relates to convolutional neural networks and recognition systems and is applied in the field of human behavior recognition. It addresses two problems: deep feature extraction with CNNs, and the loss of key information. Its stated effect is improved recognition accuracy.

Embodiment Construction

[0017] To make the objectives, technical solutions, and advantages of the present invention clearer, the invention is described below through specific embodiments shown in the accompanying drawings. It should be understood, however, that these descriptions are illustrative only and are not intended to limit the scope of the invention.

The structures, proportions, sizes, etc. shown in the drawings of this specification serve only to accompany the disclosed content, so that those familiar with the technology can understand and read it. They do not limit the conditions under which the invention may be implemented and therefore carry no substantive technical significance. Accordingly, any modification of structure, change in proportional relationship, or adjustment of size that does not affect the functions and objectives of the present invention shall still fall within the technical content disclosed in the present invention, within the scope o...