Self-supervised video deblurring and video frame interpolation method based on an event camera

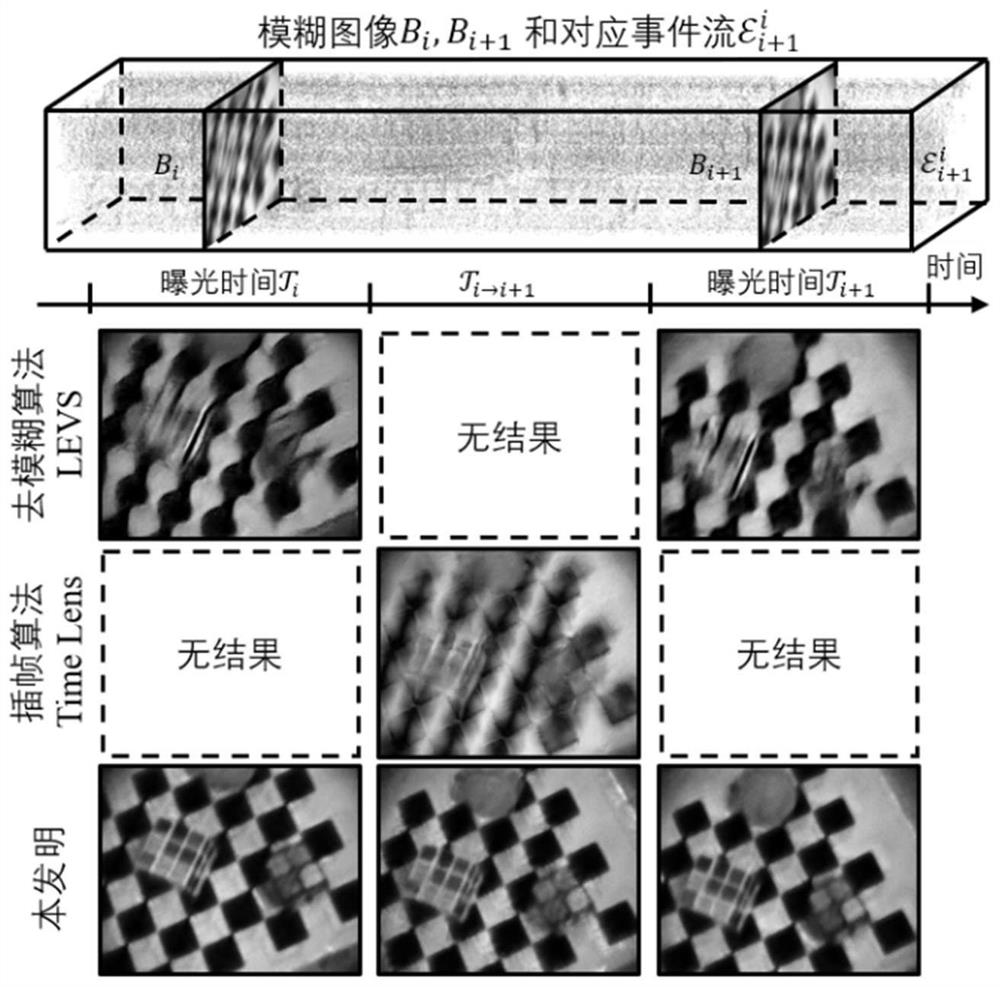

This technology for deblurring blurred images, applied in the field of image processing, addresses problems such as performance degradation. It achieves low latency, resolves motion blur and the loss of information between frames, and delivers good deblurring and frame-interpolation results.

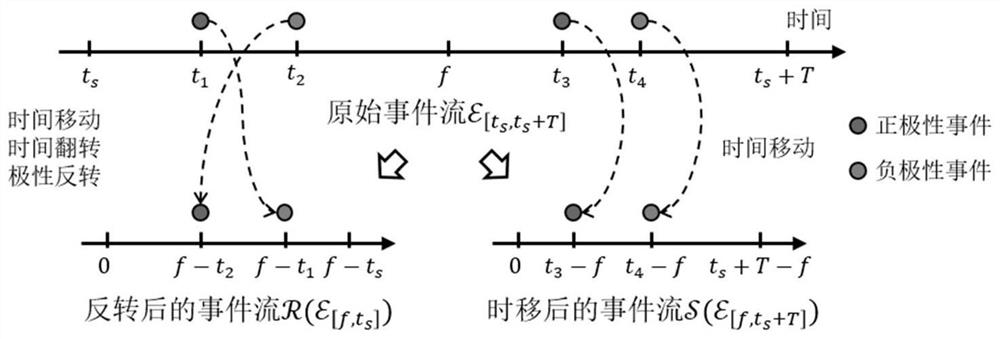

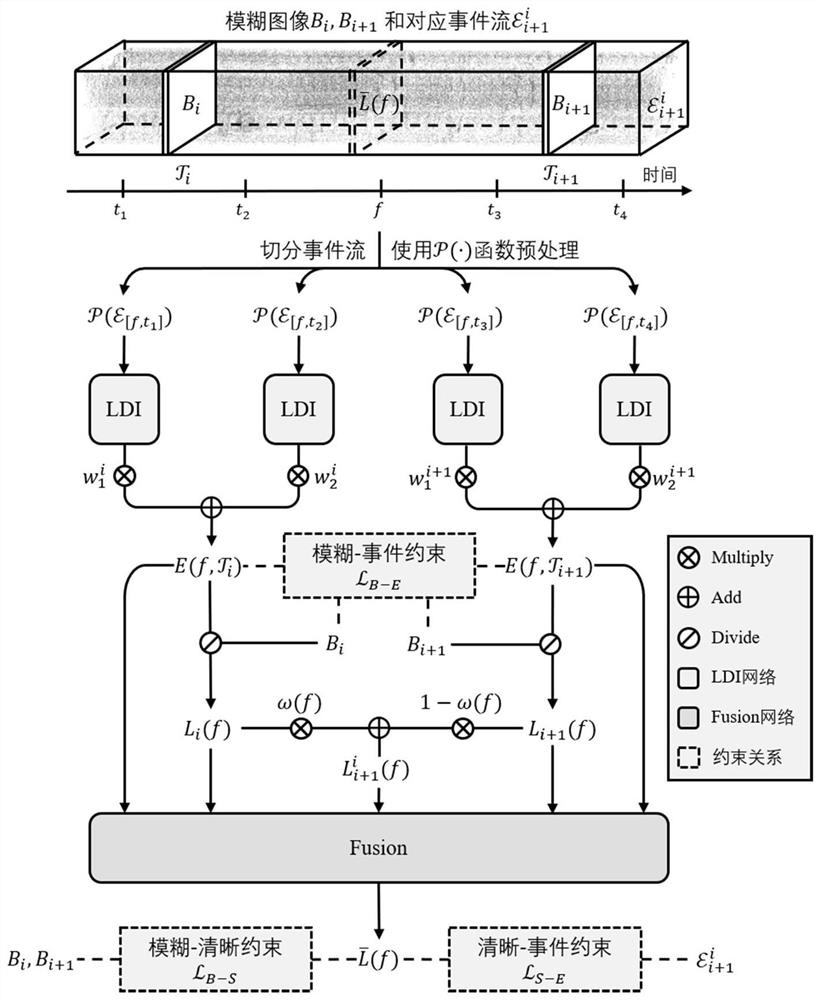

Detailed Description of Embodiments

[0059] To make the present invention more clearly understood, its technical content is described in detail below.

[0060] Ordinary optical cameras and event cameras are used together to shoot highly dynamic scenes, such as fast-moving objects or a fast-moving camera, and to collect low-frame-rate video containing motion blur. A blurred-video dataset is then constructed by spatiotemporally matching the blurred image frames with the event streams. Because the scale of field-collected data is limited, data augmentation can be used to expand the sample set. Deep learning is data-driven: the larger the training set, the stronger the generalization ability of the trained model. In practice, however, collected data can hardly cover every scenario, and collection itself is costly, so the available training set is limited. Generating diverse training data from existing data expands the data supply while reducing cost, which is the purpose of da...
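The dataset-construction step in [0060] can be illustrated with a minimal sketch. It assumes that the frame camera and the event camera already share a synchronized clock and a registered pixel grid, that each blurred frame is described by its exposure start and end timestamps, and that events are `(t, x, y, p)` tuples. The names `BlurredFrame`, `match_events_to_frames`, and `hflip_pair` are hypothetical; the text above does not specify the actual matching rule or augmentation set. Horizontal flipping is shown only as one example of an augmentation that must be applied consistently to a frame and its events.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical event format: (t, x, y, p) = timestamp in seconds,
# column, row, polarity in {-1, +1}.
Event = Tuple[float, int, int, int]


@dataclass
class BlurredFrame:
    """Hypothetical record of one blurred frame's exposure window."""
    index: int
    t_start: float  # exposure start (assumed to share the event clock)
    t_end: float    # exposure end


def match_events_to_frames(frames: List[BlurredFrame],
                           events: List[Event]) -> List[List[Event]]:
    """Return, for each frame, the events with t_start <= t < t_end.

    Assumes the two sensors are already time-synchronized and spatially
    registered. Events falling in the gaps between exposures are not
    returned here; a frame-interpolation pipeline would likely keep them.
    """
    events = sorted(events, key=lambda e: e[0])
    times = [e[0] for e in events]
    matched = []
    for f in frames:
        lo = bisect_left(times, f.t_start)  # first event at or after t_start
        hi = bisect_left(times, f.t_end)    # first event at or after t_end
        matched.append(events[lo:hi])
    return matched


def hflip_pair(frame: List[List[float]],
               events: List[Event]) -> Tuple[List[List[float]], List[Event]]:
    """Horizontally flip a frame and its matched events together.

    The frame is a list of pixel rows; its width sets the mirror axis so
    that event x-coordinates stay aligned with the flipped pixels.
    Timestamps and polarities are unchanged by a spatial flip.
    """
    width = len(frame[0])
    flipped_frame = [row[::-1] for row in frame]
    flipped_events = [(t, width - 1 - x, y, p) for (t, x, y, p) in events]
    return flipped_frame, flipped_events
```

For example, with `frames = [BlurredFrame(0, 0.00, 0.02), BlurredFrame(1, 0.04, 0.06)]` and `events = [(0.005, 1, 0, 1), (0.03, 2, 1, -1), (0.05, 0, 1, 1)]`, `match_events_to_frames` assigns the first event to frame 0 and the last to frame 1, and drops the event at 0.03 s because it falls between the two exposures. Sorting once and then binary-searching each window boundary avoids rescanning the whole event stream for every frame.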
