Data poisoning attack method, electronic equipment, storage medium and system

A data and attack model technology applied in the field of data security. It addresses problems such as the weakened effect of poisoning attacks and sensitivity, and achieves a pronounced poisoning attack effect.

Examples

Embodiment 1

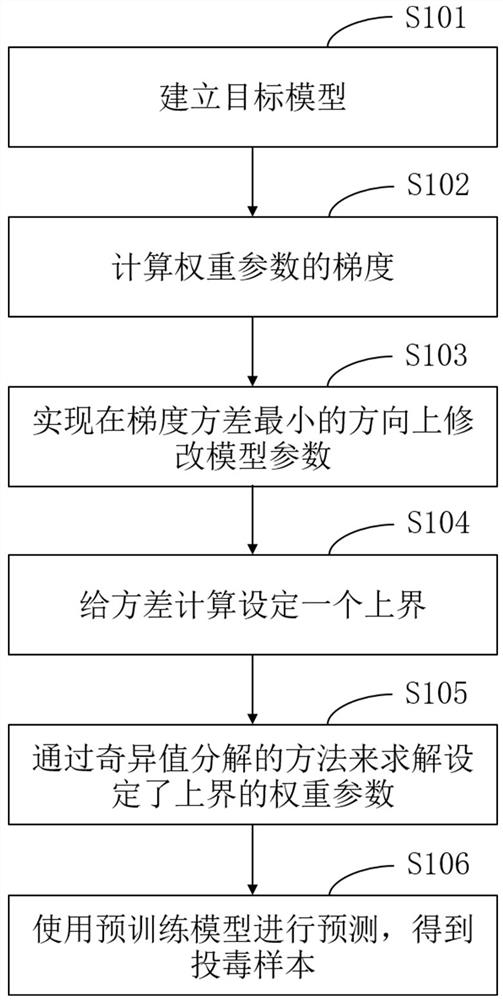

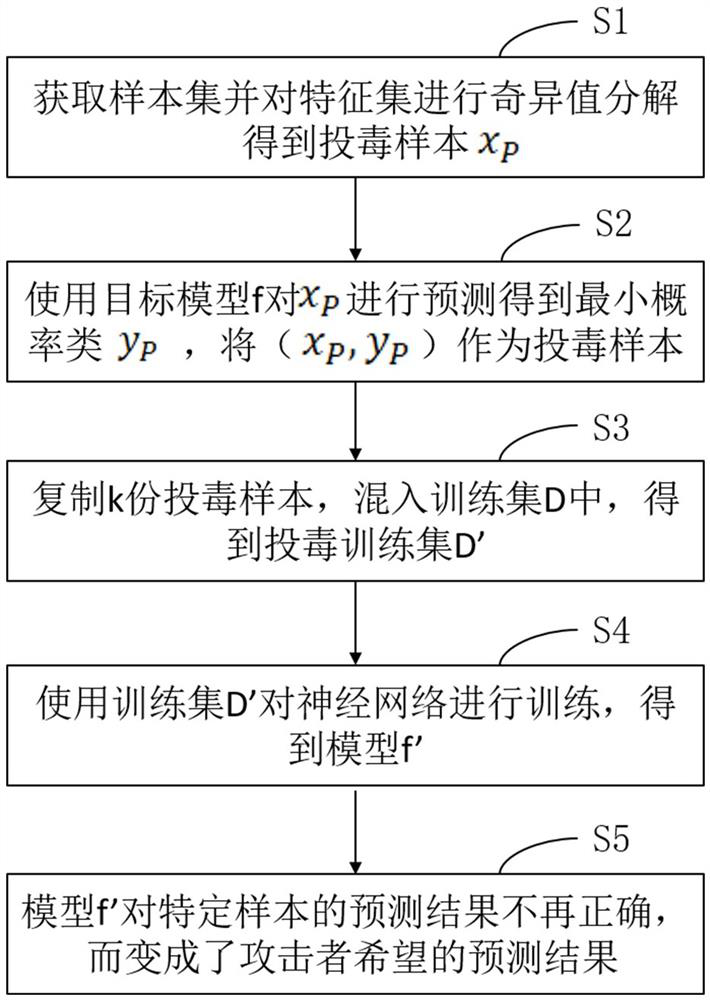

[0038] As one aspect of the embodiments of the present disclosure, this embodiment provides a data poisoning attack method which, as shown in Figure 1, includes the following steps:

[0039] S101. Define the target model of the attack and the original training set D = (X, Y), where X is the feature set and Y is the label set; each training sample belongs to D.

[0040] S102. Calculate the gradient of the weight parameter w. The gradient is calculated as follows:

[0041] (1)

[0042] In formula (1), the loss function is evaluated on training samples from the original training set D = (X, Y), where X is the feature set, Y is the label set, and b is the bias parameter of the model.
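As a hedged illustration of step S102, the sketch below computes the gradient of a loss with respect to the weight parameter w for a model with bias parameter b. Formula (1) is not reproduced in this text and the source names neither the model nor the loss, so the logistic-regression model, the binary cross-entropy loss, the averaging over samples, the function names `sigmoid` and `weight_gradient`, and the toy data are all assumptions made for illustration.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def weight_gradient(X, Y, w, b):
    """Mean gradient of binary cross-entropy with respect to w.

    Assumed model (not from the source): p = sigmoid(w . x + b).
    For this loss the per-sample gradient is (p - y) * x.
    """
    n = len(X)
    grad = [0.0] * len(w)
    for x, y in zip(X, Y):
        p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
        for j, xj in enumerate(x):
            grad[j] += (p - y) * xj / n
    return grad


# Toy training set D = (X, Y); the values are illustrative only.
X = [[1.0, 2.0], [2.0, 0.5], [-1.0, -1.5], [-2.0, 0.0]]
Y = [1, 1, 0, 0]

grad_w = weight_gradient(X, Y, w=[0.0, 0.0], b=0.0)
print(grad_w)
```

Any differentiable loss could be substituted here; only the expression for the per-sample gradient would change.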

[0043] S103. Obtain the calculation formula for a poisoned sample. That is, a poisoned sample that satisfies the following formula modifies the model parameters in the direction with the smallest gradient variance:

[0044] (2)

[0045] in, is the variable value that indicates the objective fu...
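Formula (2) is not reproduced in this text, so the following is only a sketch of one possible reading of "the direction with the smallest gradient variance": treat the per-sample gradients as a point cloud and find the unit vector along which their variance is smallest, which is the eigenvector of their covariance matrix with the smallest eigenvalue. The helper names `covariance` and `min_variance_direction`, the shifted power iteration used to find that eigenvector, and the sample gradient values are assumptions for illustration, not the patent's procedure.

```python
import math


def covariance(rows):
    # Population covariance matrix of a list of equal-length vectors.
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    return [
        [sum((r[i] - mean[i]) * (r[j] - mean[j]) for r in rows) / n
         for j in range(d)]
        for i in range(d)
    ]


def min_variance_direction(rows, iters=200):
    """Unit vector along which the rows have the smallest variance.

    Runs power iteration on (trace(C) * I - C). C is positive
    semi-definite, so trace(C) bounds its largest eigenvalue and the
    dominant eigenvector of the shifted matrix is the eigenvector of C
    with the smallest eigenvalue.
    """
    C = covariance(rows)
    d = len(C)
    shift = sum(C[i][i] for i in range(d))
    M = [[(shift if i == j else 0.0) - C[i][j] for j in range(d)]
         for i in range(d)]
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iters):
        u = [sum(M[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(c * c for c in u))
        if norm == 0.0:  # all rows identical: every direction ties
            break
        v = [c / norm for c in u]
    return v


# Hypothetical per-sample gradients: widely spread along the first
# coordinate, tightly clustered along the second.
grads = [[2.0, 0.1], [-2.0, -0.1], [1.0, -0.1], [-1.0, 0.1]]
direction = min_variance_direction(grads)
print(direction)
```

For these values the returned vector lies almost entirely along the second coordinate, the axis on which the sample gradients vary least. The sign of the vector is arbitrary, and the power iteration assumes the starting vector is not orthogonal to the target direction.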

Embodiment 4

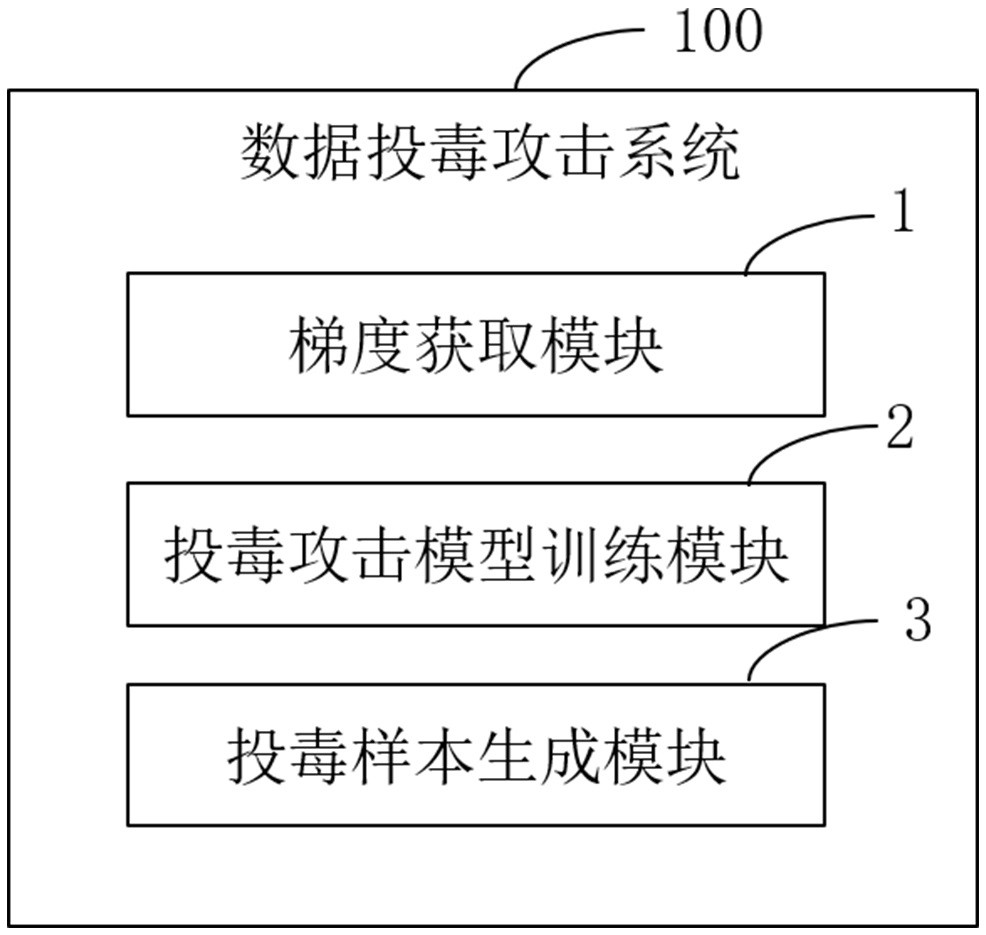

[0081] As another aspect of the embodiments of the present disclosure, this embodiment provides a data poisoning attack system 100 which, as shown in Figure 3, includes:

[0082] Gradient acquisition module 1, which establishes the target model and obtains the gradient of the weight parameter w in the target model.

[0083] Specifically, define the target model of the attack and the original training set D = (X, Y), where X is the feature set and Y is the label set; each training sample belongs to D.

[0084] Calculate the gradient of the weight parameter w. The gradient is calculated as follows:

[0085] (1)

[0086] In formula (1), the loss function is evaluated on training samples from the original training set D = (X, Y), where X is the feature set, Y is the label set, and b is the bias parameter of the model.

[0087] Poisoning attack model training module 2, which calculates the direction in which the data distribution of the gradient has the smallest variance; this direction is used ...
