Behavior recognition system and method based on multi-position sensor feature fusion

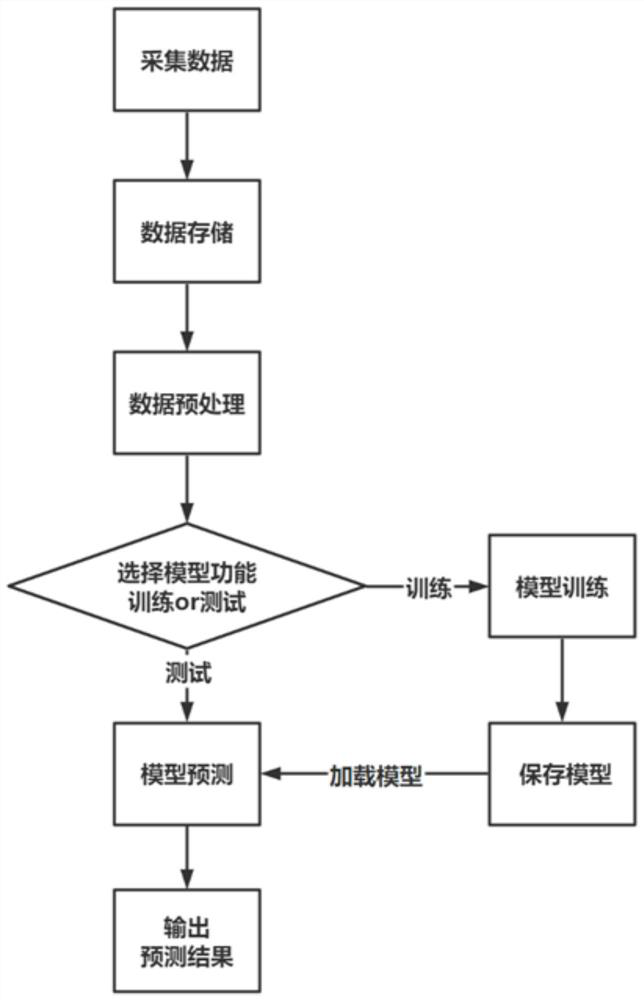

A feature fusion and recognition technology applied in sensors, neural learning methods, and character and pattern recognition. It addresses shortcomings of prior approaches, which ignore spatial dependencies, do not consider the spatial and temporal dependencies of axial data, and cannot effectively extract spatial features from multi-dimensional time series data, with the aim of identifying human behavior accurately.

Embodiment Construction

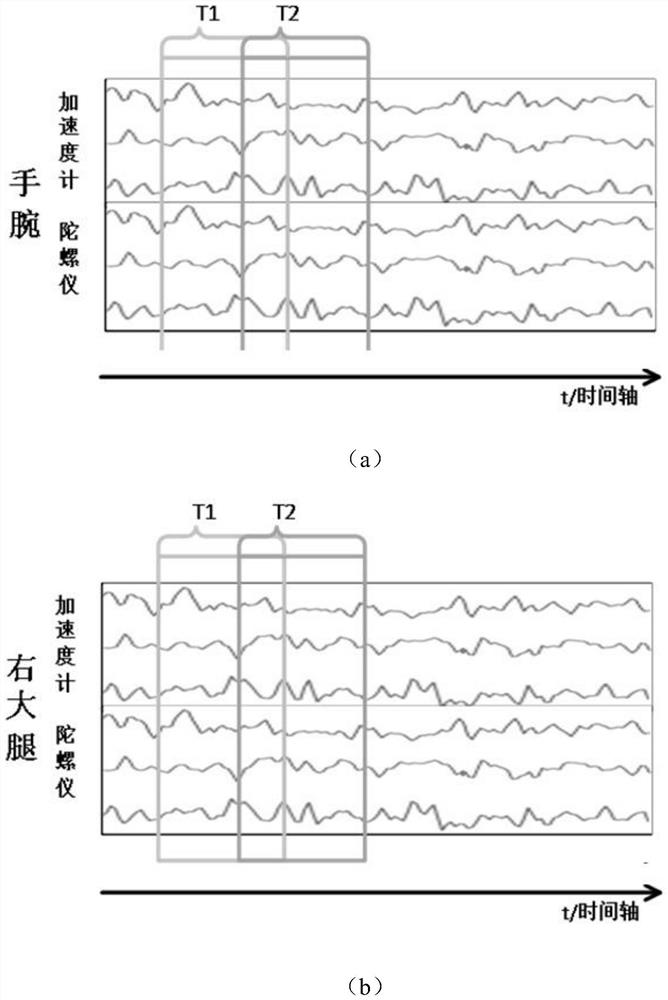

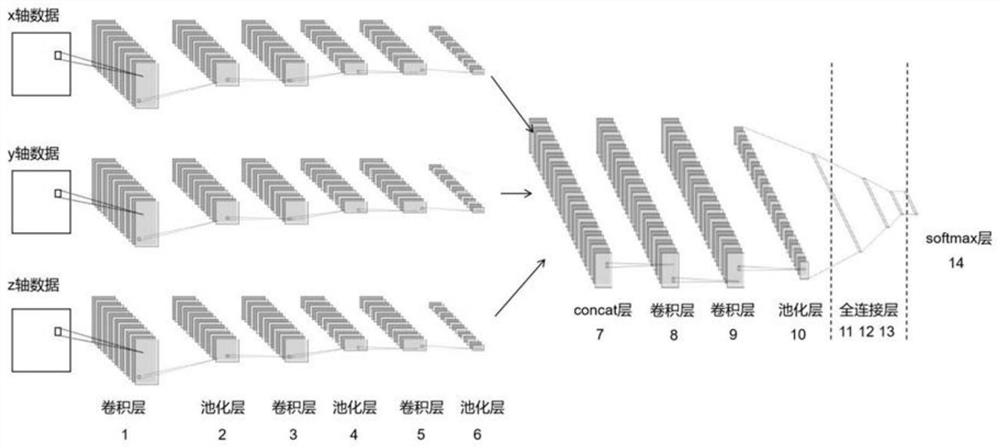

[0057] When processing multi-sensor, multi-dimensional data, a two-dimensional convolution kernel jointly considers the temporal dependence of the multi-dimensional data and the spatial dependence between data from different types of sensors. However, most researchers simply arrange the data from multiple sensors side by side and concatenate them, and the spatial features this approach can extract are limited. In theory, the different axial data of a single sensor are independent of each other and only weakly correlated, and the axial data of different sensors are not correlated with each other. Simple concatenation can therefore only mine the spatial dependencies between different axes of the same sensor and between different axial data of different sensors, so the spatial features it extracts are limited. From the data point of view, we selected five triaxial sensors from the public SKODA dataset and calculated the correlation between the data of each axis. As shown in Figures 4 to 6, the weak c...
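The per-axis correlation analysis described in this paragraph can be illustrated with a minimal sketch. It assumes the correlation measure is the Pearson coefficient, which the text does not state, and it uses synthetic independent noise in place of the SKODA recordings. The helper names `pearson` and `correlation_matrix` and the channel labels are hypothetical and chosen only for illustration.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(channels):
    """Pairwise correlations; `channels` maps a channel name to its samples."""
    names = list(channels)
    matrix = [[pearson(channels[a], channels[b]) for b in names] for a in names]
    return names, matrix


# Synthetic stand-in data (NOT the SKODA dataset): five triaxial sensors,
# each axis filled with independent Gaussian noise of 500 samples.
rng = random.Random(0)
channels = {
    f"sensor{s}_{axis}": [rng.gauss(0.0, 1.0) for _ in range(500)]
    for s in range(1, 6)
    for axis in "xyz"
}

names, corr = correlation_matrix(channels)

# Largest absolute correlation between two different channels.
max_off_diagonal = max(
    abs(corr[i][j])
    for i in range(len(names))
    for j in range(len(names))
    if i != j
)
print(len(names), "channels; max |r| between distinct channels:", max_off_diagonal)
```

With real recordings, each list in `channels` would hold the samples of one axis of one sensor, and the resulting 15 x 15 matrix corresponds to the kind of per-axis correlation view the paragraph attributes to its figures.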
