Optimized training method for a neural network equalizer

A technology involving neural networks and training methods, applied in fields such as biological neural network models and physical realization. It addresses problems such as poor optimization of the equalizer, non-convergence of the training process, and failure to reach the equalizer's training quality target, with the effects of improving usability and reducing training time overhead.


Embodiment Construction

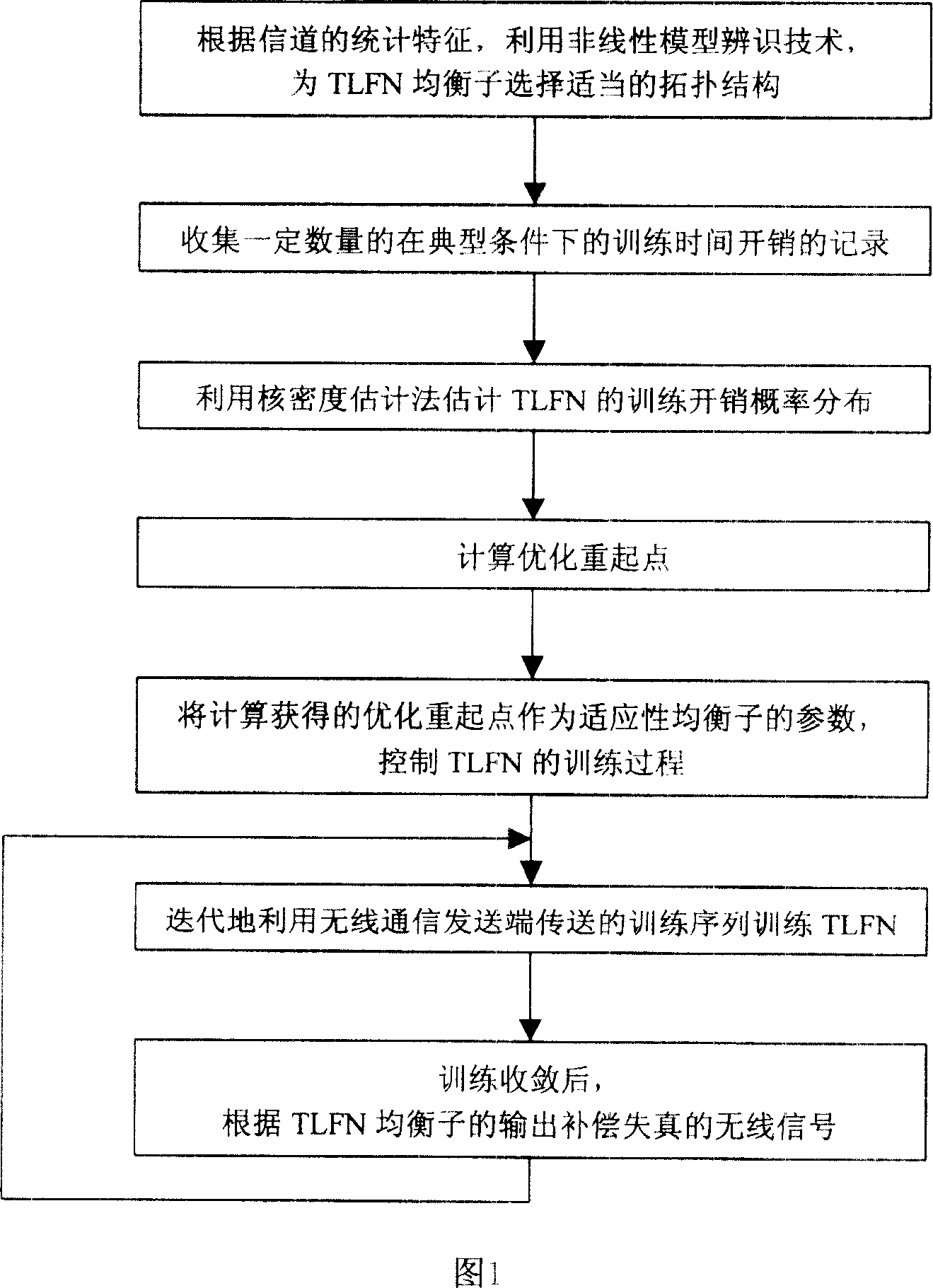

[0018] Because the distribution of neural network training time cost is heavy-tailed, and heavy-tailed computational cost distributions are common in NP optimization problems, the methods used to suppress heavy tails in NP optimization problems can be applied to make neural network training converge. Following this idea, the present invention proposes an optimized training method that improves the training efficiency of feedforward neural networks.
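The paragraph does not name the specific heavy-tail suppression technique. A well-known one from combinatorial search is randomized restarts with a cutoff: abandon any run that exceeds a step budget and start again from a fresh random initialization, so that rare very long runs are cut short. The sketch below assumes that technique; `train_with_restarts`, `toy_heavy_tailed_run`, the Pareto stand-in for training time, and all parameter values are hypothetical illustrations, not the patent's method.

```python
import random
from typing import Callable, Optional, Tuple

# Hypothetical interface: one training run gets its own RNG (for a fresh
# random weight initialization) and a step budget, and reports
# (converged, steps_used).
TrainRun = Callable[[random.Random, int], Tuple[bool, int]]


def train_with_restarts(train_once: TrainRun, cutoff: int, max_restarts: int,
                        seed: int = 0) -> Tuple[Optional[int], int]:
    """Restart training from a new random initialization whenever a run
    exceeds `cutoff` steps.

    Returns (index of the successful attempt or None, total steps spent).
    """
    master = random.Random(seed)
    total_steps = 0
    for attempt in range(1, max_restarts + 1):
        run_rng = random.Random(master.getrandbits(32))  # fresh init per run
        converged, steps = train_once(run_rng, cutoff)
        total_steps += steps
        if converged:
            return attempt, total_steps
    return None, total_steps


def toy_heavy_tailed_run(rng: random.Random, cutoff: int) -> Tuple[bool, int]:
    """Stand-in for one training run (assumption, not real training).

    Steps-to-convergence are drawn from a Pareto distribution; with
    alpha < 1 the mean is infinite, i.e. the cost distribution is heavy-tailed.
    """
    needed = int(rng.paretovariate(0.8))  # always >= 1
    if needed <= cutoff:
        return True, needed
    return False, cutoff  # run abandoned at the cutoff


if __name__ == "__main__":
    attempt, cost = train_with_restarts(toy_heavy_tailed_run, cutoff=20,
                                        max_restarts=50, seed=1)
    print("converged on attempt", attempt, "after", cost, "total steps")
```

The cutoff bounds the cost of every failed attempt, so the total cost is at most `cutoff * max_restarts` no matter how long the tail of a single run is; choosing the cutoff well is the main design question in such schemes.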

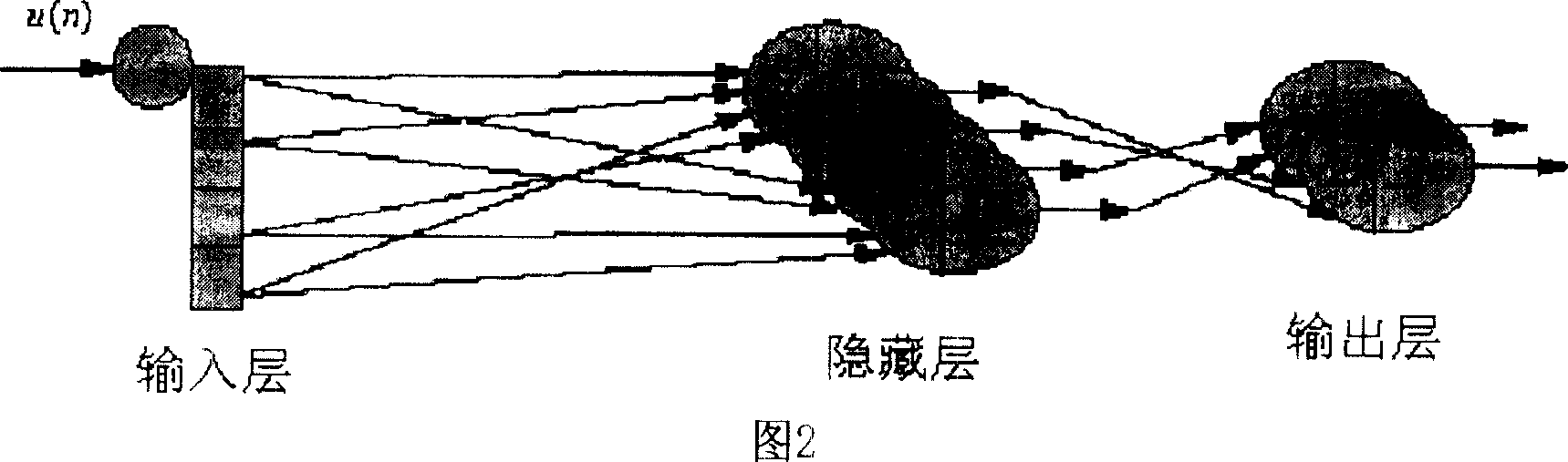

[0019] The present invention takes Time-Lagged Feedforward Networks (TLFN) as an embodiment. This type of network topology has both long-range and short-range memory, so it can flexibly trade off the long-range correlation of the modeled object's dynamic behavior against its short-range burstiness. Moreover, this type of network has a feedforward structure, so the computational complexity of its training can be kept low. The optimized training method of the present invention can al...
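To make the long-range/short-range trade-off concrete, here is a minimal sketch of a "focused" TLFN, where memory sits only at the input and feeds a static feedforward layer. The choice of gamma memory as the memory kernel, the class names `GammaMemory` and `FocusedTLFN`, and all sizes are assumptions for illustration; the section does not specify the memory structure. The parameter `mu` sets the trade-off: `mu = 1` gives a plain tapped delay line (short, sharp memory), while smaller `mu` reaches further into the past at lower time resolution.

```python
import math
import random
from typing import List, Sequence


class GammaMemory:
    """Gamma memory (assumed memory kernel for this sketch).

    Tap 0 holds the current input; tap k is updated as
        g_k[n] = (1 - mu) * g_k[n-1] + mu * g_{k-1}[n-1].
    mu = 1 reduces it to an ordinary tapped delay line; smaller mu spreads
    each tap over a longer past (memory depth roughly order / mu).
    """

    def __init__(self, order: int, mu: float) -> None:
        if not 0.0 < mu <= 1.0:
            raise ValueError("mu must be in (0, 1]")
        self.mu = mu
        self.taps = [0.0] * (order + 1)

    def step(self, x: float) -> List[float]:
        prev = self.taps[:]  # tap values at time n-1
        self.taps[0] = x
        for k in range(1, len(self.taps)):
            self.taps[k] = (1.0 - self.mu) * prev[k] + self.mu * prev[k - 1]
        return list(self.taps)


class FocusedTLFN:
    """Input memory followed by one tanh hidden layer and a linear output
    (e.g. an equalized sample). Weights are random, i.e. untrained."""

    def __init__(self, order: int, mu: float, hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        n_in = order + 1
        self.memory = GammaMemory(order, mu)
        self.w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                         for _ in range(hidden)]
        self.b_hidden = [0.0] * hidden
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
        self.b_out = 0.0

    def forward(self, x: float) -> float:
        """Push one input sample through the memory and the feedforward layers."""
        taps = self.memory.step(x)
        h = [math.tanh(sum(w * t for w, t in zip(row, taps)) + b)
             for row, b in zip(self.w_hidden, self.b_hidden)]
        return sum(w * v for w, v in zip(self.w_out, h)) + self.b_out

    def run(self, signal: Sequence[float]) -> List[float]:
        """Process a whole signal; memory state carries over between calls."""
        return [self.forward(x) for x in signal]
```

Because the memory is linear and sits in front of a purely feedforward layer, the trainable part stays a static network, which is consistent with the paragraph's point that training complexity can be kept low.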
