Information processing device

A technology for an information processing device and its instruction buffers, applied in areas such as program control, computation using denominational number representation, and instruments. It addresses the problems of long processing time, reduced efficiency, and growth in the hardware (instruction buffers) of the information processing device, so as to reduce disruption in pipeline processing and restrict the increase in instruction buffer hardware.


Second embodiment

[0122] FIG. 14 is a diagram showing the configuration of the information processing device according to the second embodiment of the present invention. The information processing device shown in FIG. 14 is a microprocessor with an on-chip CPU 40, a cache memory unit 50, and a memory bus access portion 60. Everything to the left of the memory bus access portion 60 is outside the chip, where the main memory 64 is connected via the external memory bus 62.

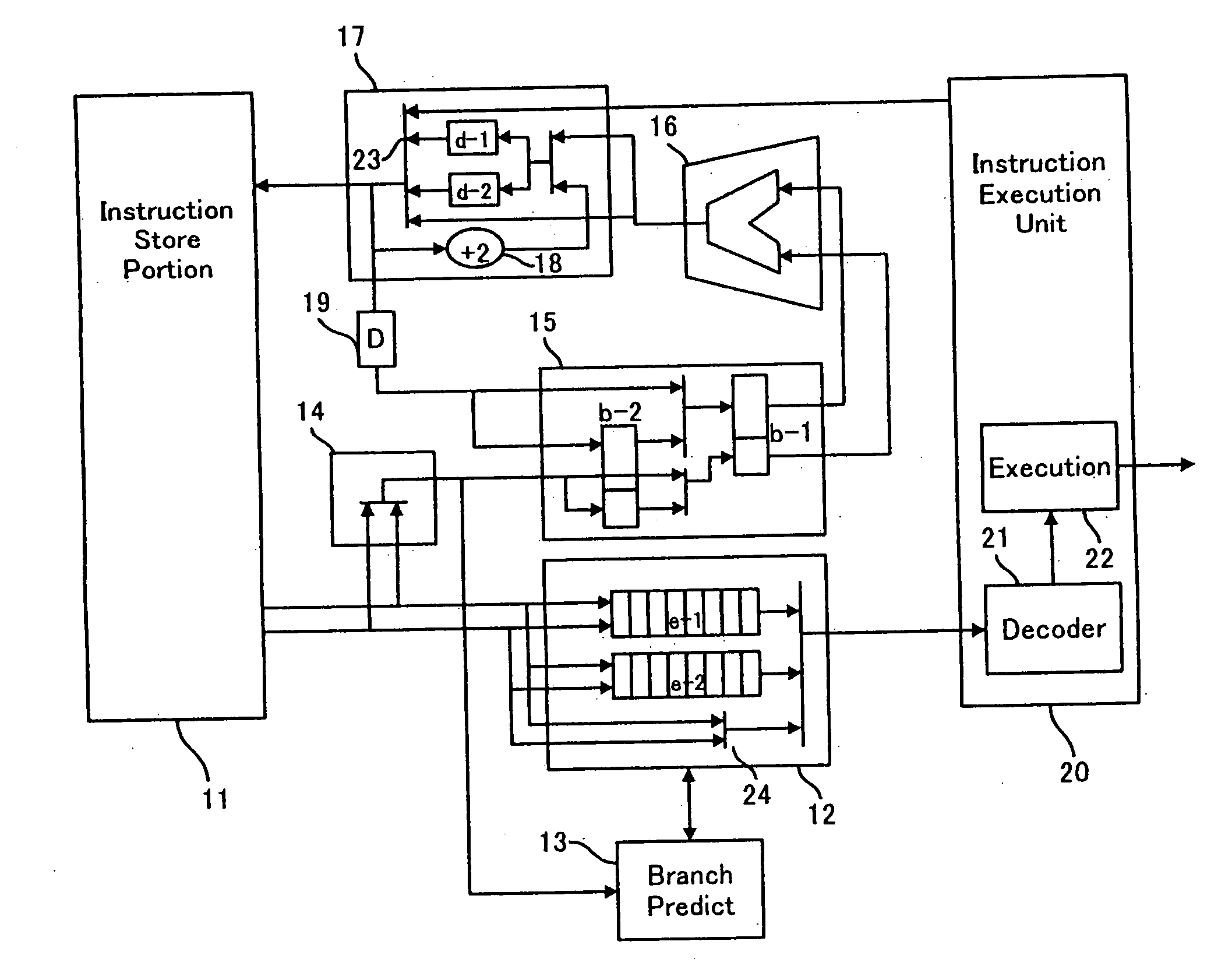

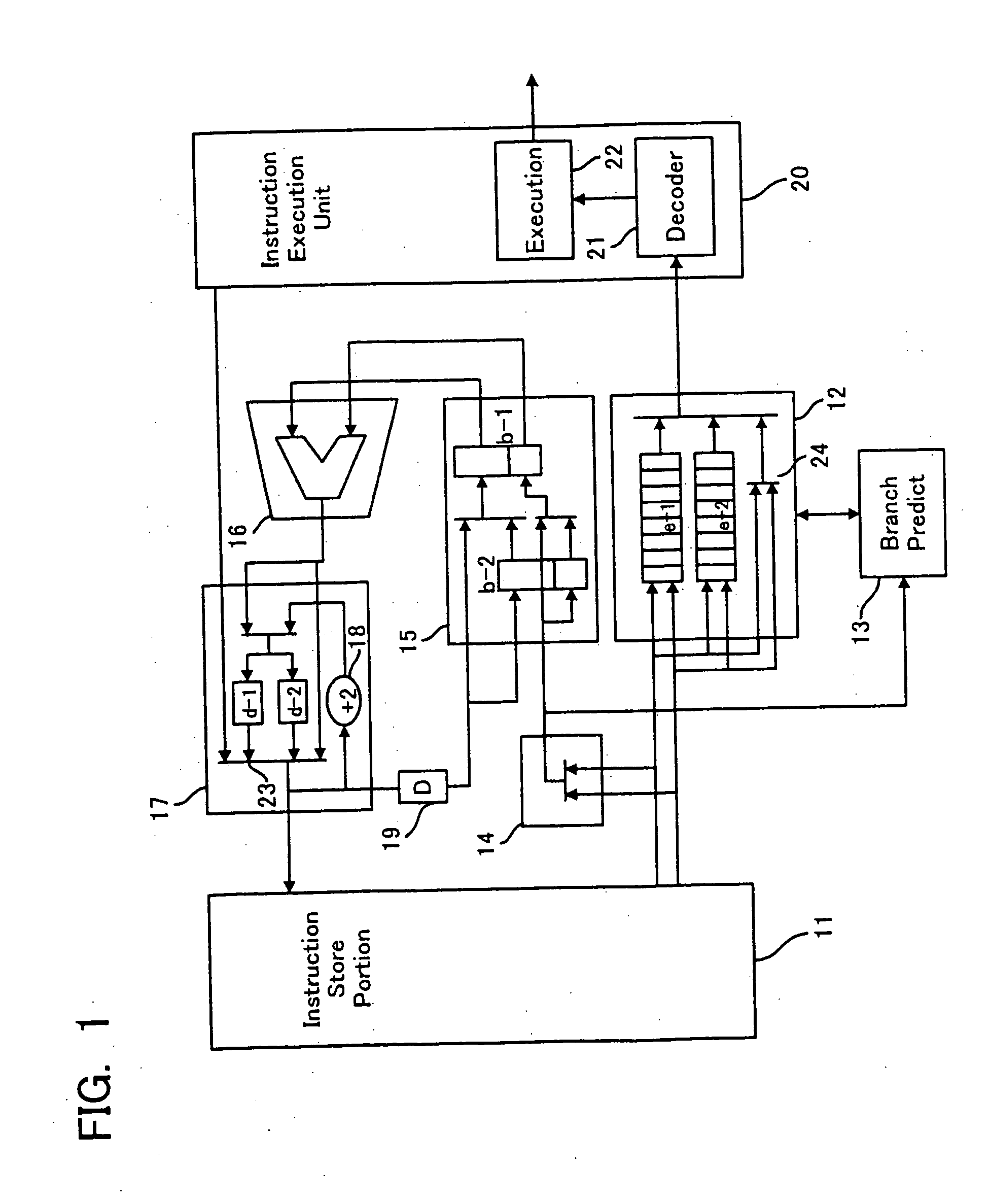

[0123] The CPU 40 comprises an instruction decoder and execution portion 49, which decodes and executes instructions. The CPU 40 shown in FIG. 14 also comprises dual-instruction-fetch-type instruction fetch portions 410, 411, which fetch the instructions on both the sequential side and the target side of a branch instruction at the same time. Furthermore, the CPU 40 has instruction buffers 470, 471, which store the instructions fetched on the sequential side and on the target side. Instructions selected by the selector 48 from amo...
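The dual-fetch arrangement described in paragraph [0123] can be illustrated with a small software model. This is only a sketch under stated assumptions, not the patented circuit: the class name `DualFetchFrontEnd`, the dictionary used as program storage, the mapping of buffer 470 to the sequential side and buffer 471 to the target side, and the rule that the selector picks a buffer by branch outcome and discards the other are all hypothetical, since the source text is truncated before it explains how the selector 48 chooses.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class DualFetchFrontEnd:
    """Toy model of a dual-instruction-fetch front end (hypothetical)."""

    # Hypothetical program store: address -> instruction text.
    memory: dict
    # Assumed mapping: buffer 470 holds the sequential side.
    seq_buffer: deque = field(default_factory=deque)
    # Assumed mapping: buffer 471 holds the branch-target side.
    target_buffer: deque = field(default_factory=deque)

    def fetch_both(self, seq_addr, target_addr, count=2):
        """Fill both buffers in the same step, as fetch portions 410, 411 would."""
        for offset in range(count):
            if seq_addr + offset in self.memory:
                self.seq_buffer.append(self.memory[seq_addr + offset])
            if target_addr + offset in self.memory:
                self.target_buffer.append(self.memory[target_addr + offset])

    def select(self, branch_taken):
        """Stand-in for selector 48: return one side and drop the other.

        Selecting by branch outcome and flushing the unused side is an
        assumption made for this sketch.
        """
        chosen = self.target_buffer if branch_taken else self.seq_buffer
        instructions = list(chosen)
        self.seq_buffer.clear()
        self.target_buffer.clear()
        return instructions


if __name__ == "__main__":
    program = {0: "add", 1: "branch 10", 2: "sub", 3: "mul", 10: "load", 11: "store"}
    front_end = DualFetchFrontEnd(program)
    # The branch at address 1 falls through to 2 or jumps to 10.
    front_end.fetch_both(seq_addr=2, target_addr=10)
    print(front_end.select(branch_taken=True))
```

Because both paths are already buffered when the branch resolves, the model never has to wait for a new fetch after the decision, which is the kind of pipeline disruption the dual-fetch design aims to avoid.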
