Web platform for interactive design, synthesis and delivery of 3D character motion data

A technology for the interactive design and synthesis of character motion data, applied in the field of video animation. It addresses the cost, time, and tedium of manual animation, as well as the complex equipment and actors that motion capture requires.

Embodiment Construction

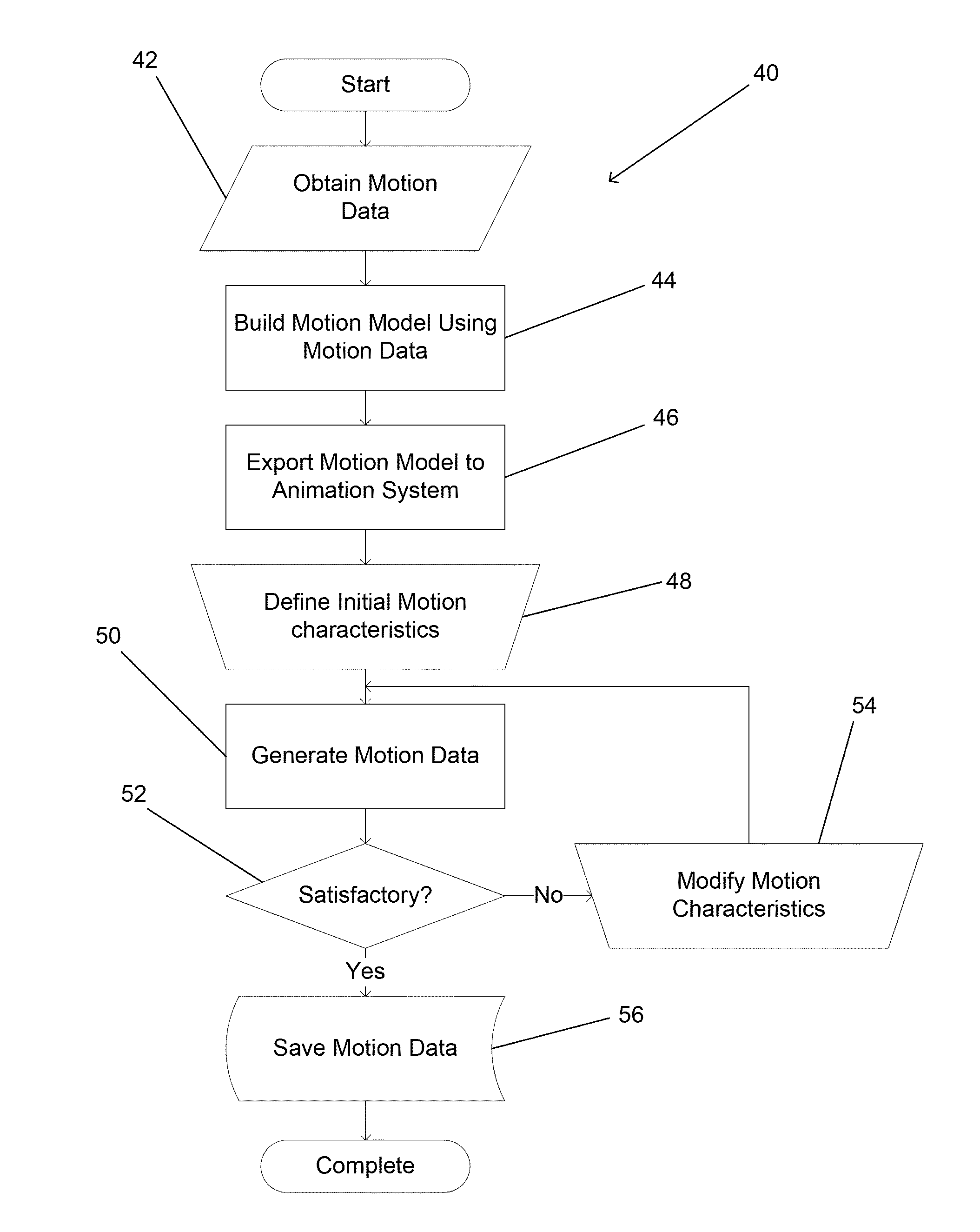

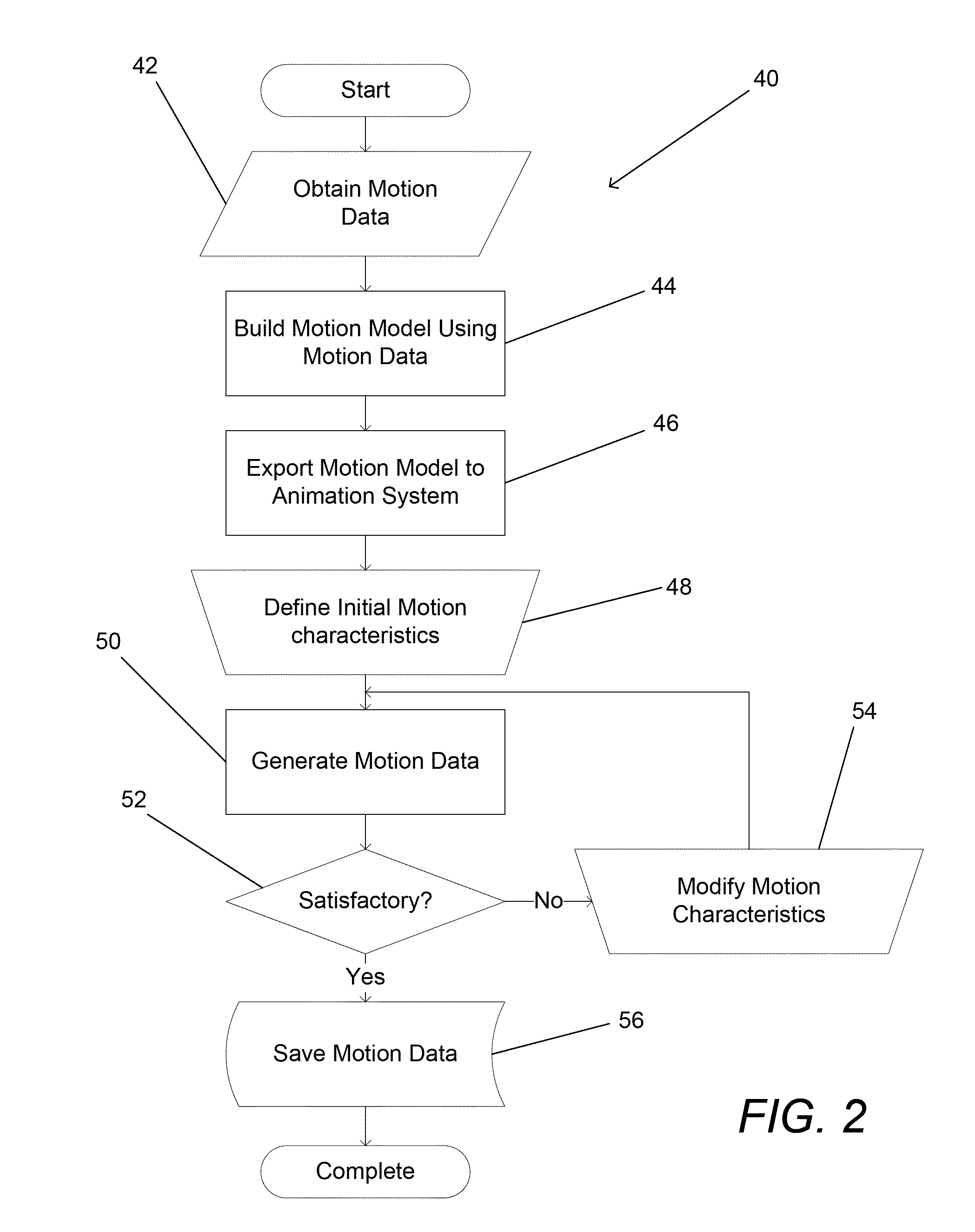

[0039] Turning now to the drawings, animation systems and methods for real-time interactive generation of synthetic motion data for the animation of 3D characters are illustrated. The term synthetic motion data describes motion data that is generated by a machine. Synthetic motion data is distinct from manually generated motion data, where a human animator defines the motion curve of each Avar, and from actual motion data obtained via motion capture. Animation systems in accordance with many embodiments of the invention are configured to obtain a high-level description of a desired motion sequence from an animator and use that description to generate synthetic motion data corresponding to the desired motion sequence. Instead of directly editing the motion data, the animator can edit the high-level description until the generated synthetic motion data meets the animator's needs.
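The edit-and-regenerate workflow described in paragraph [0039] can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `MotionDescription` fields, the `generate_synthetic_motion` function, and the avar names `left_hip` and `right_hip` do not come from the source, and the sinusoid is only a stand-in for whatever generative model an actual embodiment would use.

```python
import math
from dataclasses import dataclass


@dataclass
class MotionDescription:
    """Hypothetical high-level description that an animator edits."""
    motion_type: str   # e.g. "walk" (label only in this sketch)
    speed: float       # gait cycles per second (assumed parameter)
    stride: float      # peak avar amplitude in degrees (assumed parameter)
    duration: float    # length of the sequence in seconds
    fps: int = 30      # sampling rate of the generated curves


def generate_synthetic_motion(desc: MotionDescription) -> dict:
    """Return one motion curve per avar, sampled once per frame.

    A sinusoid stands in for the real generative model: the point is
    that the curves are derived from the description, not hand-edited.
    """
    frame_count = round(desc.duration * desc.fps)
    curves = {"left_hip": [], "right_hip": []}
    for frame in range(frame_count):
        t = frame / desc.fps
        phase = 2 * math.pi * desc.speed * t
        curves["left_hip"].append(desc.stride * math.sin(phase))
        # The opposite leg runs half a cycle out of phase.
        curves["right_hip"].append(desc.stride * math.sin(phase + math.pi))
    return curves


# The animator never touches the curves directly; they adjust the
# description and regenerate until the result is acceptable.
desc = MotionDescription(motion_type="walk", speed=1.0, stride=30.0, duration=2.0)
curves = generate_synthetic_motion(desc)

desc.stride = 45.0  # animator asks for a longer stride
revised = generate_synthetic_motion(desc)
```

In this sketch the description is the only thing the animator changes, which mirrors the contrast the paragraph draws with manually defining the motion curve of each Avar.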

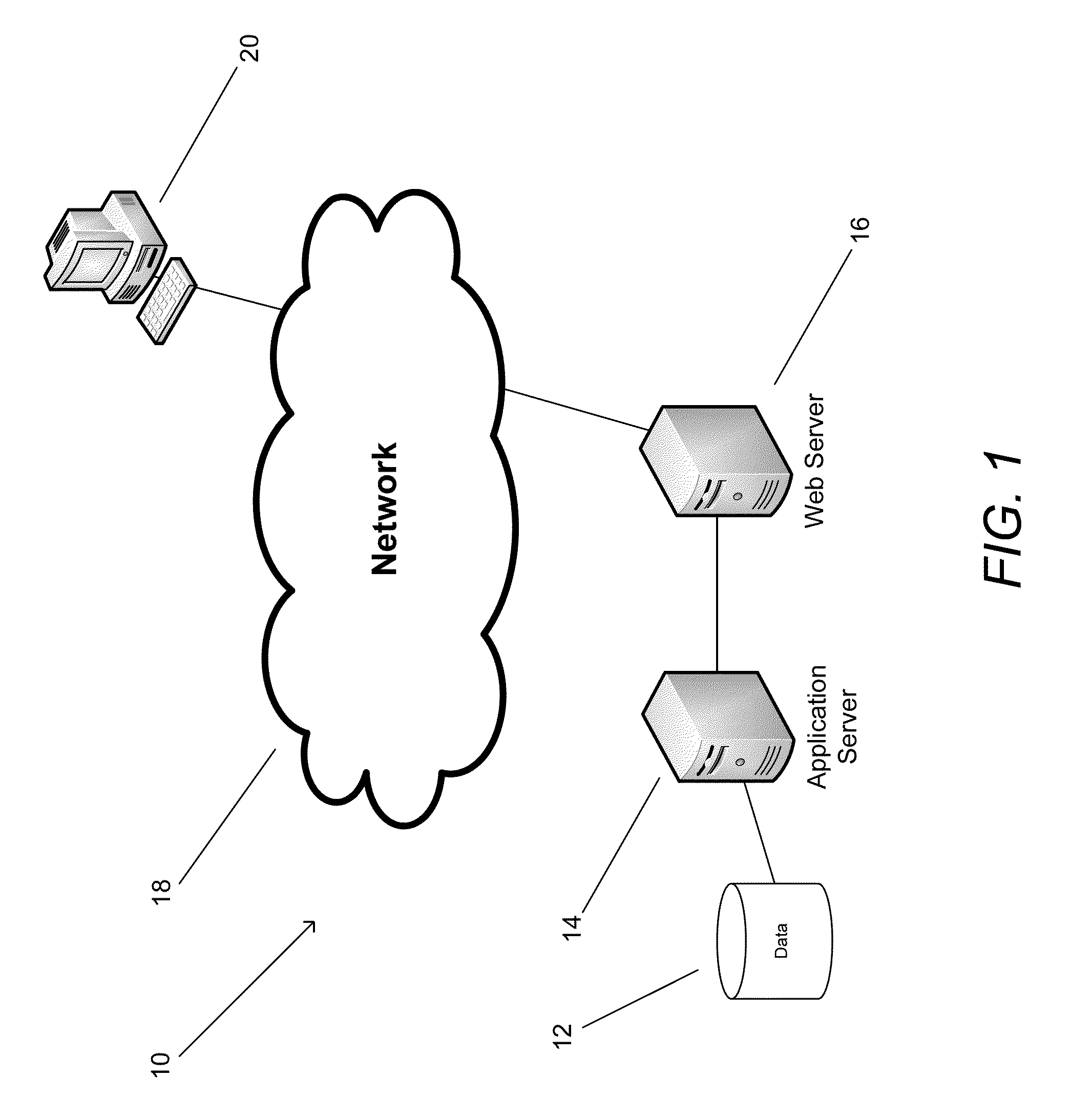

[0040] In a number of embodiments, the animation system distributes processing between a user's comp...
