Improved physical object handling based on deep learning

A deep learning and physical object technology, applied in image enhancement, image analysis, program-controlled manipulators, etc., that can solve the problems of not disclosing the details of training and using convolutional neural networks, not disclosing the handling of 3D objects, ...

Examples

Example 1

Embodiments According to the Invention

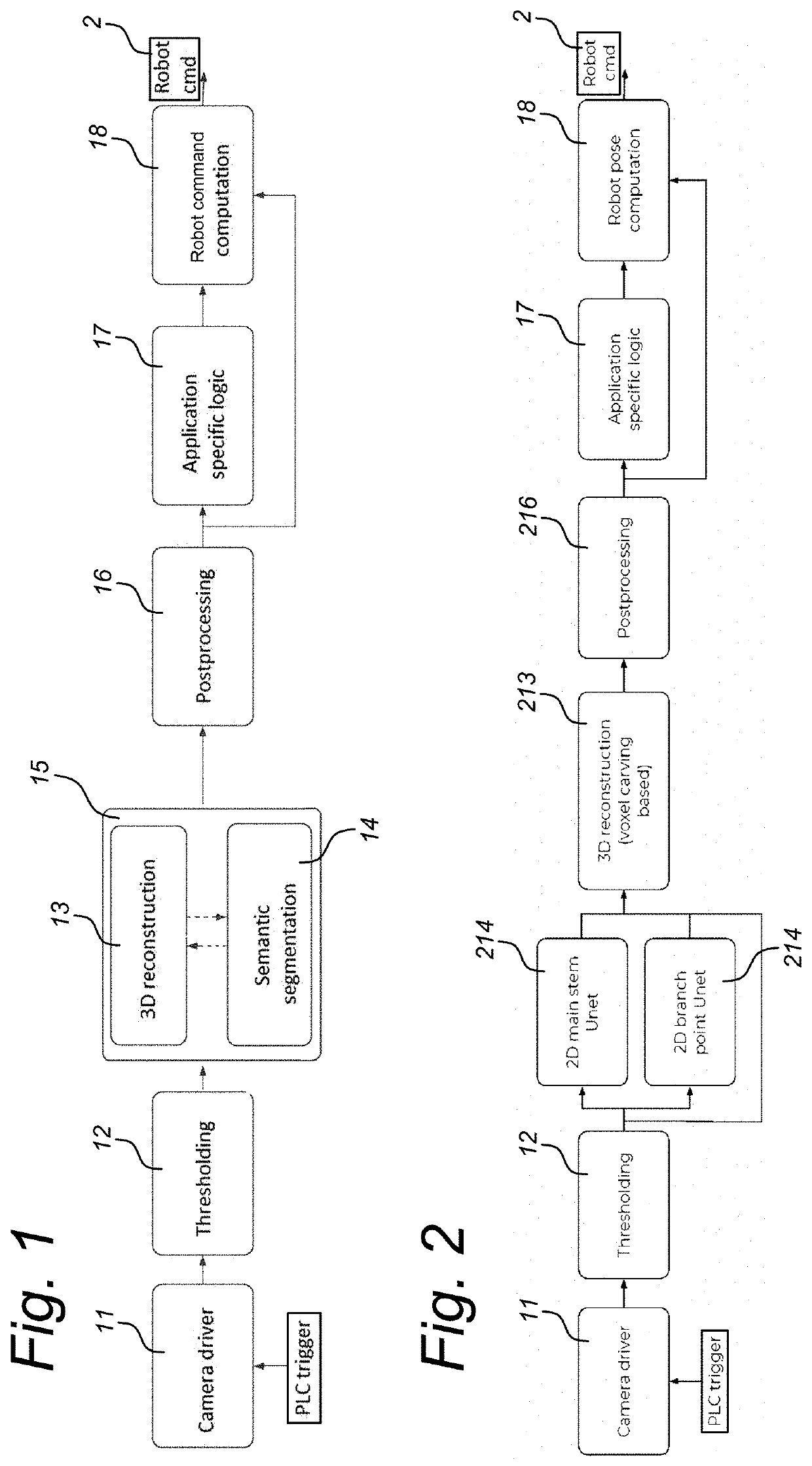

[0143] FIG. 1 illustrates example embodiments of a method according to the invention. It relates to a method for generating a robot command (2) for handling a three-dimensional (3D) physical object (1) present within a reference volume and comprising a 3D surface. It comprises the step of, based on a PLC trigger, obtaining (11) at least two images (30) of said physical object (1) from a plurality of cameras (3) positioned at different respective angles with respect to said object (1).

[0144] Each of the images is subjected to a threshold (12), preferably an application-specific, pre-determined threshold, to convert it into black and white. The result may be fed as a black and white foreground mask to the next step, either replacing the original images or in addition to the original images.
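The thresholding step can be sketched as follows. This is a minimal illustration only, assuming grayscale images stored as nested lists of 0..255 intensities; the names `to_foreground_mask` and `next_step_input` and the example threshold are hypothetical and do not come from the patent text. A production pipeline would more likely use an array or image library.

```python
from typing import Dict, List

# Assumption: a grayscale image is a list of rows, each a list of 0..255 values.
Image = List[List[int]]


def to_foreground_mask(image: Image, threshold: int) -> Image:
    """Convert an image to black and white: 255 above the threshold, else 0."""
    return [[255 if pixel > threshold else 0 for pixel in row] for row in image]


def next_step_input(image: Image, threshold: int, keep_original: bool) -> Dict[str, Image]:
    """Build the input for the next step.

    The mask either replaces the original image (keep_original=False)
    or is passed along in addition to it (keep_original=True).
    """
    payload = {"mask": to_foreground_mask(image, threshold)}
    if keep_original:
        payload["image"] = image
    return payload


if __name__ == "__main__":
    sample = [[10, 200], [128, 129]]
    # The threshold value here is arbitrary; the text only says it is pre-determined.
    print(next_step_input(sample, threshold=128, keep_original=True))
```

The strict comparison (`>`) is a design choice of this sketch; the source does not say whether pixels equal to the threshold count as foreground.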

[0145] The next step comprises generating (15), with respect to the 3D surface of said object (1), a voxel representation segmented based on said at least tw...

Example 2

Embodiments with 2D CNN According to the Invention

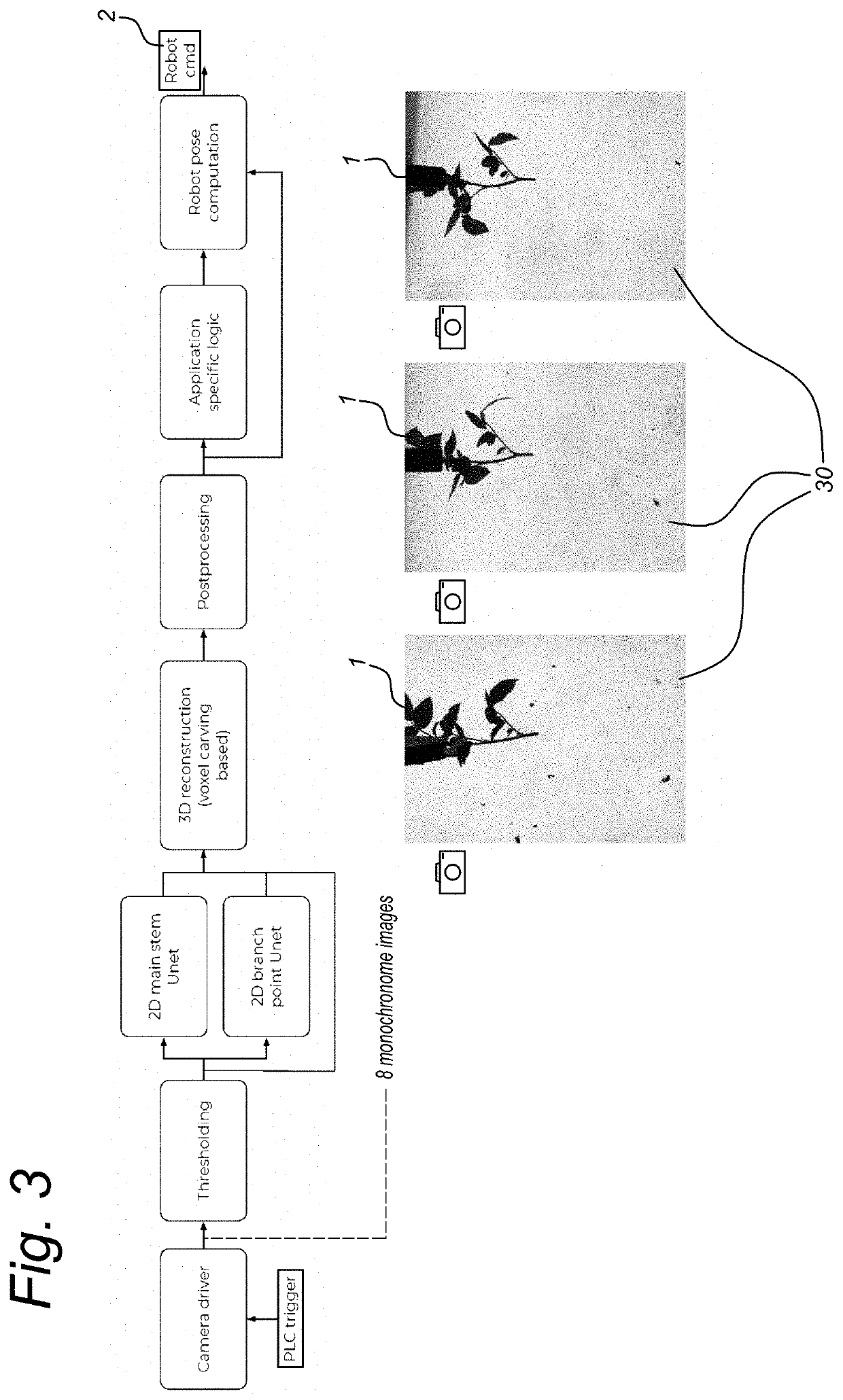

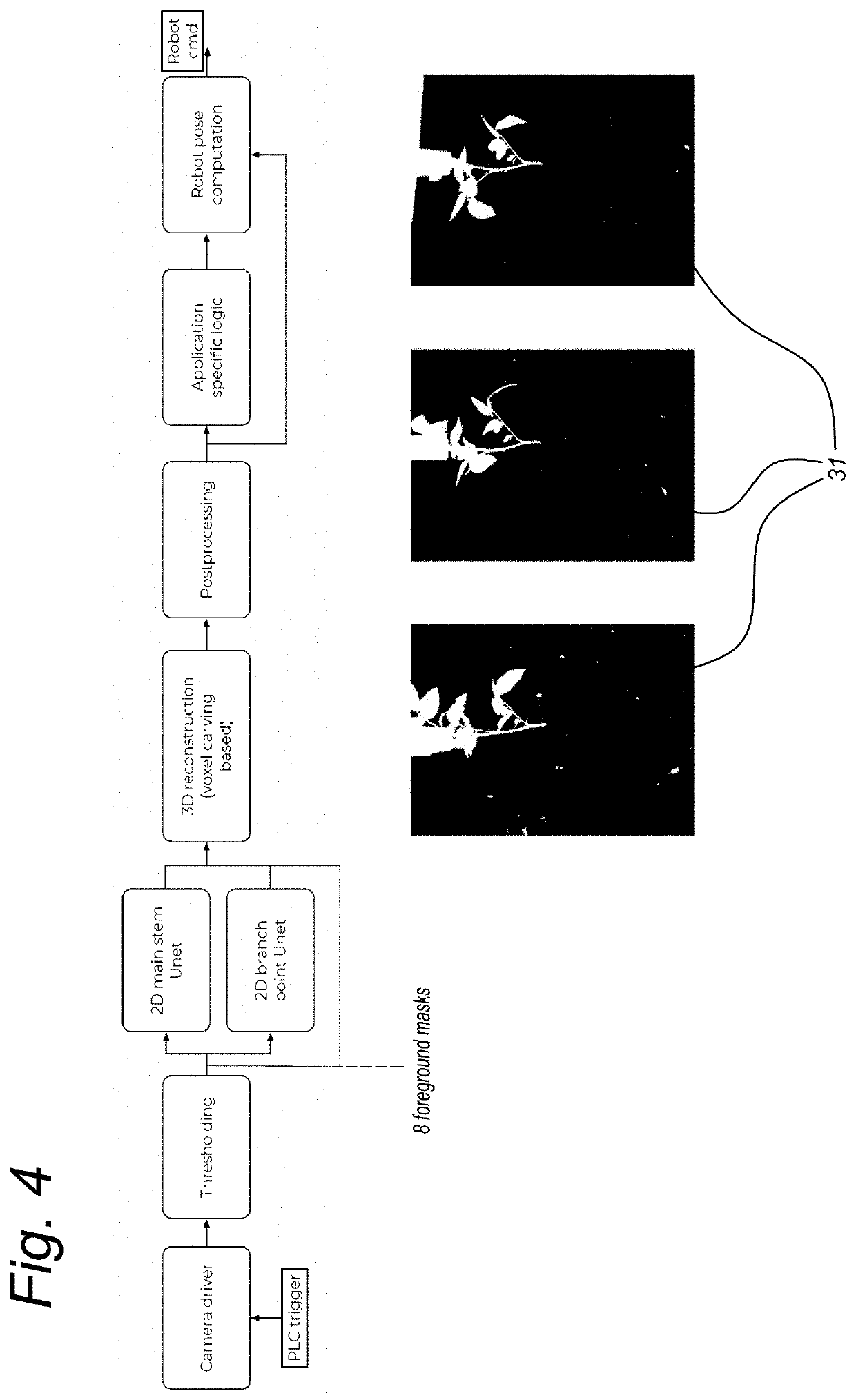

[0151] FIGS. 2-8 illustrate steps of example embodiments of a method according to the invention, wherein the NN is a CNN, particularly a 2D U-net.

[0152] FIG. 2 provides an overview of example embodiments of a method according to the invention. In this example, the object (1) is a rose present in the reference volume that is cut into cuttings such that the cuttings may be picked up and planted in a next process step. To this end, the robot element is a robot cutter head (4) that approaches the object and cuts the stem, according to the robot command, at appropriate positions such that cuttings with at least one leaf are created. In particular, the robot command relates to a robot pose that comprises a starting and/or ending position, i.e. a set of three coordinates, e.g., x, y, z, within the reference volume, and, if one of the starting and ending positions is not included, an approaching angle, i.e. a set of three angles, e.g., alpha, bet...
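One way to picture the robot pose described above is as a small data structure. The sketch below is an assumption-laden illustration, not the patent's data format: the class name `RobotPose`, its field names, and the validation rules are hypothetical, and the three angles are kept as an unnamed triple because the source text is truncated at that point.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Three coordinates (x, y, z) within the reference volume, or three angles.
Triple = Tuple[float, float, float]


@dataclass(frozen=True)
class RobotPose:
    """Hypothetical container for the pose carried by a robot command."""

    start: Optional[Triple] = None            # starting position, if given
    end: Optional[Triple] = None              # ending position, if given
    approach_angles: Optional[Triple] = None  # needed when one position is missing

    def __post_init__(self) -> None:
        # The pose comprises a starting and/or ending position.
        if self.start is None and self.end is None:
            raise ValueError("pose needs a starting and/or ending position")
        # If only one of the two positions is present, an approaching angle is required.
        one_missing = self.start is None or self.end is None
        if one_missing and self.approach_angles is None:
            raise ValueError("approaching angle required when one position is missing")


if __name__ == "__main__":
    # Both positions given: no approaching angle needed.
    print(RobotPose(start=(0.1, 0.2, 0.3), end=(0.1, 0.2, 0.5)))
    # Only a starting position: the approaching angle completes the pose.
    print(RobotPose(start=(0.1, 0.2, 0.3), approach_angles=(0.0, 1.57, 0.0)))
```

Validating at construction time means an incomplete pose fails early, before any command would reach the cutter head.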

Example 3

GUI with 2D Annotation According to the Invention

[0160] FIG. 9 illustrates example embodiments of a GUI with 2D annotation. The GUI (90) may be used for training of any NN, preferably a 2D U-net or a 3D PointNet++ or a 3D DGCNN, such as the CNNs of Example 2. The GUI operates on a training set relating to a plurality of training objects (9), in this example a training set with images of several hundred roses, with eight images for each rose taken by eight cameras from eight different angles. Each of the training objects (9) comprises a 3D surface similar to the 3D surface of the object for which the NN is trained, i.e. another rose.
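The organization of such a training set, with eight views per training object, could be sketched as below. This is only an illustration under assumptions: the record layout `(object_id, camera_index, image_path)`, the names `TrainingObject` and `group_views`, and the file names are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Tuple

# Assumption: each record is (object_id, camera_index, image_path).
Record = Tuple[str, int, str]


@dataclass
class TrainingObject:
    """One training object (e.g. one rose) with its images keyed by camera index."""

    object_id: str
    views: Dict[int, str] = field(default_factory=dict)


def group_views(records: Iterable[Record], n_cameras: int = 8) -> List[TrainingObject]:
    """Group image records per object and check each has one image per camera."""
    by_id: Dict[str, TrainingObject] = {}
    for object_id, camera_index, path in records:
        obj = by_id.setdefault(object_id, TrainingObject(object_id))
        obj.views[camera_index] = path
    for obj in by_id.values():
        if sorted(obj.views) != list(range(n_cameras)):
            raise ValueError(f"{obj.object_id}: expected views from {n_cameras} cameras")
    return list(by_id.values())


if __name__ == "__main__":
    records = [("rose_001", cam, f"rose_001_cam{cam}.png") for cam in range(8)]
    for obj in group_views(records):
        print(obj.object_id, len(obj.views), "views")
```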

[0161] However, it should be noted that the NN, when trained for a rose, may also be used for plants with a structure similar to that of a rose, even if the training set did not comprise any training objects other than roses.

[0162] The GUI comprises at least one image view (112) and allows manual annotations (91, 92, 93) to be received with respect to a plurality ...
