Multi-strategy image fusion method under compressed sensing framework

An image fusion method based on compressed sensing, applied in the field of image processing. It addresses the large storage space required for images, the long duration of the fusion process, and the difficulty of compressing and transmitting images, with the effect of reducing the amount of data to be fused, improving the fusion result, and shortening the fusion time.

Embodiment Construction

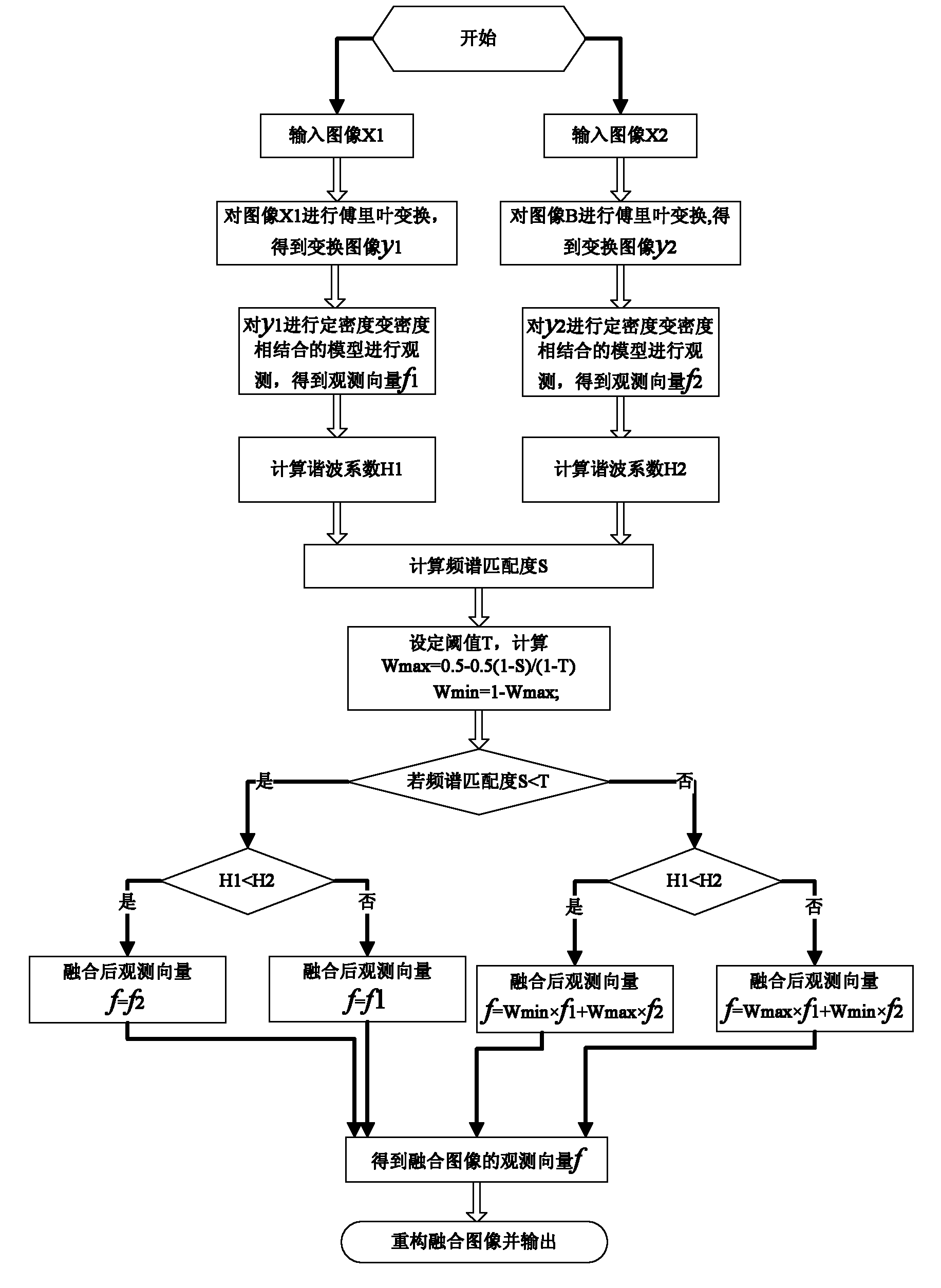

[0037] Referring to Figure 1, the specific implementation process of the present invention is as follows:

[0038] Step 1: Input original image A and original image B, and divide them into local images X1 and X2, respectively, each of size C×C, where C×C is 8×8 or 16×16; this example uses 16×16.
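The block division in Step 1 could be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes non-overlapping blocks in row-major order and image dimensions that are multiples of C, neither of which the text states. The function name `split_into_blocks` and the test image are hypothetical.

```python
import numpy as np

def split_into_blocks(image, c=16):
    """Split a 2-D image into non-overlapping c x c blocks (assumed layout)."""
    h, w = image.shape
    if h % c or w % c:
        raise ValueError("image dimensions must be multiples of the block size")
    # Reshape to (block_row, row_in_block, block_col, col_in_block),
    # then move the two block indices to the front.
    blocks = image.reshape(h // c, c, w // c, c).swapaxes(1, 2)
    # Flatten the block grid so blocks are listed in row-major order.
    return blocks.reshape(-1, c, c)

# Hypothetical 64 x 64 stand-in for original image A.
image_a = np.arange(64 * 64, dtype=float).reshape(64, 64)
blocks_a = split_into_blocks(image_a, c=16)
```

Each entry of `blocks_a` plays the role of one local image X1; original image B would be split the same way to give the X2 blocks.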

[0039] Step 2: Perform Fourier transform on the local image X1 to obtain a Fourier coefficient matrix y1, and perform Fourier transform on the local image X2 to obtain a Fourier coefficient matrix y2.
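As a rough sketch of Step 2, the two-dimensional discrete Fourier transform of a local image can be computed with NumPy's `np.fft.fft2`. The random 16×16 arrays below are hypothetical stand-ins for X1 and X2; the patent does not say which FFT implementation or normalization it uses.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 16 x 16 local images standing in for X1 and X2.
x1 = rng.random((16, 16))
x2 = rng.random((16, 16))

# 2-D discrete Fourier transform of each local image.
# The result is a complex 16 x 16 coefficient matrix with the
# zero-frequency (DC) term at index [0, 0].
y1 = np.fft.fft2(x1)
y2 = np.fft.fft2(x2)

# The transform is invertible, so no information is lost at this stage.
x1_back = np.fft.ifft2(y1).real
```

With NumPy's default (unnormalized) convention, `y1[0, 0]` equals the sum of all pixels in `x1`, which is why the low-frequency coefficients carry most of the block's energy.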

[0040] Step 3: Using a variable-density observation model in which the low-frequency Fourier coefficients are fully sampled, observe the Fourier coefficient matrix y1 to obtain the observation vector f1.

[0041] (3a) Set the sampling model to be a matrix whose entries are only 0 or 1, with the points of value 1 serving as sampling points, and set the matrix B according to the size of the input image A: if the size of the input image A is m×m, then suppose the size of matri...
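The idea of a 0/1 sampling matrix that fully samples the low frequencies can be illustrated with the sketch below. The text above is cut off before it specifies how the matrix is built, so everything here is an assumption made for illustration only: the circular low-frequency region, the exponential decay of sampling probability, and the names `variable_density_mask`, `low_radius` and `decay` are all hypothetical and are not taken from the patent.

```python
import numpy as np

def variable_density_mask(c=16, low_radius=3.0, decay=0.3, seed=0):
    """Build a c x c binary mask: 1 marks a sampled Fourier coefficient.

    Assumed model: coefficients within low_radius of the DC term are always
    sampled; further out, the sampling probability decays exponentially.
    """
    rng = np.random.default_rng(seed)
    # Signed frequency index of each position in np.fft.fft2 layout.
    freq = np.fft.fftfreq(c) * c
    fy, fx = np.meshgrid(freq, freq, indexing="ij")
    radius = np.sqrt(fx ** 2 + fy ** 2)
    # Sampling probability: 1 inside the low-frequency disc, decaying outside.
    prob = np.clip(np.exp(-decay * (radius - low_radius)), 0.0, 1.0)
    mask = (rng.random((c, c)) < prob).astype(np.uint8)
    mask[radius <= low_radius] = 1  # enforce full low-frequency sampling
    return mask, radius

# Hypothetical local image and its Fourier coefficient matrix.
x1 = np.random.default_rng(1).random((16, 16))
y1 = np.fft.fft2(x1)

mask, radius = variable_density_mask(c=16)
# Observation vector: the coefficients at the sampling points only.
f1 = y1[mask == 1]
```

Because `f1` keeps only the sampled coefficients, it is shorter than the full coefficient matrix, which is the source of the reduction in data to be fused.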
