Fast multi-level virtual-real occlusion method in an augmented reality environment

A technology for virtual-real occlusion in augmented reality, applied in image data processing, 3D image processing, instruments, etc. It addresses problems such as long computation time, the inability to obtain depth maps, and the difficulty of processing live video streams in real time, and it meets real-time requirements while achieving a good occlusion effect.


Detailed Description of the Embodiments

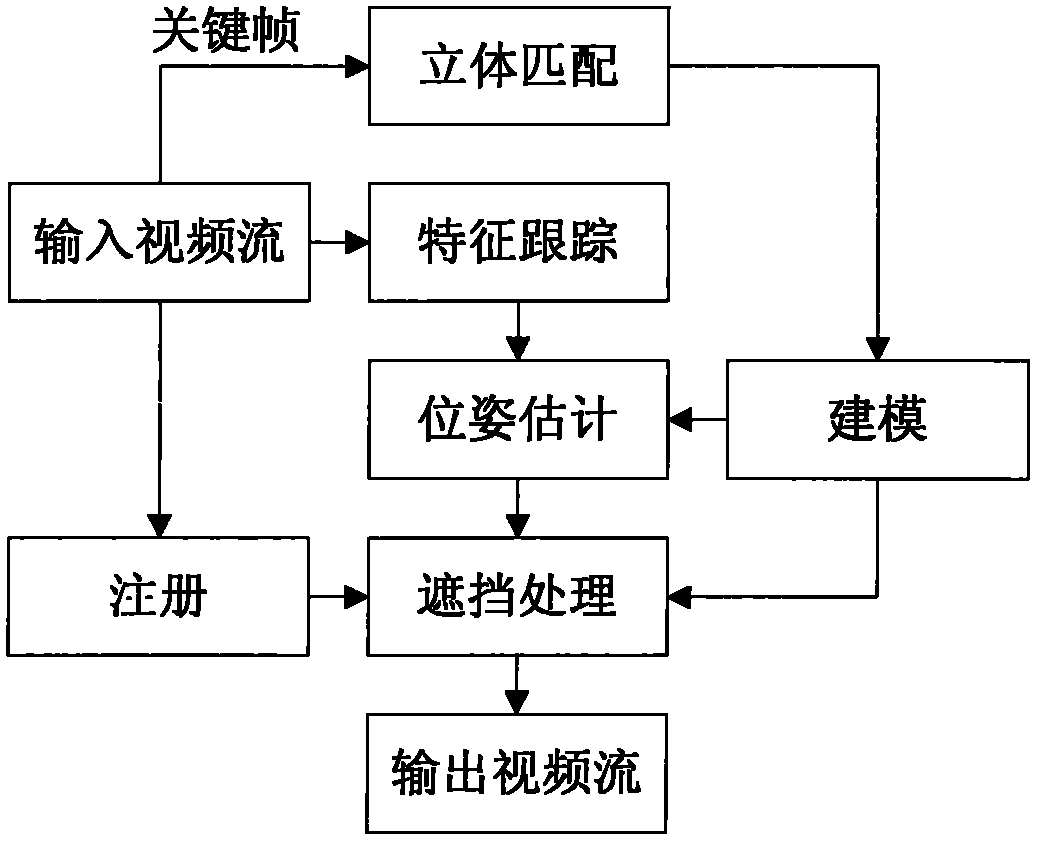

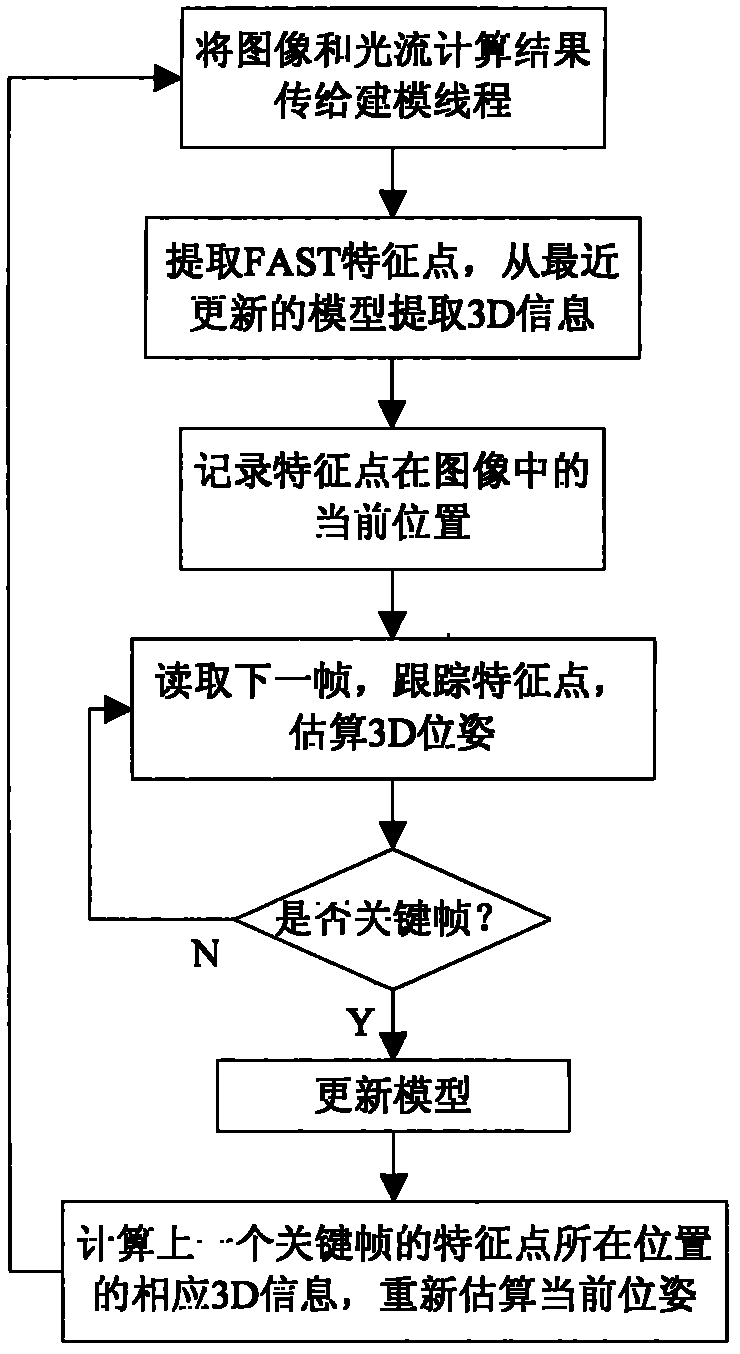

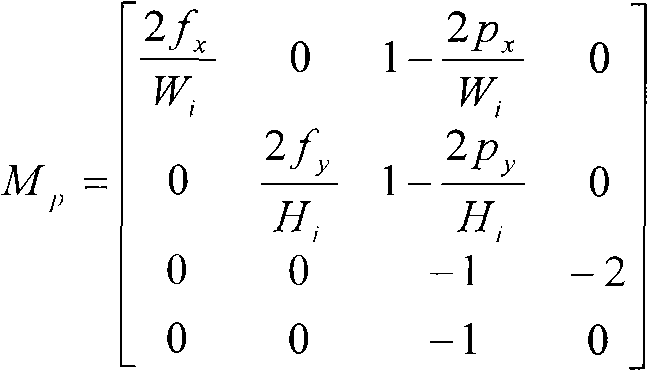

[0026] The invention is a fast multi-level virtual-real occlusion processing method for augmented reality environments. It combines sparse feature point tracking, pose estimation and three-dimensional reconstruction to handle multi-level occlusion between virtual and real objects. The method comprises the following steps:

[0027] 1) Capture real-time dynamic stereoscopic video of the real scene with two cameras;

[0028] 2) At regular intervals, take key frames from the two video streams, compute a dense depth map for each key frame, build a 3D model of the real object to be occluded, and at the same time extract sparse feature points;
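The source states that a dense depth map is computed for each key frame but does not say how it is obtained or how it is then used per pixel. The sketch below assumes rectified stereo images and the standard pinhole relation Z = f·B/d (focal length f in pixels, baseline B, disparity d), and assumes a virtual depth buffer rendered from the same viewpoint. It then applies a per-pixel depth test, one common way to resolve virtual-real occlusion and not necessarily the patent's exact procedure. The function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def disparity_to_depth(disparity: Grid, focal_px: float, baseline_m: float) -> Grid:
    """Convert a rectified-stereo disparity map (pixels) into depth (metres).

    Uses Z = f * B / d. Pixels with d <= 0 (no stereo match) get depth = inf,
    so they behave like empty background that never hides a virtual object.
    """
    return [
        [focal_px * baseline_m / d if d > 0 else float("inf") for d in row]
        for row in disparity
    ]


def occlusion_mask(real_depth: Grid, virtual_depth: Grid) -> List[List[bool]]:
    """Return True where the virtual pixel is visible, i.e. nearer than the real surface.

    virtual_depth holds inf where no virtual object is rendered, so those
    pixels always show the real scene.
    """
    return [
        [v < r for r, v in zip(r_row, v_row)]
        for r_row, v_row in zip(real_depth, virtual_depth)
    ]


if __name__ == "__main__":
    depth = disparity_to_depth([[50, 25], [0, 100]], focal_px=100.0, baseline_m=0.5)
    print(depth)  # [[1.0, 2.0], [inf, 0.5]]
    print(occlusion_mask(depth, [[1.5, 1.5], [1.5, float("inf")]]))
```

With f·B = 50, a disparity of 50 px puts the real surface at 1.0 m, so a virtual point at 1.5 m is hidden there. At a disparity of 25 px (2.0 m) the same virtual point is drawn in front.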

[0029] In step 2, a key frame is a stereo image pair used for 3D modeling of the real scene. The interval between successive key frames equals the time taken to complete dense stereo matching and modeling for the previous key frame pair. The significance of this ...
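The key-frame rule in [0029] can be illustrated with a small scheduler: a new key frame is taken as soon as matching and modeling of the previous one have finished. This is a sketch under stated assumptions. Processing is assumed to start the moment a key frame is captured, and the hypothetical callback `processing_time(k)` supplies the seconds needed for key frame k (in a live system this would be measured, not known in advance). Frame indices are advanced in whole frames to avoid accumulating floating-point error.

```python
import math
from typing import Callable, List


def schedule_key_frames(num_frames: int, fps: float,
                        processing_time: Callable[[int], float]) -> List[int]:
    """Return indices of the stereo frame pairs chosen as key frames.

    The next key frame is the first captured frame at or after the moment
    dense matching and modeling of the current key frame complete, so the
    key-frame interval equals the previous key frame's processing time
    (rounded up to a whole number of frames, and at least one frame).
    """
    key_frames: List[int] = []
    frame = 0
    while frame < num_frames:
        key_frames.append(frame)
        k = len(key_frames) - 1  # index of the key frame just taken
        busy_frames = math.ceil(processing_time(k) * fps)
        frame += max(1, busy_frames)  # always move forward by at least one frame
    return key_frames


if __name__ == "__main__":
    # Hypothetical per-key-frame processing times in seconds, cycling.
    times = [0.5, 0.25, 0.75]
    print(schedule_key_frames(20, 10.0, lambda k: times[k % 3]))  # [0, 5, 8, 16]
```

Because the interval adapts to the measured modeling cost, the depth model is refreshed as often as the hardware allows. The frames in between would be handled by the cheaper sparse feature tracking that the method describes.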
