Non-contact type intelligent inputting method based on video images and device using the same

The invention concerns video image and non-contact technology, applied to user/computer interaction input/output, graphic reading and mechanical mode conversion. It addresses input methods that reduce user experience and do not conform to usage habits. The stated effects are improved interactive response time, removal of the restrictions on venue and auxiliary materials, and avoidance of stimulation.

Embodiment Construction

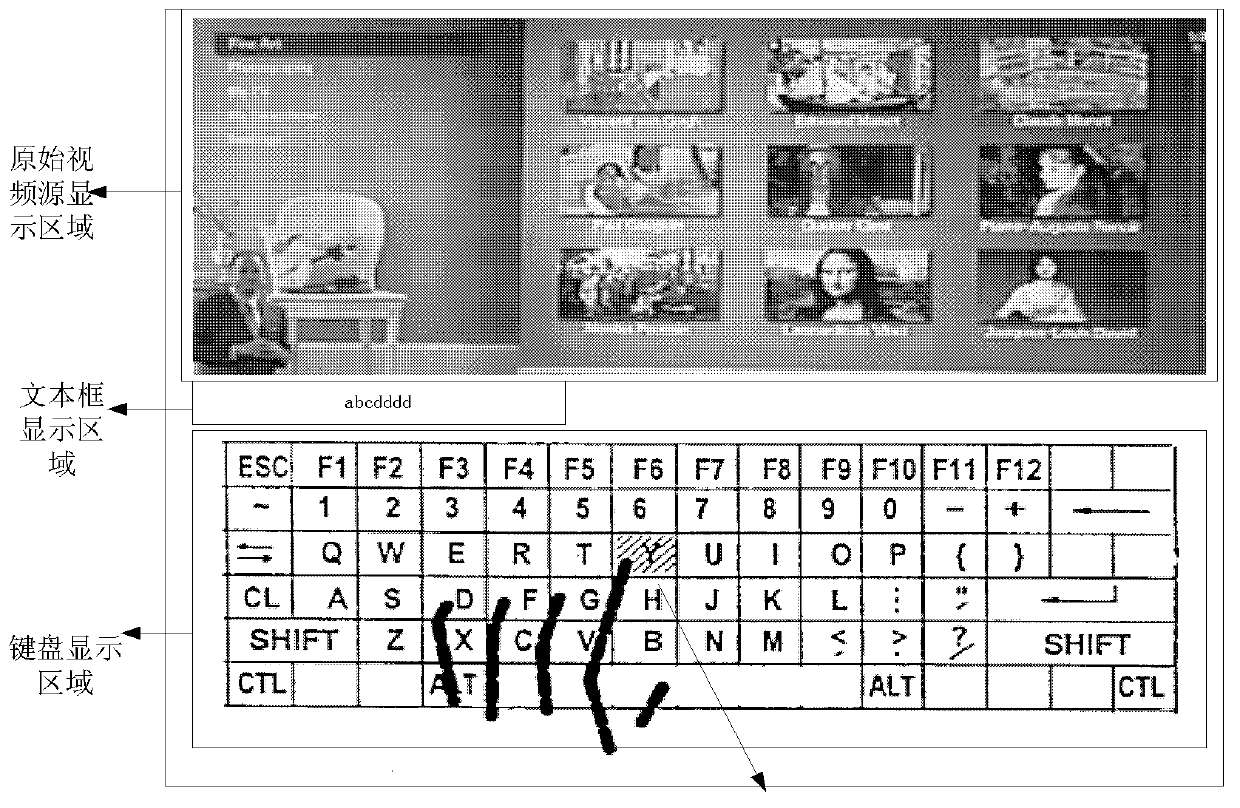

[0023] In order to make the objects, technical solution and advantages of the present invention clearer, the present invention is described in further detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

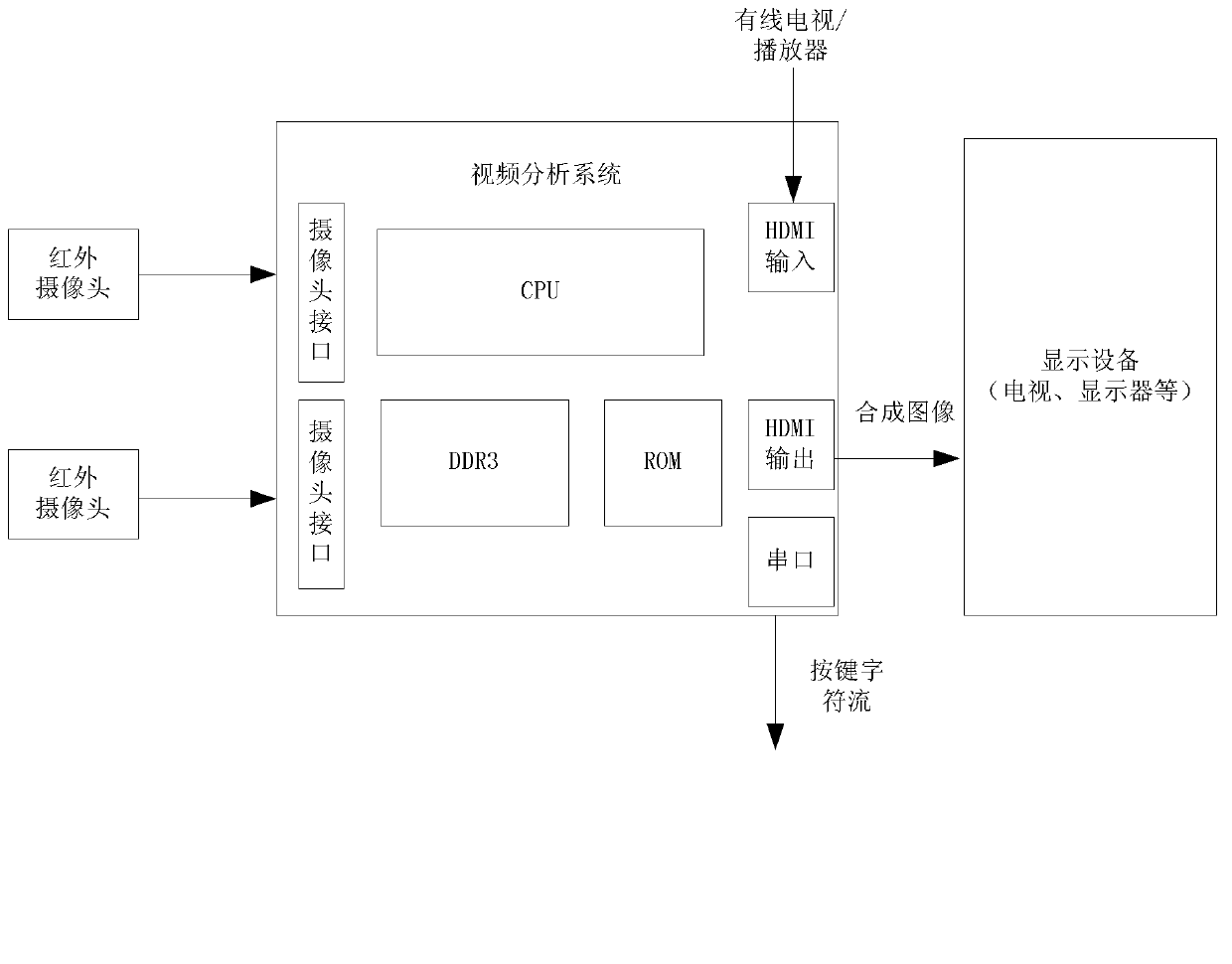

[0024] Figure 1 shows a design example of the non-contact intelligent input system based on video images proposed by the present invention. The system is composed of a display device, a 3D video acquisition system, and a 3D video analysis system.

[0025] The 3D video acquisition system includes two cameras.

[0026] The video analysis system is composed of a CPU, ROM, DDR3 SDRAM, a camera interface, an HDMI interface and a serial port. The video acquisition system and the video analysis system can be integrated into the display device, or built from hardware resources already present in the display device (such as a TV).

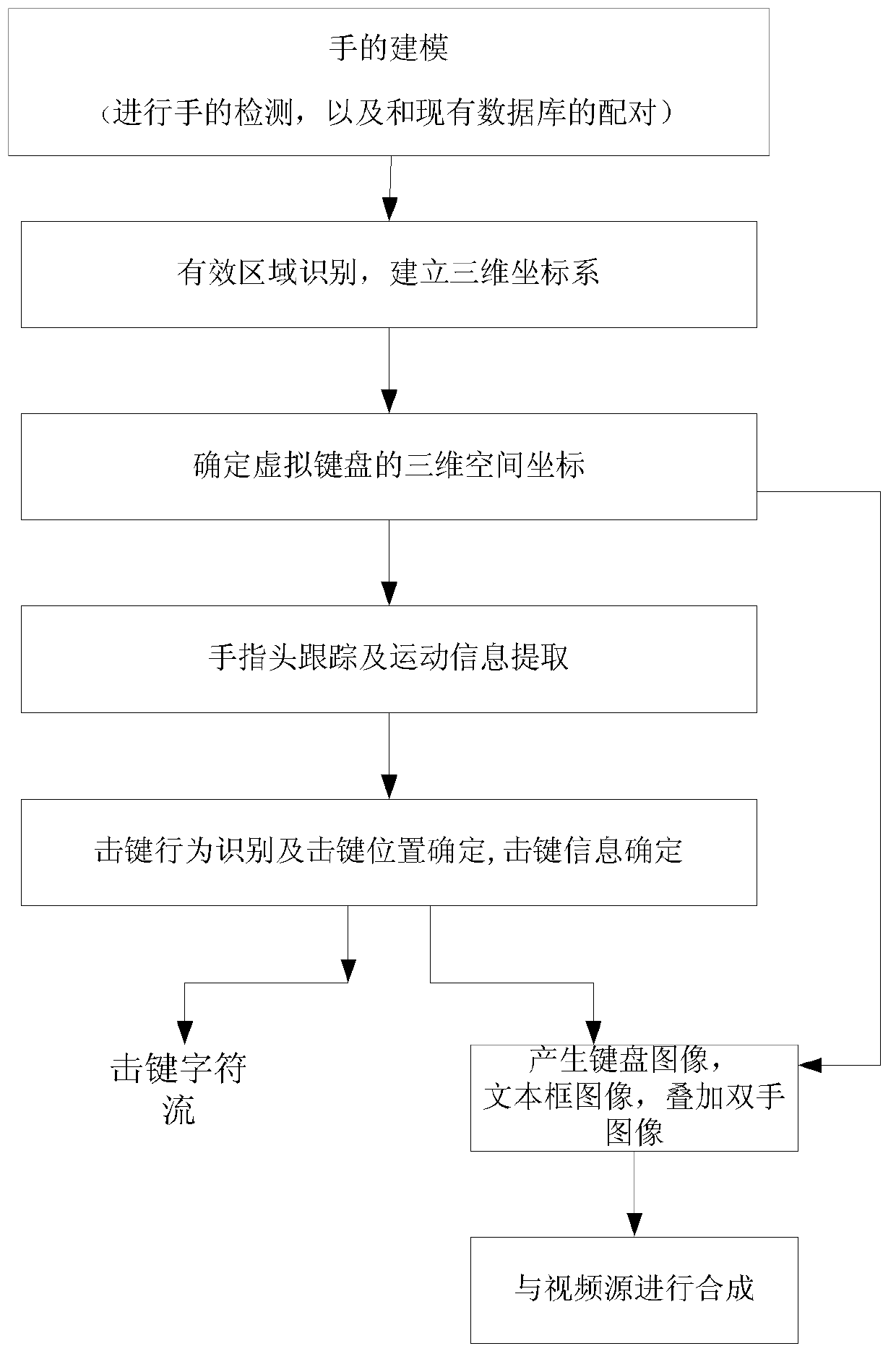

[0027] The two cameras capture video from two angles at the same time, and transmit the video data to the 3D video...
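The text available here does not say how the analysis system recovers 3D position from the two views. As a minimal sketch, assuming the two cameras form a rectified stereo pair (an assumption, not something the source confirms), depth can be estimated by triangulation from the horizontal shift of the same point between the left and right images. The function name `depth_from_disparity` and all parameter values are hypothetical and chosen only for illustration.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         x_left_px: float, x_right_px: float) -> float:
    """Estimate the distance (in metres) of a point seen by two cameras.

    Assumes a rectified stereo pair: both cameras share the same focal
    length (in pixels) and are separated horizontally by `baseline_m`.
    This is standard stereo triangulation, not the patent's stated method.
    """
    # Disparity: horizontal shift of the point between the two images.
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    # Similar triangles give Z = f * B / d.
    return focal_px * baseline_m / disparity


# Hypothetical values: 800 px focal length, cameras 10 cm apart,
# a fingertip seen at x=420 px (left image) and x=380 px (right image).
depth_m = depth_from_disparity(800.0, 0.1, 420.0, 380.0)
print(depth_m)
```

A larger disparity means the point is closer to the cameras, which is why capturing both views at the same instant matters: the shift has to come from geometry, not from motion between frames.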
