Shared-cache-aware virtual machine scheduling method and device

A shared-cache-aware virtual machine scheduling technology in the computer field. It addresses problems such as declining system operating efficiency and mutual performance interference between virtual machines, and achieves the effects of improving operating efficiency, reducing mutual performance interference, and helping to meet QoS goals.


Examples

Embodiment 1

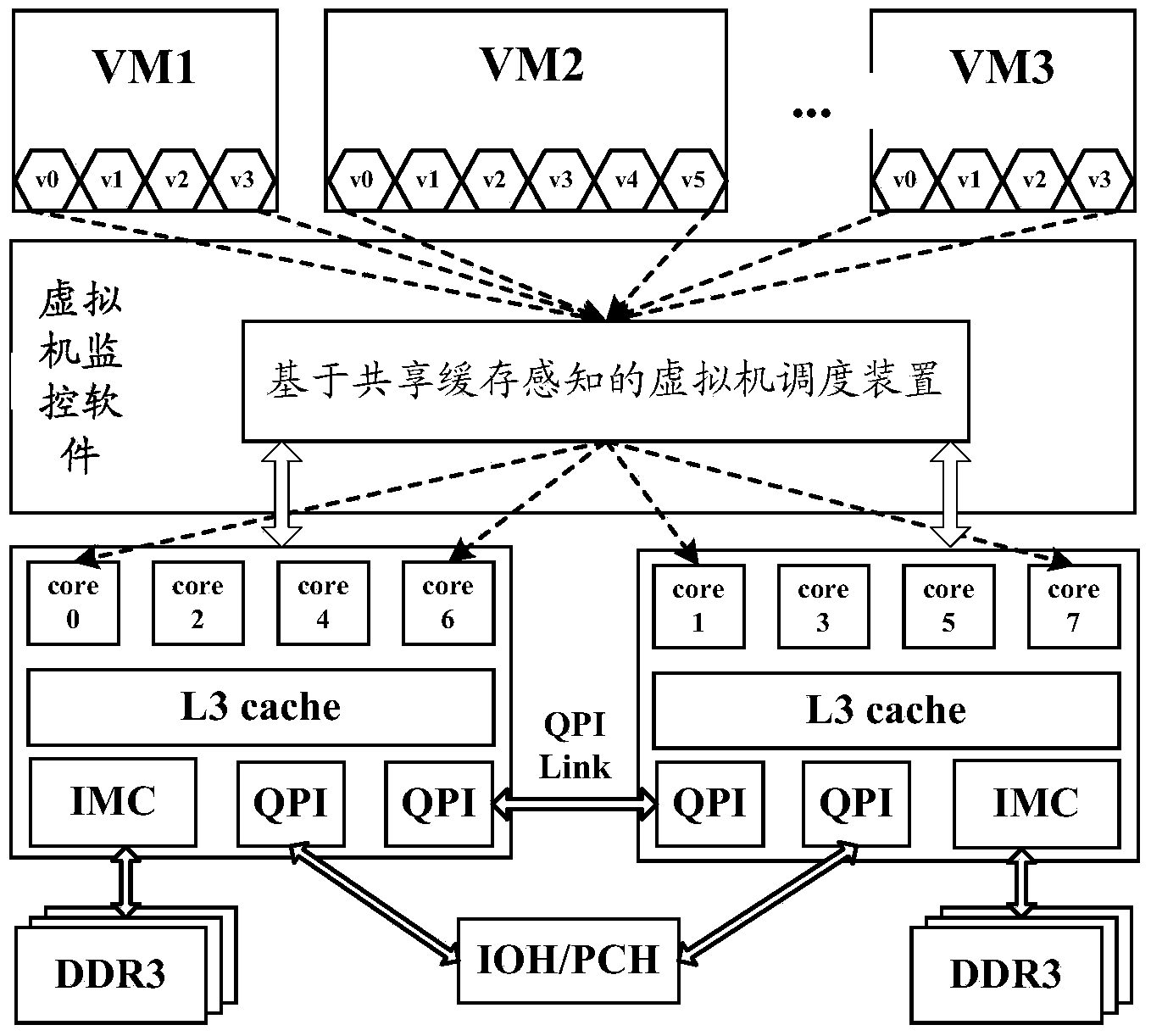

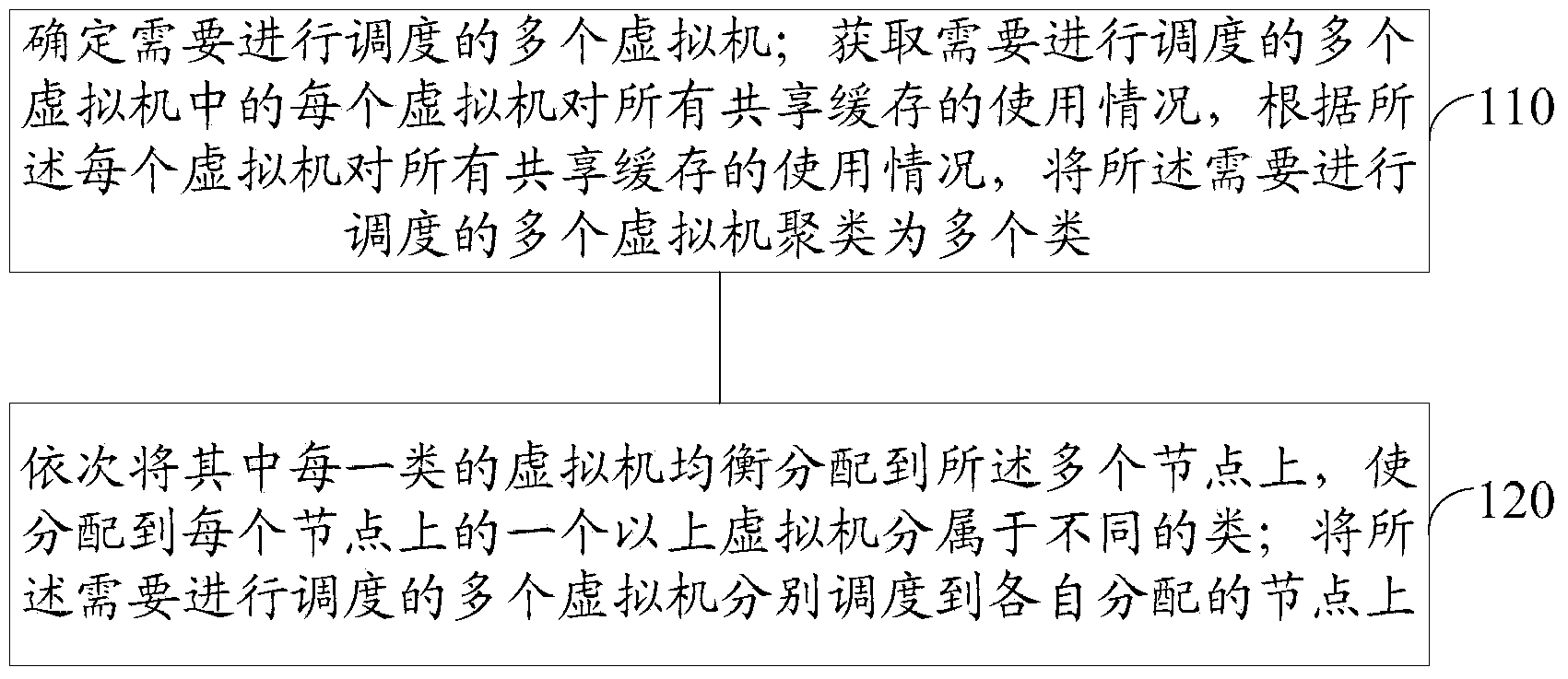

[0052] An embodiment of the present invention provides a virtual machine scheduling method based on shared cache awareness.

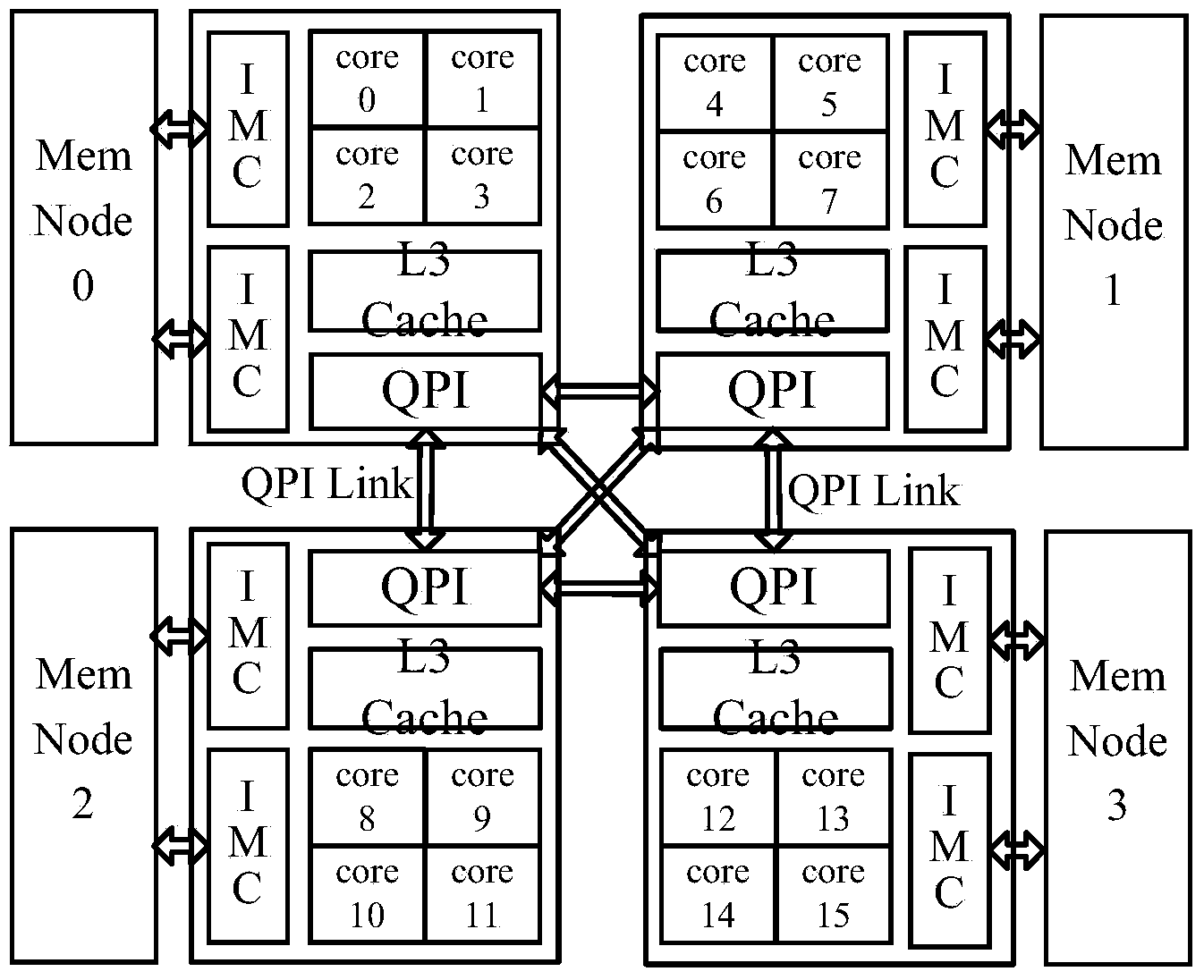

[0053] The method is used in a computer system. The system includes a hardware layer, a virtual machine management layer running on the hardware layer, and a plurality of virtual machines running on the virtual machine management layer. The hardware layer includes a plurality of nodes on which the virtual machines run; each node includes at least one processor core (core), and the nodes have a shared cache. Generally, the shared cache is the last-level cache: the last-level cache of each node serves as a shared cache that can also be accessed by other nodes.
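The topology in [0053] can be modelled as a small data structure. The sketch below is illustrative only: the class names `Node` and `System`, the `placement` map, and the helper `co_runners` are hypothetical, and it simplifies by placing each virtual machine on exactly one node and treating each node's last-level cache as one shared cache identified by the node id.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A node of the hardware layer: its processor cores and its last-level cache."""
    node_id: int
    cores: list[int]

    def __post_init__(self) -> None:
        # The description requires at least one processor core per node.
        if not self.cores:
            raise ValueError(f"node {self.node_id} has no cores")


@dataclass
class System:
    nodes: list[Node]
    # Hypothetical placement map: VM name -> id of the node it runs on.
    placement: dict[str, int] = field(default_factory=dict)

    def shared_caches(self) -> list[int]:
        """Each node's last-level cache is one shared cache, keyed here by node id."""
        return [node.node_id for node in self.nodes]

    def place(self, vm: str, node_id: int) -> None:
        if node_id not in self.shared_caches():
            raise ValueError(f"unknown node {node_id}")
        self.placement[vm] = node_id

    def co_runners(self, vm: str) -> list[str]:
        """Other VMs on the same node, which contend for that node's last-level cache."""
        node_id = self.placement[vm]
        return sorted(v for v, n in self.placement.items() if n == node_id and v != vm)


system = System([Node(0, [0, 1]), Node(1, [2, 3])])
system.place("vm-a", 0)
system.place("vm-b", 0)
system.place("vm-c", 1)
print(system.co_runners("vm-a"))  # ['vm-b']
```

Because the last-level cache can also be reached from other nodes, a fuller model would record per-VM usage of every node's cache rather than only same-node co-runners.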

[0054] A cache is temporary storage located between the CPU and main memory. Its capacity is much smaller than that of the memory, but its access speed is much faster. The data in the cache is a small...
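Because a shared cache is small, virtual machines that share it can evict each other's data, which is the performance interference this method targets. The toy simulation below illustrates that effect under loudly stated assumptions: the cache is fully associative with LRU replacement (real last-level caches are set-associative and use varied replacement policies), each VM cyclically scans its own private working set, and the names `SharedLRUCache` and `run` are hypothetical. With 8 lines, a 6-line working set running alone misses only on its first scan (90% hit rate); once a second 6-line working set is interleaved, the combined 12 lines cycle through 8 and LRU evicts every line just before its reuse, so both VMs fall to a 0% hit rate.

```python
from collections import OrderedDict


class SharedLRUCache:
    """Toy fully associative cache with LRU replacement (a simplifying assumption)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity  # number of cache lines
        self.lines: OrderedDict = OrderedDict()  # keys ordered from LRU to MRU
        self.hits: dict[str, int] = {}
        self.accesses: dict[str, int] = {}

    def access(self, owner: str, addr: int) -> bool:
        key = (owner, addr)  # tagging by owner: VMs never share data lines
        self.accesses[owner] = self.accesses.get(owner, 0) + 1
        if key in self.lines:
            self.lines.move_to_end(key)  # hit: mark as most recently used
            self.hits[owner] = self.hits.get(owner, 0) + 1
            return True
        self.lines[key] = None  # miss: fill the line
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used line
        return False

    def hit_rate(self, owner: str) -> float:
        return self.hits.get(owner, 0) / self.accesses[owner]


def run(capacity: int, working_sets: dict[str, int], rounds: int) -> dict[str, float]:
    """Each VM repeatedly scans its own working set; VMs take turns each round."""
    cache = SharedLRUCache(capacity)
    for _ in range(rounds):
        for owner, size in working_sets.items():
            for addr in range(size):
                cache.access(owner, addr)
    return {owner: cache.hit_rate(owner) for owner in working_sets}


print(run(8, {"vm-a": 6}, 10))             # {'vm-a': 0.9}
print(run(8, {"vm-a": 6, "vm-b": 6}, 10))  # {'vm-a': 0.0, 'vm-b': 0.0}
```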

Embodiment 2

[0106] To better implement the above solutions of the embodiments of the present invention, related devices for implementing them are also provided below.

[0107] Referring to Figure 7, an embodiment of the present invention provides a shared-cache-aware virtual machine scheduling device for use in a computer system. The computer system includes a plurality of nodes, each node includes at least one processor core, the last-level cache of each node serves as a shared cache, and multiple virtual machines run on the nodes. The device may include:

[0108] A determining module 740, configured to determine a plurality of virtual machines that need to be scheduled;

[0109] An acquisition module 710, configured to acquire, for each of the multiple virtual machines that need to be scheduled, its usage of every shared cache;

[0110] A clustering module 720, configured to cluster the multiple ...
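The three modules above can be sketched as a small pipeline. This is a hedged illustration, not the patented implementation: the description of module 720 is truncated here, so k-means over per-VM usage vectors is an assumed stand-in for its clustering, and the function names, the `needs_scheduling` predicate, and the `read_usage` callable are all hypothetical. On real hardware, per-VM cache occupancy might come from cache monitoring such as Intel RDT Cache Monitoring Technology (exposed on Linux through the resctrl filesystem); the sketch simply takes a callable that returns one usage value per (VM, shared cache) pair.

```python
import math
from typing import Callable


def determine_vms(all_vms: list[str], needs_scheduling: Callable[[str], bool]) -> list[str]:
    """Stand-in for determining module 740; the selection predicate is hypothetical."""
    return [vm for vm in all_vms if needs_scheduling(vm)]


def acquire_usage(vms: list[str], cache_ids: list[int],
                  read_usage: Callable[[str, int], float]) -> dict[str, list[float]]:
    """Stand-in for acquisition module 710: one usage value per shared cache, per VM."""
    return {vm: [read_usage(vm, c) for c in cache_ids] for vm in vms}


def cluster(usage: dict[str, list[float]], k: int, iters: int = 20) -> dict[str, int]:
    """Stand-in for clustering module 720, using k-means as an assumed algorithm."""
    names = sorted(usage)
    # Farthest-point initialisation: deterministic and spreads the initial centroids.
    centroids = [usage[names[0]]]
    while len(centroids) < min(k, len(names)):
        far = max(names, key=lambda n: min(math.dist(usage[n], c) for c in centroids))
        centroids.append(usage[far])
    labels: dict[str, int] = {}
    for _ in range(iters):
        # Assignment step: each VM joins the cluster with the nearest centroid.
        new_labels = {
            n: min(range(len(centroids)), key=lambda j: math.dist(usage[n], centroids[j]))
            for n in names
        }
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        # Update step: move each centroid to the mean usage vector of its members.
        for j in range(len(centroids)):
            members = [usage[n] for n in names if labels[n] == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Hypothetical LLC usage in MB; columns are the shared caches of node 0 and node 1.
sample = {"vm-a": [6.0, 0.5], "vm-b": [5.5, 1.0], "vm-c": [0.5, 0.2], "vm-d": [0.3, 0.4]}
vms = determine_vms(list(sample), lambda vm: True)
usage = acquire_usage(vms, [0, 1], lambda vm, c: sample[vm][c])
print(cluster(usage, k=2))  # {'vm-a': 0, 'vm-b': 0, 'vm-c': 1, 'vm-d': 1}
```

Here the two cache-heavy VMs land in one cluster and the two light VMs in the other. How the device then uses the clusters to place VMs is not shown in this excerpt, so the sketch stops at clustering.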

Embodiment 3

[0138] An embodiment of the present invention further provides a computer-readable medium including computer-executable instructions, such that when a processor of a computer executes the computer-executable instructions, the computer performs the flow of the shared-cache-aware virtual machine scheduling method of Embodiment 1.

