Calculating method of attitude matrix and positioning navigation method based on attitude matrix

A matrix calculation and azimuth technology, applied in the field of information technology, that addresses problems such as slow calculation speed, software that cannot position normally, and attitude data software that lacks a unified interface and cannot be shared generally.


Examples

Embodiment approach

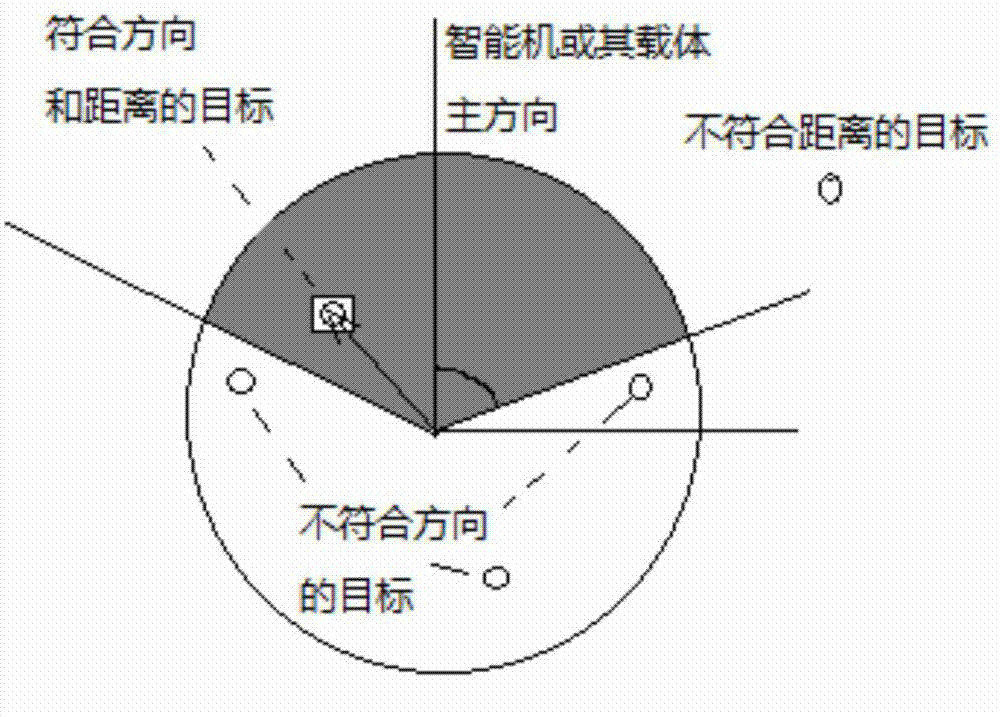

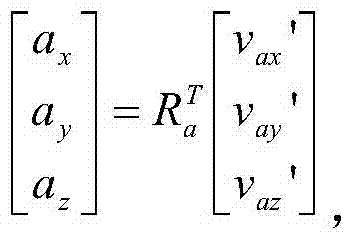

[0184] Assuming that the head of the smartphone is defined as the main direction, the vector of the head relative to the coordinate system of the smartphone is $p = \{0, 1, 0\}^T$

[0185] then $p_\perp = R_g^T p = \{r_{21}, r_{22}, r_{23}\}^T$, where $r_{ij}$ is the element in row $i$ and column $j$ of the R matrix.

[0186] Assuming that the right side of the smartphone is defined as the main direction, the vector of the right side relative to the coordinate system of the smartphone is $p = \{1, 0, 0\}^T$

[0187] then $p_\perp = R_g^T p = \{r_{11}, r_{12}, r_{13}\}^T$
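The two cases in [0184] to [0187] can be checked with a short sketch. It is only an illustration: the helper name `main_direction` and the sample matrix are hypothetical, and the matrix is stored as a list of rows. The product $R_g^T p$ picks out row 2 of the matrix for the head direction and row 1 for the right-side direction.

```python
def main_direction(R_g, p):
    """Return p_perp = R_g^T p for a 3x3 matrix (list of rows) and a 3-vector p."""
    # Component i of R_g^T p is the sum over j of R_g[j][i] * p[j].
    return [sum(R_g[j][i] * p[j] for j in range(3)) for i in range(3)]


# Hypothetical attitude matrix, used only for illustration
# (a rotation by 90 degrees about the z axis).
R_g = [
    [0.0, 1.0, 0.0],
    [-1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
]

head = main_direction(R_g, [0, 1, 0])   # {r21, r22, r23}: row 2 of R_g
right = main_direction(R_g, [1, 0, 0])  # {r11, r12, r13}: row 1 of R_g
```

Because the result is just a row of the matrix, an implementation could read the row directly instead of multiplying; the general product is shown here so that other choices of p also work.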

[0188] Method 2: Navigation Service

[0189] Fix the smart machine to a carrier, such as a vehicle or boat, and define the vector p of the carrier's main direction relative to the smart machine itself.

[0190] Draw on the map, in real time, a schematic diagram of the relationship between the principal direction vector $p_\perp = R^T p$ and the target vector $v_o$.

[0191] Calculate the cosine of the included angle, $\cos(d\varphi) > \cos(\varphi_a)$, to ...

Specific Embodiment approach

[0208] The smartphone must be placed horizontally. The angle between the main direction vector and the vector from the smartphone to the target, or the dot product of the two, is obtained using the first value, value[0], of the direction sensor.

[0209] Calculate the azimuth $\varphi_{vo}$ of the target vector $v_o = \{v_{ox}, v_{oy}\}$ on the 2D map using the inverse trigonometric function atan2($v_{oy}$, $v_{ox}$), then calculate the included angle between the vectors formed on the plane by the angle $\varphi$ and the angle $\varphi_{vo}$.
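The azimuth step in [0209] can be sketched as follows. This is a minimal illustration under assumptions not stated in the text: both angles are in radians and measured in the same map convention, the function names are hypothetical, and the included angle is wrapped into [-π, π] with a second atan2 call, which is one common choice and not necessarily the formula used in the original.

```python
import math


def azimuth(v_o):
    """Azimuth of the 2D target vector v_o = (v_ox, v_oy), as atan2(v_oy, v_ox)."""
    return math.atan2(v_o[1], v_o[0])


def included_angle(phi, phi_vo):
    """Signed angle from phi to phi_vo, wrapped into [-pi, pi] (assumed convention)."""
    d = phi_vo - phi
    return math.atan2(math.sin(d), math.cos(d))


phi_vo = azimuth((0.0, 2.0))  # a target straight along +y has azimuth pi/2
d_phi = included_angle(math.radians(350.0), math.radians(10.0))  # about 20 degrees
```

Wrapping matters near the 0/360 degree boundary: without it, headings of 350 and 10 degrees would appear 340 degrees apart instead of 20.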

Embodiment approach

[0210] Implementations include, but are not limited to, the following methods:

[0211] The angle between $\varphi$ and $\varphi_{vo}$ can be calculated as follows:

[0212]

[0213] Generally, the 2-dimensional dot product is used to calculate the cosine of the angle between $\varphi$ and $\varphi_{vo}$, given by $(\{\cos\varphi, \sin\varphi\} \cdot v_o) / |v_o|$. If the cosine value is greater than $\cos(\varphi_a)$, the two are considered to be in the same direction.

[0214] To facilitate interactive user control, draw the target vector $v_o$ on the map together with the smart machine vector $\{\cos\varphi, \sin\varphi\}$. In particular, when the target is not within the map's field of view, draw an arrow with direction $v_o$, pointing to the target, at the intersection of the map border and the line from the smartphone to the target.
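The dot-product test in [0213] can be sketched as below. The function name `same_direction` is hypothetical, angles are assumed to be in radians, and returning False for a zero-length target vector is an added assumption, since the text does not cover that case.

```python
import math


def same_direction(phi, v_o, phi_a):
    """True if the heading phi points toward v_o within the angular threshold phi_a."""
    norm = math.hypot(v_o[0], v_o[1])
    if norm == 0.0:
        # Assumption: with no distance to the target, the direction is undefined.
        return False
    # Cosine of the included angle: ({cos(phi), sin(phi)} . v_o) / |v_o|
    cos_d = (math.cos(phi) * v_o[0] + math.sin(phi) * v_o[1]) / norm
    return cos_d > math.cos(phi_a)


threshold = math.radians(15.0)
ahead = same_direction(0.0, (10.0, 1.0), threshold)  # target nearly straight ahead
aside = same_direction(0.0, (0.0, 5.0), threshold)   # target 90 degrees to the side
```

Comparing cosines avoids any inverse trigonometric call and any angle wrapping, which is presumably why the dot product is the general choice here.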
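One way to place the border arrow described in [0214] is sketched below. Several things here are assumptions for illustration: the map view is an axis-aligned rectangle, the smartphone position `s` lies inside it, $v_o$ is taken as the vector from the smartphone to the target, and the function name `border_arrow` is hypothetical.

```python
def border_arrow(s, t, xmin, ymin, xmax, ymax):
    """Return (anchor_point, direction) for the border arrow, or None if t is visible.

    s: smartphone position (assumed inside the map rectangle)
    t: target position
    """
    if xmin <= t[0] <= xmax and ymin <= t[1] <= ymax:
        return None  # target is within the map view, so no border arrow is needed
    vx, vy = t[0] - s[0], t[1] - s[1]  # v_o, assumed to point from s to t
    # Largest step u in [0, 1] along s + u * v_o that stays inside the rectangle.
    u = 1.0
    if vx > 0:
        u = min(u, (xmax - s[0]) / vx)
    elif vx < 0:
        u = min(u, (xmin - s[0]) / vx)
    if vy > 0:
        u = min(u, (ymax - s[1]) / vy)
    elif vy < 0:
        u = min(u, (ymin - s[1]) / vy)
    return (s[0] + u * vx, s[1] + u * vy), (vx, vy)


arrow = border_arrow((5.0, 5.0), (25.0, 5.0), 0.0, 0.0, 10.0, 10.0)
```

In this example the target lies to the right of a 10 by 10 map, so the arrow is anchored on the right border at the same height as the smartphone and points along +x.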

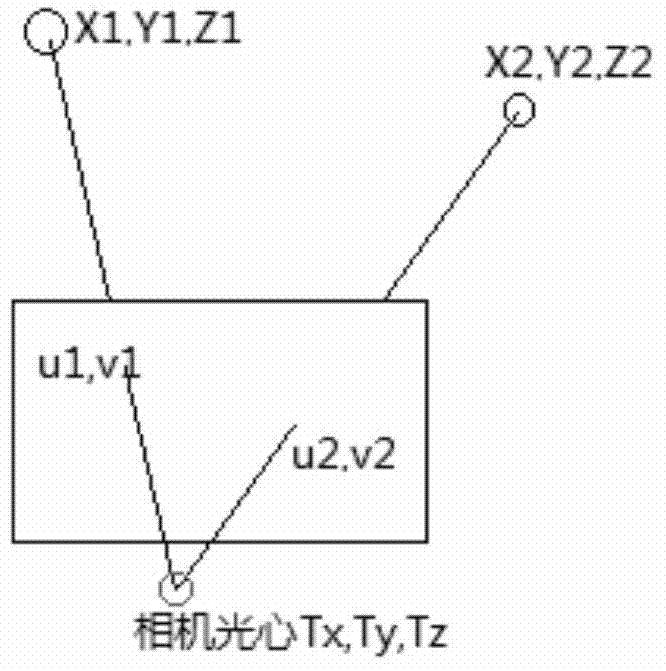

[0215] Real-time correction of video images based on attitude data of intelligent machines

[0216] The general method steps are as follows:

[0217] 1. Adopt the method of claim 1 to obtain the attitu...
