Cache device based on adaptive routing and scheduling policy

A cache and scheduling strategy technology, applied in memory systems, memory architecture access/allocation, instruments, etc. It addresses the problems of increased storage medium operation frequency, which shortens the working life of storage systems, and wasted cache bandwidth. Its effects are savings in hardware design cost, optimized data transmission delay, and cache multiplexing.

Embodiment Construction

[0022] The present invention will be further described below with reference to the accompanying drawings and specific embodiments.

[0023] Referring to the drawings showing embodiments of the invention, the invention will be described in more detail below. However, this invention may be embodied in many different forms and should not be construed as limited to the embodiments set forth herein. Rather, the embodiments are presented so that this disclosure will be thorough and complete, and will fully convey the scope of the invention to those skilled in the art.

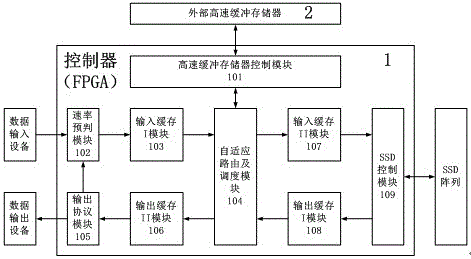

[0024] As shown in Figure 1, the cache device provided by the present invention, based on an adaptive routing and scheduling strategy, includes a controller and an external cache memory 2;

[0025] The controller includes a cache control module 101, a rate prediction module 102, an input cache I module 103, an input cache II module 107, an output cache I module 108, an output cache II module 106, an adaptive routing...
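The components named so far in paragraphs [0024] and [0025] can be summarised as a small lookup table. The following Python sketch is only an illustration: the `ControllerModule` enum, the `EXTERNAL_CACHE_MEMORY` constant, and the `module_for` helper are hypothetical names, not part of the patent. The reference numerals are taken from the text, and the modules that follow the truncated "adaptive routing..." entry are left out rather than guessed.

```python
from enum import Enum


class ControllerModule(Enum):
    """Controller modules named in paragraph [0025], keyed by reference numeral.

    Hypothetical illustration only. The list in the source is truncated after
    "adaptive routing...", so any later modules are intentionally omitted.
    """
    CACHE_CONTROL = 101
    RATE_PREDICTION = 102
    INPUT_CACHE_I = 103
    OUTPUT_CACHE_II = 106
    INPUT_CACHE_II = 107
    OUTPUT_CACHE_I = 108


# Reference numeral of the external cache memory from paragraph [0024].
EXTERNAL_CACHE_MEMORY = 2


def module_for(numeral: int) -> ControllerModule:
    """Look up a controller module by its reference numeral.

    Raises ValueError for numerals that the visible text does not name.
    """
    return ControllerModule(numeral)
```

Note that the numerals are not assigned in reading order: input cache II is 107 while output cache II is 106, so a lookup by numeral is less error-prone than relying on position in the list.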
