Eye-movement tracking method and system based on recalling and annotation

An eye-tracking and mouse technology, applied in the fields of visual cognition, social computing, and human-computer interaction, that addresses the problems of a complex usage process, high software and hardware costs, and difficulty of promotion, and achieves high acquisition accuracy, low economic cost, and a high degree of comfort.

Embodiment Construction

[0025] The eye-movement tracking method and system based on recall and labeling of the present invention are described clearly and completely below in conjunction with the accompanying drawings. Obviously, the described embodiments are only some of the embodiments of the present invention, not all of them. All other embodiments obtained by persons of ordinary skill in the art on the basis of these embodiments, without creative effort, fall within the protection scope of the present invention.

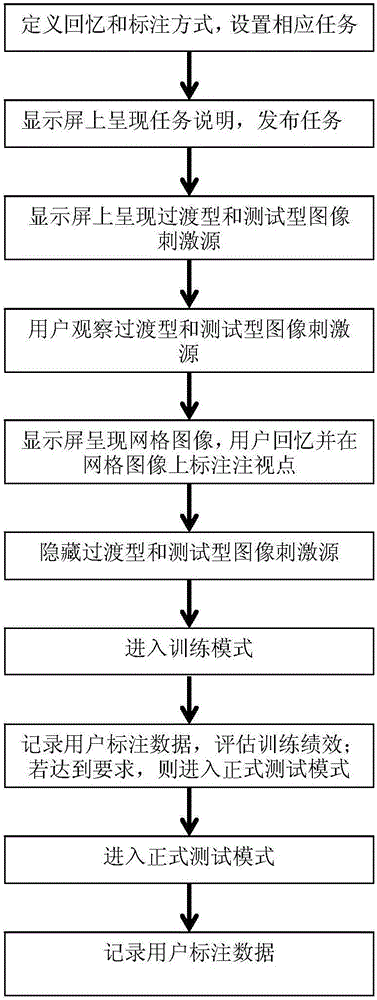

[0026] Referring to Figure 1, a basic flow diagram of an eye-movement tracking method based on recall and labeling provided by an embodiment of the present invention, the method mainly includes the following steps:

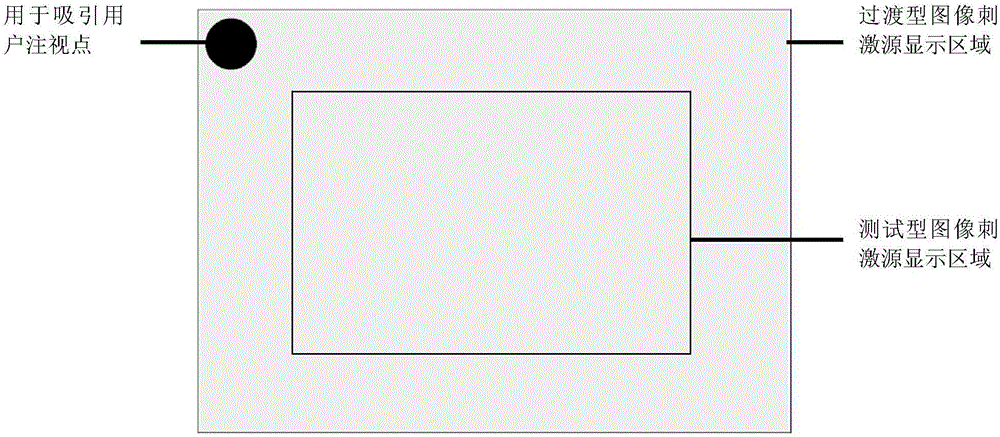

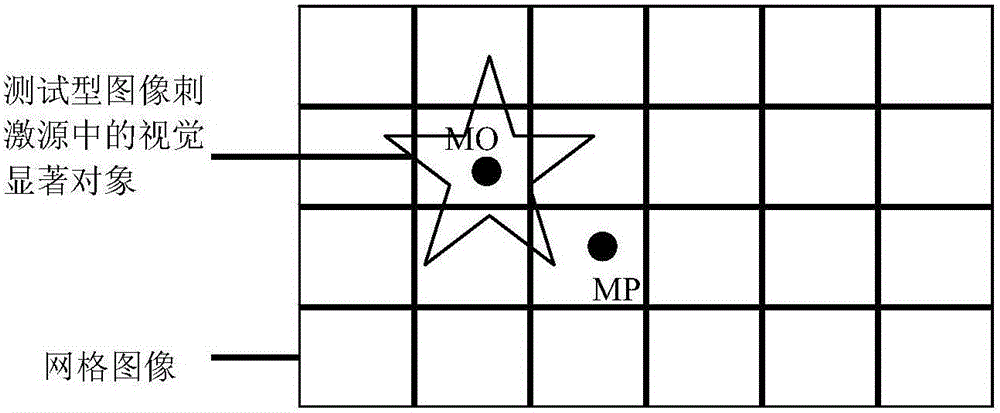

[0027] (1) Define how users recall and label, and set the corresponding tasks. There are three ways of recalling and labeling. In Method 1, the user observes the image stimulus source; the image stimulus source is then hidden, and the user recalls the position of the ...
