A resource scheduling and allocation method and system for matching computing

A resource scheduling and matching computing technology, applied in the field of resource scheduling methods and systems for matching computing. It addresses problems such as poor load balancing and difficult allocation adjustment, with the effects of a low flow destruction rate, a reduced false positive rate, and low running overhead.


Examples

Embodiment 1

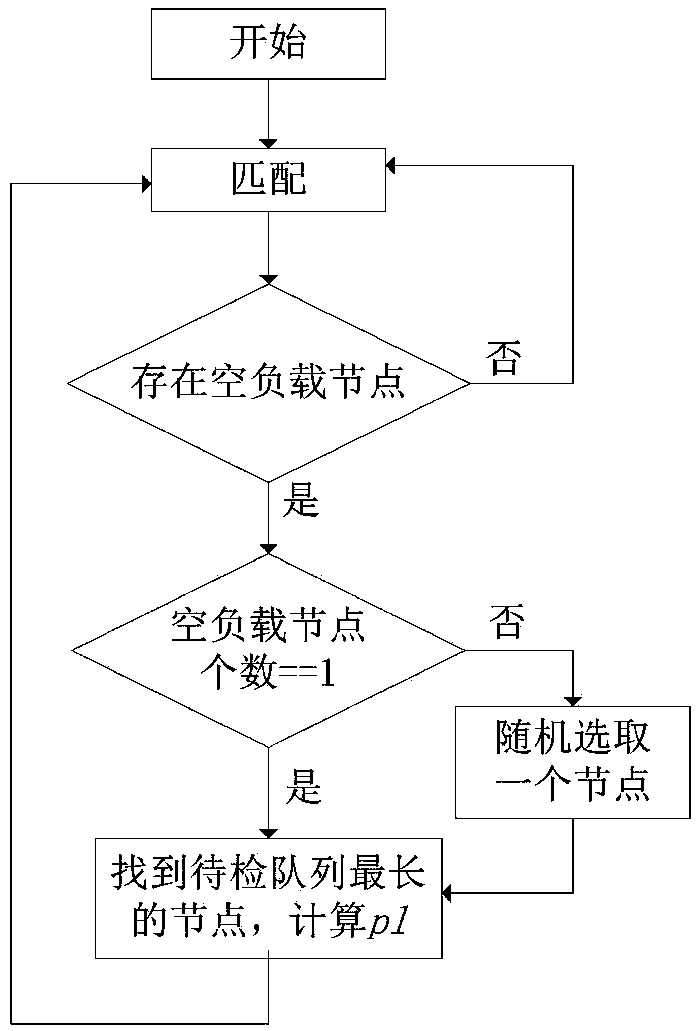

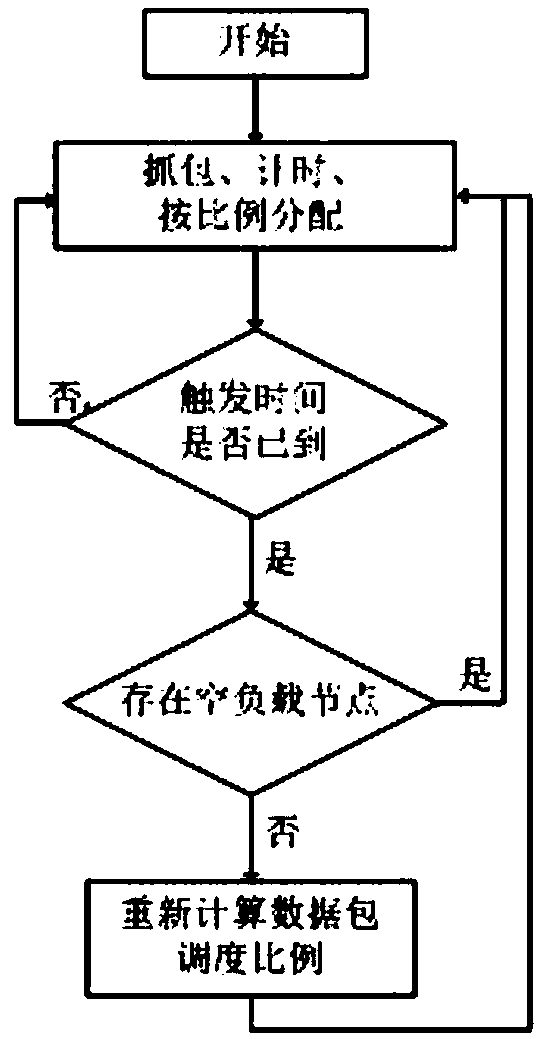

[0051] As shown in Figures 1 and 2, a resource scheduling and allocation method for matching computing includes:

[0052] Monitoring the computing load of each engine node in real time at intervals of Δt, and sorting the engine nodes by load;

[0053] When an empty (idle) or overloaded node exists, dispatching, in units of sessions, a certain proportion of the packets awaiting detection on the most heavily loaded node to the most lightly loaded node, and traversing the nodes to carry out load-balancing adjustment.
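The adjustment in [0053] can be illustrated with a minimal Python sketch. It rests on assumptions the source does not state: an engine's load is its number of packets awaiting detection, "empty" means zero pending packets, `move_ratio` stands in for the unspecified "certain ratio", `overload_threshold` is a hypothetical parameter, and the traversal pairs the k-th heaviest node with the k-th lightest. `EngineNode`, `move_sessions`, and `rebalance` are illustrative names, not taken from the patent.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class EngineNode:
    name: str
    # Hypothetical model: packets awaiting detection, keyed by session ID.
    sessions: Dict[str, int] = field(default_factory=dict)

    @property
    def load(self) -> int:
        return sum(self.sessions.values())


def move_sessions(src: EngineNode, dst: EngineNode, move_ratio: float) -> int:
    """Move whole sessions from src to dst, up to move_ratio of src's load.

    Sessions are never split, so every packet of a session stays on one engine.
    """
    budget = src.load * move_ratio
    moved = 0
    # Try small sessions first; once one does not fit, no larger one will.
    for sid, count in sorted(src.sessions.items(), key=lambda kv: kv[1]):
        if moved + count > budget:
            break
        dst.sessions[sid] = dst.sessions.get(sid, 0) + count
        del src.sessions[sid]
        moved += count
    return moved


def rebalance(nodes: List[EngineNode], move_ratio: float = 0.5,
              overload_threshold: int = 100) -> int:
    """Return the number of packets moved; 0 if no node is empty or overloaded."""
    if not any(n.load == 0 or n.load > overload_threshold for n in nodes):
        return 0
    ordered = sorted(nodes, key=lambda n: n.load, reverse=True)
    moved = 0
    # Traverse from both ends: heaviest -> lightest, 2nd heaviest -> 2nd lightest, ...
    i, j = 0, len(ordered) - 1
    while i < j:
        moved += move_sessions(ordered[i], ordered[j], move_ratio)
        i += 1
        j -= 1
    return moved
```

Moving whole sessions rather than individual packets keeps all packets of a flow on one detection engine, which is what "in units of sessions" suggests.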

[0054] This embodiment specifically includes the following steps:

[0055] S1: Initialize Δt;

[0056] S2: Capture data packets and distribute them to the detection engine nodes;

[0057] S3: After Δt, detect the workload of each detection engine node and sort the nodes by their current load, from heaviest to lightest;

[0058] S4: Detect whether any node is empty or overloaded;

[0059] S5: If ye...
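Steps S1 to S4 can be read as a periodic monitoring loop. The sketch below is a hypothetical single-process simulation: packets are represented only by their session IDs, S2 distributes them by hashing the session ID with CRC-32 (an assumed policy; the source does not say how packets are initially distributed), and an engine's load is the number of packets it received in the current Δt window. Because S5 is truncated in the source, the loop only reports empty and overloaded engines and leaves the corrective action as a comment.

```python
import time
import zlib
from typing import Callable, List, Tuple


def distribute(packets: List[str], num_nodes: int) -> List[int]:
    """S2: assign each captured packet (given by its session ID) to an engine.

    Hypothetical policy: CRC-32 of the session ID, so all packets of a
    session land on the same engine.
    """
    loads = [0] * num_nodes
    for session_id in packets:
        loads[zlib.crc32(session_id.encode()) % num_nodes] += 1
    return loads


def sort_by_load(loads: List[int]) -> List[int]:
    """S3: engine indices ordered from heaviest to lightest load (ties keep index order)."""
    return sorted(range(len(loads)), key=lambda i: loads[i], reverse=True)


def find_anomalies(loads: List[int], overload_threshold: int) -> Tuple[List[int], List[int]]:
    """S4: indices of empty (idle) engines and of overloaded engines."""
    empty = [i for i, x in enumerate(loads) if x == 0]
    overloaded = [i for i, x in enumerate(loads) if x > overload_threshold]
    return empty, overloaded


def monitor(capture: Callable[[], List[str]], num_nodes: int,
            overload_threshold: int, delta_t: float = 1.0, cycles: int = 3) -> List[dict]:
    reports = []
    # S1: delta_t is fixed before the loop starts.
    for _ in range(cycles):
        loads = distribute(capture(), num_nodes)  # S2
        time.sleep(delta_t)  # wait one Δt window
        order = sort_by_load(loads)  # S3
        empty, overloaded = find_anomalies(loads, overload_threshold)  # S4
        reports.append({"loads": loads, "order": order,
                        "empty": empty, "overloaded": overloaded})
        # S5 (truncated in the source) would act on `empty` / `overloaded` here.
    return reports
```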

Embodiment 2

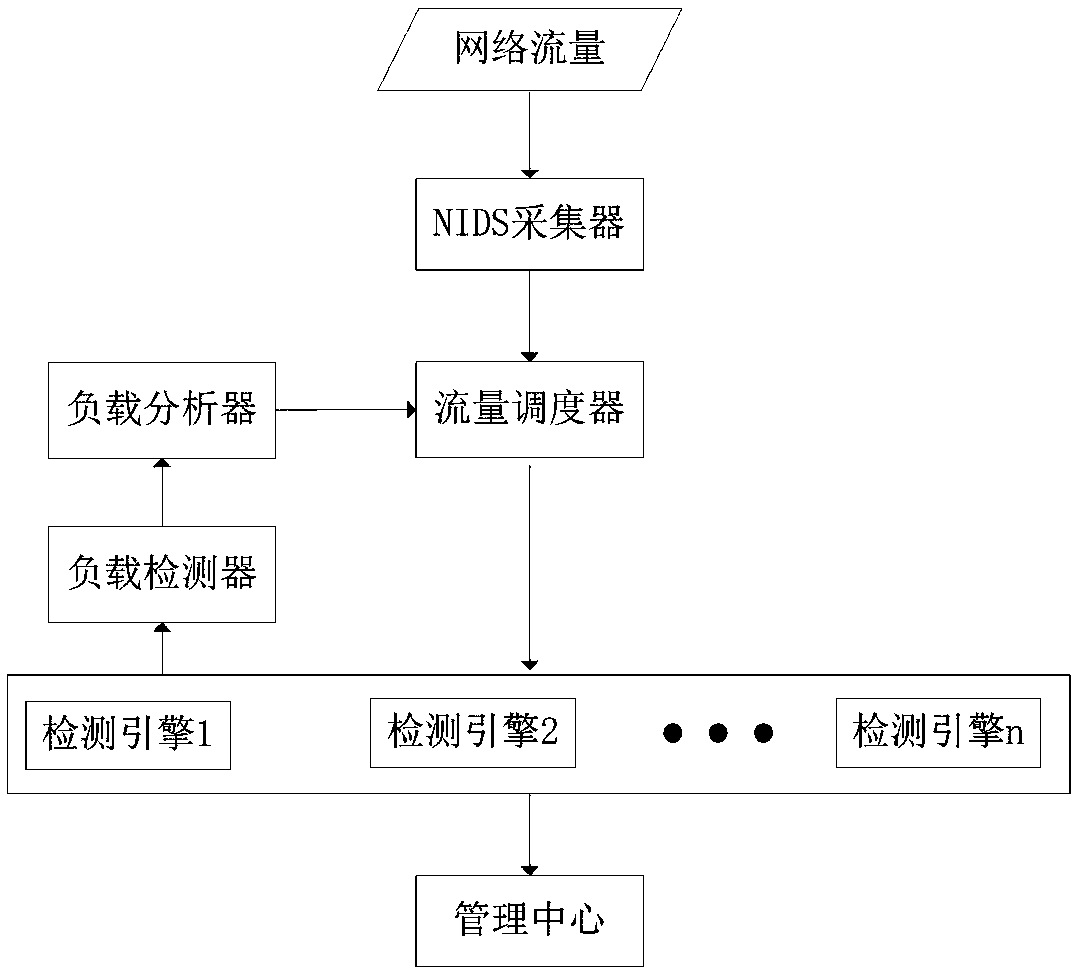

[0074] As shown in Figure 3, a resource scheduling and allocation system for matching computing includes a load detector, a load analyzer, a traffic scheduler, and multiple detection engines. The load detector is connected to the detection engines and to the load analyzer, and the load analyzer is also connected to the traffic scheduler. The load detector dynamically detects the workload of each engine node at intervals of Δt; the load analyzer sorts the engine nodes by their load at the current moment, from heaviest to lightest; and, when an overloaded or empty node occurs, the traffic scheduler dispatches the packets awaiting detection on the most heavily loaded engine node to the most lightly loaded node in units of sessions, and distributes the sessions arriving in the next Δt period to the sorted engine nodes in proportion.
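The component wiring in [0074] maps naturally onto three small classes. In this sketch, `LoadDetector`, `LoadAnalyzer`, and `TrafficScheduler` mirror the named components, but their methods, the per-engine `capacity`, and `overload_threshold` are hypothetical. The source says only that next-window sessions are distributed "in proportion"; weighting by spare capacity with largest-remainder rounding is an assumption chosen for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple


class LoadDetector:
    """Samples every engine's workload; meant to be called once per Δt."""

    def __init__(self, probes: Dict[str, Callable[[], int]]):
        # Hypothetical: each probe returns an engine's pending-packet count.
        self.probes = probes

    def sample(self) -> Dict[str, int]:
        return {name: probe() for name, probe in self.probes.items()}


class LoadAnalyzer:
    """Ranks engines from heaviest to lightest current load."""

    def rank(self, loads: Dict[str, int]) -> List[str]:
        return sorted(loads, key=loads.get, reverse=True)


class TrafficScheduler:
    def __init__(self, capacity: int, overload_threshold: int):
        self.capacity = capacity  # hypothetical per-engine capacity
        self.overload_threshold = overload_threshold

    def migration_pair(self, loads: Dict[str, int],
                       ranked: List[str]) -> Optional[Tuple[str, str]]:
        """(heaviest, lightest) if any engine is empty or overloaded, else None."""
        if any(v == 0 or v > self.overload_threshold for v in loads.values()):
            return ranked[0], ranked[-1]
        return None

    def plan_next_window(self, loads: Dict[str, int], new_sessions: int) -> Dict[str, int]:
        """Split the next Δt window's new sessions in proportion to spare capacity."""
        weights = {k: max(self.capacity - v, 0) for k, v in loads.items()}
        total_w = sum(weights.values())
        if total_w == 0:  # every engine is full: fall back to an even split
            weights = {k: 1 for k in loads}
            total_w = len(loads)
        quotas = {k: new_sessions * w / total_w for k, w in weights.items()}
        counts = {k: int(q) for k, q in quotas.items()}
        leftover = new_sessions - sum(counts.values())
        # Largest-remainder rounding: leftover sessions go to the largest fractional parts.
        for k in sorted(quotas, key=lambda k: quotas[k] - counts[k], reverse=True)[:leftover]:
            counts[k] += 1
        return counts


# Usage with fixed, made-up loads.
detector = LoadDetector({"e1": lambda: 120, "e2": lambda: 40, "e3": lambda: 0})
loads = detector.sample()
ranked = LoadAnalyzer().rank(loads)  # ["e1", "e2", "e3"]
scheduler = TrafficScheduler(capacity=150, overload_threshold=100)
pair = scheduler.migration_pair(loads, ranked)  # ("e1", "e3")
plan = scheduler.plan_next_window(loads, 10)  # {"e1": 1, "e2": 4, "e3": 5}
```

Weighting by spare capacity steers more of the next window's sessions to lightly loaded engines, while the rounding step keeps per-engine counts integral and summing exactly to `new_sessions`.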

[0075] In t...
