Full-field measurement method for three-dimensional deformation of structures based on stereo vision

A stereo vision technology for three-dimensional deformation measurement, applied to measuring devices, instruments, and optical devices. It addresses the problems of heavy computation, long run times, and erroneous results, with the effect of improving accuracy and robustness, reducing the amount of computation, and shortening the time required.

Examples

Specific Embodiment 1

[0029] Specific Embodiment 1: In this embodiment, the full-field measurement method for three-dimensional deformation of structures based on stereo vision proceeds as follows:

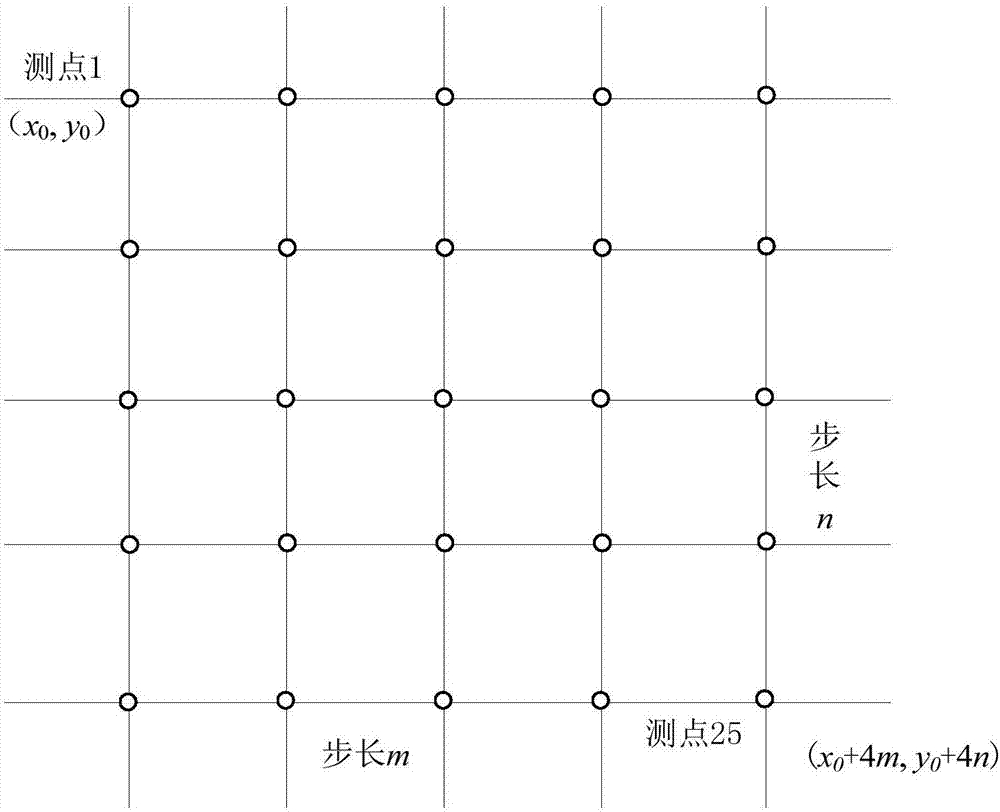

[0030] The invention provides a full-field measurement method and software for three-dimensional deformation of structures based on stereo vision, comprising: first selecting the left and right images at the initial time as the reference images; generating a measurement area in the reference images by selecting points and setting a step length, which forms a virtual grid; performing stereo matching on all grid nodes using the digital image correlation method; and solving for the initial three-dimensional coordinates of all grid nodes in the measurement area. The left and right measurement areas are then divided into 9 cells, and a local search is performed according to the position of the cell in which each grid node is located, and ti...
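The virtual grid described above can be illustrated with a minimal sketch. It assumes the measurement area is an axis-aligned rectangle in pixel coordinates and that the step length is the same in both directions; the function name `make_virtual_grid` and the sample coordinates are hypothetical and do not come from the patent.

```python
def make_virtual_grid(x_min, y_min, x_max, y_max, step):
    """Return grid nodes (x, y) covering a rectangular measurement area.

    Assumption: the area is an axis-aligned rectangle and `step` is the
    node spacing in pixels along both axes. Nodes are listed row by row.
    """
    if step <= 0:
        raise ValueError("step must be positive")
    xs = range(x_min, x_max + 1, step)
    ys = range(y_min, y_max + 1, step)
    return [(x, y) for y in ys for x in xs]


# Hypothetical measurement area: x in [100, 140], y in [50, 70], step 10 px.
nodes = make_virtual_grid(100, 50, 140, 70, step=10)
print(len(nodes), nodes[0], nodes[-1])
```

A smaller step gives a denser displacement field at the cost of more nodes to match, which is the trade-off the step length controls.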

Specific Embodiment 2

[0037] Specific Embodiment 2: This embodiment differs from Specific Embodiment 1 in that, in step one, images of civil engineering test specimens (such as reinforced concrete beams and reinforced concrete columns) are acquired, calibration is performed using the solid-circle target three-step method, stereo matching is performed on the initial measurement area, and the initial three-dimensional coordinates of all grid nodes are obtained. The specific process is:

[0038] Throughout the measurement of the 3D deformation field, the 3D coordinates of the measurement area at the undeformed moment serve as the reference system, so stereo matching of the left and right images at the initial moment is the first step of the entire measurement process. Considering the continuity of the points in the measurement area at the initial moment, the present invention restricts the stereo matching search area and performs a local search, and the specif...
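The idea of restricting the stereo search area can be sketched as follows. This is an illustration under stated assumptions, not the patent's procedure, whose details are truncated above: it assumes row-aligned (rectified) images, uses zero-normalized cross-correlation (ZNCC) as the correlation function, and takes a disparity guess `d_guess` (for example, from an already matched neighboring node) with a search half-width `d_range`. All names are hypothetical.

```python
import math
import random


def zncc(a, b):
    """Zero-normalized cross-correlation of two equal-length intensity lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    if denom == 0:
        return 0.0  # flat subset: correlation is undefined, treat as no match
    return sum(p * q for p, q in zip(da, db)) / denom


def subset(img, x, y, half):
    """Flatten the (2*half+1) x (2*half+1) subset centered at (x, y)."""
    return [img[r][c]
            for r in range(y - half, y + half + 1)
            for c in range(x - half, x + half + 1)]


def local_stereo_match(left, right, x, y, half, d_guess, d_range):
    """Search only disparities in [d_guess - d_range, d_guess + d_range].

    Assumption: images are rectified, so the match lies on the same row
    at column x - d in the right image.
    """
    ref = subset(left, x, y, half)
    width = len(right[0])
    best_d, best_c = None, -2.0
    for d in range(d_guess - d_range, d_guess + d_range + 1):
        xr = x - d
        if xr - half < 0 or xr + half >= width:
            continue  # subset would leave the image
        c = zncc(ref, subset(right, xr, y, half))
        if c > best_c:
            best_d, best_c = d, c
    return best_d, best_c


# Synthetic pair: the right image is the left image shifted by 3 pixels.
rng = random.Random(0)
base = [[rng.randint(0, 255) for _ in range(33)] for _ in range(12)]
left = [row[:30] for row in base]
right = [row[3:33] for row in base]
best_d, best_c = local_stereo_match(left, right, x=10, y=5, half=2,
                                    d_guess=2, d_range=2)
print(best_d, round(best_c, 6))
```

Limiting the loop to a few candidate disparities is what reduces the computation relative to scanning the whole row.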

Specific Embodiment 3

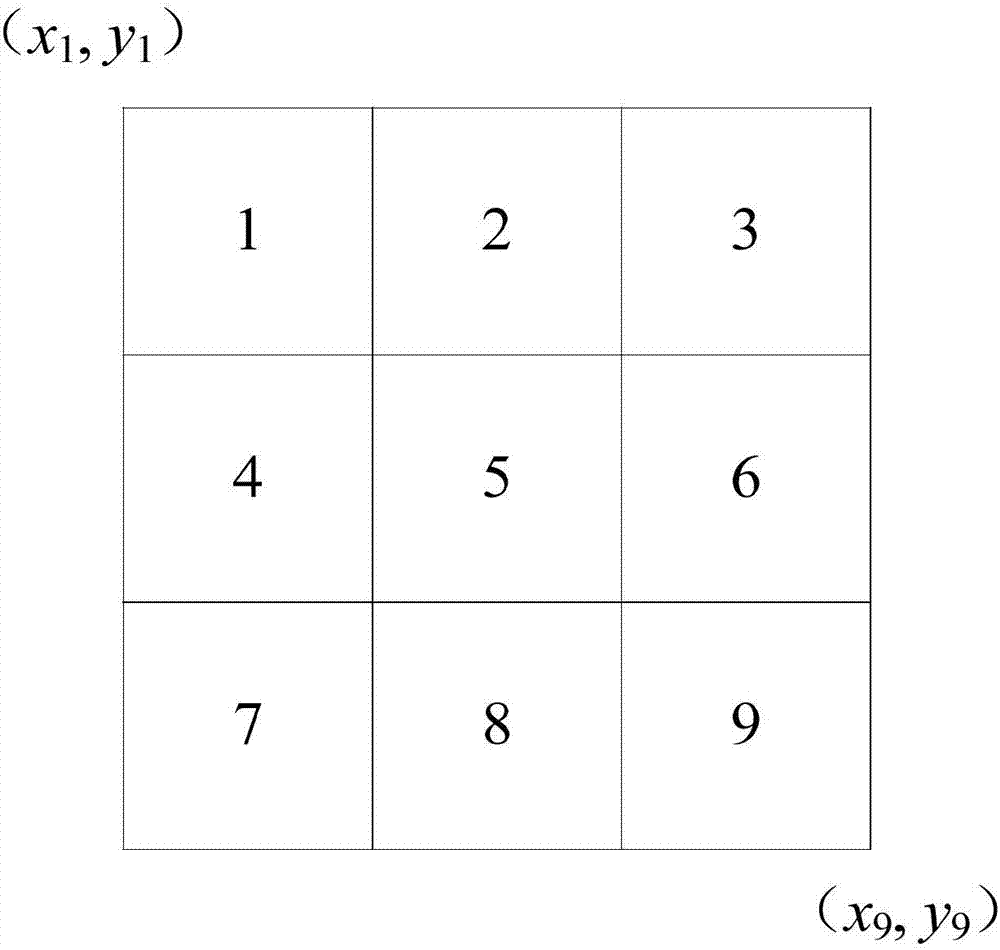

[0047] Specific Embodiment 3: This embodiment differs from Specific Embodiment 1 or 2 in that, in step two, all grid nodes obtained in step one are matched block by block, and whether a match has failed is judged against a correlation function threshold. If a mismatch occurs, proceed to step three; if the blocks are matched correctly, the coordinates of the matched grid nodes are obtained. The specific process is:
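The threshold judgment in this step can be sketched as a simple split of nodes into matched and failed sets. The sketch assumes a correlation score in which higher means better (such as ZNCC); the threshold value 0.8, the node identifiers, and the function name are hypothetical, since the patent text shown here does not state them.

```python
def split_by_threshold(scores, threshold=0.8):
    """Separate nodes whose correlation score passes the threshold.

    scores: dict mapping node id -> correlation score (higher is better).
    Returns (matched, failed); failed nodes would be handed to step three.
    The default threshold is an illustrative value, not from the patent.
    """
    matched, failed = [], []
    for node_id, score in scores.items():
        if score >= threshold:
            matched.append(node_id)
        else:
            failed.append(node_id)
    return matched, failed


# Hypothetical scores for four grid nodes after block matching.
scores = {0: 0.97, 1: 0.42, 2: 0.88, 3: 0.79}
matched, failed = split_by_threshold(scores)
print(matched, failed)
```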

[0048] After stereo matching of the measurement area at the initial moment, the three-dimensional coordinates of all grid nodes in the measurement area are determined. To determine the matching points in the left and right image sequences at any moment during the deformation process, temporal matching must be performed on all measurement points. The invention proposes a block matching algorithm. The idea of this method is: divide the left and right measurement areas into 9 cells, and then perform a local search accord...
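One way to picture the division into 9 cells is a 3 x 3 partition of a rectangular measurement area, with each grid node assigned to the cell that contains it. The 3 x 3 layout, the row-major cell numbering, and the names below are assumptions for illustration; the source states only that there are 9 cells and that the search depends on the cell a node falls in.

```python
def cell_index(x, y, x_min, y_min, x_max, y_max, n=3):
    """Map a node to one of n*n cells, numbered row-major from 0.

    Assumption: the measurement area is the rectangle
    [x_min, x_max] x [y_min, y_max] with x_max > x_min and y_max > y_min.
    Nodes on the far edge are clamped into the last row or column.
    """
    cell_w = (x_max - x_min) / n
    cell_h = (y_max - y_min) / n
    col = min(int((x - x_min) / cell_w), n - 1)
    row = min(int((y - y_min) / cell_h), n - 1)
    return row * n + col


def group_nodes_by_cell(nodes, x_min, y_min, x_max, y_max, n=3):
    """Return a dict: cell index -> list of nodes located in that cell."""
    groups = {}
    for (x, y) in nodes:
        idx = cell_index(x, y, x_min, y_min, x_max, y_max, n)
        groups.setdefault(idx, []).append((x, y))
    return groups


# Hypothetical 90 x 90 pixel measurement area with a few sample nodes.
sample_nodes = [(0, 0), (45, 45), (90, 90), (89, 0), (30, 0)]
groups = group_nodes_by_cell(sample_nodes, 0, 0, 90, 90)
print(groups)
```

Grouping nodes this way lets each cell carry its own search window or initial guess, so a node is only compared against candidates near its own cell.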
