An Indoor Robot Localization Method Fusing Visual Odometry and Physical Odometry

A visual odometry and indoor robot technology, applied to the autonomous positioning of indoor mobile robots. It addresses positioning errors that superpose and accumulate and cannot otherwise be eliminated. It meets accuracy requirements while ensuring efficiency and real-time performance, and solves the problem of error accumulation.


Embodiment Construction

[0018] The present invention will be described in detail below with reference to the accompanying drawings and examples.

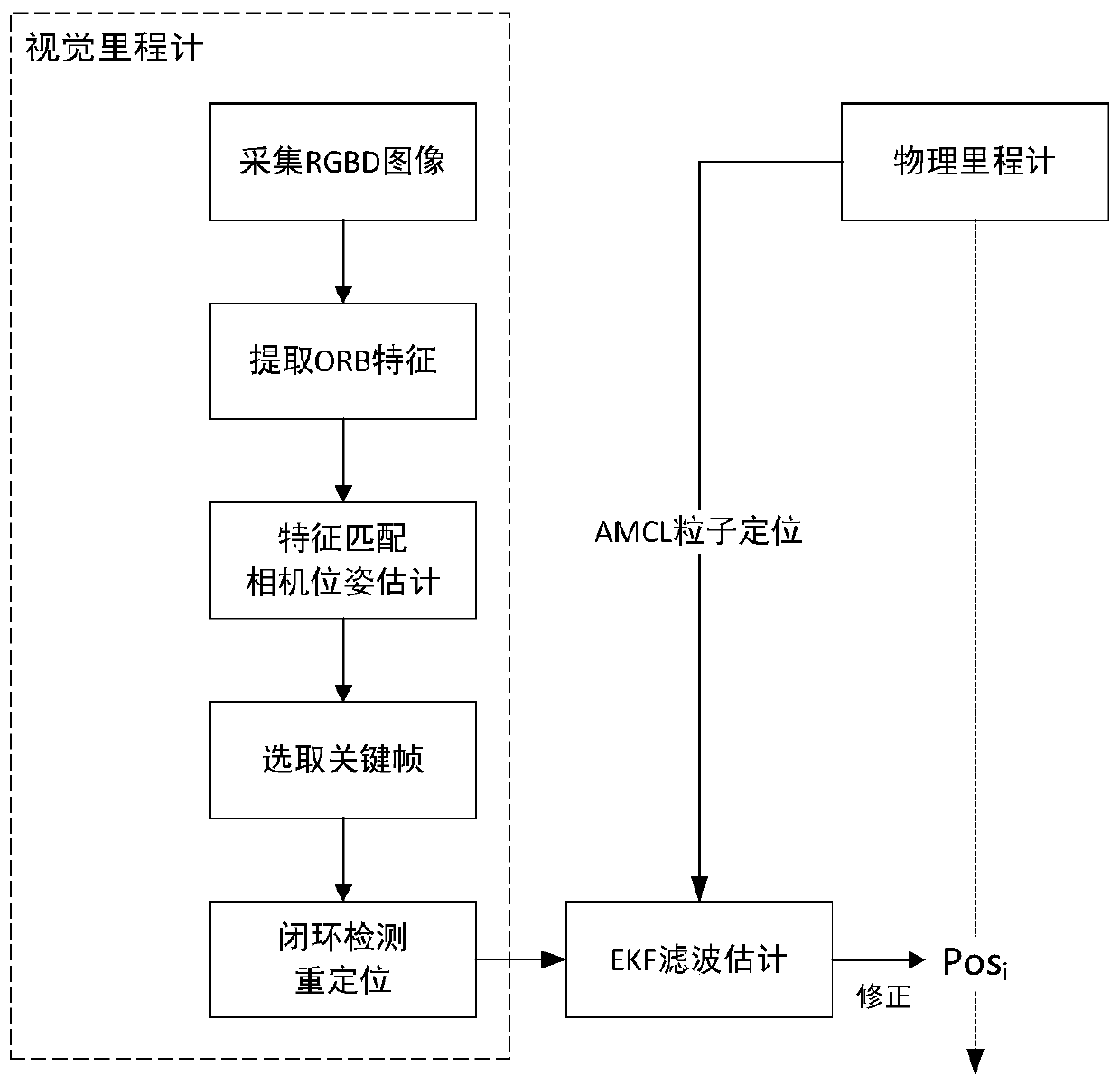

[0019] As shown in attached Figures 1 and 2, the present invention provides a ...

[0020] Step 1. Use an ASUS Xtion depth camera to acquire color and depth images;
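Step 1 names only the sensor. For the depth image to be combined with feature points in Step 2, each pixel (u, v) with a depth reading is usually back-projected into a 3D point with a pinhole camera model. The following is a minimal sketch under stated assumptions: raw depth is in millimetres (assumed here, not stated in the source), the intrinsics `FX`, `FY`, `CX`, `CY` are hypothetical placeholders that would in practice come from calibrating the camera, and a zero reading is treated as "no depth", which is one plausible way to decide whether a feature point is usable.

```python
from typing import Optional, Tuple

# Hypothetical pinhole intrinsics (pixels); real values come from calibration.
FX, FY = 570.0, 570.0
CX, CY = 320.0, 240.0


def backproject(u: int, v: int, depth_mm: int,
                fx: float = FX, fy: float = FY,
                cx: float = CX, cy: float = CY) -> Optional[Tuple[float, float, float]]:
    """Convert pixel (u, v) with a raw depth reading into a camera-frame 3D point.

    Returns None when the sensor reported no depth (<= 0), i.e. the pixel
    cannot yield a valid feature point under this sketch's assumption.
    """
    if depth_mm <= 0:
        return None
    z = depth_mm / 1000.0      # millimetres -> metres (assumed depth unit)
    x = (u - cx) * z / fx      # pinhole model: u = fx * x / z + cx
    y = (v - cy) * z / fy      # pinhole model: v = fy * y / z + cy
    return (x, y, z)
```

For example, `backproject(320, 240, 1500)` returns `(0.0, 0.0, 1.5)`: the principal point maps onto the optical axis at 1.5 m.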

[0021] Step 2. Extract ORB features from two consecutive acquired images, compute the descriptor of each ORB feature point, and estimate the change in camera pose by matching features between adjacent images:
1) use the depth image to obtain the valid (effective) feature points;
2) match the feature points by their ORB features and depth values, and use the RANSAC algorithm to eliminate mismatched point pairs;
3) obtain the rotation matrix R and translation vector T between adjacent images, and thereby estimate the pose transformation of the camera;
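The source does not say how R and T are computed from the matched points. A common choice for RGB-D data, where each match gives a pair of 3D points, is the SVD-based least-squares rigid alignment (Kabsch method) wrapped in RANSAC to reject wrong pairs. The sketch below shows that swapped-in technique. It is not necessarily the patent's method, and the iteration count `iters` and inlier threshold `thresh` are assumed values.

```python
import numpy as np


def rigid_transform(P: np.ndarray, Q: np.ndarray):
    """Least-squares R, T such that Q ~= R @ p + T for matched Nx3 point sets (Kabsch/SVD)."""
    cp, cq = P.mean(axis=0), Q.mean(axis=0)
    H = (P - cp).T @ (Q - cq)                  # 3x3 cross-covariance of centred points
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # -1 would mean a reflection
    D = np.diag([1.0, 1.0, d])                 # force a proper rotation (det = +1)
    R = Vt.T @ D @ U.T
    T = cq - R @ cp
    return R, T


def ransac_pose(P: np.ndarray, Q: np.ndarray, iters: int = 200,
                thresh: float = 0.02, seed: int = 0):
    """Estimate R, T between two frames from matched 3D points, rejecting wrong pairs.

    P, Q: Nx3 matched points in the previous and current camera frames.
    thresh: inlier distance in metres (assumed value).
    """
    rng = np.random.default_rng(seed)
    n = len(P)
    best = np.zeros(n, dtype=bool)
    for _ in range(iters):
        idx = rng.choice(n, 3, replace=False)  # minimal sample: 3 point pairs
        R, T = rigid_transform(P[idx], Q[idx])
        err = np.linalg.norm(Q - (P @ R.T + T), axis=1)
        inliers = err < thresh
        if inliers.sum() > best.sum():         # keep the largest consensus set
            best = inliers
    if best.sum() < 3:
        raise ValueError("RANSAC found no consensus set")
    R, T = rigid_transform(P[best], Q[best])   # refit on all inliers
    return R, T, best
```

The returned mask `best` marks the point pairs kept as correct matches. The final refit on all inliers gives a less noisy R and T than any single three-point sample.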

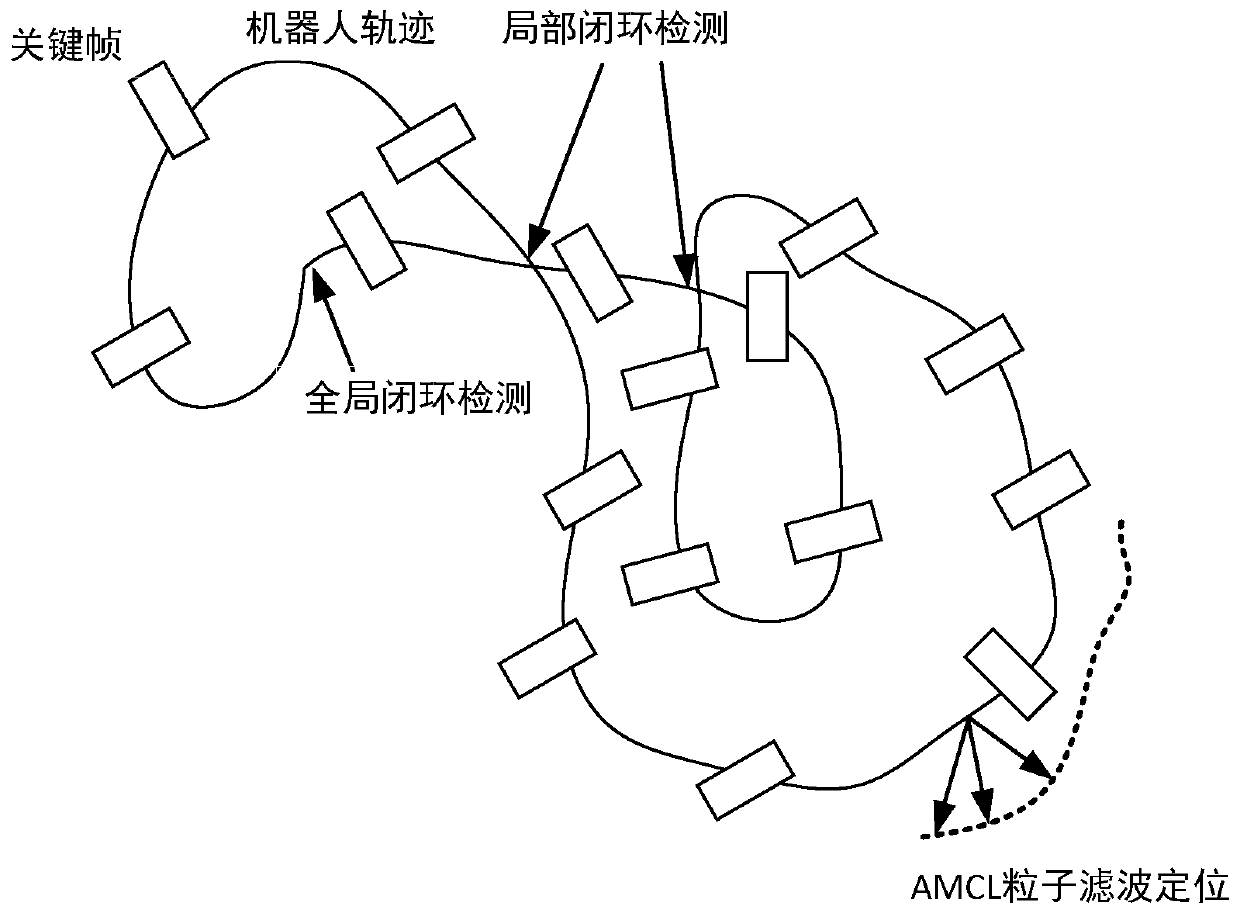

[0022] Step 3. As the robot moves, select, from several adjacent frames, the image that shares the most feature points and has the best match as the key ...
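The selection criterion in Step 3 ("most common feature points" and "best match") can be sketched as a score over candidate frames. Because ORB descriptors are binary strings, they are compared by Hamming distance. This sketch makes several assumptions that are not in the source. Each descriptor is stored as a Python int. Candidates are compared against a reference frame, hypothetically the previous key frame. `max_dist` is an assumed match threshold. Ties in match count are broken by the smaller mean distance.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Hamming distance between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_score(ref: Sequence[int], cand: Sequence[int],
                max_dist: int = 50) -> Tuple[int, float]:
    """Count descriptors in `ref` whose nearest neighbour in `cand` lies within max_dist.

    Returns (number of matches, mean Hamming distance of those matches).
    """
    if not cand:
        return 0, float("inf")
    dists = []
    for d in ref:
        best = min(hamming(d, c) for c in cand)   # brute-force nearest neighbour
        if best <= max_dist:
            dists.append(best)
    mean = sum(dists) / len(dists) if dists else float("inf")
    return len(dists), mean


def select_keyframe(ref: Sequence[int], candidates: List[Sequence[int]],
                    max_dist: int = 50) -> int:
    """Index of the candidate sharing the most matches with `ref`;
    ties are broken by the smaller mean distance (the 'best match')."""
    scores = [match_score(ref, cand, max_dist) for cand in candidates]
    return max(range(len(candidates)),
               key=lambda i: (scores[i][0], -scores[i][1]))
```

Real ORB descriptors are 256 bits, so a practical `max_dist` would be tuned on data. A cross-check or ratio test could also be added to reduce false matches.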
