Neural network model compression method and apparatus, storage medium and electronic device

The technology relates to a neural network model compression method and apparatus, a computer storage medium, and an electronic device. It addresses problems such as long feed-forward time, which limits the application of neural networks, and achieves strong feature extraction ability.

Examples

Embodiment 1

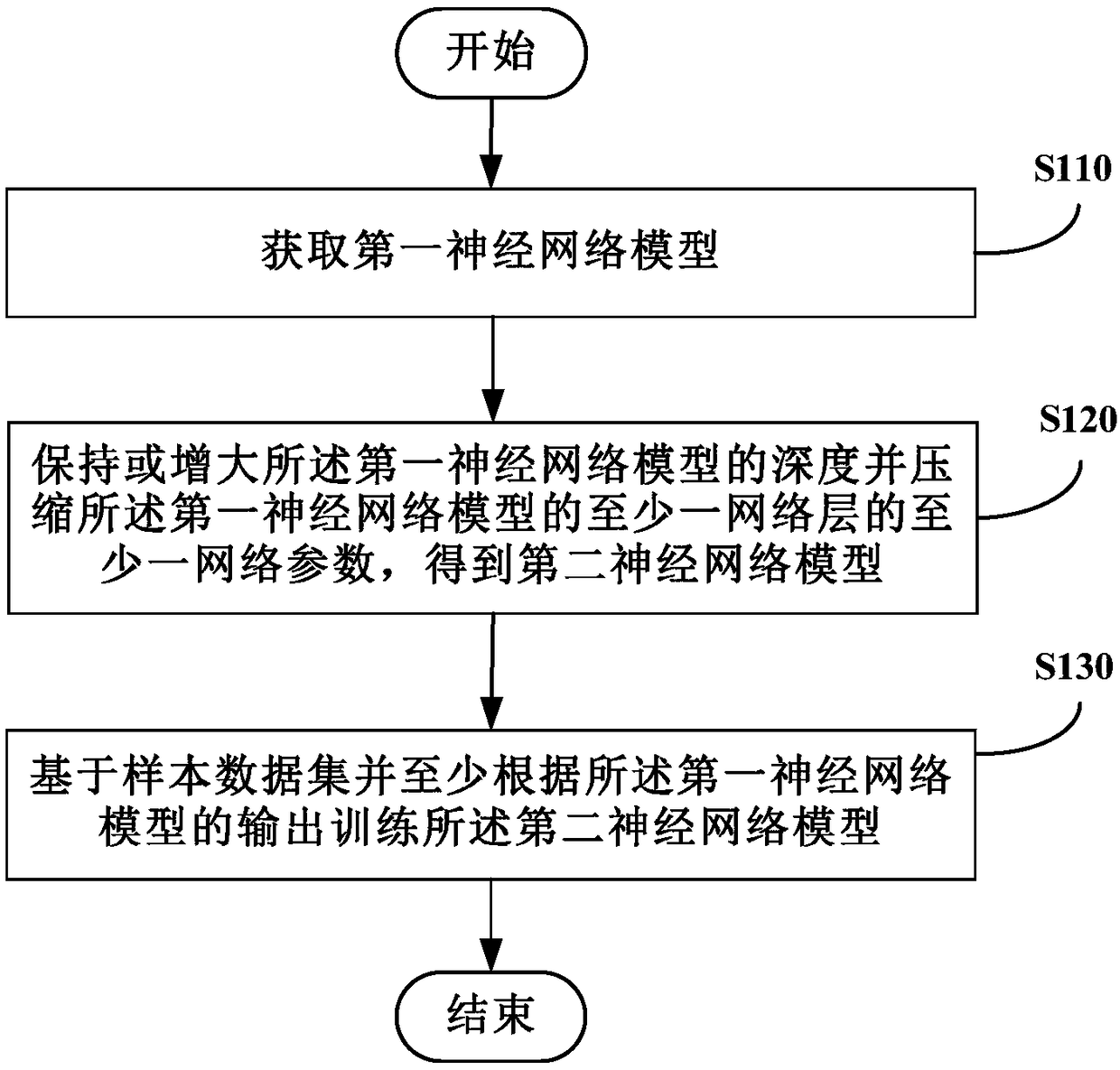

[0046] Figure 1 is a flowchart showing a neural network model compression method according to Embodiment 1 of the present invention.

[0047] Referring to Figure 1, in step S110, a first neural network model is acquired.

[0048] The first neural network model here may be a trained neural network model; that is, the neural network model compression method according to Embodiment 1 of the present invention is suitable for compressing any general neural network model.

[0049] In the embodiments of the present invention, the way the first neural network model is trained is not limited: it can be pre-trained by any conventional network training method. Depending on the functions the first neural network model is to implement, its characteristics, and its training requirements, it can be pre-trained using a supervised learning method, an unsupervised learning method, a reinforcement learning method, or a semi-supervised learning method.

...

Embodiment 2

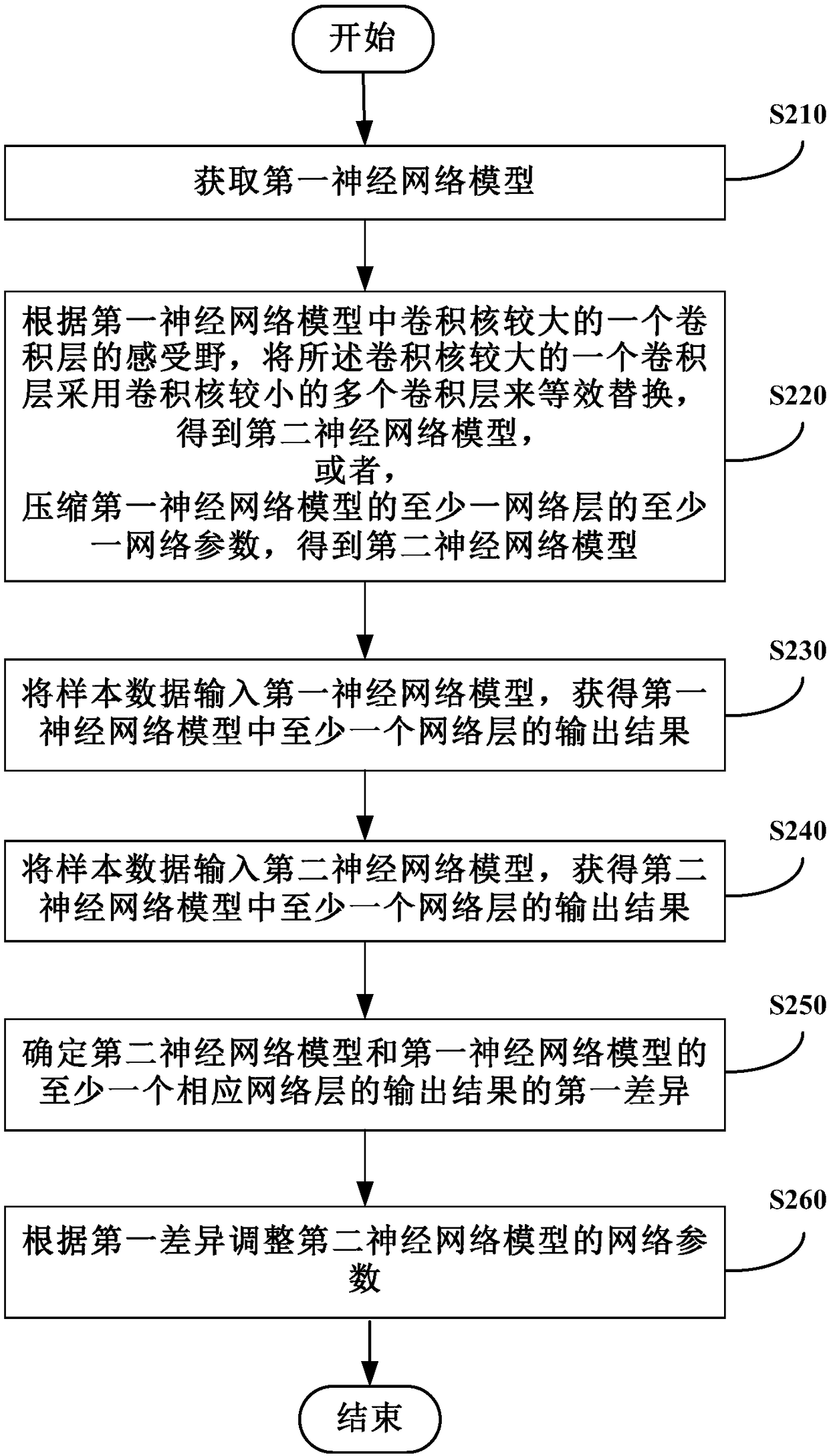

[0060] Figure 2 is a flowchart showing a neural network model compression method according to Embodiment 2 of the present invention.

[0061] Referring to Figure 2, in step S210, the first neural network model is acquired. The processing of this step is similar to that of the aforementioned step S110 and will not be repeated here.

[0062] According to an optional implementation of the present invention, in step S220, a convolutional layer with a larger convolution kernel in the first neural network model is equivalently replaced, according to its receptive field, with multiple convolutional layers with smaller convolution kernels, to obtain the second neural network model.

[0063] For the first neural network model, which usually includes convolutional layers, one convolutional layer with a large kernel can be replaced by multiple convolutional layers with a smaller kernel for the convolutional l...
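As a rough illustration of the receptive-field equivalence in step S220, the sketch below assumes stride-1, undilated convolutions and the same channel count C for the input and output of every layer; none of these assumptions is stated in the excerpt, and the function names are hypothetical. Under them, n stacked k×k layers have a receptive field of 1 + n(k - 1), so two 3×3 layers cover the same 5×5 field as one 5×5 layer and three 3×3 layers match one 7×7 layer (the familiar VGG-style factorization), while using fewer weights (18C² vs 25C², and 27C² vs 49C²).

```python
def stacked_receptive_field(kernel_size: int, num_layers: int) -> int:
    """Receptive field of `num_layers` stacked stride-1, undilated convolutions."""
    # Each stride-1 layer with kernel k grows the receptive field by (k - 1).
    return 1 + num_layers * (kernel_size - 1)


def conv_weight_count(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Number of weights in a 2D convolution (bias terms ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


def small_kernel_replacement(large_k: int, small_k: int = 3) -> int:
    """How many small-kernel layers match the receptive field of one large-kernel layer."""
    if (large_k - 1) % (small_k - 1) != 0:
        raise ValueError("receptive fields cannot be matched exactly")
    return (large_k - 1) // (small_k - 1)


if __name__ == "__main__":
    channels = 64  # hypothetical channel count, identical for every layer
    for large_k in (5, 7):
        n = small_kernel_replacement(large_k)
        # The stacked small kernels see exactly the same input window.
        assert stacked_receptive_field(3, n) == stacked_receptive_field(large_k, 1)
        before = conv_weight_count(large_k, channels, channels)
        after = n * conv_weight_count(3, channels, channels)
        print(f"{large_k}x{large_k} -> {n} x 3x3: weights {before} -> {after}")
```

With 64 channels this prints `5x5 -> 2 x 3x3: weights 102400 -> 73728` and `7x7 -> 3 x 3x3: weights 200704 -> 110592`. "Equivalent" here refers only to the receptive field: the stacked layers compute a different function from the single large-kernel layer, and the excerpt does not state whether nonlinearities are placed between them.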

Embodiment 3

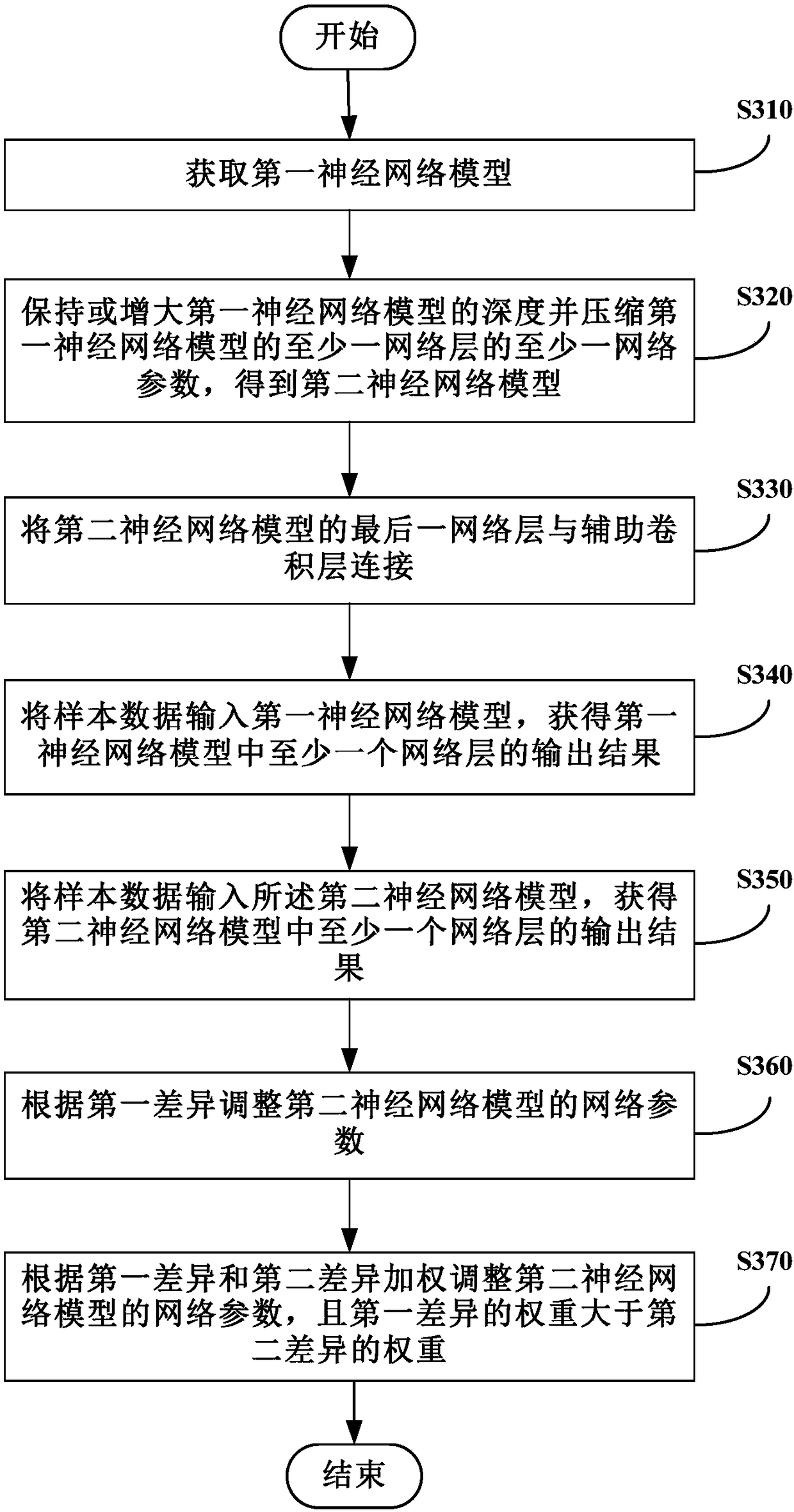

[0095] Figure 3 is a flowchart showing a neural network model compression method according to Embodiment 3 of the present invention.

[0096] Referring to Figure 3, in step S310, the first neural network model is acquired. The processing of this step is similar to that of the aforementioned step S110 and will not be repeated here.

[0097] In step S320, the depth of the first neural network model is maintained or increased, and at least one network parameter of at least one network layer of the first neural network model is compressed, to obtain a second neural network model.

[0098] The processing of this step is similar to the processing of the aforementioned step S120 or step S220, and will not be repeated here.
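The excerpt does not say which network parameter is compressed in step S320. As one hypothetical illustration only, the sketch below assumes the compressed parameter is the channel count (width) of the hidden convolutional layers: it keeps the depth (number of layers) unchanged, shrinks each hidden layer's output channels by a ratio, rewires the next layer's input channels to match, and compares weight counts. `ConvSpec`, `compress_widths`, `total_weights`, and the example channel counts are illustrative names and values, not taken from the patent.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class ConvSpec:
    """Hypothetical description of one convolutional layer (not from the patent)."""
    kernel_size: int
    in_channels: int
    out_channels: int

    def weight_count(self) -> int:
        # k * k * C_in * C_out weights; bias terms are ignored for simplicity.
        return self.kernel_size ** 2 * self.in_channels * self.out_channels


def compress_widths(layers: List[ConvSpec], ratio: float) -> List[ConvSpec]:
    """Shrink each hidden layer's output channels by `ratio`, keeping the depth.

    The first layer's input channels and the last layer's output channels are
    left unchanged, so the compressed model keeps the same input/output shape.
    """
    if not layers:
        return []
    compressed: List[ConvSpec] = []
    prev_out = layers[0].in_channels
    for i, layer in enumerate(layers):
        is_last = i == len(layers) - 1
        out = layer.out_channels if is_last else max(1, int(layer.out_channels * ratio))
        # Rewire the input channels to match the (possibly shrunk) previous layer.
        compressed.append(replace(layer, in_channels=prev_out, out_channels=out))
        prev_out = out
    return compressed


def total_weights(layers: List[ConvSpec]) -> int:
    """Sum of convolution weights over all layers."""
    return sum(layer.weight_count() for layer in layers)


if __name__ == "__main__":
    # Hypothetical three-layer "first model"; channel counts are illustrative.
    first_model = [ConvSpec(3, 3, 64), ConvSpec(3, 64, 128), ConvSpec(3, 128, 10)]
    second_model = compress_widths(first_model, ratio=0.5)
    print(len(first_model), total_weights(first_model))    # 3 86976
    print(len(second_model), total_weights(second_model))  # 3 25056
```

Both models have three layers, so the depth is maintained while the weight count drops from 86976 to 25056. The "increase the depth" variant could be modeled by inserting layers before compressing; like the width-based choice itself, that is an assumption rather than something the excerpt specifies.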

[0099] Since the second neural network model is generated by compressing the first neural network model, it has fewer network parameters. To improve the feature expression ability of the second neural network model, in the neural netwo...
