Depth image enhancement method fusing RGB image information

The method applies to depth image enhancement that integrates RGB image information. It addresses the limited computing speed and low real-time performance of the algorithm, and it improves accuracy.

Detailed Description of the Embodiments

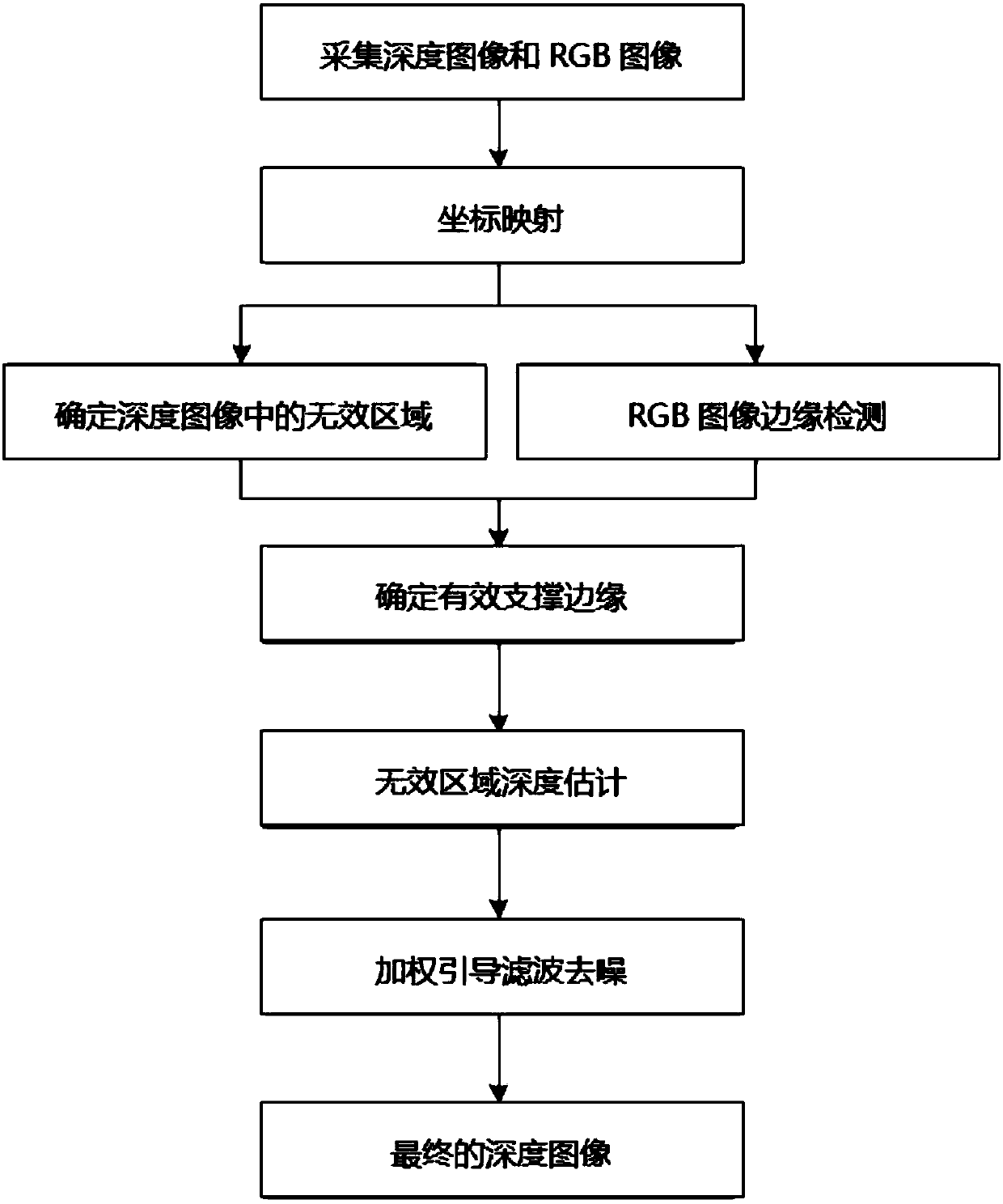

[0050] The depth image enhancement method fusing RGB image information proposed by the present invention is described in further detail below with reference to the accompanying drawing and a specific embodiment. The advantages and features of the present invention will be apparent from the following description and the claims. Note that the drawings are in a highly simplified form and are not drawn to precise scale; they serve only to illustrate the embodiments of the present invention conveniently and clearly.

[0051] Referring to Figure 1, a depth image enhancement method fusing RGB image information comprises the following steps:

[0052] S1: Obtain the coordinate mapping relationship between the depth image and the RGB image;

[0053] S2: Preprocess the depth image and the RGB image separately; extract the invalid area in the depth image and mark the pixel positions of the invalid area;

[0054] Perform edge detection on the R...
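The invalid-area extraction named in S2 can be illustrated with a minimal sketch. It is not the patent's implementation: it assumes that invalid depth pixels are encoded as 0 (a common sensor convention that the text does not state), and the function name `mark_invalid_area` and its `invalid_value` parameter are hypothetical.

```python
import numpy as np


def mark_invalid_area(depth, invalid_value=0):
    """Return a boolean mask and the (row, col) positions of invalid depth pixels.

    Assumption: a pixel is invalid when it equals `invalid_value`
    (0 by default) or, for floating-point images, when it is NaN or infinite.
    """
    depth = np.asarray(depth)
    mask = depth == invalid_value
    if np.issubdtype(depth.dtype, np.floating):
        # Float depth maps sometimes use NaN/inf for missing measurements.
        mask |= ~np.isfinite(depth)
    # argwhere lists the marked pixel positions in row-major order.
    positions = np.argwhere(mask)
    return mask, positions


# Toy 3x3 depth image (millimetres) with two holes.
depth = np.array([[0, 1200, 1210],
                  [1190, 0, 1205],
                  [1185, 1195, 1200]], dtype=np.uint16)
mask, positions = mark_invalid_area(depth)
```

Here `mask` has the same shape as the depth image and `positions` holds one `(row, col)` pair per marked pixel, so later steps could use either form to locate the pixels that need filling.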
