Deep feature bag-based classification method

A classification method based on deep feature bags, applied in image analysis, image data processing, instruments, and related fields. It addresses the heavy computation and long running time of 3D convolutional neural networks, with the effects of avoiding over-fitting, reducing time consumption, and improving classification performance.


Examples

Embodiment 1

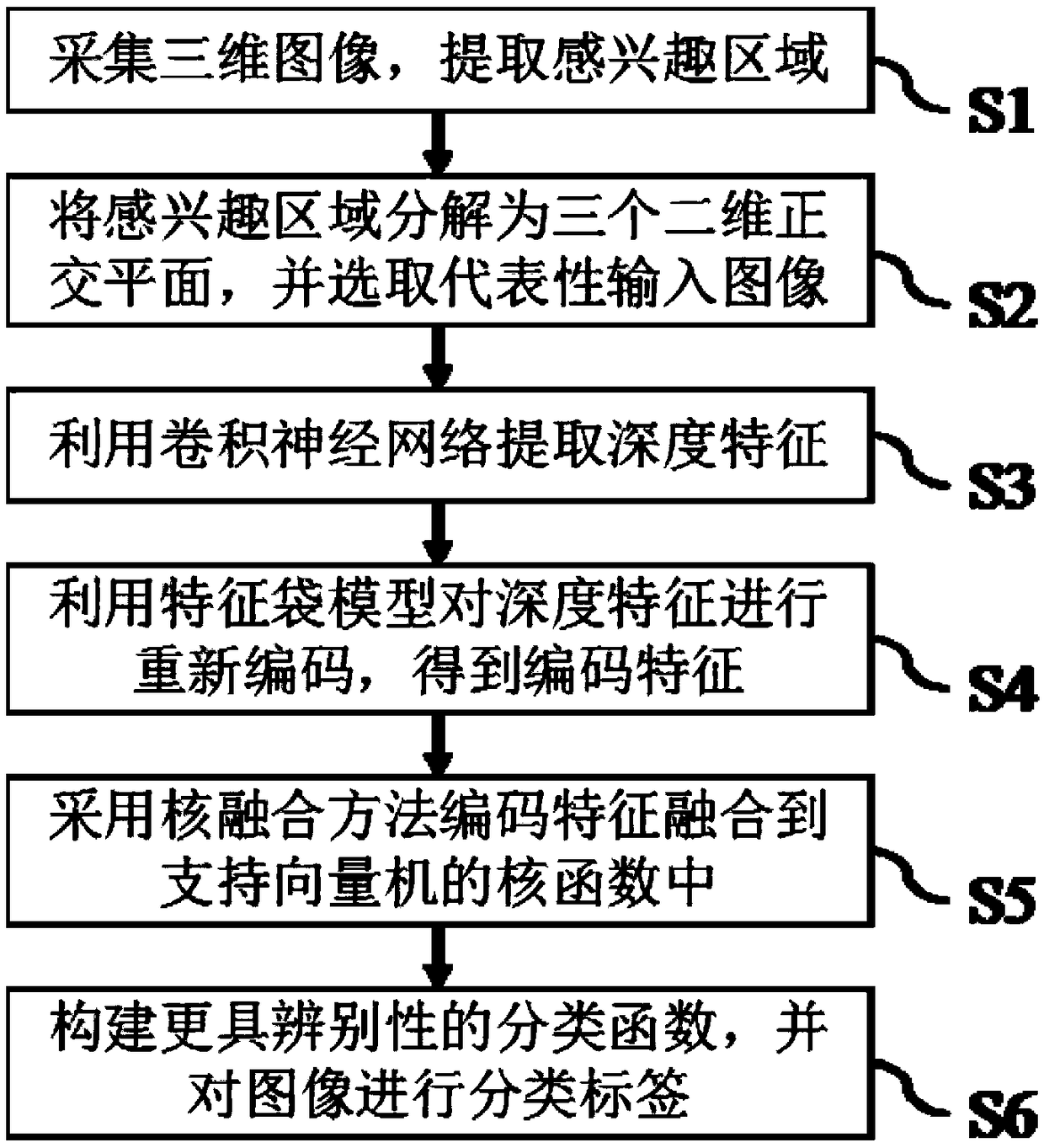

[0040] As shown in Figures 1-3, a classification method based on deep feature bags includes the following steps in order:

[0041] S1. Acquire a 3D image of the target object, then outline and segment the region of interest;

[0042] S2. Decompose the 3D image of the region of interest obtained in step S1 into two-dimensional images along three orthogonal planes, giving three groups of two-dimensional images. From each group, select the two-dimensional image containing the most pixels as the input image for that orthogonal plane;

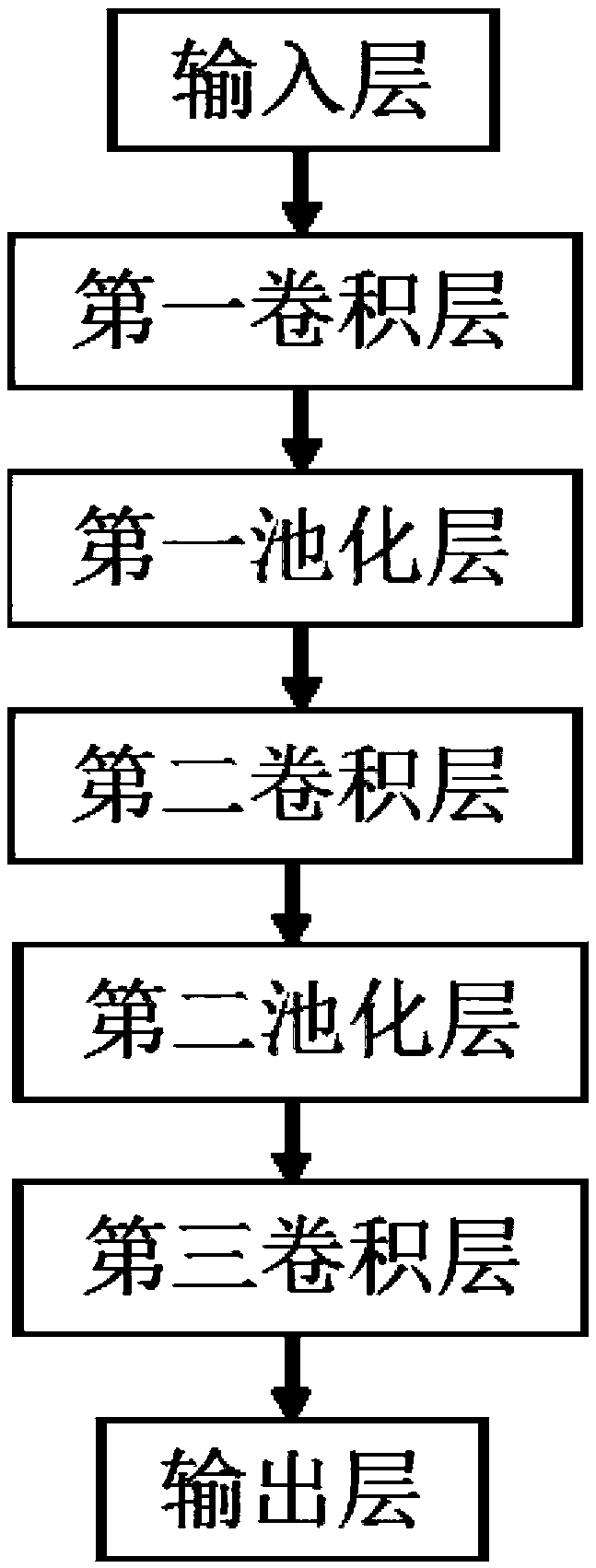

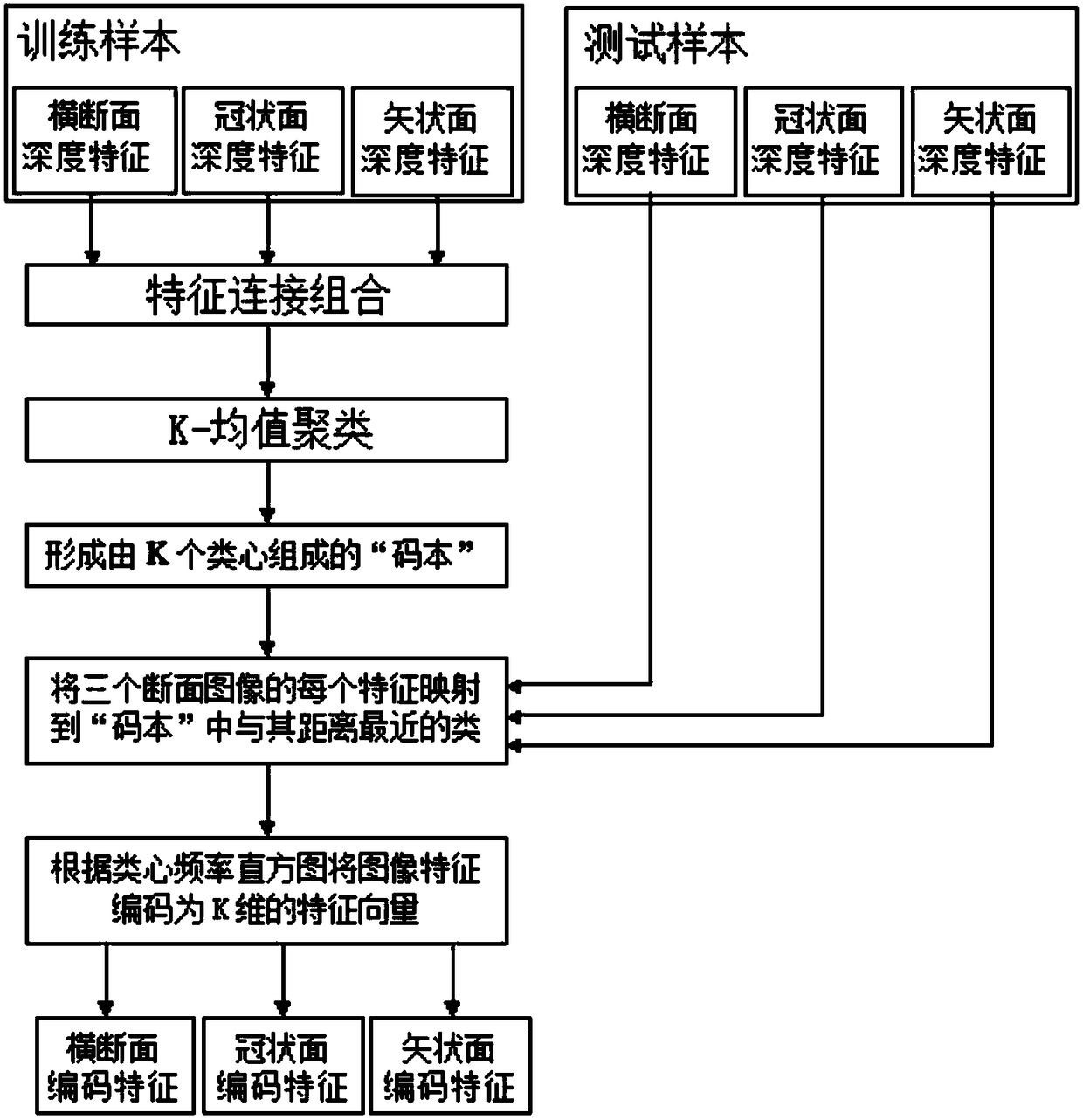

[0043] S3. Use a convolutional neural network to extract features from the input images of the three two-dimensional orthogonal planes obtained in step S2, yielding the deep features of the three planes. These deep features include training sample deep features and t...
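The slice selection described in step S2 can be sketched as follows. This is a minimal illustration rather than the patent's implementation: it assumes the image and the segmented region of interest are available as NumPy arrays of the same shape, it counts region-of-interest pixels per slice, and the function name `select_orthogonal_slices` is hypothetical. Which array axis corresponds to the transverse, coronal, or sagittal plane depends on how the scan is stored and is not specified here.

```python
import numpy as np

def select_orthogonal_slices(volume, mask):
    """For each of the three axes, pick the 2D slice with the most ROI pixels.

    volume: 3D array of intensities.
    mask:   3D boolean array of the same shape marking the region of interest.
    Returns a list of (slice_index, 2D_image) pairs, one per axis.
    """
    if volume.ndim != 3 or volume.shape != mask.shape:
        raise ValueError("volume and mask must be 3D arrays of the same shape")
    roi = np.where(mask, volume, 0)  # zero out everything outside the ROI
    selected = []
    for axis in range(3):
        other_axes = tuple(a for a in range(3) if a != axis)
        # Number of ROI pixels in every slice perpendicular to this axis.
        counts = mask.sum(axis=other_axes)
        best = int(np.argmax(counts))  # first slice with the maximum count
        selected.append((best, np.take(roi, best, axis=axis)))
    return selected

# Toy data (assumption): an 8x8x8 volume with a box-shaped ROI.
volume = np.arange(8 * 8 * 8, dtype=float).reshape(8, 8, 8)
mask = np.zeros((8, 8, 8), dtype=bool)
mask[2:5, 1:7, 3:6] = True
mask[3, 0, 3:6] = True  # extra pixels so one slice along axis 0 is largest
slices = select_orthogonal_slices(volume, mask)
```

Each returned 2D image would then serve as the network input for its plane in step S3. Ties are resolved by taking the first maximal slice, which is a design choice of this sketch.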

Embodiment 2

[0059] A classification method based on deep feature bags. All other features are the same as in Embodiment 1; the difference is that in step S5 the kernel function is a Gaussian kernel. In the Gaussian kernel expression, $D_{RBF}(x_i, x_j)$ is the Gaussian distance; V = 1, 2 or 3 denotes the transverse, coronal and sagittal planes respectively, so that $\omega_1$, $\omega_2$, $\omega_3$ are the weighting coefficients of the transverse, coronal and sagittal planes, each of which has its own Gaussian distance; $x_i$ and $x_j$ are the i-th and j-th samples in the training sample features; $\sigma$ is a hyperparameter determined during training; k indexes the k-th feature in the feature vector; and N is the total number of training samples.
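One way to combine per-plane Gaussian kernels with plane weights is sketched below. The exact expression from the patent is not available in this text, so the form used here is an assumption: each plane gets a standard Gaussian (RBF) similarity $\exp(-\lVert x_i - x_j \rVert^2 / (2\sigma^2))$, and the three are combined as a weighted sum with coefficients $\omega_1$, $\omega_2$, $\omega_3$. The function names and the toy feature matrices are hypothetical.

```python
import numpy as np

def plane_rbf(features, sigma):
    """Gaussian (RBF) similarity matrix for one plane.

    features: array of shape (n_samples, n_features).
    """
    sq_norms = (features ** 2).sum(axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * features @ features.T
    sq_dists = np.maximum(sq_dists, 0.0)  # guard against negative round-off
    return np.exp(-sq_dists / (2.0 * sigma ** 2))

def weighted_multiplane_kernel(plane_features, weights, sigma):
    """Weighted sum of per-plane RBF kernels (assumed combination rule)."""
    if len(plane_features) != len(weights):
        raise ValueError("one weight is required per plane")
    return sum(w * plane_rbf(f, sigma) for w, f in zip(weights, plane_features))

# Toy data (assumption): 5 samples with 4 deep features per plane.
rng = np.random.default_rng(0)
planes = [rng.normal(size=(5, 4)) for _ in range(3)]
weights = [0.5, 0.3, 0.2]  # hypothetical values for the three plane weights
K = weighted_multiplane_kernel(planes, weights, sigma=1.0)
```

Because a non-negative weighted sum of valid kernels is itself a valid kernel, a matrix built this way can be passed to any kernel-based classifier that accepts a precomputed Gram matrix.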

[0060] In step S6, the classification function expression is:

[0061]

[0062] where $\alpha_i$ indicates the weight coefficient o...
