Fast intra-frame coding method for depth video

A fast intra-frame coding technique for depth video, applied in the field of video coding. It addresses the increased coding complexity of existing methods, which do not consider depth video texture and lack study of the distribution characteristics of depth levels. Its effects are reduced computational complexity and savings in rough mode selection time and PU mode traversal time.

Embodiment 1

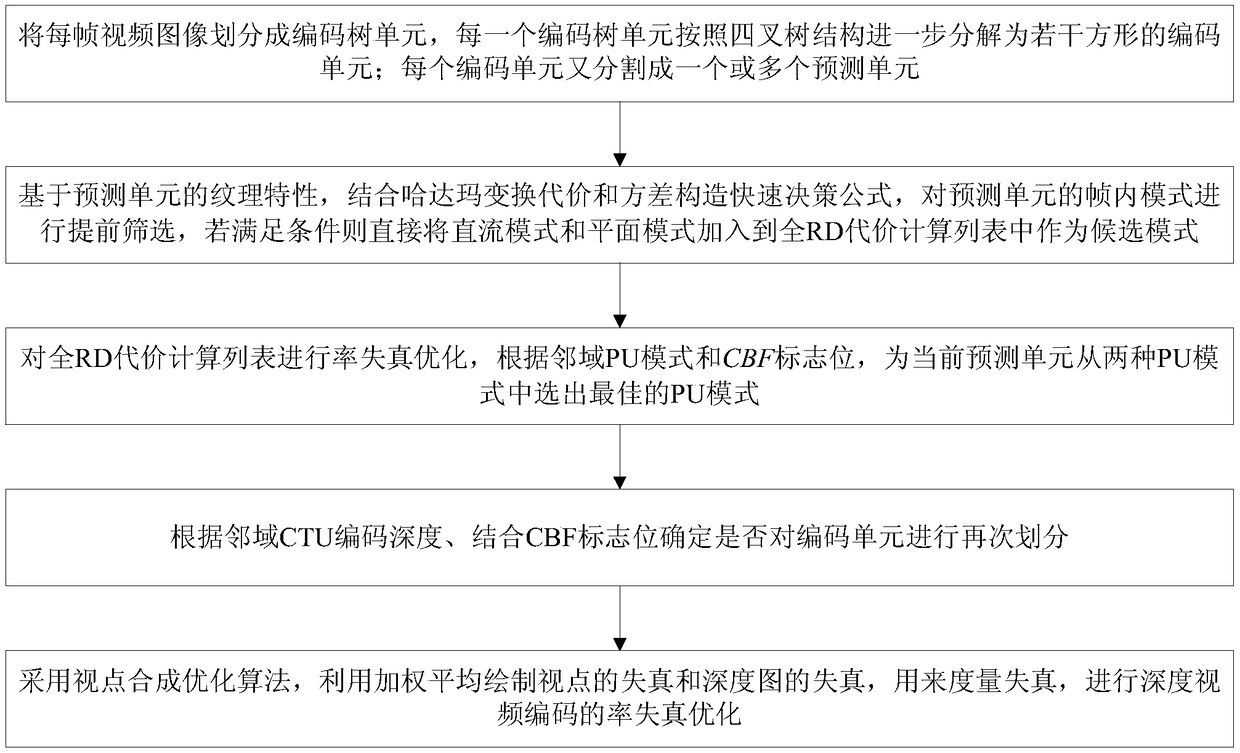

[0045] To overcome the shortcomings of the existing technology, this embodiment of the present invention proposes a fast intra-frame encoding method for depth video based on content characteristics, which reduces the encoding time without significantly reducing the video quality. The technical solution consists mainly of the following steps:

[0046] 101: Divide each frame of video image into coding tree units, and each coding tree unit is further decomposed into several square coding units according to the quadtree structure; each coding unit is further divided into one or more prediction units;

[0047] 102: Based on the texture characteristics of the prediction unit, and using the Hadamard transform cost together with the variance, construct a fast decision formula that screens the intra-frame modes of the prediction unit in advance; if the conditions are met, directly add the DC mode and planar mode to the full RD cost calculation list as a candid...
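The two quantities named in step 102, the variance of a prediction unit and a Hadamard transform cost, can be sketched as follows. This is only an illustrative sketch: the decision formula itself is cut off in the text above, so the rule `is_smooth_pu`, its thresholds `var_thresh` and `satd_thresh`, and the flat predictor `flat_pred` (a stand-in for a DC-style prediction) are all hypothetical and are not taken from the patent.

```python
def hadamard_matrix(n):
    """Sylvester-construction Hadamard matrix of order n (n must be a power of two)."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def satd(residual):
    """Sum of absolute Hadamard-transformed differences of a square residual block."""
    h = hadamard_matrix(len(residual))
    # The Sylvester matrix is symmetric, so H * R * H is the 2-D transform.
    coeffs = matmul(matmul(h, residual), h)
    return sum(abs(c) for row in coeffs for c in row)


def variance(block):
    """Population variance of all samples in the block."""
    samples = [v for row in block for v in row]
    mean = sum(samples) / len(samples)
    return sum((v - mean) ** 2 for v in samples) / len(samples)


def is_smooth_pu(block, flat_pred, var_thresh=1.0, satd_thresh=16):
    """Hypothetical screening rule; its form and thresholds are assumptions."""
    residual = [[v - flat_pred for v in row] for row in block]
    return variance(block) <= var_thresh and satd(residual) <= satd_thresh


flat = [[128] * 4 for _ in range(4)]                # uniform depth region
edge = [[50, 50, 200, 200] for _ in range(4)]       # sharp vertical depth edge
print(is_smooth_pu(flat, 128))
print(is_smooth_pu(edge, 128))
```

In this sketch a uniform block passes the check and a block containing a sharp depth edge does not, which is the kind of distinction a texture-based screening step would rely on before deciding whether the DC and planar modes alone are worth a full RD cost evaluation.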

Embodiment 2

[0060] The scheme in Embodiment 1 is described further below with specific examples and calculation formulas; see the following description for details:

[0061] The video sequence Kendo is used as an example, and the implementation of the algorithm is illustrated by encoding it. The input video sequence is ordered as follows: color viewpoint 3, depth viewpoint 3, color viewpoint 1, depth viewpoint 1, color viewpoint 5, depth viewpoint 5. The color viewpoints are encoded with the original 3D-HEVC encoding method, and the depth viewpoints are encoded with the method proposed in this embodiment of the invention.

[0062] 1. Coding tree unit division

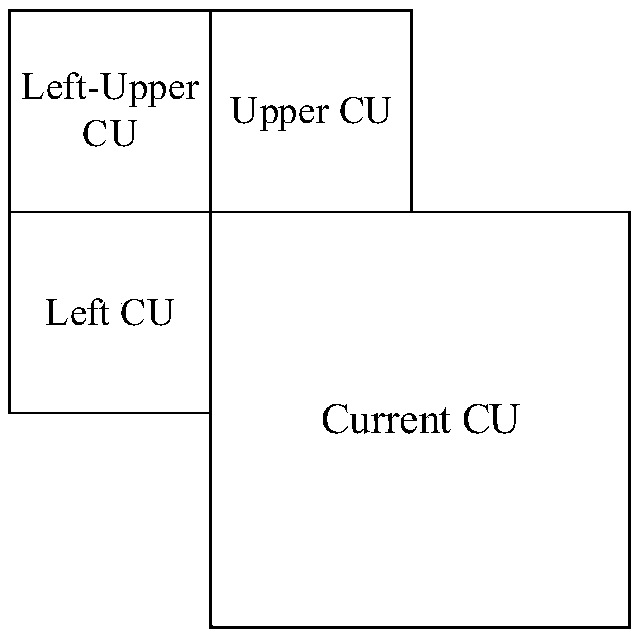

[0063] HEVC uses block-based coding, and the block size can change adaptively through partitioning. When the encoder processes a frame, it first divides the image into coding tree units (Coding Tree Unit, CTU) of 64×64 pixels. Each coding tree unit c...
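The CTU division and quadtree decomposition described here can be sketched as follows. This is a minimal illustration under stated assumptions: the 1024×768 frame size for Kendo, the minimum CU size of 8, and the split rule `split_top_left` are assumptions made for the example and do not come from the text; a real encoder decides each split by comparing rate-distortion costs.

```python
import math

CTU_SIZE = 64     # CTU size stated in the text
MIN_CU_SIZE = 8   # assumption: smallest CU size in a common HEVC configuration


def ctu_grid(width, height, ctu=CTU_SIZE):
    """Number of CTU columns and rows needed to cover a frame."""
    return math.ceil(width / ctu), math.ceil(height / ctu)


def split_quadtree(x, y, size, should_split):
    """Recursively split a square block into CUs; returns (x, y, size) leaves."""
    if size > MIN_CU_SIZE and should_split(x, y, size):
        half = size // 2
        leaves = []
        for dy in (0, half):
            for dx in (0, half):
                leaves += split_quadtree(x + dx, y + dy, half, should_split)
        return leaves
    return [(x, y, size)]


def split_top_left(x, y, size):
    """Hypothetical split rule: only the block at the CTU origin keeps splitting."""
    return x == 0 and y == 0


cols, rows = ctu_grid(1024, 768)   # assumed Kendo frame size
cus = split_quadtree(0, 0, CTU_SIZE, split_top_left)
print(cols, rows, cols * rows)
print(cus)
```

Under these assumptions the frame is covered by a 16×12 grid of CTUs, and the example CTU decomposes into ten CUs of sizes 32, 16, and 8 whose areas together cover the full 64×64 block.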

Embodiment 3

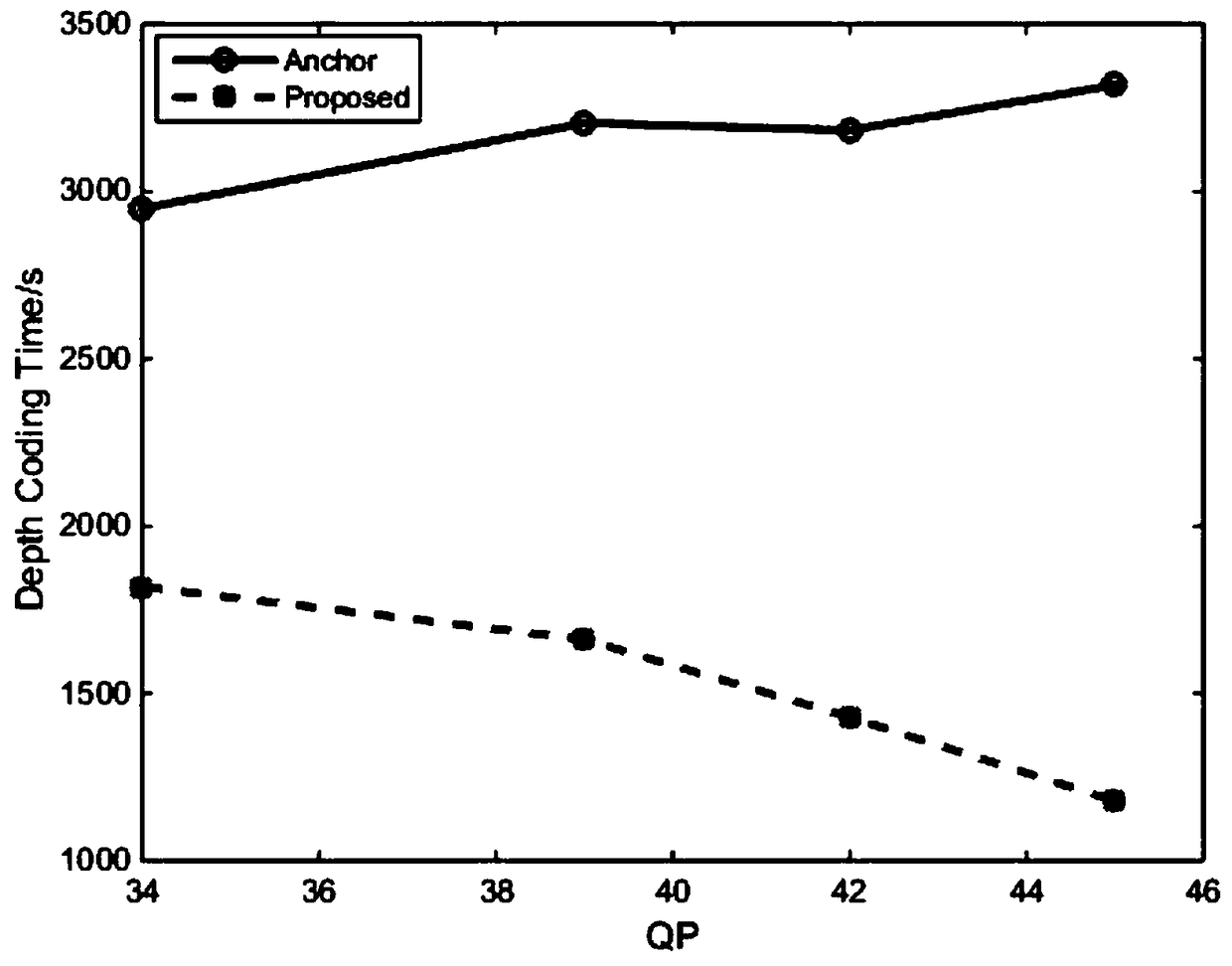

[0107] The feasibility of the schemes in Embodiments 1 and 2 is verified below using concrete experimental data; see the following description for details:
