Method and circuit for accelerating the pooling layer operation of a neural network

An accelerated computing technique for neural networks, applied to the accelerated computation of pooling layers. It addresses the large computational load of neural networks and achieves high computing throughput, reduced chip area, and improved reuse efficiency.


Embodiment Construction

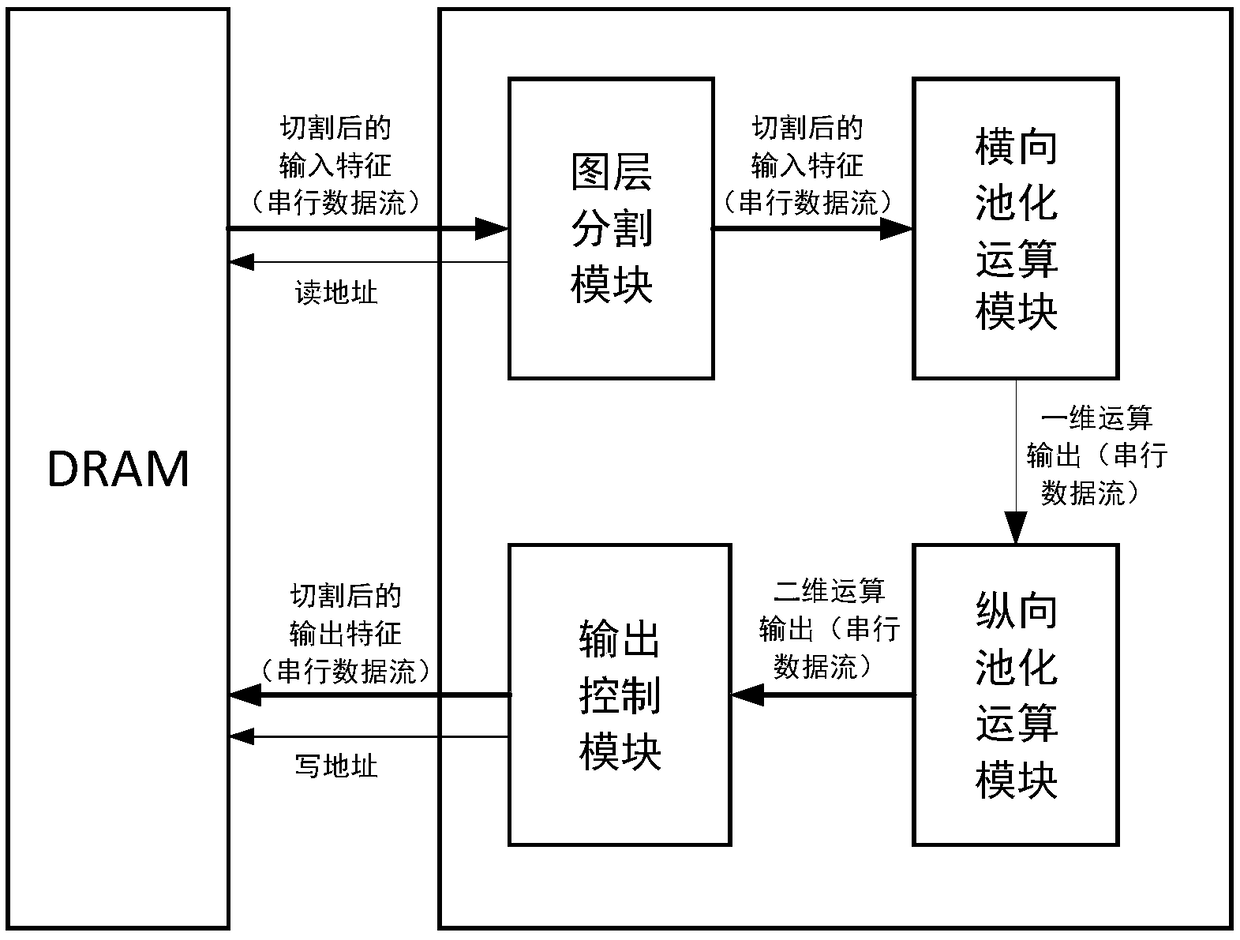

[0036] In the present invention, the basic block diagram of the circuit for high-efficiency accelerated pooling is shown in Figure 1. The design works as follows:

[0037] The input feature layers for the pooling operation are stored in external memory (DRAM). First, the layer segmentation module divides each input layer along the width direction, based on the layer's width information, so that each divided layer can be fed into the vertical pooling operation module. (The vertical pooling operation module limits the maximum layer width, so input layers that are particularly wide must be split.) This division is purely logical: it requires no additional operations on the input layer and affects only the order in which the data is read from DRAM. The layer segmentation module then sends the data stream of the segmented input features to the horizontal p...
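The logical width-wise division described above can be illustrated with a minimal Python sketch. It is not the patented circuit: the function names `split_columns` and `read_order`, the `max_width` parameter, the row-major DRAM layout, and the absence of any overlap between strips are all assumptions made for illustration. The sketch only shows that splitting need not move any data, since it merely changes the order in which offsets are read.

```python
from typing import Iterator, List, Tuple


def split_columns(width: int, max_width: int) -> List[Tuple[int, int]]:
    """Return half-open column ranges [start, end), each at most max_width wide.

    max_width stands in for the vertical pooling module's width limit
    (hypothetical parameter; the source gives no concrete value).
    """
    if width <= 0 or max_width <= 0:
        raise ValueError("width and max_width must be positive")
    return [(s, min(s + max_width, width)) for s in range(0, width, max_width)]


def read_order(height: int, width: int, max_width: int) -> Iterator[int]:
    """Yield element offsets strip by strip, assuming a row-major layer in DRAM.

    The layer itself is never copied or rearranged; only the read order changes.
    """
    for start, end in split_columns(width, max_width):
        for row in range(height):
            for col in range(start, end):
                yield row * width + col


# A 2 x 5 layer with an assumed width limit of 2 columns per strip.
strips = split_columns(5, 2)
order = list(read_order(2, 5, 2))
print(strips)
print(order)
```

Every element is read exactly once, and each strip is streamed row by row before the next strip begins. A real design might need neighbouring strips to share boundary columns, depending on the pooling window and stride; the sketch leaves that out.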
