Mobile robot indoor autonomous localization method combining scene point and line features

A mobile robot autonomous positioning technology, applied in the fields of instruments, image data processing and computing, that addresses depth "black holes" (missing depth values) and inaccurate depth values, with the effects of simple, inexpensive positioning equipment, fewer positioning failures, and strong robustness.


Examples

Embodiment 1

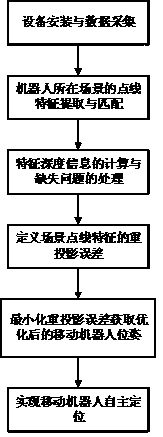

[0034] Embodiment 1: referring to Figures 1 to 4, the specific operating steps of this mobile robot indoor autonomous positioning method combining scene point and line features are as follows:

[0035] 1) Equipment installation and data collection: mount the depth camera on top of the mobile robot, install universal wheels capable of moving in any direction on the bottom of the robot, and place a computer inside the robot to process the environmental data acquired by the depth camera;

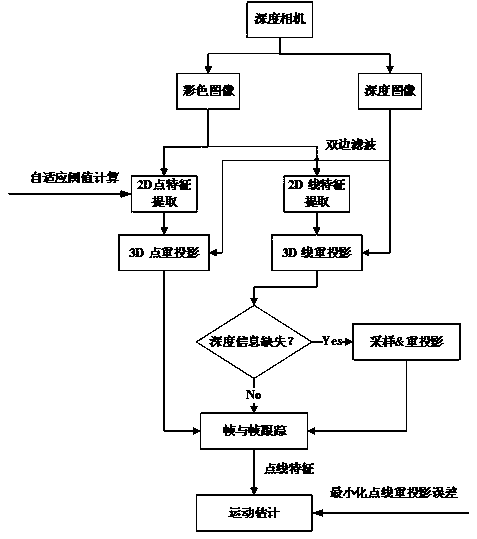

[0036] 2) Extraction and matching of point and line features of the robot's scene: the depth camera acquires color and depth images of the scene in which the robot is located; the collected images are denoised as a preprocessing step, and point features and line features are extracted from the color image and matched;

[0037] 3) Calculation of feature depth information and handling of missing depth: the depth camera calculates depth information and solves the problem of missing depth ...
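The source text for step 3) is truncated, so the patent's actual missing-depth procedure is not available here. As a minimal sketch only, the Python below shows the standard pinhole back-projection of a feature pixel (u, v) with depth Z into a camera-frame point, X = (u - c_x)Z/f_x, Y = (v - c_y)Z/f_y. It assumes, as a hypothetical convention, that a missing depth reading is stored as 0 and is replaced by the median of valid depths in a small window around the pixel; the names `depth_at` and `back_project`, the window `radius`, and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative and not taken from the patent.

```python
from statistics import median
from typing import List, Optional, Tuple

# Depth image in metres, stored as a list of rows.
# Assumption: 0.0 marks a missing ("black hole") reading.
Depth = List[List[float]]


def depth_at(depth: Depth, u: int, v: int, radius: int = 2) -> Optional[float]:
    """Return the depth at pixel (u, v). If it is missing, fall back to the
    median of valid neighbours within `radius` (a hypothetical hole-filling
    rule, not the patent's method). Returns None if no valid depth is nearby."""
    d = depth[v][u]
    if d > 0:
        return d
    h, w = len(depth), len(depth[0])
    neighbours = [
        depth[y][x]
        for y in range(max(0, v - radius), min(h, v + radius + 1))
        for x in range(max(0, u - radius), min(w, u + radius + 1))
        if depth[y][x] > 0
    ]
    return median(neighbours) if neighbours else None


def back_project(u: float, v: float, z: float,
                 fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole model: pixel (u, v) with depth z -> camera-frame point (X, Y, Z)."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)
```

Features whose depth cannot be recovered (`depth_at` returns None) would simply be left as 2D-only observations in this sketch.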

Embodiment 2

[0040] Embodiment 2: This embodiment is basically the same as Embodiment 1, with the following distinguishing features:

[0041] In step 1), the depth camera and the universal-wheel mobile chassis communicate with the computer through the Robot Operating System (ROS) interface, and all data computation and processing is carried out within ROS. The depth images collected by the depth camera are denoised as a preprocessing step using a bilateral filtering algorithm.
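A bilateral filter smooths each pixel with weights that fall off both with spatial distance and with difference in value, so noise on flat surfaces is reduced while depth discontinuities at object boundaries are preserved. The pure-Python sketch below illustrates the generic algorithm on a small depth image stored as a list of rows; the kernel `radius`, `sigma_s` (spatial, in pixels) and `sigma_r` (range, in metres) are hypothetical values, since the patent does not state its parameters.

```python
import math
from typing import List


def bilateral_filter(img: List[List[float]], radius: int = 2,
                     sigma_s: float = 2.0, sigma_r: float = 0.05) -> List[List[float]]:
    """Generic bilateral filter. Each output pixel is a weighted mean of its
    neighbours, weighted by a spatial Gaussian (sigma_s) times a range
    Gaussian on the value difference (sigma_r)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            centre = img[y][x]
            num = den = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if not (0 <= yy < h and 0 <= xx < w):
                        continue  # ignore neighbours outside the image
                    val = img[yy][xx]
                    w_s = math.exp(-(dx * dx + dy * dy) / (2 * sigma_s ** 2))
                    w_r = math.exp(-((val - centre) ** 2) / (2 * sigma_r ** 2))
                    num += w_s * w_r * val
                    den += w_s * w_r
            # den > 0 because the centre pixel always contributes weight 1
            out[y][x] = num / den
    return out
```

In practice, OpenCV's `cv2.bilateralFilter(src, d, sigmaColor, sigmaSpace)` performs the same operation efficiently on a floating-point image; the loop version here only makes the weighting explicit.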

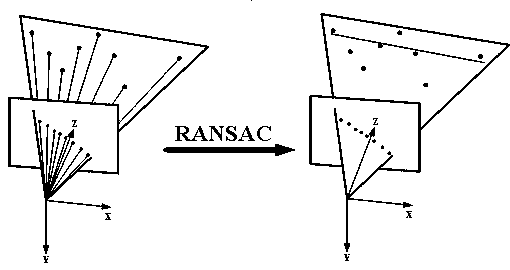

[0042] In step 2), the extracted point features are ORB features described by BRIEF descriptors, and feature points are matched with the K-nearest-neighbor (KNN) algorithm using their binary descriptors. The extracted line features are LSD line features described by LBD descriptors, and appearance and geometric constraints of the line features are used to achieve effective line matching. The ORB feature extraction algorithm uses an adaptiv...
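Because ORB/BRIEF descriptors are binary strings, the natural distance for KNN matching is the Hamming distance (the number of differing bits). The sketch below finds the k = 2 nearest training descriptors for each query descriptor by brute force and keeps a match only when the best distance is clearly smaller than the second best. That ratio test, with a hypothetical `ratio` of 0.8, is a common way to filter KNN matches and is an assumption here, not something the source specifies; descriptors are represented as Python integers for simplicity.

```python
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def knn_match(query: List[int], train: List[int],
              ratio: float = 0.8) -> List[Tuple[int, int, int]]:
    """Brute-force KNN (k = 2) matching on Hamming distance.

    Returns (query_index, train_index, distance) for each query descriptor
    whose nearest neighbour passes the ratio test (assumed filter):
    best < ratio * second_best. Needs at least two training descriptors."""
    matches: List[Tuple[int, int, int]] = []
    if len(train) < 2:
        return matches
    for qi, q in enumerate(query):
        dists = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        (d1, t1), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((qi, t1, d1))
    return matches
```

Ambiguous descriptors that are equally close to two candidates are rejected, which reduces false matches in repetitive indoor textures. With OpenCV, the equivalent step is typically `cv2.BFMatcher(cv2.NORM_HAMMING).knnMatch(des1, des2, k=2)`.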

Embodiment 3

[0046] Embodiment 3: The indoor autonomous positioning method of the mobile robot combining scene point and line features is as follows:

[0047] As shown in Figure 2, a depth camera (RGB-D) is mounted on the mobile robot platform. In this specific embodiment the depth camera can be Microsoft's inexpensive Kinect v2, which has a color image resolution of 1920 × 1080, a depth image resolution of 512 × 424, a forward-looking horizontal field of view of 70 degrees, and a vertical field of view of 60 degrees, meeting the requirements of the positioning method of the present invention. The mobile platform is a Kobuki mobile chassis with a 3-axis digital gyroscope whose measurement range is ±250 degrees/second; this chassis meets the requirements of autonomous positioning and subsequent navigation. The collection of sensor data, data processing and calculation, and background data optimization are all completed b...
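The stated depth resolution and fields of view are enough to estimate ideal pinhole intrinsics, which back-projecting feature depth relies on: f_x = (W/2)/tan(HFOV/2) and f_y = (H/2)/tan(VFOV/2). The sketch below applies these formulas to the 512 × 424 depth image with 70° × 60° fields of view. It assumes no lens distortion and a principal point at the image centre, so the results are rough estimates; a calibrated Kinect v2 will report somewhat different values.

```python
import math


def intrinsics_from_fov(width: int, height: int, hfov_deg: float, vfov_deg: float):
    """Ideal pinhole intrinsics (fx, fy, cx, cy) from image size and field of view.
    Assumes no lens distortion."""
    fx = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    fy = (height / 2) / math.tan(math.radians(vfov_deg) / 2)
    cx, cy = width / 2, height / 2  # principal point assumed at the image centre
    return fx, fy, cx, cy


fx, fy, cx, cy = intrinsics_from_fov(512, 424, 70.0, 60.0)
print(f"fx={fx:.1f} fy={fy:.1f} cx={cx} cy={cy}")  # fx=365.6 fy=367.2 cx=256.0 cy=212.0
```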
