Transparent caching traffic optimization method, load balancer and storage medium

This load balancer and traffic optimization technology, applied in the networking field, addresses problems such as long response delays and slow download rates; it reduces response delay, increases the download rate, and resolves resource-consumption problems.



Examples

Embodiment 1

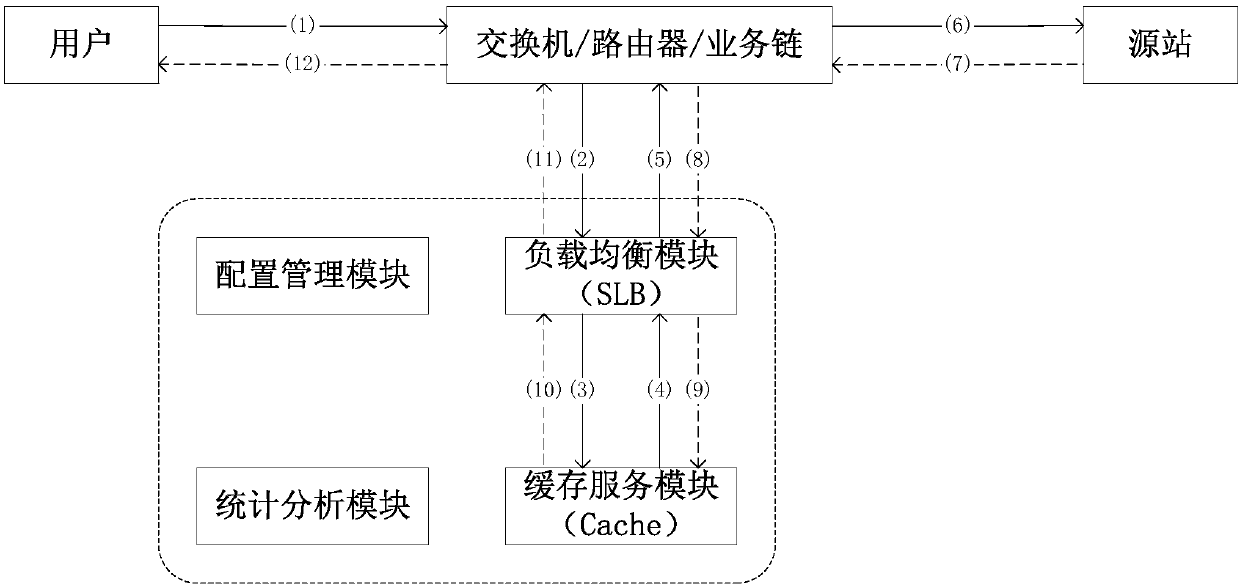

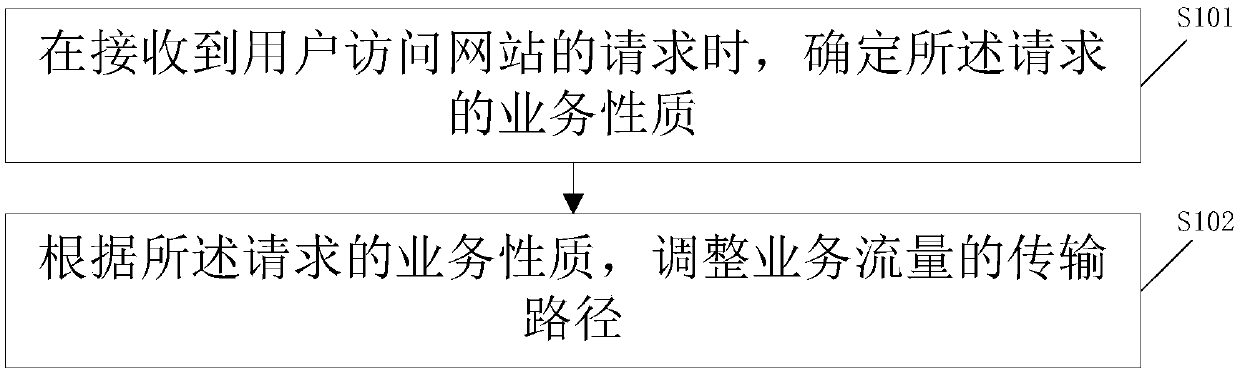

[0037] As shown in Figure 2, an embodiment of the present invention provides a transparent caching traffic optimization method for a load balancer in a transparent caching system in a cloud/virtualized environment. The method includes:

[0038] S101: When receiving a request from a user to access a website (that is, an HTTP request), determine the business nature of the access request;

[0039] S102: Adjust the transmission path of the business traffic according to the business nature of the access request, where the business traffic includes the access request and the file data returned by the website in response to the access request.

[0040] In this embodiment of the present invention, when the load balancer receives a user request, it adjusts the transmission path of the service flow according to the service nature of the request instead of forwarding every user request to the cache server as in the existing mode, thereby solving the problem of the traditional mode that the res...
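The excerpt does not say how the business nature of a request is determined or which transmission paths exist. A minimal sketch of S101 and S102, assuming exactly two paths (through the transparent cache server, or directly to the origin website) and assuming that only GET/HEAD downloads of certain file types count as cacheable, could look like the following. The names `TrafficPath`, `HttpRequest`, `business_nature`, `choose_path`, and `CACHEABLE_EXTENSIONS`, and the classification rules themselves, are hypothetical illustrations rather than the patented criteria.

```python
from dataclasses import dataclass, field
from enum import Enum
from urllib.parse import urlsplit


class TrafficPath(Enum):
    VIA_CACHE = "via_cache"  # steer through the transparent cache server
    DIRECT = "direct"        # bypass the cache and go straight to the origin website


# Hypothetical list of URL suffixes treated as cacheable file downloads.
CACHEABLE_EXTENSIONS = (".mp4", ".flv", ".apk", ".exe", ".zip", ".iso", ".jpg", ".png")


@dataclass
class HttpRequest:
    method: str
    url: str
    headers: dict = field(default_factory=dict)


def business_nature(req: HttpRequest) -> str:
    """S101 (sketch): classify the request as 'static_file' or 'dynamic'.

    The criteria below are assumptions for illustration only.
    """
    if req.method.upper() not in ("GET", "HEAD"):
        return "dynamic"  # uploads, form posts, etc. are treated as non-cacheable
    # Header lookup is an exact match on the key name in this sketch.
    if "no-cache" in req.headers.get("Cache-Control", "").lower():
        return "dynamic"
    path = urlsplit(req.url).path.lower()  # the query string is ignored
    if path.endswith(CACHEABLE_EXTENSIONS):
        return "static_file"
    return "dynamic"


def choose_path(req: HttpRequest) -> TrafficPath:
    """S102 (sketch): only static file downloads are steered via the cache."""
    if business_nature(req) == "static_file":
        return TrafficPath.VIA_CACHE
    return TrafficPath.DIRECT


if __name__ == "__main__":
    print(choose_path(HttpRequest("GET", "http://example.com/video/a.mp4")))
    print(choose_path(HttpRequest("POST", "http://example.com/login")))
```

In this sketch, anything that cannot be positively identified as a cacheable file download defaults to the direct path, so only traffic that plausibly benefits from caching consumes cache-server resources. A real deployment would likely use richer signals than a suffix list.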

Embodiment 2

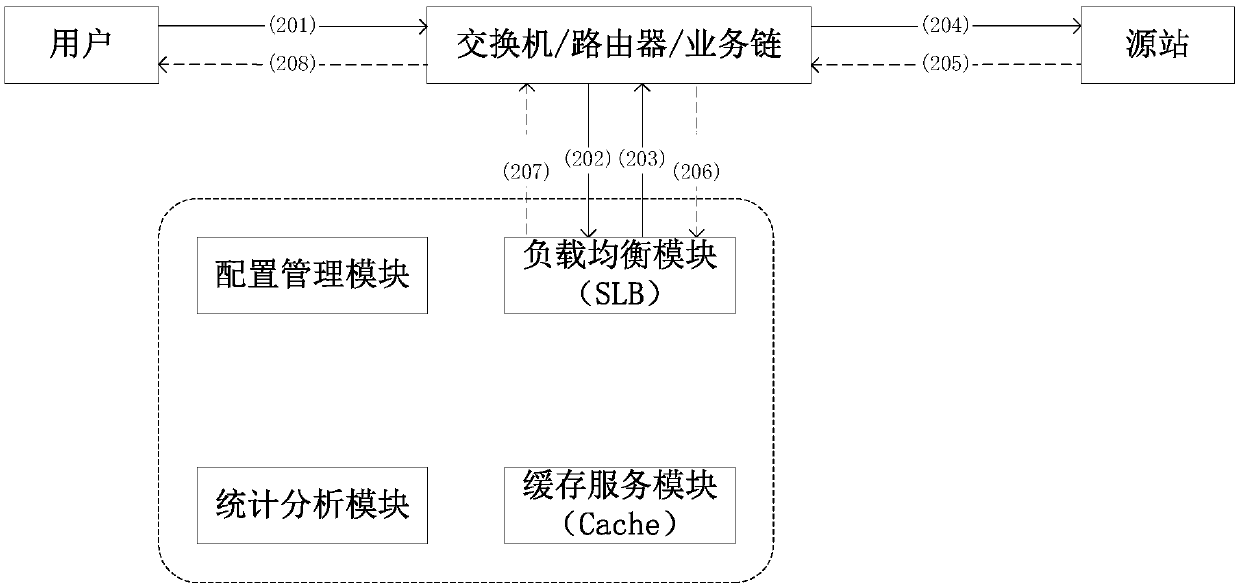

[0101] As shown in Figure 4, an embodiment of the present invention provides a load balancer in a transparent caching system in a cloud/virtualized environment. The load balancer includes a memory 10 and a processor 12; the memory 10 stores a traffic optimization computer program, and the processor 12 executes the computer program to implement the following steps:

[0102] When receiving a request from a user to visit a website, determine the business nature of the request;

[0103] According to the business nature of the request, the transmission path of the business traffic is adjusted; the business traffic includes the request and the file data of the website in response to the request.

[0104] In this embodiment of the present invention, when the load balancer receives a user request, it adjusts the transmission path of the service flow according to the service nature of the request instead of forwarding every user request to the cache server as in the existing mode,...
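Paragraph [0103] states that the business traffic covers both the request and the file data the website returns for it, so the path chosen for a request presumably has to apply to its response as well. One common way for a load balancer to keep both directions consistent is a per-connection flow table. The sketch below assumes that approach, which the excerpt does not describe; the class `TrafficSteeringLB`, the 4-tuple flow key, the path labels, and `example_rule` are all hypothetical.

```python
from typing import Callable, Dict, Optional, Tuple

# (client_ip, client_port, server_ip, server_port); a hypothetical flow key
FlowKey = Tuple[str, int, str, int]


class TrafficSteeringLB:
    """Hypothetical sketch of the program held in memory 10 and run by processor 12."""

    def __init__(self, classify: Callable[[str, str], str]) -> None:
        # classify(method, url) returns "via_cache" or "direct"; the rule is supplied by the caller
        self._classify = classify
        self._flows: Dict[FlowKey, str] = {}

    def on_request(self, key: FlowKey, method: str, url: str) -> str:
        """Classify the request, pick a path, and remember it for this flow."""
        path = self._classify(method, url)
        self._flows[key] = path
        return path

    def on_response(self, server_ip: str, server_port: int,
                    client_ip: str, client_port: int) -> Optional[str]:
        """Return the path chosen for the request so the website's file data
        comes back the same way. The tuple is reversed because the response
        travels server -> client. Returns None for flows not seen before."""
        return self._flows.get((client_ip, client_port, server_ip, server_port))

    def on_close(self, key: FlowKey) -> None:
        """Forget the flow once the connection ends."""
        self._flows.pop(key, None)


def example_rule(method: str, url: str) -> str:
    # Illustrative rule only: cache plain GETs of .mp4 files, send everything else direct.
    return "via_cache" if method == "GET" and url.endswith(".mp4") else "direct"


if __name__ == "__main__":
    lb = TrafficSteeringLB(example_rule)
    key = ("10.0.0.5", 51000, "203.0.113.7", 80)
    print(lb.on_request(key, "GET", "http://example.com/a.mp4"))
    print(lb.on_response("203.0.113.7", 80, "10.0.0.5", 51000))
```

Keeping the decision in a table, rather than re-classifying each packet, means response data cannot be classified differently from the request that triggered it. The sketch omits entry timeouts and concurrency control, which a production load balancer would need.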

Embodiment 3

[0120] An embodiment of the present invention provides a computer-readable storage medium storing a transparent caching traffic optimization computer program for a cloud/virtualized environment. When executed by at least one processor, the computer program implements the steps of any one of the methods in Embodiment 1.

[0121] The computer-readable storage medium in the embodiment of the present invention may be RAM memory, flash memory, ROM memory, EPROM memory, EEPROM memory, register, hard disk, mobile hard disk, CD-ROM or any other form of storage medium known in the art. A storage medium may be coupled to the processor, so that the processor can read information from the storage medium and write information to the storage medium; or the storage medium may be a component of the processor. The processor and the storage medium may be located in an application specific integrated circuit.

[0122] It should be noted here that the second embodiment and t...
