Method for reconstructing a three-dimensional facial expression model based on a monocular video

A 3D face and facial expression reconstruction technology, applied in 3D modeling, character and pattern recognition, and processing-step details. It addresses problems such as the difficulty of ensuring the accuracy of the reconstructed coarse-scale face shape and the inability to obtain good recovery results.

Embodiment Construction

[0047] The present invention will be described in detail below in conjunction with the accompanying drawings and embodiments.

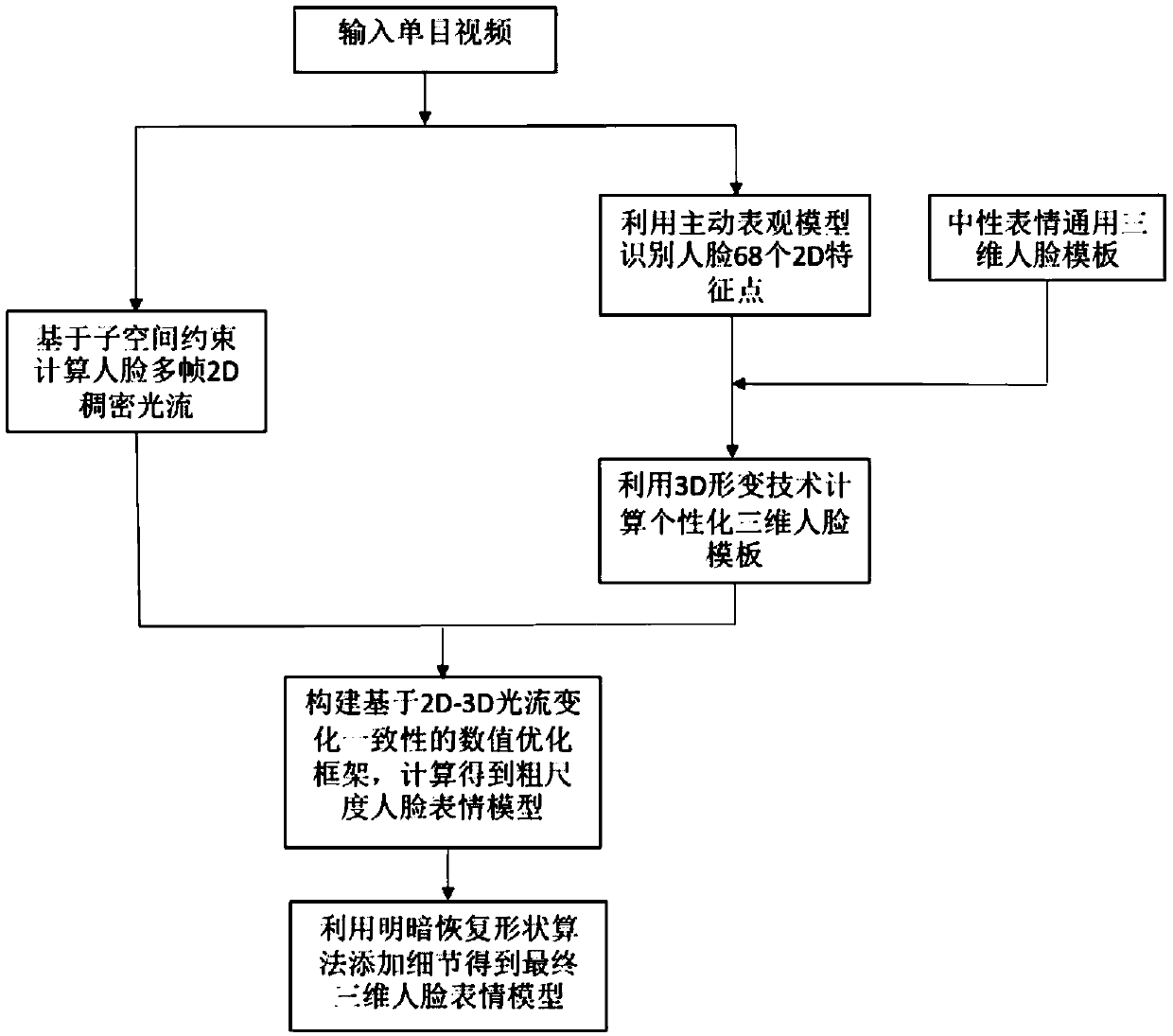

[0048] As shown in Figure 1, the specific steps of the present invention are as follows:

[0049] (1) Multi-frame 2D dense optical flow calculation based on subspace constraints

[0050] A two-dimensional optical flow field can be regarded as the set of motion vectors of all image pixels on the two-dimensional image plane. For any pixel j, its 2D motion vector relative to its position $(x_{1j}, y_{1j})$ in the reference image is defined as:

[0051] $$\mathbf{w}_j = [u_{1j}\ u_{2j}\ \cdots\ u_{Fj} \mid v_{1j}\ v_{2j}\ \cdots\ v_{Fj}]^{T}$$

[0052] Suppose the image has P pixels and the input video has F frames. Here $u_{ij} = x_{ij} - x_{1j}$ and $v_{ij} = y_{ij} - y_{1j}$, where $(x_{ij}, y_{ij})$ are the coordinates of point j in the i-th frame and $(x_{1j}, y_{1j})$ are the coordinates of point j in the first frame, i.e. the reference image; $1 \le i \le F$, $1 \le j \le P$.
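To make the definition concrete, the minimal sketch below stacks the vectors $w_j$ for all P pixels as the columns of a 2F × P matrix. It assumes the per-frame coordinates $(x_{ij}, y_{ij})$ are already available as two NumPy arrays of shape (F, P) with the reference frame in row 0; the function name `trajectory_matrix` is hypothetical, and the flow estimation that would produce those coordinates is not shown.

```python
import numpy as np


def trajectory_matrix(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Stack per-pixel 2D motion vectors w_j into a 2F x P matrix.

    x, y: arrays of shape (F, P) holding (x_ij, y_ij), the coordinates of
    pixel j in frame i; row 0 is the reference (first) frame.
    Column j of the result is w_j = [u_1j ... u_Fj | v_1j ... v_Fj]^T.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape or x.ndim != 2:
        raise ValueError("x and y must both have shape (F, P)")
    u = x - x[0:1, :]  # u_ij = x_ij - x_1j, broadcast over all frames
    v = y - y[0:1, :]  # v_ij = y_ij - y_1j
    return np.vstack([u, v])  # u block on top, v block below: shape (2F, P)


# Tiny example (hypothetical data): F = 3 frames, P = 2 pixels.
x = np.array([[0, 10], [1, 12], [3, 13]])
y = np.array([[5, 5], [5, 4], [6, 2]])
W = trajectory_matrix(x, y)
print(W[:, 1])  # w_j for the second pixel
```

For the second pixel this gives $w_2 = [0, 2, 3 \mid 0, -1, -3]^T$. Because the first frame is the reference, $u_{1j}$ and $v_{1j}$ are always zero, so rows 0 and F of the matrix vanish.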

[0053] Based on the tr...
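The step heading states that the multi-frame flow is computed under subspace constraints, but paragraph [0053] is truncated in this excerpt, so the exact formulation is not available here. As an illustrative assumption only, one common way to express such a constraint is that the 2F × P matrix whose columns are the vectors $w_j$ is approximately of rank R, so every pixel's trajectory is a linear combination of R basis trajectories. The sketch below computes the least-squares best rank-R factorization with a truncated SVD; the function name `low_rank_factorize` and the rank parameter are hypothetical, and this is not presented as the patent's method.

```python
import numpy as np


def low_rank_factorize(W: np.ndarray, rank: int) -> tuple[np.ndarray, np.ndarray]:
    """Best factorization W ~= basis @ coeffs with inner dimension `rank`.

    Illustrative assumption: the subspace constraint is modelled as W being
    (approximately) of rank R, so each column w_j is a combination of R
    basis trajectories. By the Eckart-Young theorem, the truncated SVD gives
    the rank-R matrix closest to W in the Frobenius norm.
    """
    W = np.asarray(W, dtype=float)
    if not 1 <= rank <= min(W.shape):
        raise ValueError("rank must lie between 1 and min(2F, P)")
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    basis = U[:, :rank]                     # (2F, R) orthonormal basis trajectories
    coeffs = s[:rank, None] * Vt[:rank, :]  # (R, P) per-pixel coefficients
    return basis, coeffs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic 2F x P matrix (F = 3, P = 50) of exact rank 2, plus small noise.
    W_clean = rng.standard_normal((6, 2)) @ rng.standard_normal((2, 50))
    W_noisy = W_clean + 1e-3 * rng.standard_normal(W_clean.shape)
    basis, coeffs = low_rank_factorize(W_noisy, rank=2)
    W_hat = basis @ coeffs
    print("max deviation from clean matrix:", np.abs(W_hat - W_clean).max())
```

This post-hoc projection only illustrates the low-rank idea on an already-computed matrix; a subspace-constrained flow method may instead enforce the constraint during estimation itself.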
