95 results about "Monocular video" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

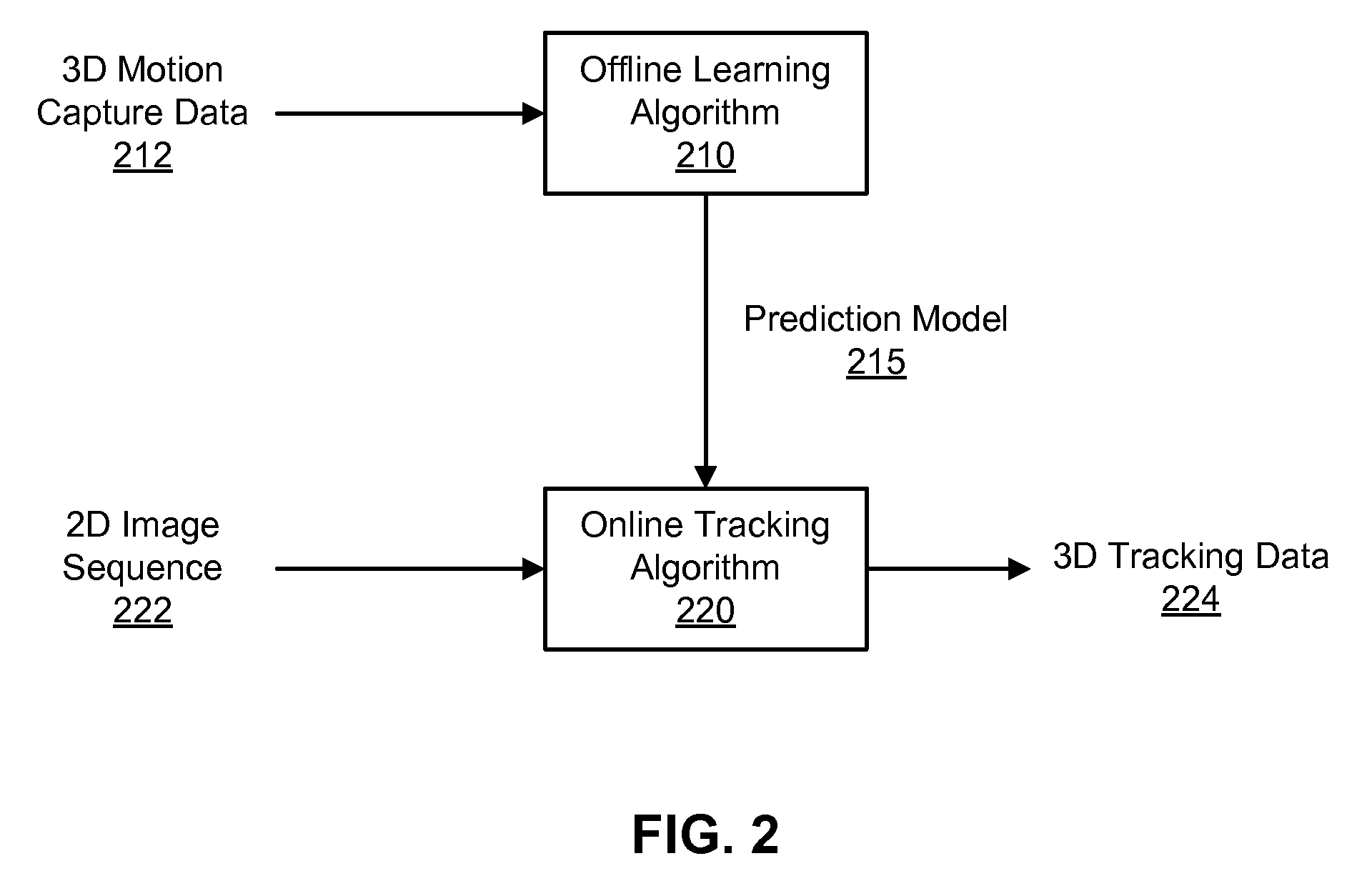

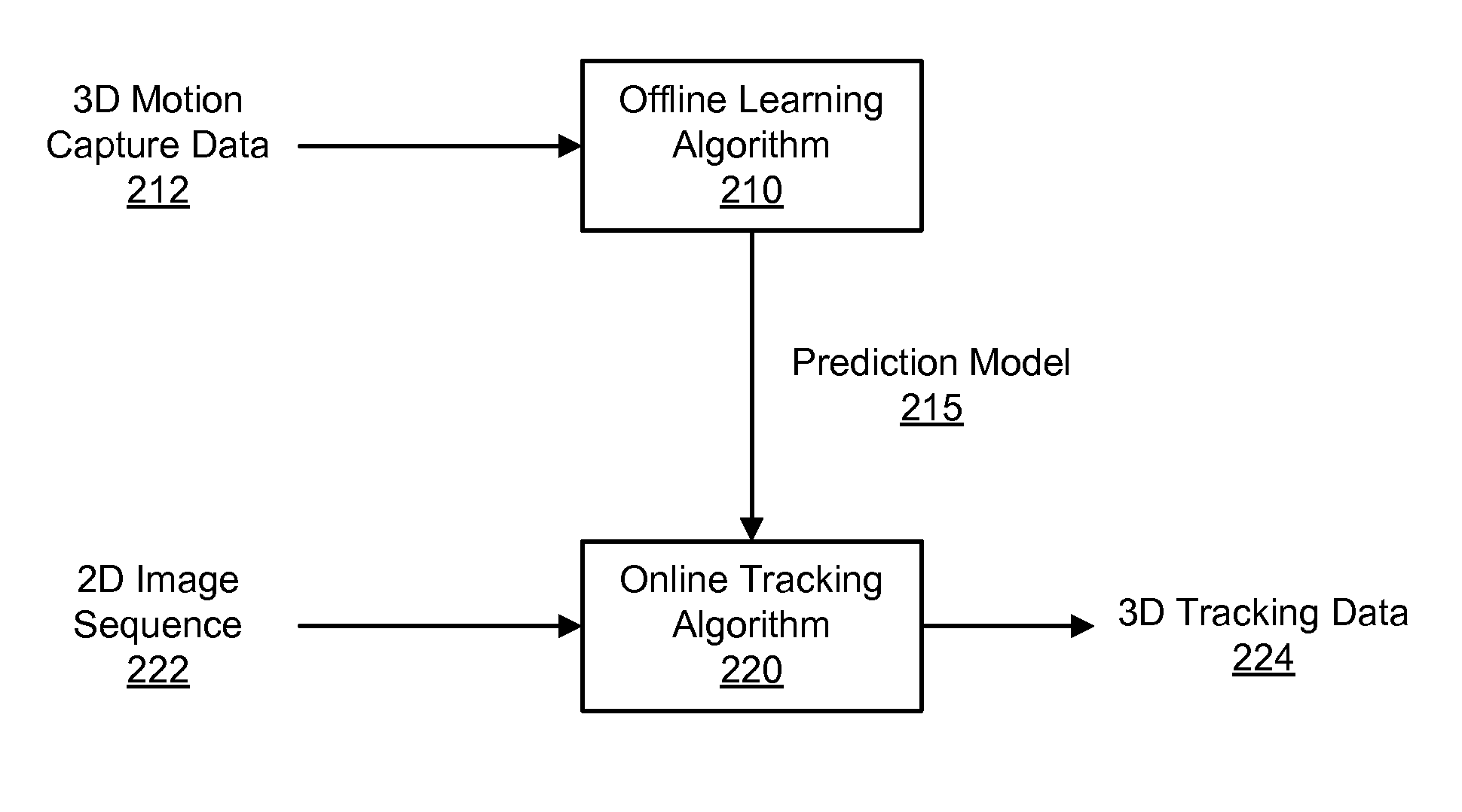

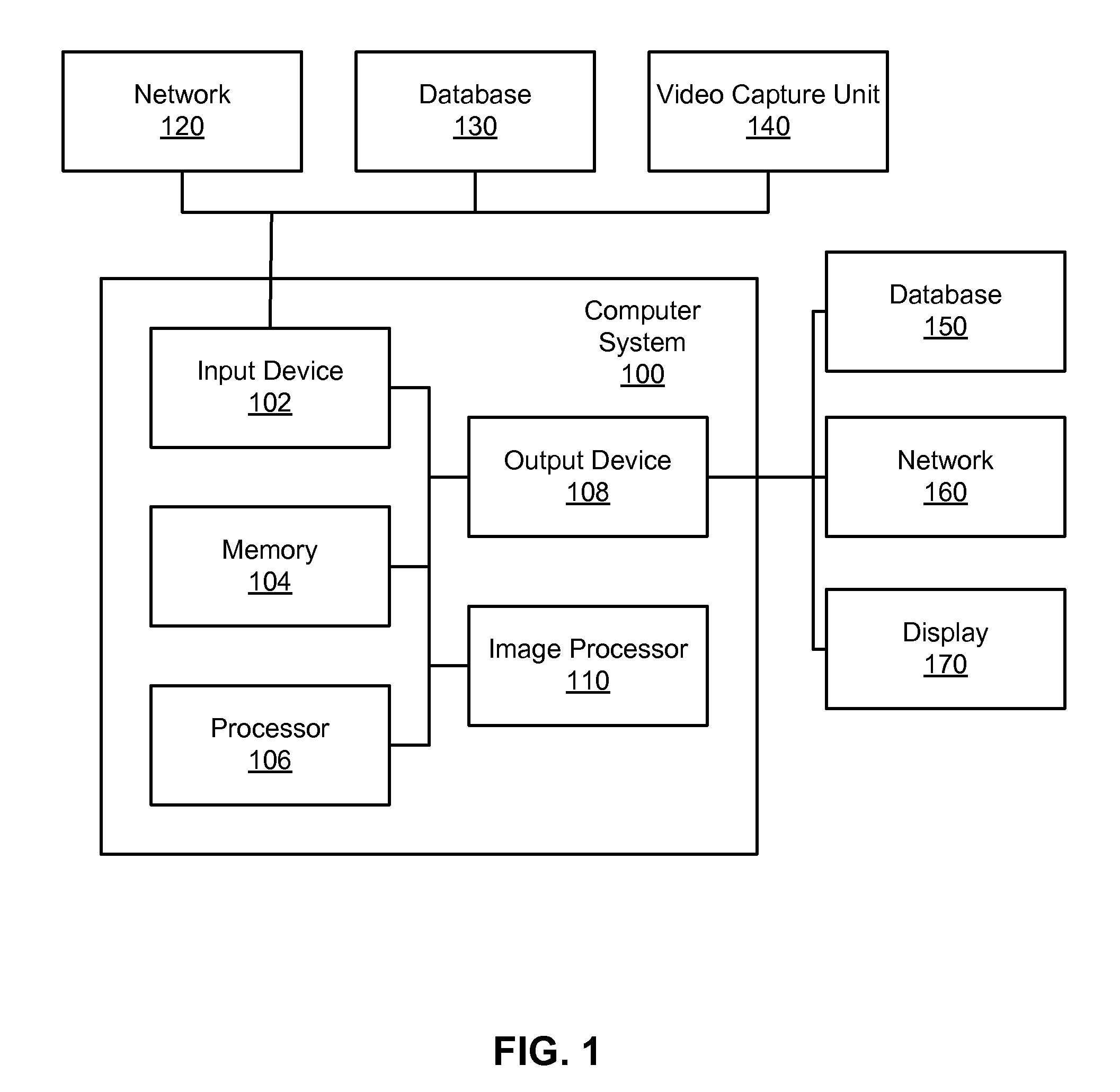

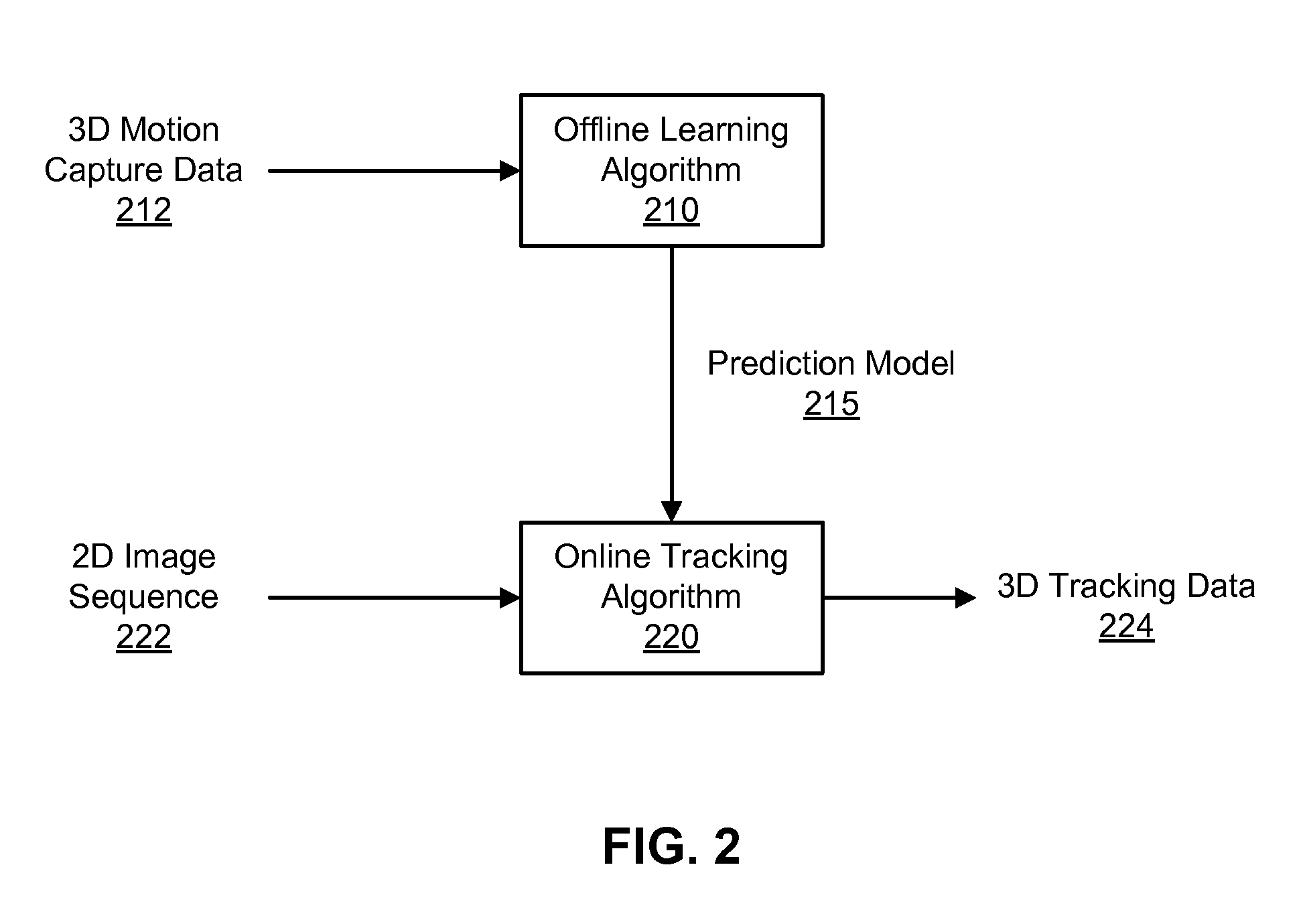

Monocular tracking of 3D human motion with a coordinated mixture of factor analyzers

Status: Active · Publication: US7450736B2 · Tags: Efficiently and accurately tracking; Small size; Image analysis; Character and pattern recognition; Offline learning; Multiple hypothesis tracking

Owner:HONDA MOTOR CO LTD

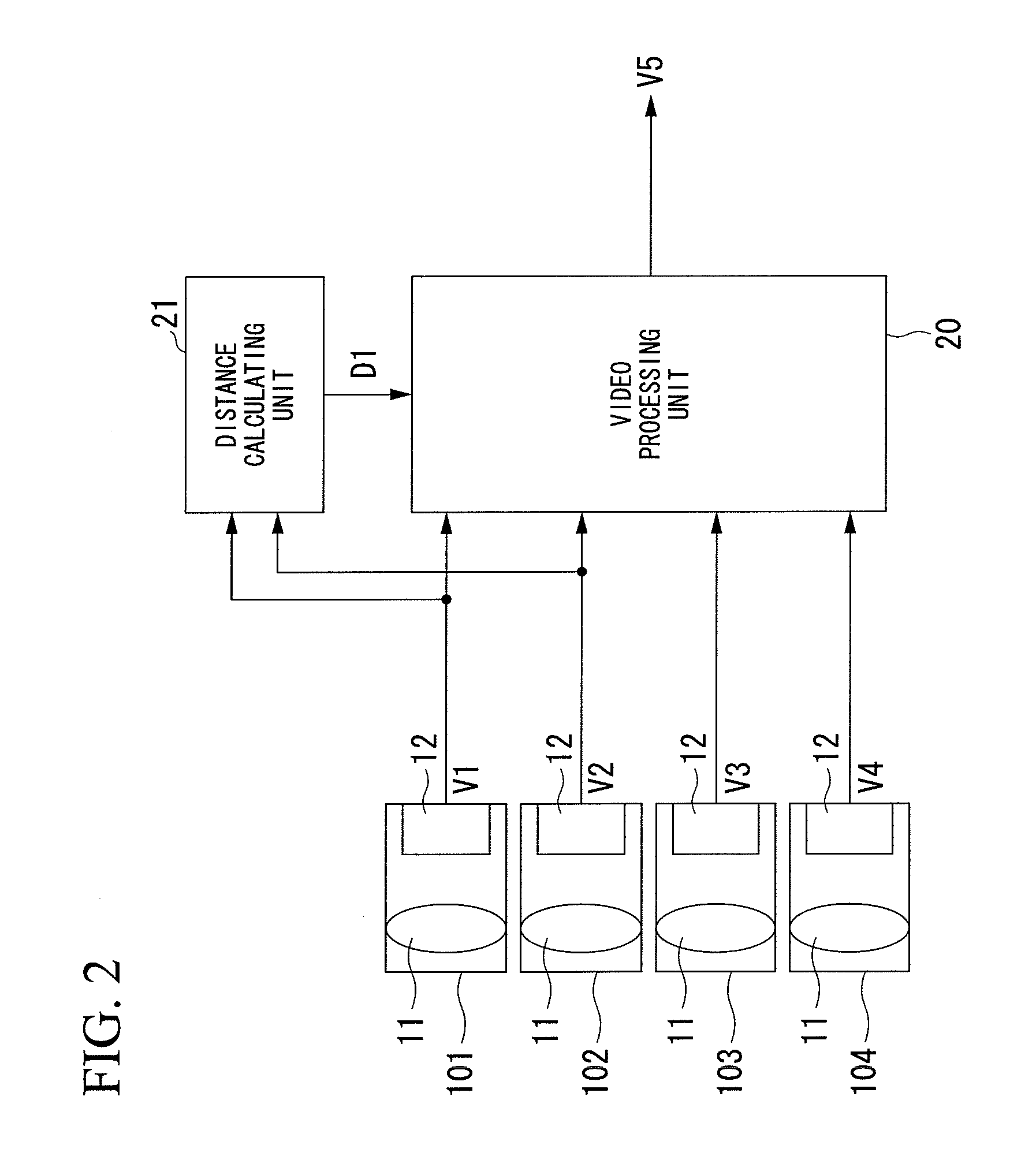

Multocular image pickup apparatus and multocular image pickup method

Status: Active · Publication: US20120262607A1 · Tags: Increase processing volume; Good looking; Television system details; Image analysis; Computer vision; Monocular video

A multocular image pickup apparatus includes: a distance calculation unit that calculates information regarding the distance to a captured object from the output video of a first image pickup unit, which serves as the reference unit among a plurality of image pickup units, and from the output video of an image pickup unit different from the first image pickup unit; a multocular video synthesizing unit that generates synthesized video from the output video of the plurality of image pickup units, based on the distance information, for regions where the distance information could be calculated; and a monocular video synthesizing unit that generates synthesized video from the output video of the first image pickup unit for regions where the distance information could not be calculated. The distance calculation unit calculates first distance information, that is, the distance to the captured object, from the output video of the first image pickup unit and the output video of a second image pickup unit different from the first. If there is a region where the first distance information could not be calculated, the distance calculation unit recalculates, for that region, the distance to the captured object from the output video of the first image pickup unit and the output video of an image pickup unit, among the plurality, that was not used in the first calculation.

Owner:SHARP KK
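The per-region fallback described in the abstract (try the reference camera with a second camera, then with a camera not yet used, and otherwise use monocular synthesis) can be sketched as follows. This is a minimal illustration assuming rectified camera pairs, where distance follows the standard stereo relation Z = f·B/d; the function names, the disparity input format and the baselines are hypothetical, not taken from the patent.

```python
from typing import Optional, Sequence


def region_distance(
    disparities: Sequence[Optional[float]],
    baselines_m: Sequence[float],
    focal_px: float,
) -> Optional[float]:
    """Distance (m) for one image region, or None if no camera pair works.

    disparities[k] is the disparity (pixels) between the reference camera and
    the k-th other camera, in priority order; None means matching failed
    (hypothetical input format).
    """
    for disparity, baseline in zip(disparities, baselines_m):
        if disparity is not None and disparity > 0:
            # Rectified stereo pair: Z = f * B / d
            return focal_px * baseline / disparity
    # No pair produced a distance: the region falls back to monocular synthesis.
    return None


def choose_synthesis(distance: Optional[float]) -> str:
    """Pick the synthesis path for a region, mirroring the two units in the abstract."""
    return "multocular" if distance is not None else "monocular"
```

For example, if the first pair fails and the second pair (0.10 m baseline, 1000 px focal length) measures 20 px of disparity, `region_distance([None, 20.0], [0.05, 0.10], 1000.0)` gives 5 m and the region is synthesized from multiple views.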

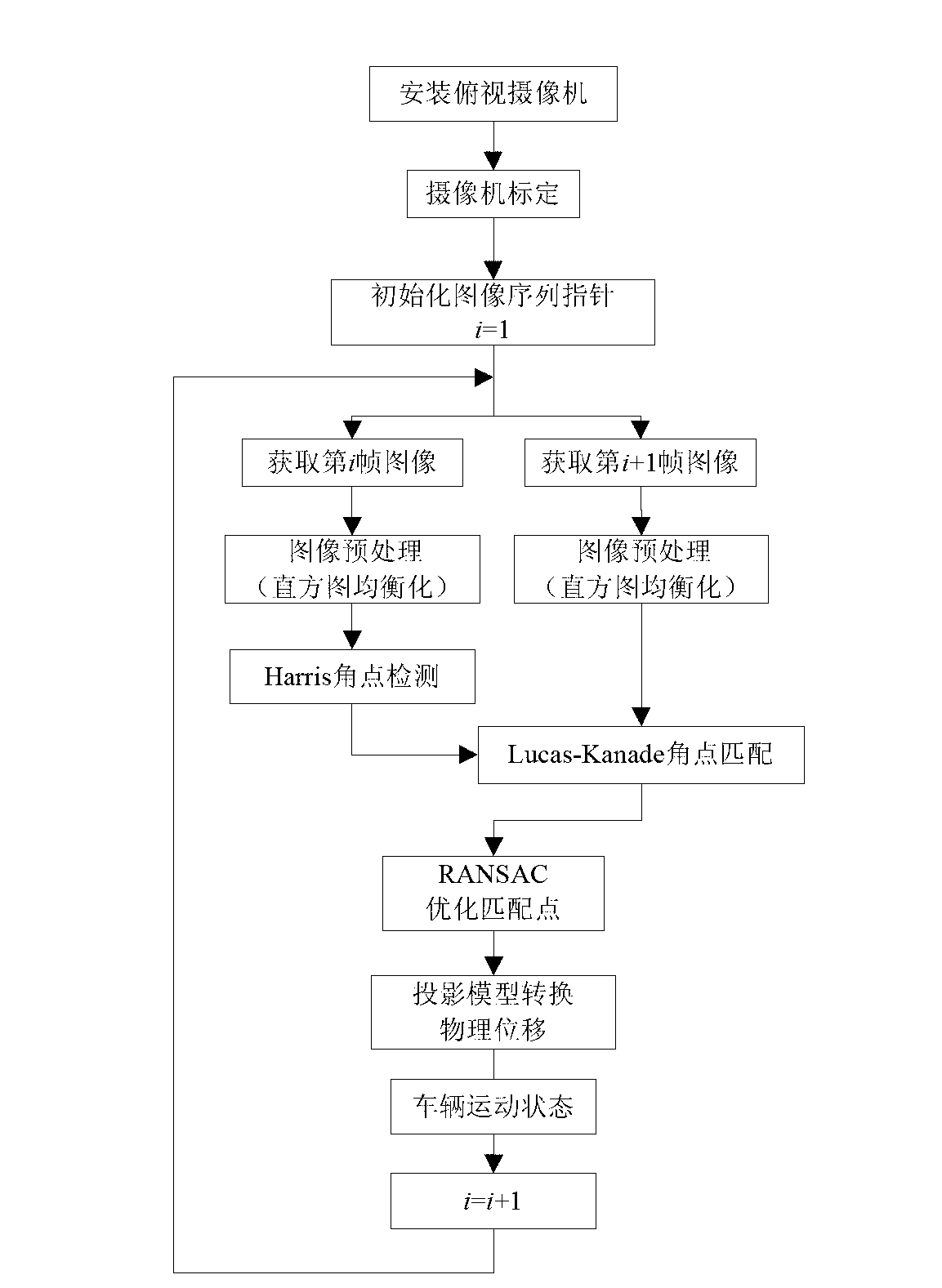

Light stream based vehicle motion state estimating method

Status: Inactive · Publication: CN102999759A · Tags: High measurement accuracy; Improve real-time performance; Image analysis; Character and pattern recognition; Road surface; Histogram equalization

The invention discloses an optical-flow-based method for estimating the motion state of a vehicle, applicable to vehicles travelling at low speed on flat asphalt pavement in road traffic. The method includes: mounting a high-precision, downward-looking monocular video camera at the center of the vehicle's rear axle and obtaining the camera parameters with a calibration algorithm; preprocessing the acquired image sequence with histogram equalization to highlight the corner features of the asphalt pavement and to reduce the adverse effects of pavement conditions and lighting changes; detecting pavement corner features in real time with the efficient Harris corner detector; matching and tracking corners between consecutive frames with the Lucas-Kanade optical flow algorithm, then refining the matched corners with RANSAC (random sample consensus) to obtain more accurate optical flow; and finally reconstructing the vehicle's real-time motion parameters, such as longitudinal velocity, lateral velocity and side-slip angle, in the vehicle body coordinate system, thereby achieving high-precision estimation of the vehicle's ground motion state.

Owner:SOUTHEAST UNIV
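The final step, recovering longitudinal velocity, lateral velocity and side-slip angle from matched pavement corners, can be illustrated with a closed-form least-squares rigid fit between two frames. This sketch assumes the corners have already been matched (for example by Lucas-Kanade tracking followed by RANSAC) and mapped to metric ground-plane coordinates in the vehicle frame using the camera calibration; the 2D least-squares fit is a swapped-in textbook technique, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def fit_rigid_2d(prev_pts: List[Point], curr_pts: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rotation theta and translation (tx, ty) with curr ≈ R(theta) prev + t."""
    n = len(prev_pts)
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    ccx = sum(c[0] for c in curr_pts) / n
    ccy = sum(c[1] for c in curr_pts) / n
    s_dot = s_cross = 0.0
    for (px, py), (cx, cy) in zip(prev_pts, curr_pts):
        ax, ay, bx, by = px - pcx, py - pcy, cx - ccx, cy - ccy
        s_dot += ax * bx + ay * by    # sum of dot products of centred points
        s_cross += ax * by - ay * bx  # sum of 2D cross products
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    return theta, ccx - (c * pcx - s * pcy), ccy - (s * pcx + c * pcy)


def vehicle_motion(prev_pts: List[Point], curr_pts: List[Point],
                   dt: float) -> Tuple[float, float, float, float]:
    """(longitudinal v, lateral v, yaw rate, side-slip angle) in m/s, rad/s, rad.

    Static ground points move opposite to the vehicle, so the vehicle's own
    motion is the inverse of the fitted point transform: R_v = R^T, t_v = -R^T t.
    x is forward and y is lateral in the vehicle body frame.
    """
    theta, tx, ty = fit_rigid_2d(prev_pts, curr_pts)
    c, s = math.cos(theta), math.sin(theta)
    dx = -(c * tx + s * ty)
    dy = -(-s * tx + c * ty)
    vx, vy = dx / dt, dy / dt
    return vx, vy, -theta / dt, math.atan2(vy, vx)
```

With noise-free matches the fit is exact; in practice the RANSAC inliers would be passed in, and the residuals of the fit give a quick sanity check on the flow.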

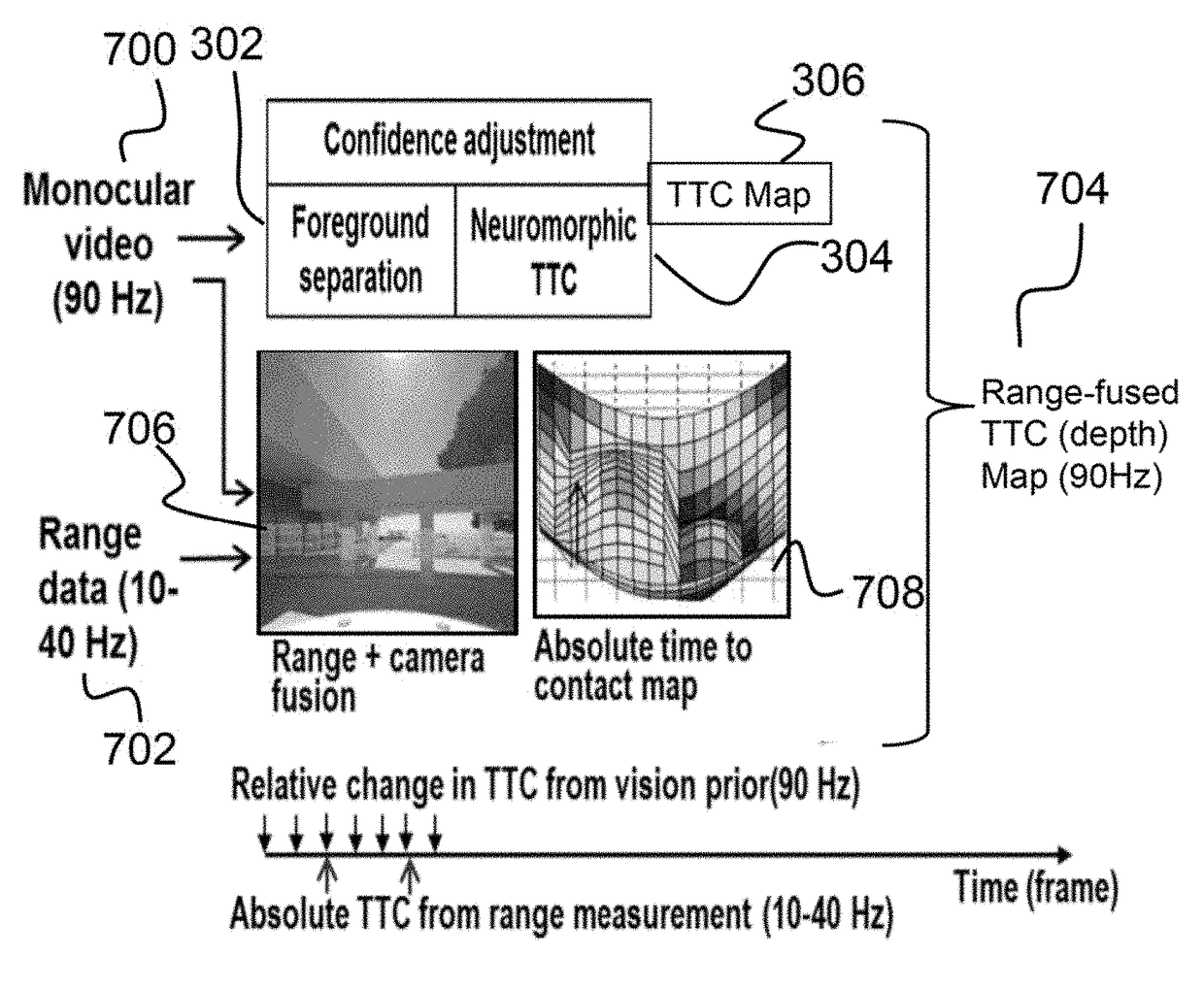

System and method for achieving fast and reliable time-to-contact estimation using vision and range sensor data for autonomous navigation

Described is a robotic system for reliably detecting obstacles and their ranges by combining two-dimensional and three-dimensional sensing. In operation, the system receives an image from monocular video and range depth data from a range sensor, both covering a scene near a mobile platform. The image is segmented into multiple object regions of interest, and time-to-contact (TTC) values are calculated by estimating the motion field and operating on image intensities. A two-dimensional (2D) TTC map is then generated by averaging the TTC values over the object regions of interest. A three-dimensional (3D) TTC map is then generated by fusing the range depth data with the image. Finally, a range-fused TTC map is generated by averaging the 2D TTC map and the 3D TTC map.

Owner:HRL LAB
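The TTC pipeline can be illustrated with two discrete estimates, one from the growth of a region's image size and one from successive range readings, followed by the region averaging and map averaging the abstract describes. This sketch assumes a constant closing speed between frames, so that image size s is proportional to 1/Z and TTC = dt·s_prev/(s_curr − s_prev); the patent's motion-field and intensity-based TTC computation is not reproduced, and all names are hypothetical.

```python
import math
from typing import Dict, List


def ttc_from_scale(size_prev: float, size_curr: float, dt: float) -> float:
    """TTC (s) at the current frame from image-size growth (size ∝ 1/Z)."""
    growth = size_curr - size_prev
    return math.inf if growth <= 0 else dt * size_prev / growth


def ttc_from_range(range_prev: float, range_curr: float, dt: float) -> float:
    """TTC (s) from two range readings: current range over closing speed."""
    closing_speed = (range_prev - range_curr) / dt
    return math.inf if closing_speed <= 0 else range_curr / closing_speed


def region_ttc_map(pixel_ttc: Dict[str, List[float]]) -> Dict[str, float]:
    """2D TTC map: average of the finite per-pixel TTC values in each region."""
    out = {}
    for region, values in pixel_ttc.items():
        finite = [v for v in values if math.isfinite(v)]
        out[region] = sum(finite) / len(finite) if finite else math.inf
    return out


def fuse_ttc_maps(ttc_2d: Dict[str, float], ttc_3d: Dict[str, float]) -> Dict[str, float]:
    """Range-fused map: per-region average of the 2D and 3D TTC maps."""
    return {r: (ttc_2d[r] + ttc_3d[r]) / 2 for r in ttc_2d if r in ttc_3d}
```

For an obstacle closing from 10 m to 9 m in 0.1 s, both estimates give 0.9 s, which is the behaviour the averaging step relies on when the two sensors agree.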

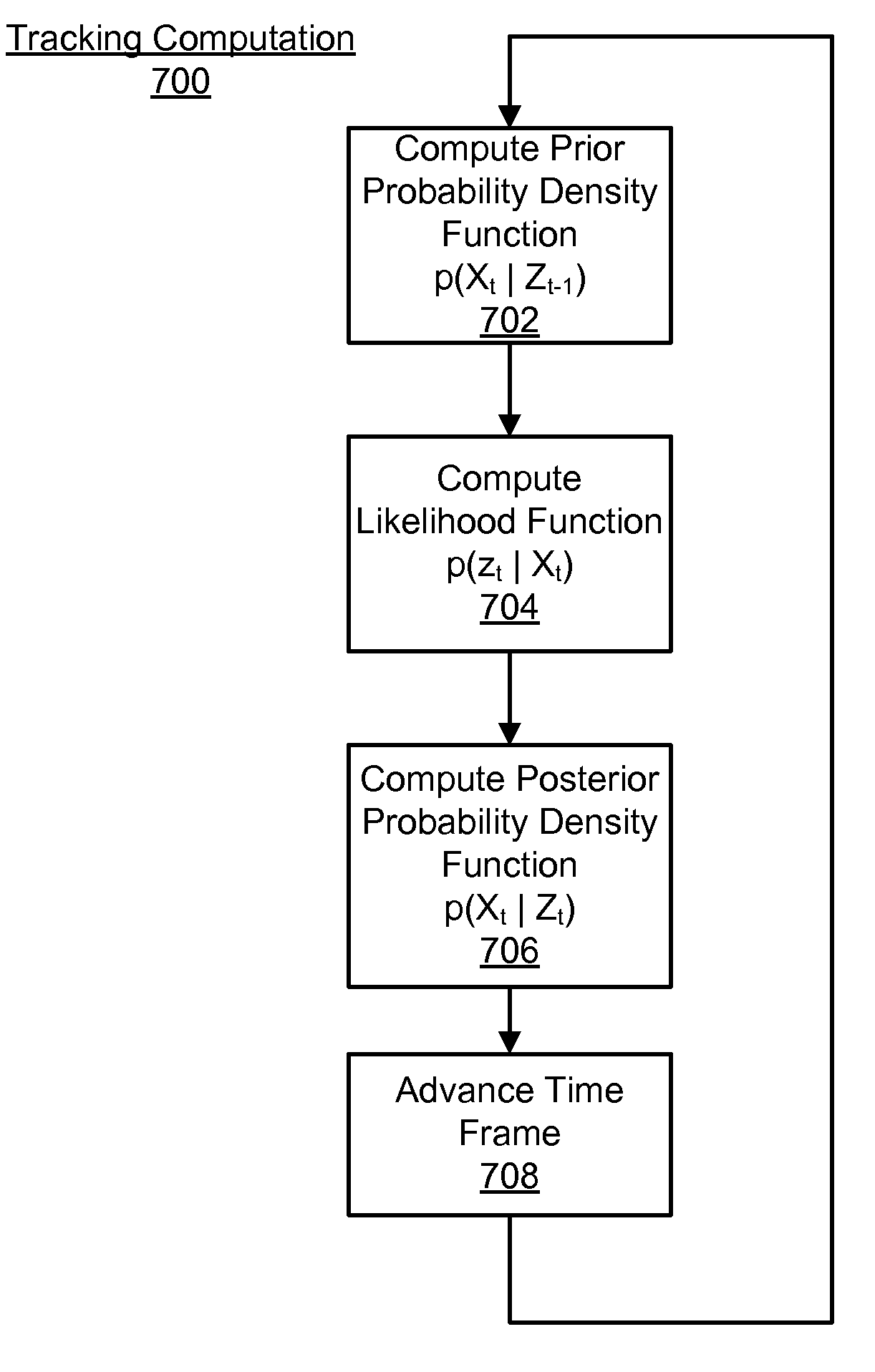

Monocular tracking of 3D human motion with a coordinated mixture of factor analyzers

Status: Active · Publication: US20070104351A1 · Tags: Efficiently and accurately tracking; Small size; Image analysis; Character and pattern recognition; Offline learning; Multiple hypothesis tracking

Disclosed is a method and system for efficiently and accurately tracking three-dimensional (3D) human motion from a two-dimensional (2D) video sequence, even when self-occlusion, motion blur and large limb movements occur. In an offline learning stage, 3D motion capture data is acquired and a prediction model is generated based on the learned motions. A mixture of factor analyzers acts as a set of local dimensionality reducers. Clusters of factor analyzers formed within a globally coordinated low-dimensional space make it possible to perform multiple hypothesis tracking based on the distribution modes. In the online tracking stage, 3D tracking is performed without requiring any special equipment, clothing, or markers. Instead, motion is tracked in the dimensionality-reduced state space based on a monocular video sequence.

Owner:HONDA MOTOR CO LTD

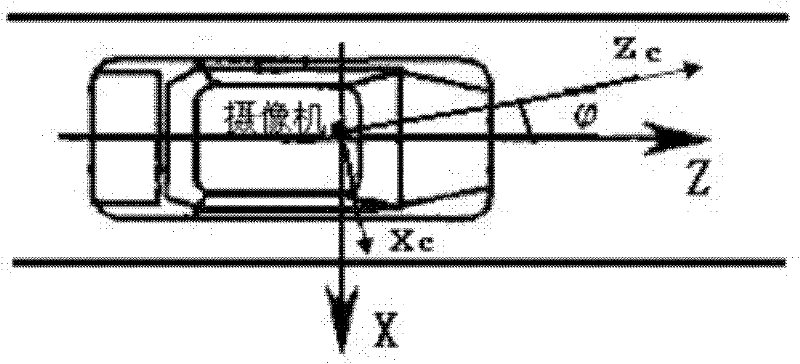

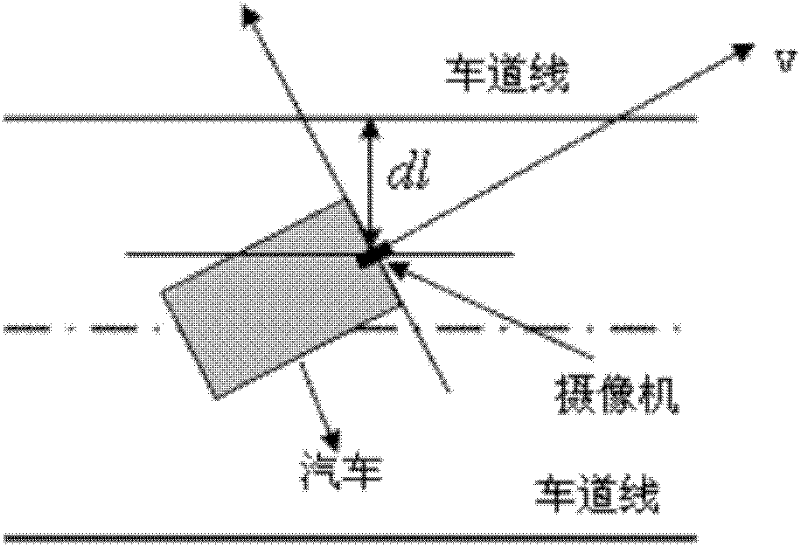

A Lane Departure Distance Measurement and Early Warning Method Based on Monocular Vision

Status: Active · Publication: CN102288121A · Tags: Improve accuracy; Reduce measurement impact; Using optical means; Video image; Visual perception

The invention discloses a method for measuring lane departure distance and issuing early warnings based on monocular vision, in the technical field of computer image processing. The method comprises the following steps: first, a video image is captured by a monocular video camera mounted at the front of an automobile, the lane lines are detected using image processing, and their geometric information is extracted; second, the perpendicular distances between the automobile and the lane lines on the left and right sides are obtained from the three-dimensional geometric transformation given by the pinhole imaging model; and third, a departure-warning decision rule is established from the perpendicular distances measured in real time, providing useful information for intelligent driver-assistance technology. When lane lines are detected with the Hough transform, an added constraint excludes some spurious lane-line candidates, which increases both processing speed and lane-detection accuracy. Lane departure warning is achieved using image information alone; the vehicle's departure angle has little influence on the measured departure distance; the three-dimensional geometric transformation makes the computation fast; and the method meets the requirements of intelligent driver-assistance technology.

Owner:厚普清洁能源(集团)股份有限公司
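The pinhole step can be illustrated for the simplest geometry: a camera at height h above a flat road with zero pitch and roll, where an image row v below the horizon row v0 maps to forward distance Z = f·h/(v − v0) and column u to lateral offset X = (u − u0)·Z/f. This sketch assumes that geometry, a vehicle parallel to the lane, a lane-line pixel already detected (for example by the Hough transform), and a hypothetical half-width and warning threshold; it is not the patent's exact formulation.

```python
from typing import Tuple


def ground_point(u: float, v: float, f: float, u0: float, v0: float,
                 cam_height: float) -> Tuple[float, float]:
    """Map pixel (u, v) to flat-road coordinates (X lateral, Z forward) in metres.

    Assumes a pinhole camera with focal length f (pixels), principal point
    (u0, v0) on the horizon, zero pitch/roll, cam_height metres above the road.
    """
    dv = v - v0
    if dv <= 0:
        raise ValueError("pixel is on or above the horizon")
    z = f * cam_height / dv
    return (u - u0) * z / f, z


def lateral_gap(x_line: float, half_width: float) -> float:
    """Perpendicular gap between the vehicle side and a lane line at offset x_line."""
    return abs(x_line) - half_width


def departure_warning(gap_left: float, gap_right: float,
                      threshold_m: float = 0.3) -> str:
    """Hypothetical decision rule: warn when either gap is below the threshold."""
    if gap_left < threshold_m:
        return "left"
    if gap_right < threshold_m:
        return "right"
    return "none"
```

With f = 800 px, h = 1.2 m and the principal point at (640, 360), a left lane-line pixel at (520, 456) lies 10 m ahead and 1.5 m to the left; for a 0.9 m half-width, the gap is 0.6 m and no warning is raised.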

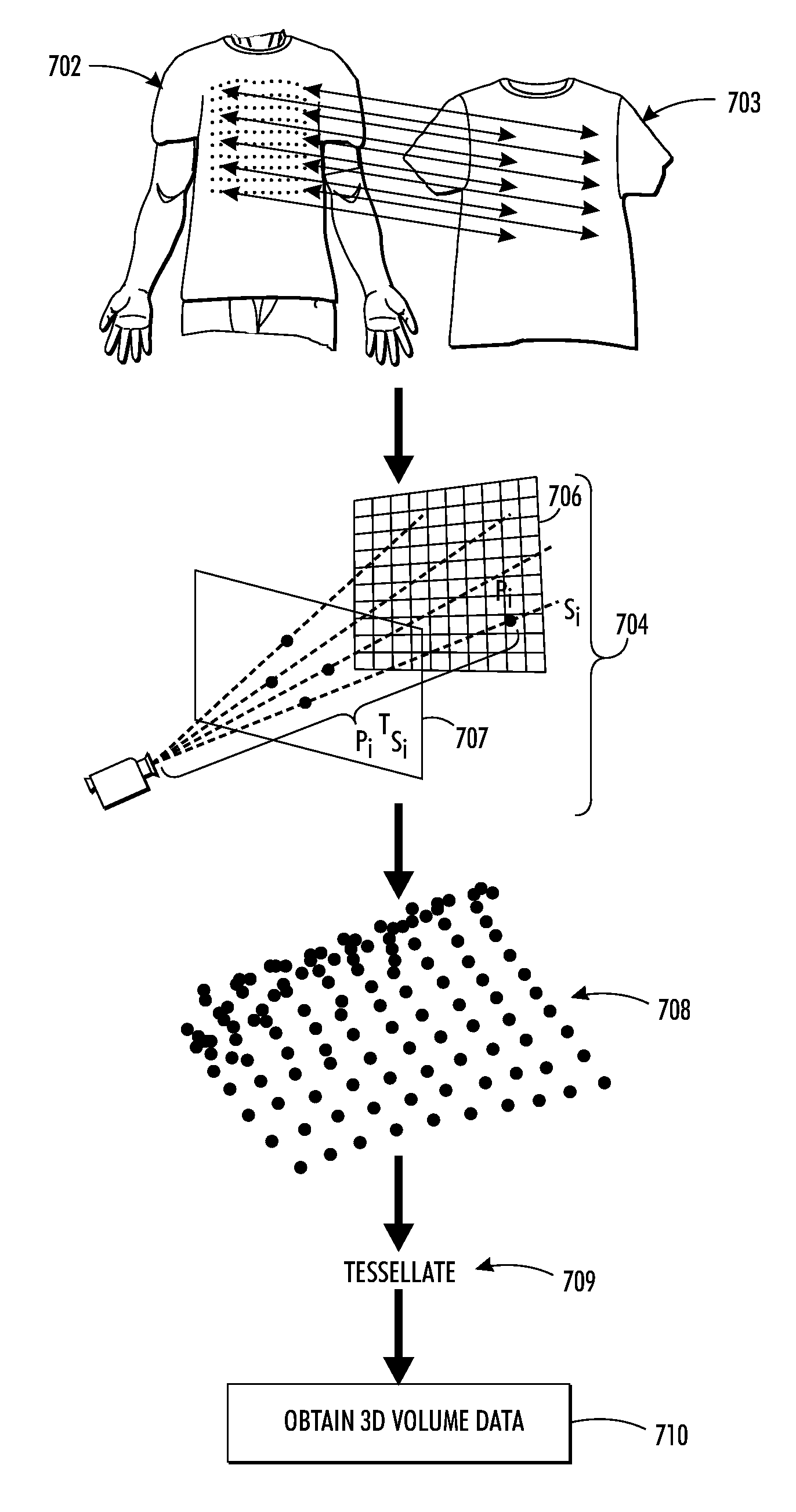

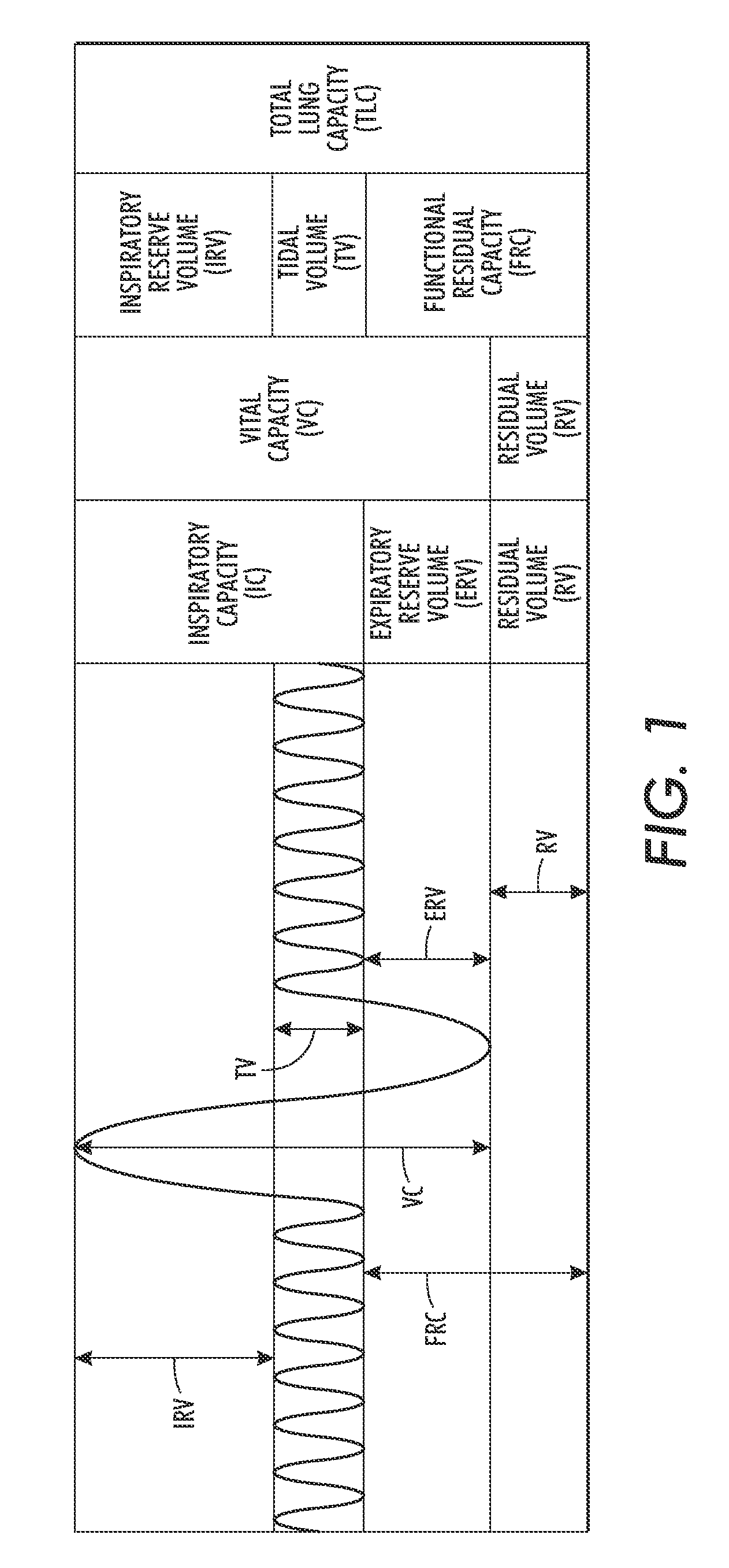

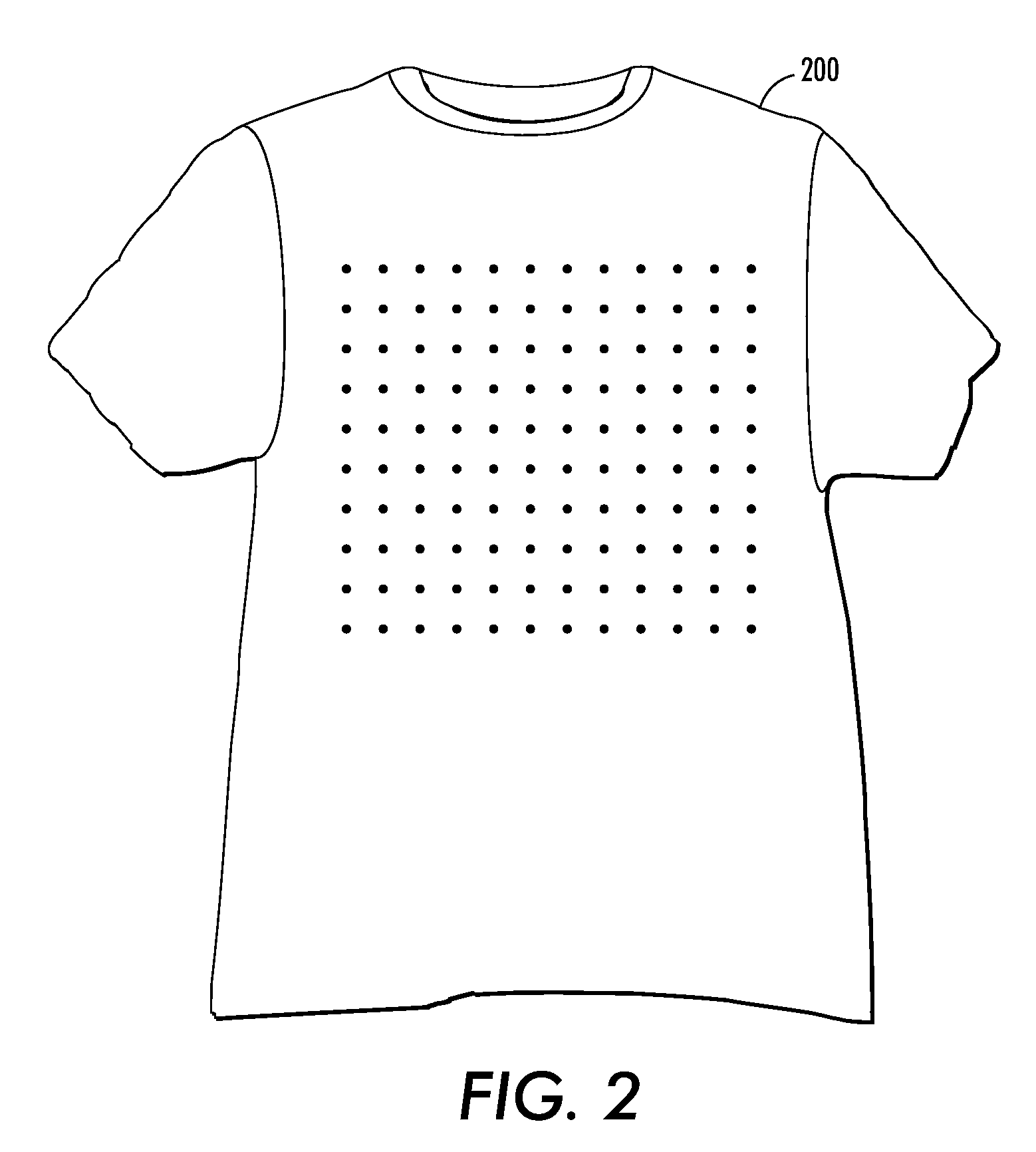

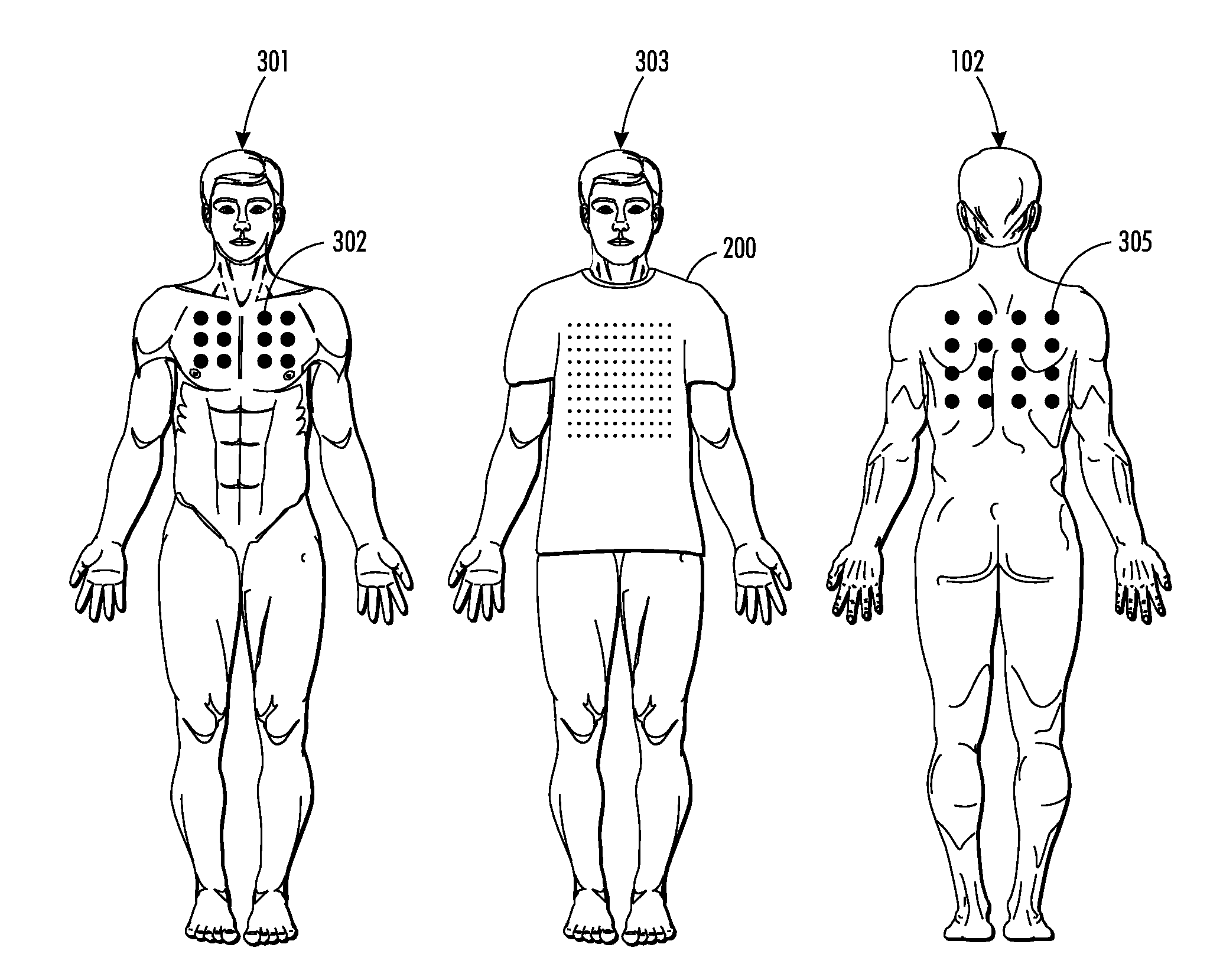

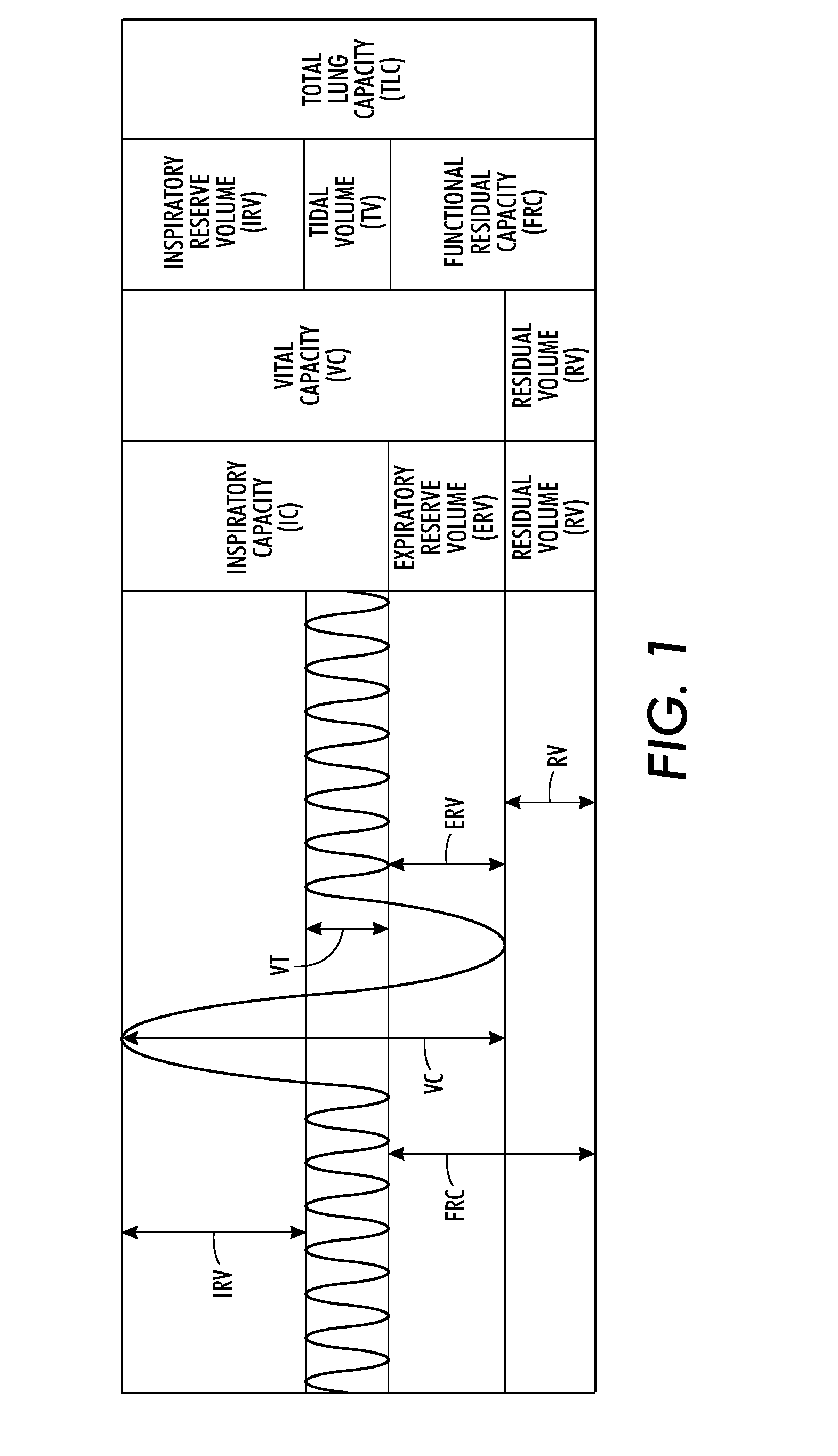

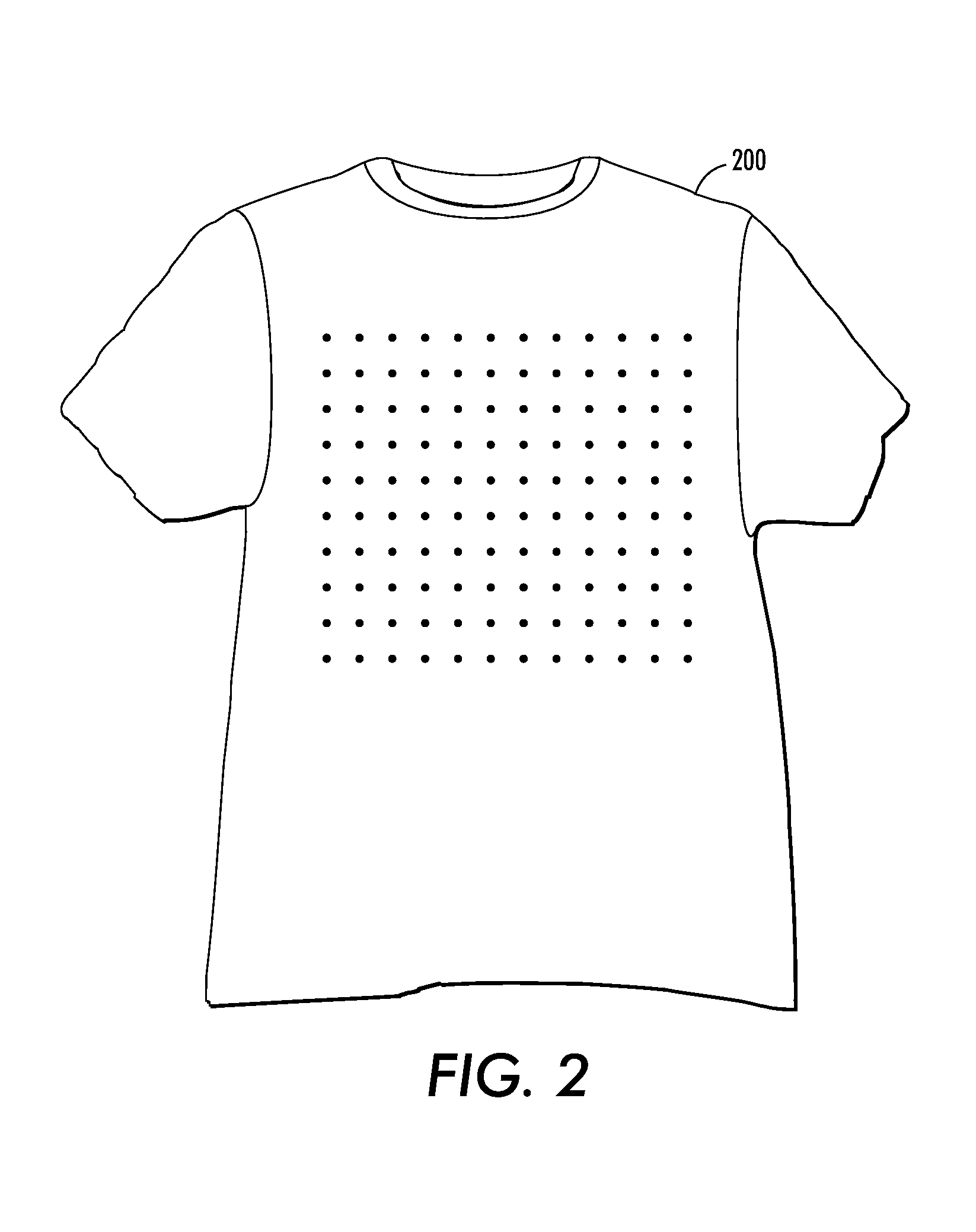

Respiratory function estimation from a 2D monocular video

What is disclosed is a system and method for processing a video acquired using a 2D monocular video camera system to assess respiratory function of a subject of interest. In various embodiments hereof, respiration-related video signals are obtained from a temporal sequence of 3D surface maps that have been reconstructed based on an amount of distortion detected in a pattern placed over the subject's thoracic region (chest area) during video acquisition relative to known spatial characteristics of an undistorted reference pattern. Volume data and frequency information are obtained from the processed video signals to estimate chest volume and respiration rate. Other respiratory function estimations of the subject in the video can also be derived. The obtained estimations are communicated to a medical professional for assessment. The teachings hereof find their uses in settings where it is desirable to assess patient respiratory function in a non-contact, remote sensing environment.

Owner:XEROX CORP
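Once a chest-volume signal has been obtained from the reconstructed 3D surface maps, the respiration rate is a frequency estimate. A minimal sketch, assuming a uniformly sampled volume signal and an assumed breathing band of 0.1 to 1 Hz, picks the frequency with the largest DFT magnitude in that band; the brute-force DFT is a swapped-in simple technique, not the disclosed processing, and the function name and band limits are assumptions.

```python
import math
from typing import Sequence


def respiration_rate_bpm(volume: Sequence[float], fs: float,
                         f_lo: float = 0.1, f_hi: float = 1.0,
                         step: float = 0.005) -> float:
    """Breaths per minute from a chest-volume signal sampled at fs Hz.

    Evaluates the DFT power on a grid of frequencies in [f_lo, f_hi]
    (an assumed breathing band) and returns the strongest one.
    """
    mean = sum(volume) / len(volume)
    x = [v - mean for v in volume]  # remove the mean (DC) volume
    best_f, best_power = f_lo, -1.0
    for k in range(int(round((f_hi - f_lo) / step)) + 1):
        f = f_lo + k * step
        w = 2 * math.pi * f / fs
        re = sum(xi * math.cos(w * i) for i, xi in enumerate(x))
        im = sum(xi * math.sin(w * i) for i, xi in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return 60.0 * best_f
```

A 60 s recording at 10 Hz of a volume signal oscillating at 0.25 Hz yields about 15 breaths per minute; the grid step bounds the resolution at 0.3 breaths per minute.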

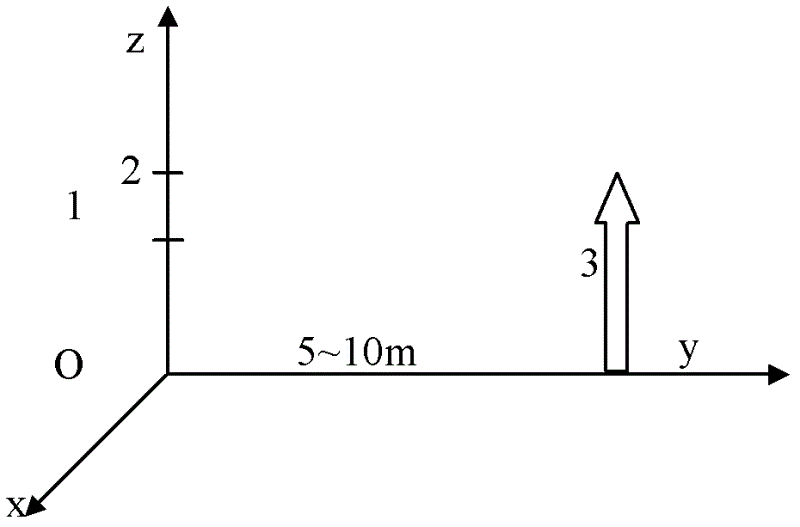

Monocular video based real-time posture estimation and distance measurement method for three-dimensional rigid body object

Status: Inactive · Publication: CN101907459A · Tags: Improve concealment; Low cost; Picture taking arrangements; Feature vector; Stereoscopic imaging

The invention discloses a monocular-video-based method for real-time pose estimation and distance measurement of a three-dimensional rigid object, comprising the following steps: capturing observation video of the object with an optical observation device; feeding the captured image sequence into an object segmentation module to obtain a binary segmentation image and a contour image of the object; extracting feature vectors of the target's contour points to generate a multi-feature-driven distance image; establishing tentative correspondences between homologous features in the input two-dimensional image sequence and the target's three-dimensional model; inverting the object's three-dimensional pose and distance parameters from the image; feeding back the inverted pose and distance parameters; and correcting and updating the tentative correspondences between the two-dimensional image sequence and the three-dimensional model until they satisfy the iteration stop condition. The method requires no three-dimensional imaging device, does not damage the observed object, and offers good concealment, low cost and a high degree of automation.

Owner:TSINGHUA UNIV

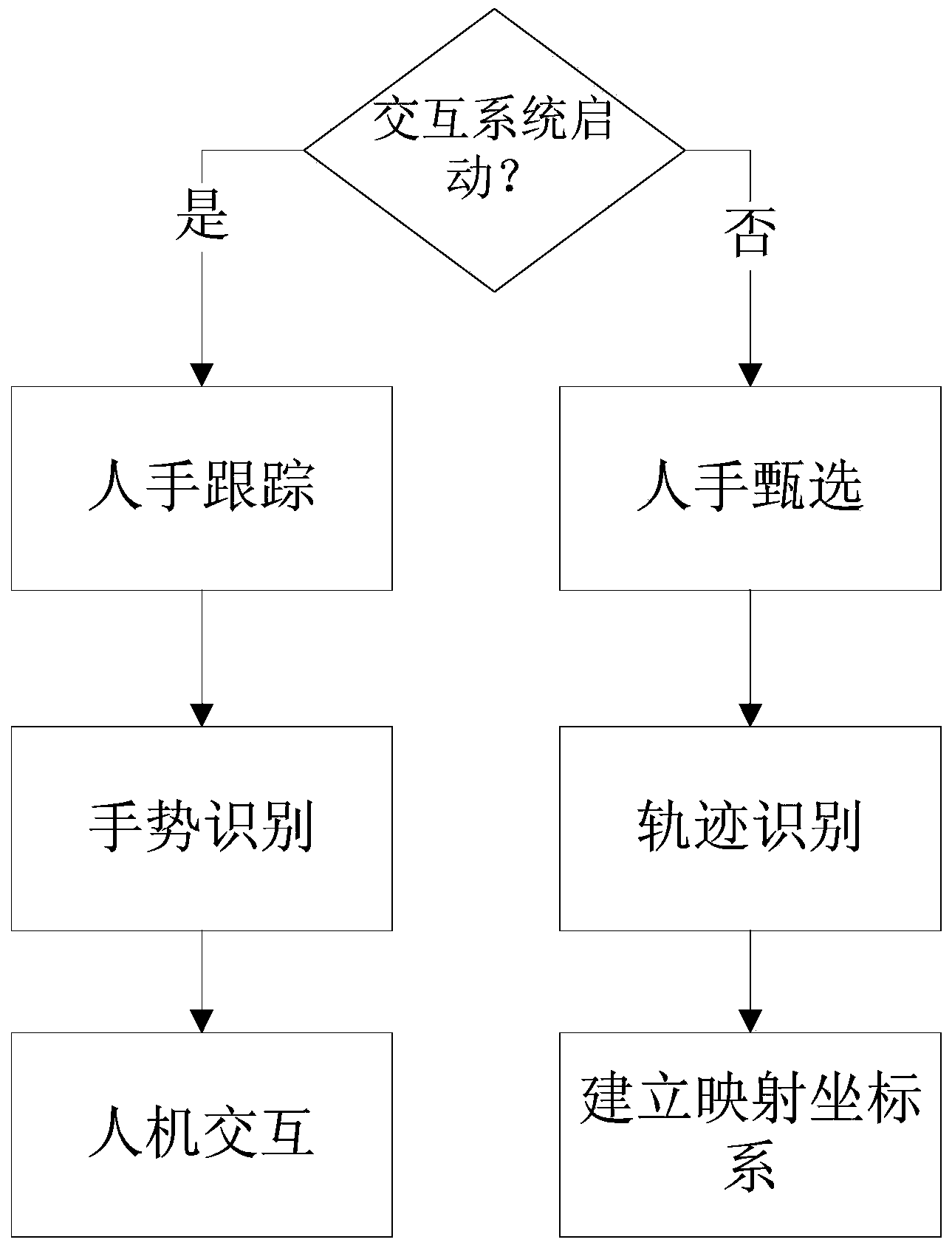

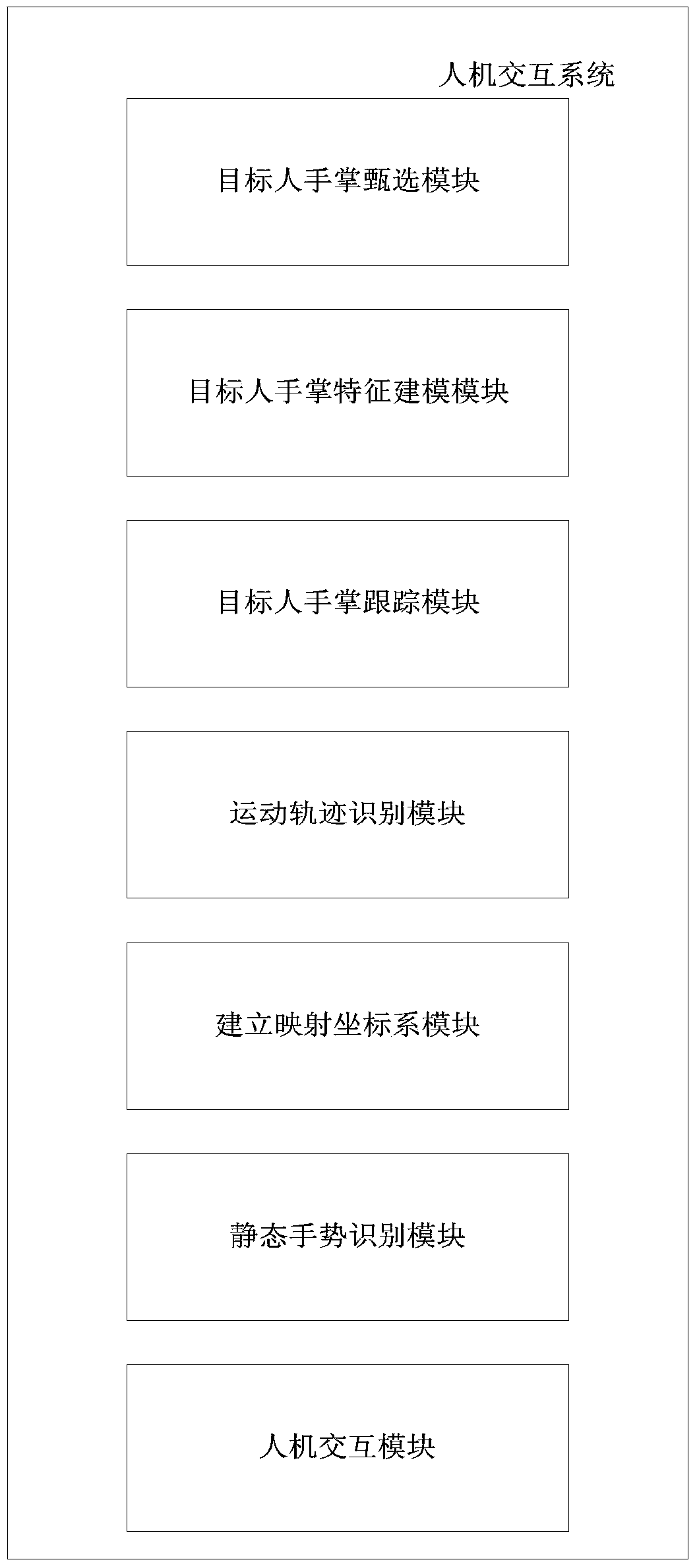

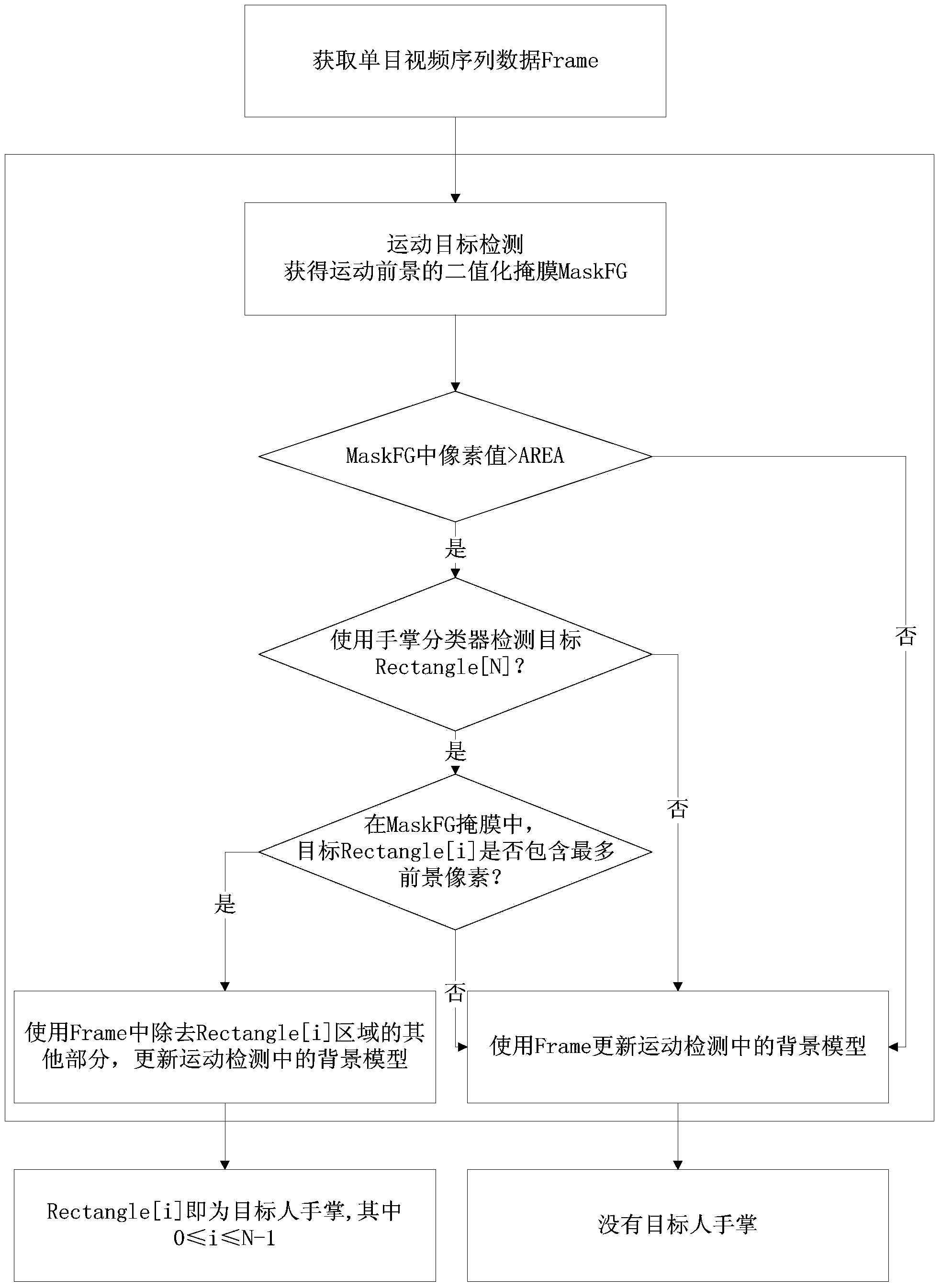

Target person hand gesture interaction method based on monocular video sequence

Status: Active · Publication: CN103530613A · Tags: Guaranteed uptime; Input/output for user-computer interaction; Character and pattern recognition; Interaction control; Minimum bounding rectangle

The invention provides a hand-gesture interaction method for a target person based on a monocular video sequence. The method comprises the following steps: first, acquire images from a monocular video frame sequence, extract a moving-foreground mask with a motion detection algorithm, use a palm classifier to detect the minimum bounding rectangle of each palm, and select the palm of the target person; extract a color histogram model from the target person's palm image, compute the back-projection map of that palm image, and build an area model of the target person's palm; for the tracked target region, compute the back-projection map with the color model and calculate the area of the target person's hand in the current frame to determine its static gesture, either a fist or an open palm; and use the fist or palm gesture to perform click or move interaction control. The target person's hand can be singled out against a complex background, any hand gesture can be tracked, and any preset trajectory can be recognized. The method is simple, fast and stable, and can run on embedded platforms with limited computing power.

Owner:天辰时代科技有限公司
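The color-model step (a hue histogram from the palm, back-projection onto later frames, and an area comparison to tell a fist from an open palm) can be sketched in pure Python on hue values. The 0 to 179 hue range follows the OpenCV 8-bit HSV convention, and the bin count, probability threshold and fist-to-palm area ratio are hypothetical parameters chosen for illustration, not values from the patent.

```python
from typing import List

HUE_MAX = 180  # hue values in 0..179, as in OpenCV's 8-bit HSV convention


def hue_bin(h: int, bins: int) -> int:
    """Histogram bin index of hue h."""
    return min(h * bins // HUE_MAX, bins - 1)


def hue_histogram(palm_hues: List[int], bins: int = 16) -> List[float]:
    """Hue histogram of the palm pixels, normalized so the peak bin is 1.0."""
    hist = [0] * bins
    for h in palm_hues:
        hist[hue_bin(h, bins)] += 1
    peak = max(hist) or 1
    return [c / peak for c in hist]


def back_project(hue_image: List[List[int]], hist: List[float]) -> List[List[float]]:
    """Replace each pixel by the histogram value of its hue bin."""
    bins = len(hist)
    return [[hist[hue_bin(h, bins)] for h in row] for row in hue_image]


def hand_area(prob_map: List[List[float]], threshold: float = 0.5) -> int:
    """Number of pixels whose back-projected value reaches the threshold."""
    return sum(1 for row in prob_map for p in row if p >= threshold)


def static_gesture(area: int, palm_area: int, fist_ratio: float = 0.6) -> str:
    """A closed fist covers noticeably less area than the open-palm model."""
    return "fist" if area < fist_ratio * palm_area else "palm"
```

Comparing against the stored palm area, rather than a fixed pixel count, keeps the rule roughly independent of how far the hand is from the camera, as long as that distance does not change much between frames.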

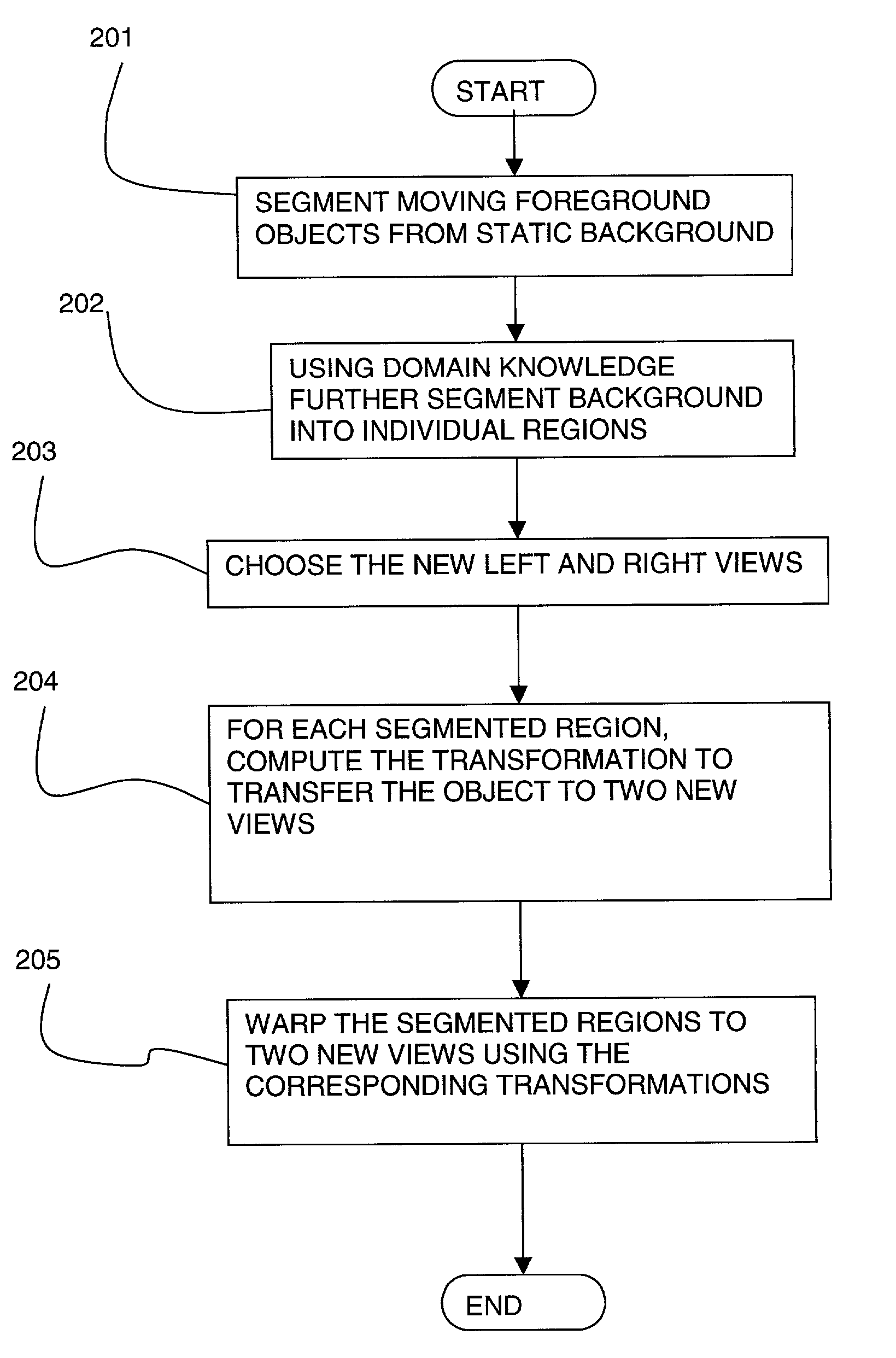

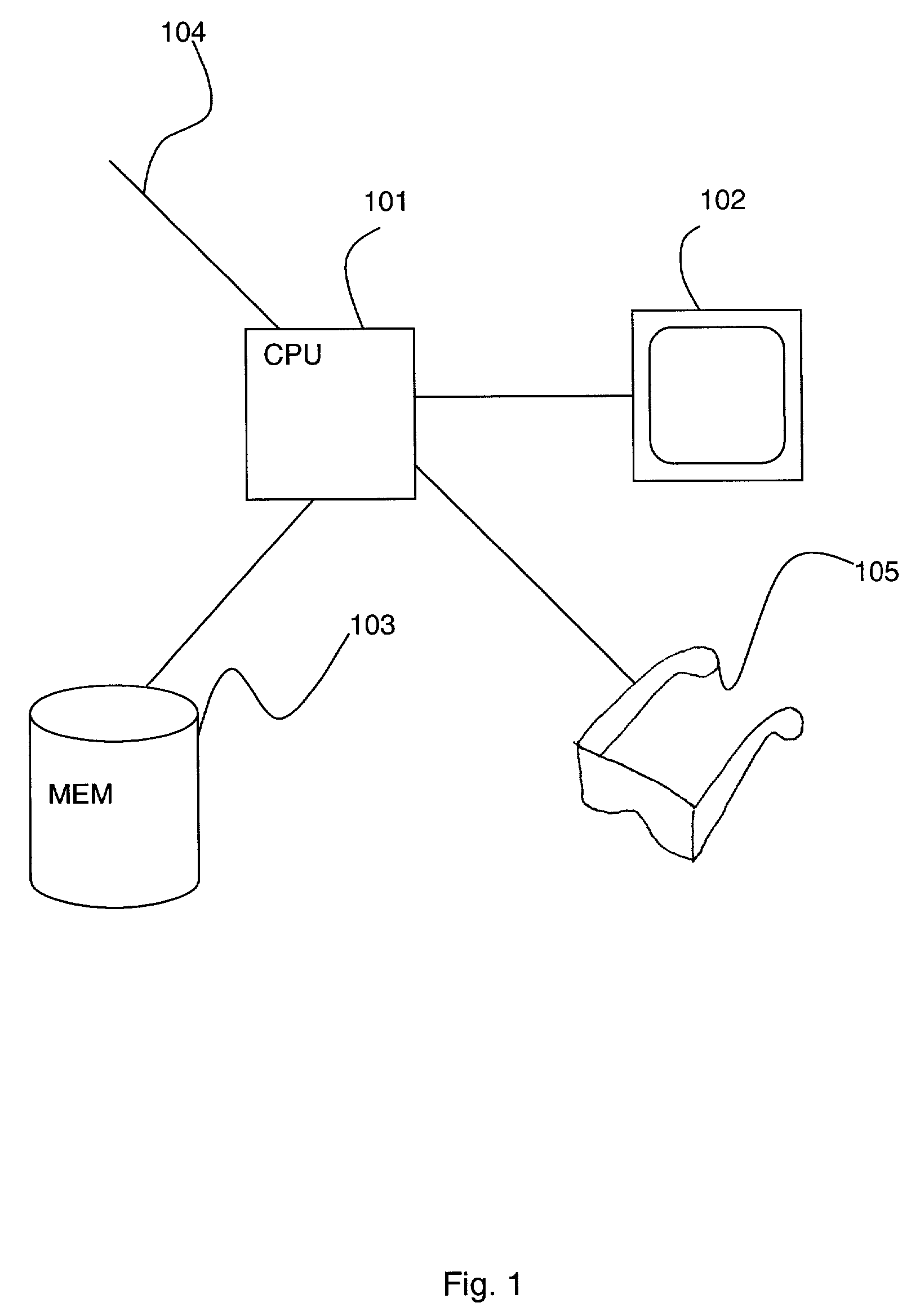

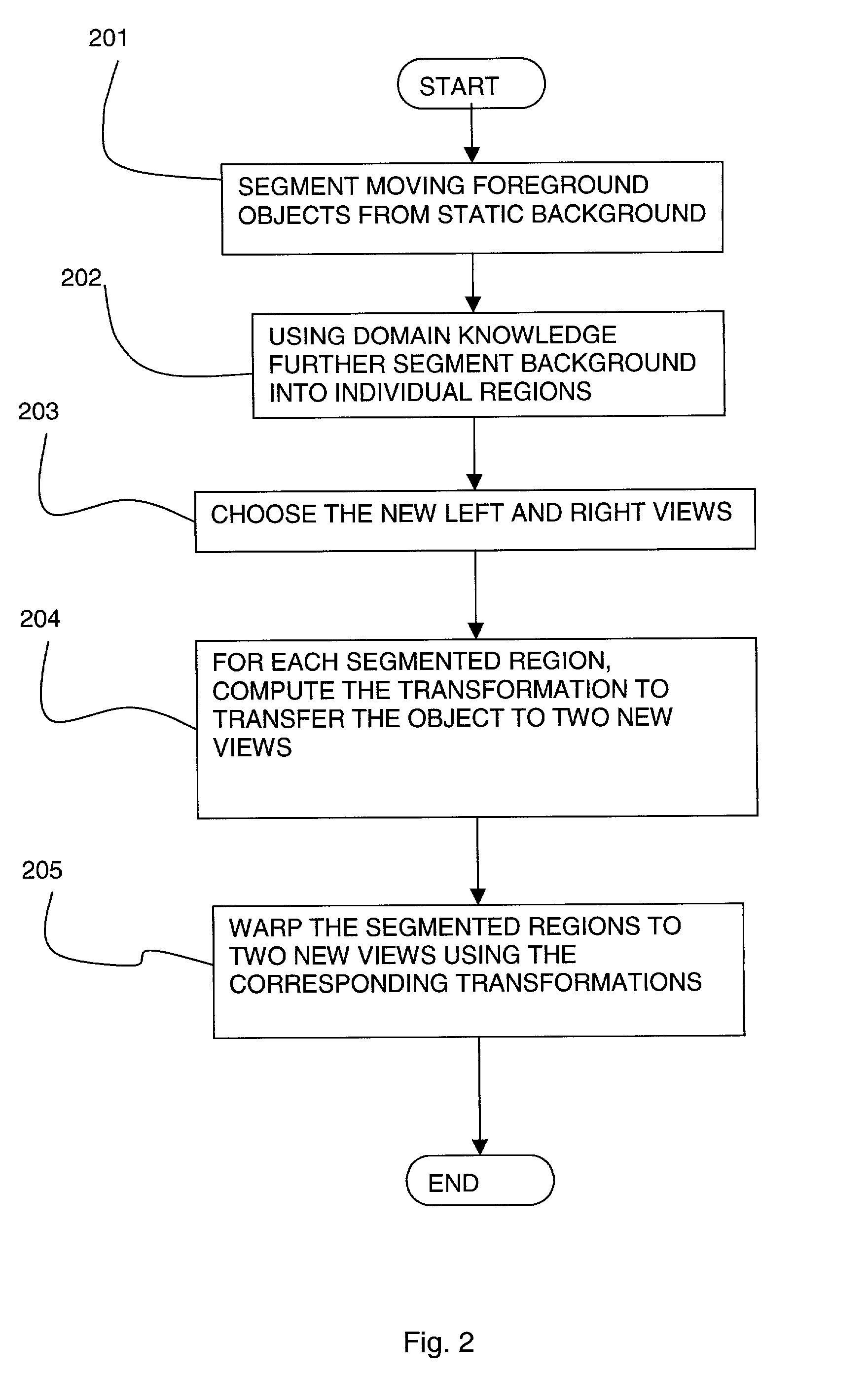

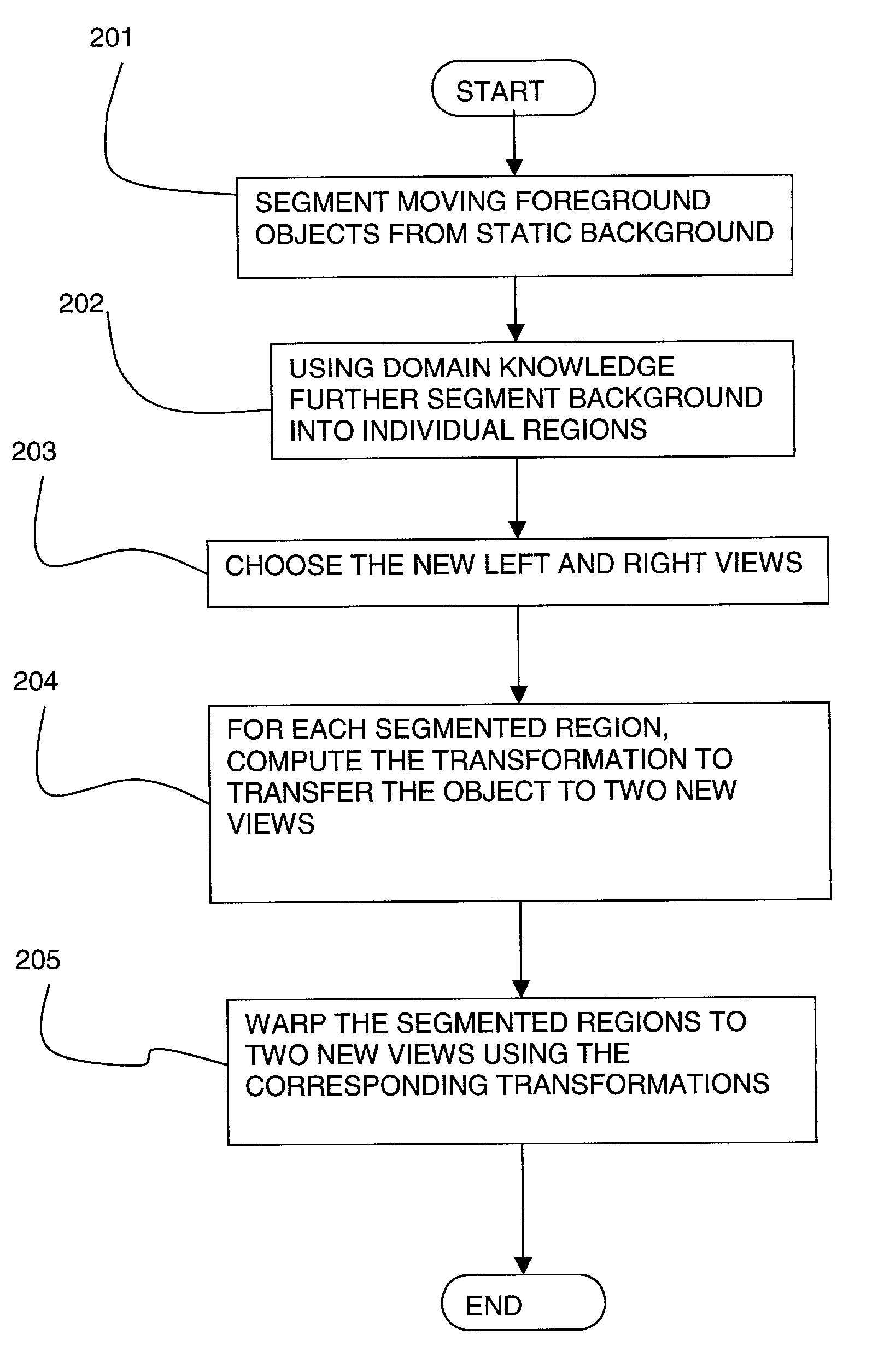

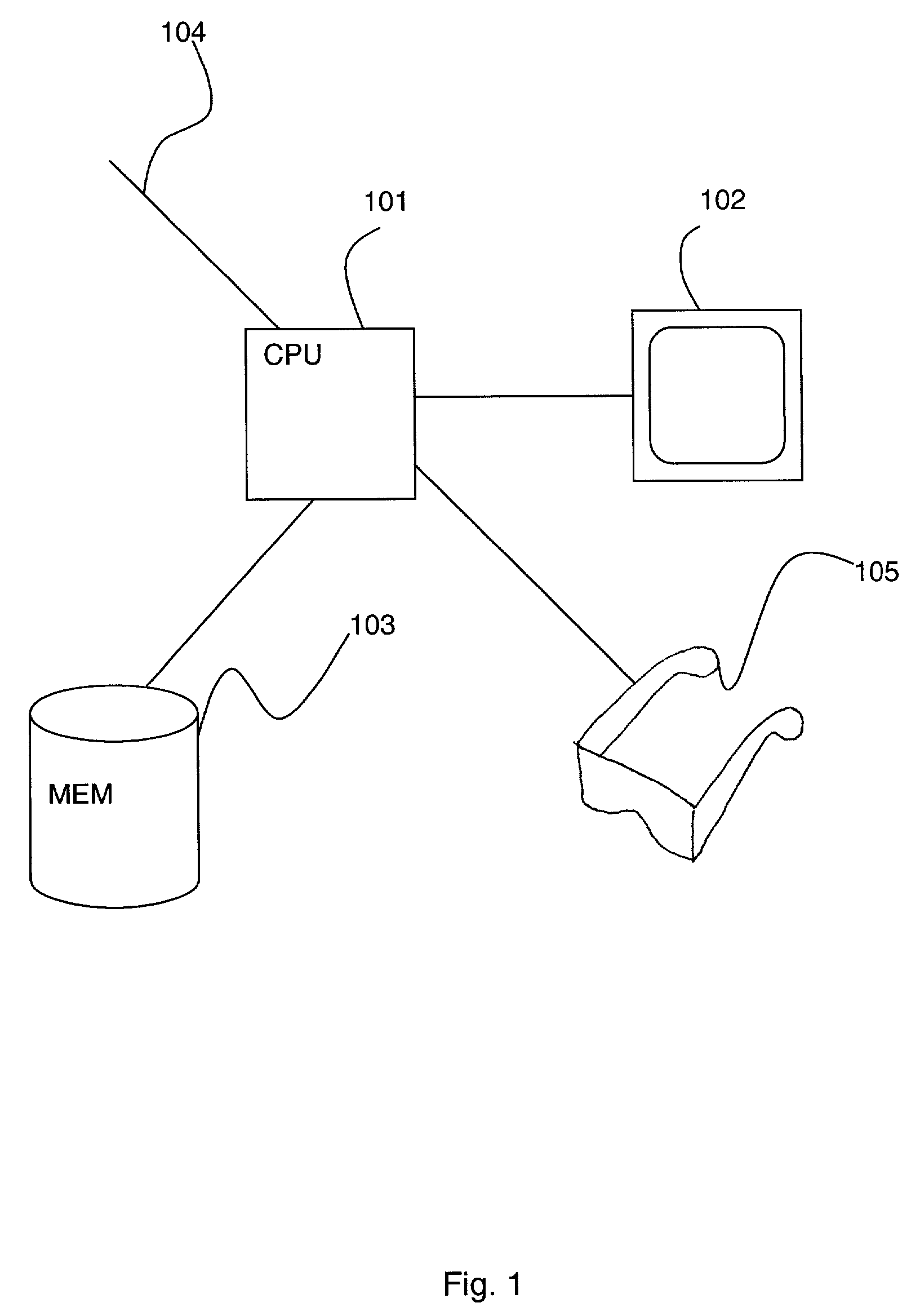

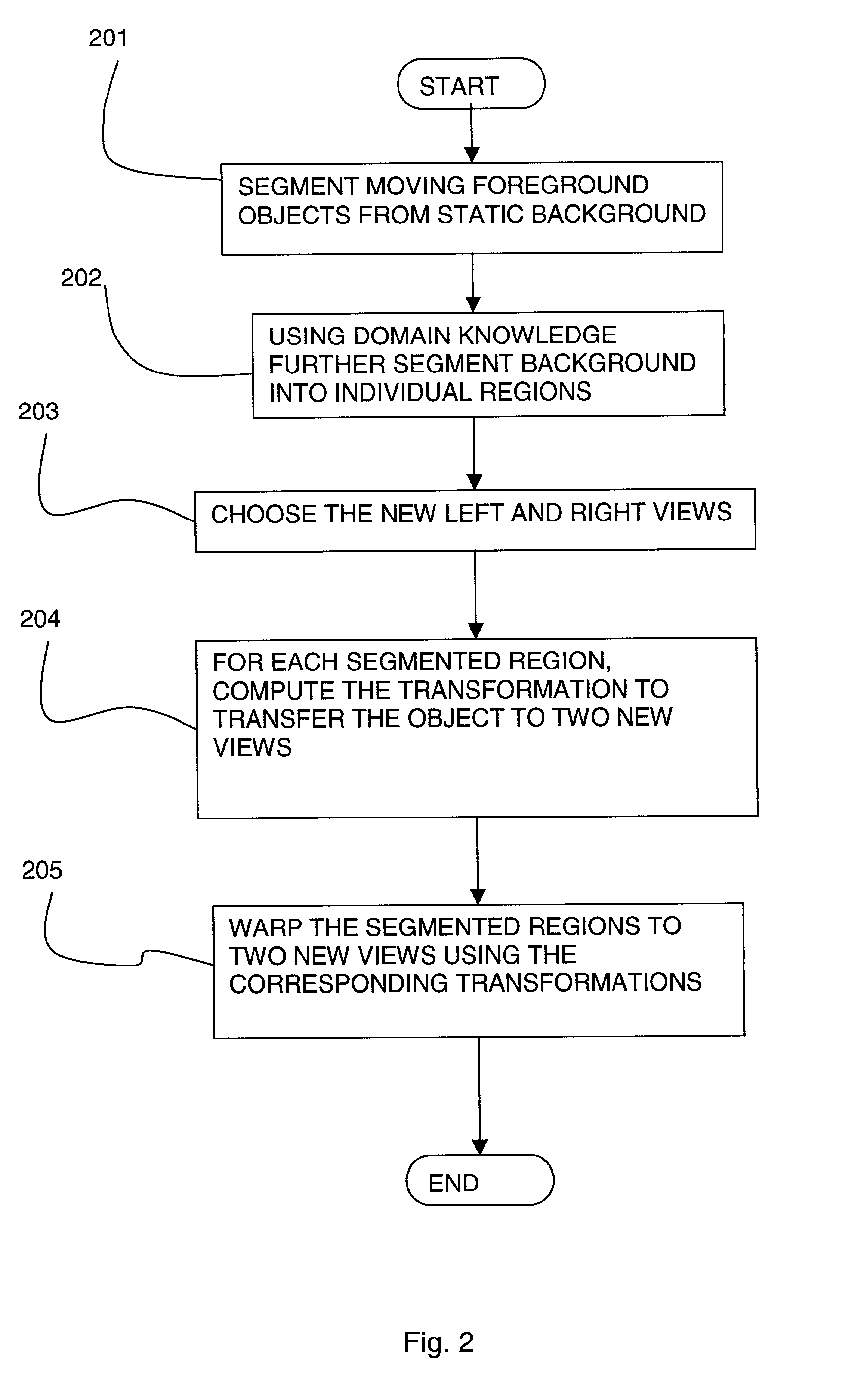

N-view synthesis from monocular video of certain broadcast and stored mass media content

Status: Inactive · Publication: US6965379B2 · Tags: Improve approximation; Suitable display; Image enhancement; Image analysis; View synthesis; Computer vision

A monocular input image is transformed to give it an enhanced three dimensional appearance by creating at least two output images. Foreground and background objects are segmented in the input image and transformed differently from each other, so that the foreground objects appear to stand out from the background. Given a sequence of input images, the foreground objects will appear to move differently from the background objects in the output images.

Owner:FUNAI ELECTRIC CO LTD
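The idea of segmenting foreground from background and transforming them differently can be illustrated on a single image row: the background stays fixed while the foreground pixels are shifted horizontally by a different amount in each output view, so the foreground appears to stand out. This is a minimal sketch assuming a given foreground mask, integer shifts, and holes filled from the nearest background pixel to the left (or to the right at the start of the row); it is not the disclosed transform, and all names are hypothetical.

```python
from typing import List, Optional


def background_only(row: List[int], mask: List[bool]) -> List[Optional[int]]:
    """Replace foreground pixels with the nearest background value (left first, else right)."""
    out: List[Optional[int]] = list(row)
    last: Optional[int] = None
    for i, is_fg in enumerate(mask):
        if is_fg:
            out[i] = last
        else:
            last = row[i]
    nxt: Optional[int] = None
    for i in range(len(row) - 1, -1, -1):
        if not mask[i]:
            nxt = row[i]
        elif out[i] is None:
            out[i] = nxt  # foreground at the row start: borrow from the right
    return out


def shift_view(row: List[int], mask: List[bool], shift: int) -> List[Optional[int]]:
    """One output view: background fixed, foreground pasted `shift` pixels to the right."""
    out = background_only(row, mask)
    for i, is_fg in enumerate(mask):
        j = i + shift
        if is_fg and 0 <= j < len(row):
            out[j] = row[i]
    return out


def synthesize_views(row: List[int], mask: List[bool], n_views: int,
                     max_shift: int) -> List[List[Optional[int]]]:
    """n_views >= 2 views with foreground shifts spread evenly over [-max_shift, max_shift]."""
    shifts = [round(-max_shift + 2 * max_shift * k / (n_views - 1)) for k in range(n_views)]
    return [shift_view(row, mask, s) for s in shifts]
```

For the row `[1, 1, 5, 5, 2, 2, 2]` with the two `5` pixels as foreground, two views with `max_shift=1` move the foreground one pixel left and one pixel right while the background stays put, which is what makes the foreground appear to move differently from the background across views.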

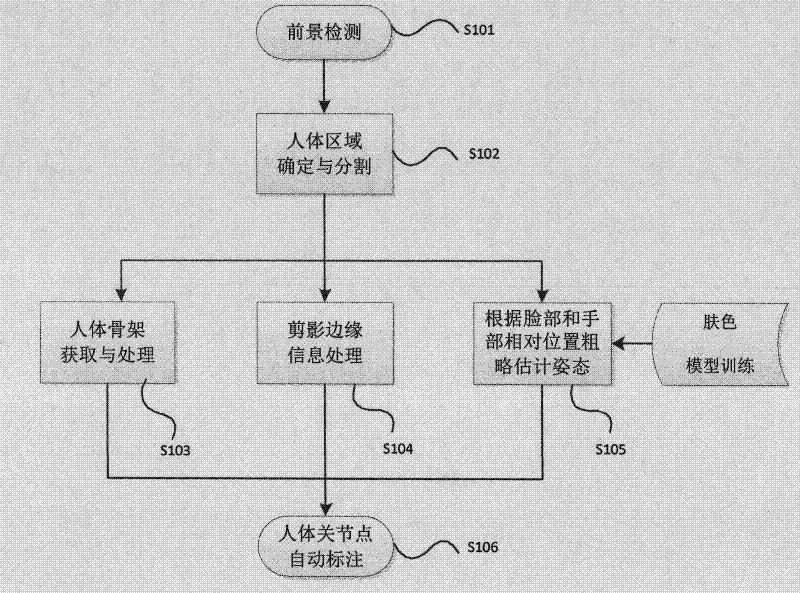

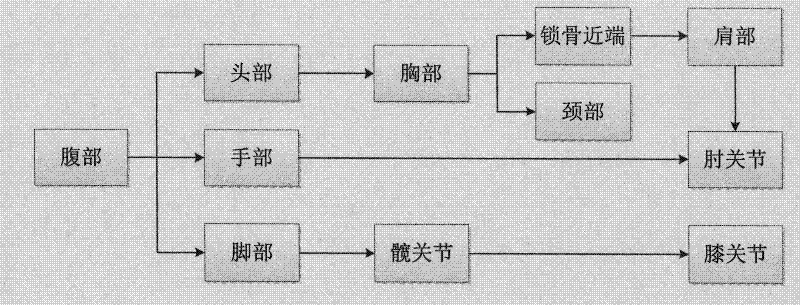

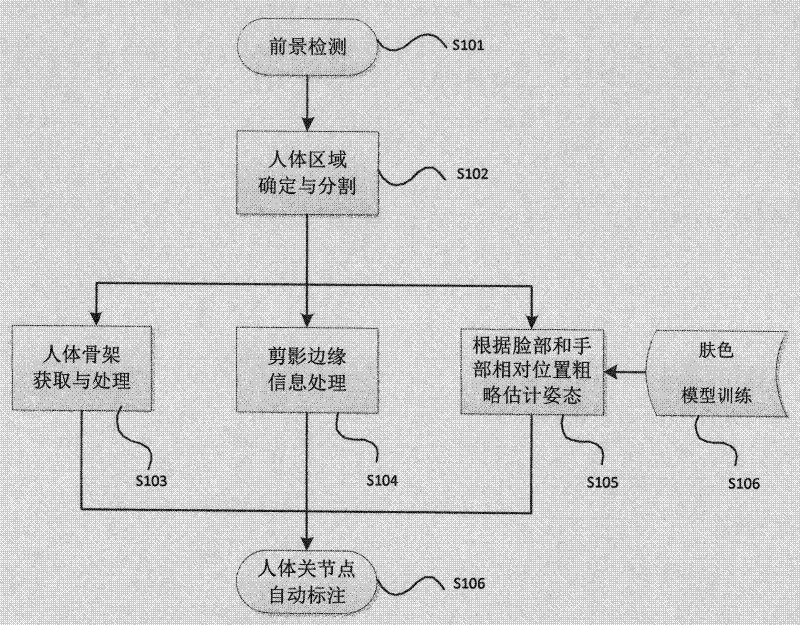

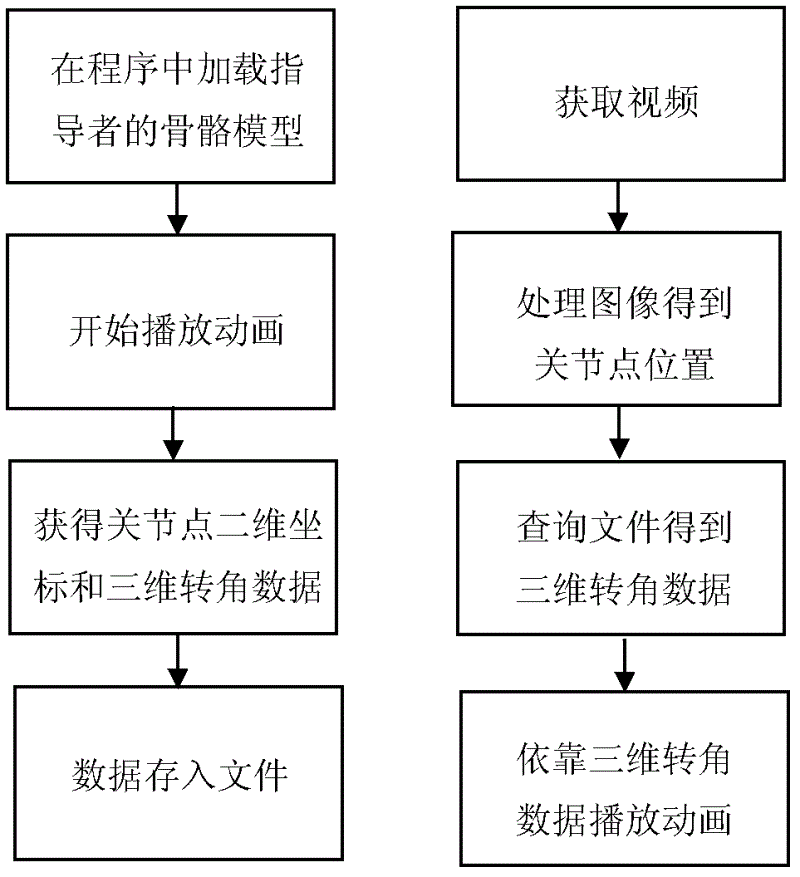

Automatic labeling method for human joint based on monocular video

Status: Active · Publication: CN102609683A · Tags: Easy to get; Reduce complexity; Character and pattern recognition; Feature vector; Skin color

The invention provides an automatic labeling method for human joints based on monocular video. The method comprises the following steps: detecting the foreground and storing it as a region of interest; locating the human body region, segmenting body parts and obtaining the silhouette contour; extracting the human skeleton and its key points; roughly estimating the body posture from the relative positions of the face and hands; and automatically labeling the human joint points. During labeling, silhouette contour information, skin color information and skeleton information extracted from the human silhouette are used together, which ensures accurate joint-point extraction. The method segments human body parts accurately and efficiently, obtains the posture of each limb, and provides a good basis for subsequently extracting and processing human feature vectors.

Owner:BEIJING UNIV OF POSTS & TELECOMM

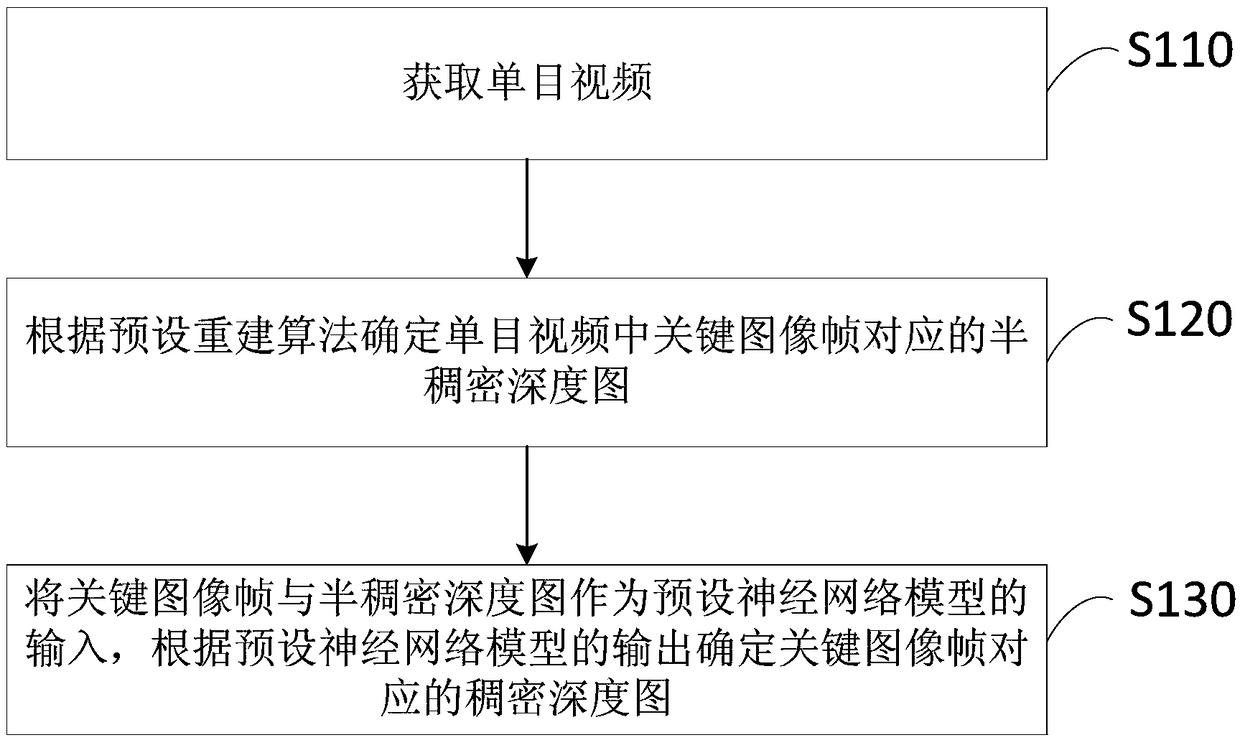

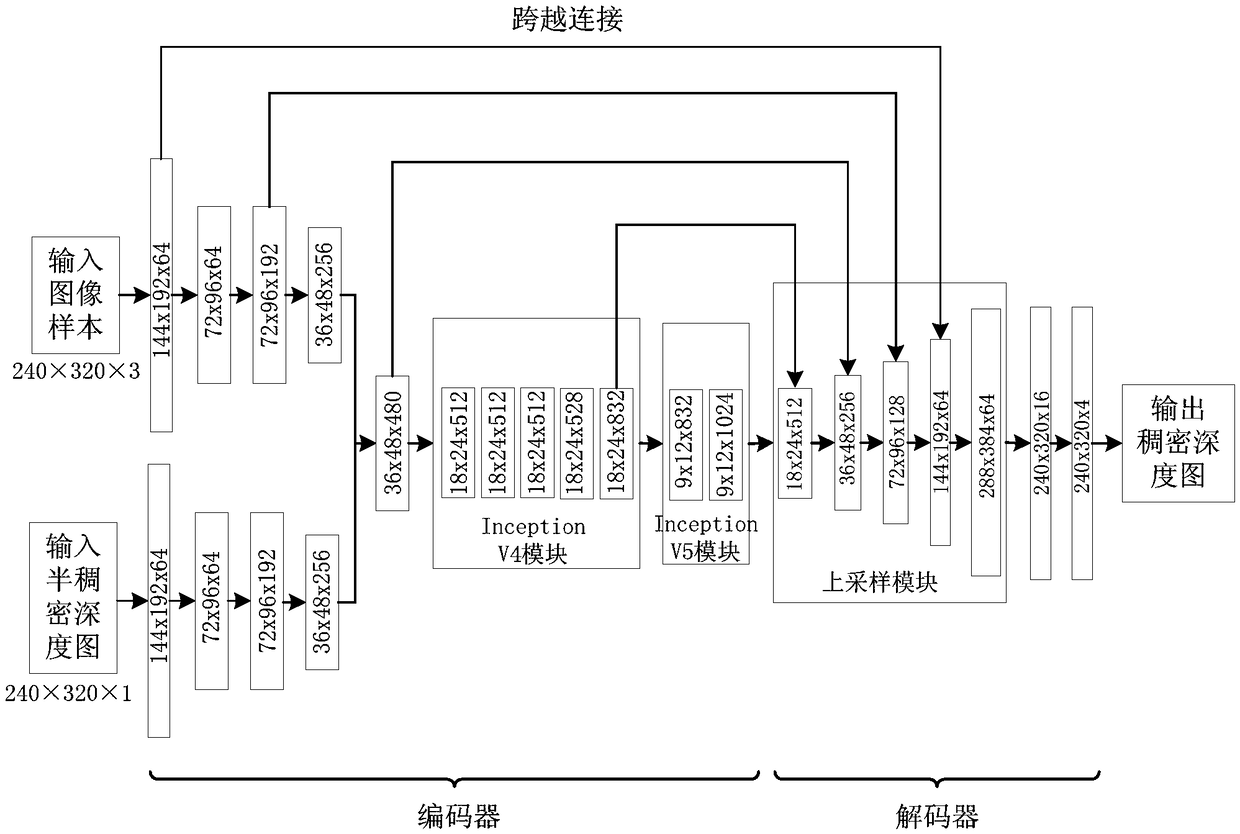

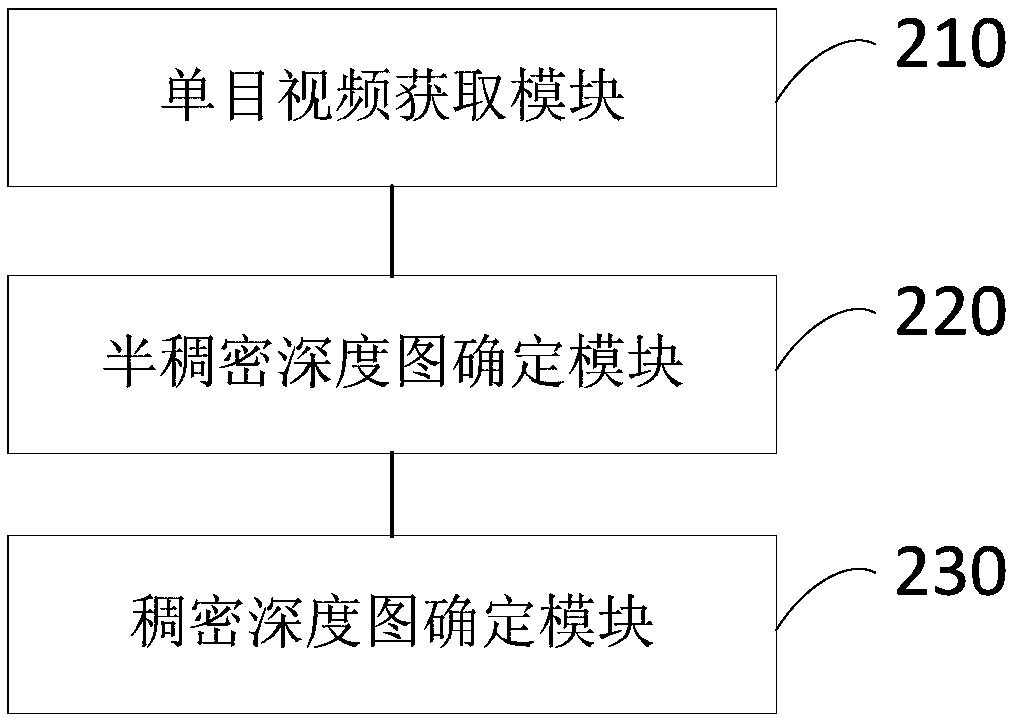

Monocular depth estimation method, apparatus, terminal, and storage medium

Status: Active · Publication: CN109087349A · Tags: Solve the accuracy problem; Solve the problem of low generalization ability; Image enhancement; Image analysis; Estimation methods; Network model

The embodiment of the invention discloses a monocular depth estimation method, a device, a terminal and a storage medium. The method comprises the following steps: obtaining monocular video; determining, with a preset reconstruction algorithm, a semi-dense depth map corresponding to key image frames in the monocular video; and using a key image frame and its semi-dense depth map as inputs of a preset neural network model, and determining the dense depth map corresponding to the key image frame from the model's output. The technical solution effectively combines a preset reconstruction algorithm with a preset neural network model, thereby obtaining dense, high-precision depth maps.

Owner:HISCENE INFORMATION TECH CO LTD

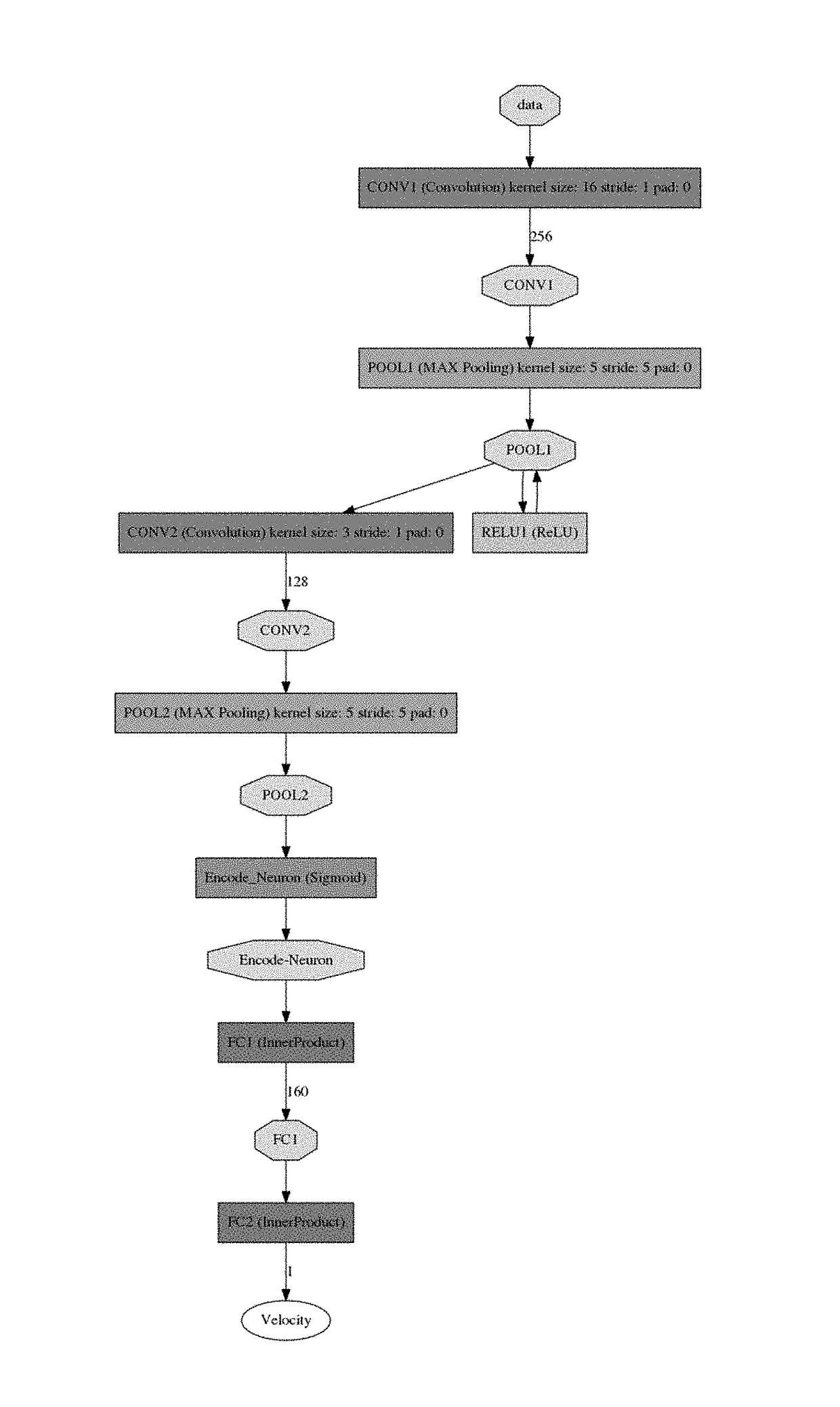

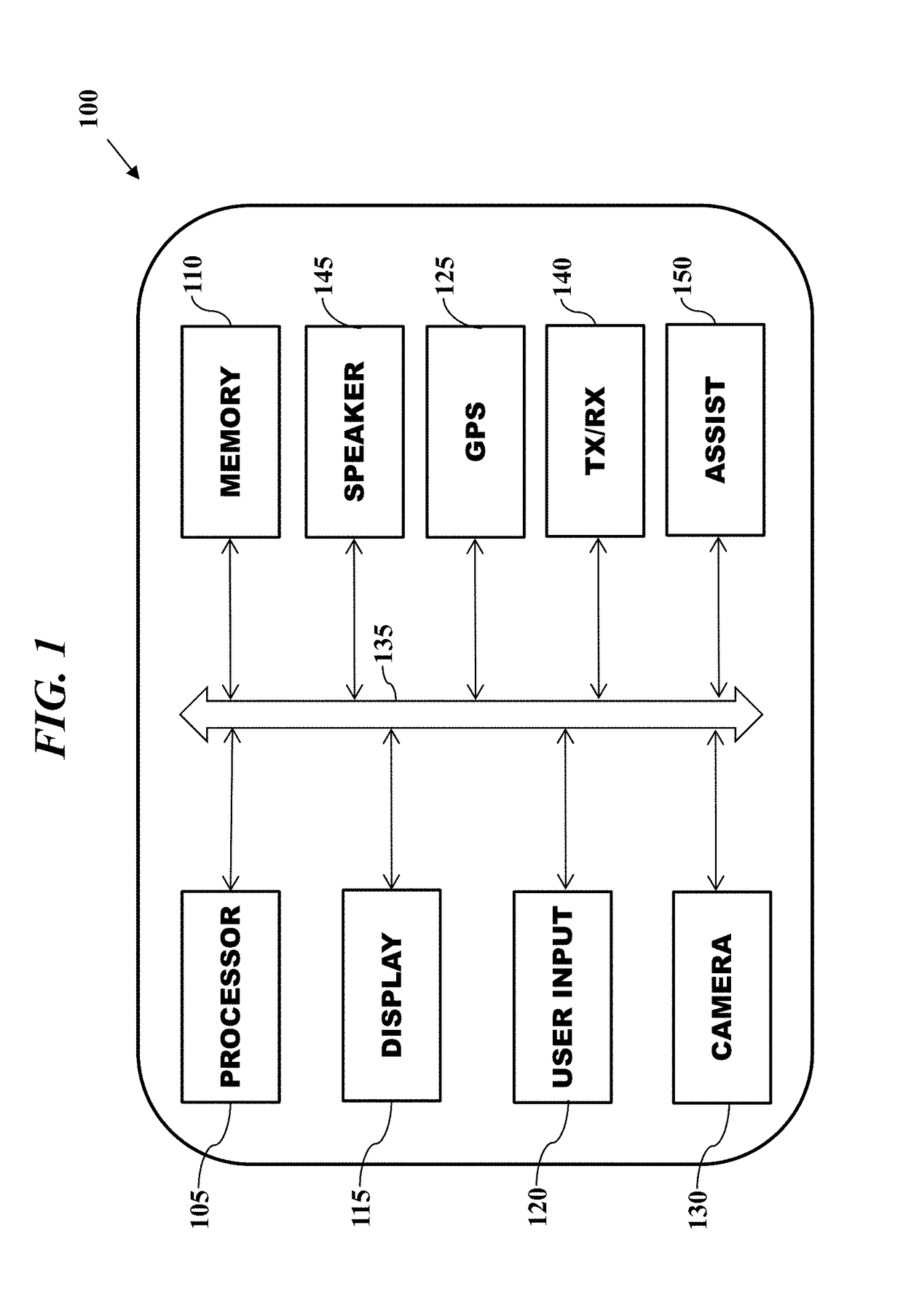

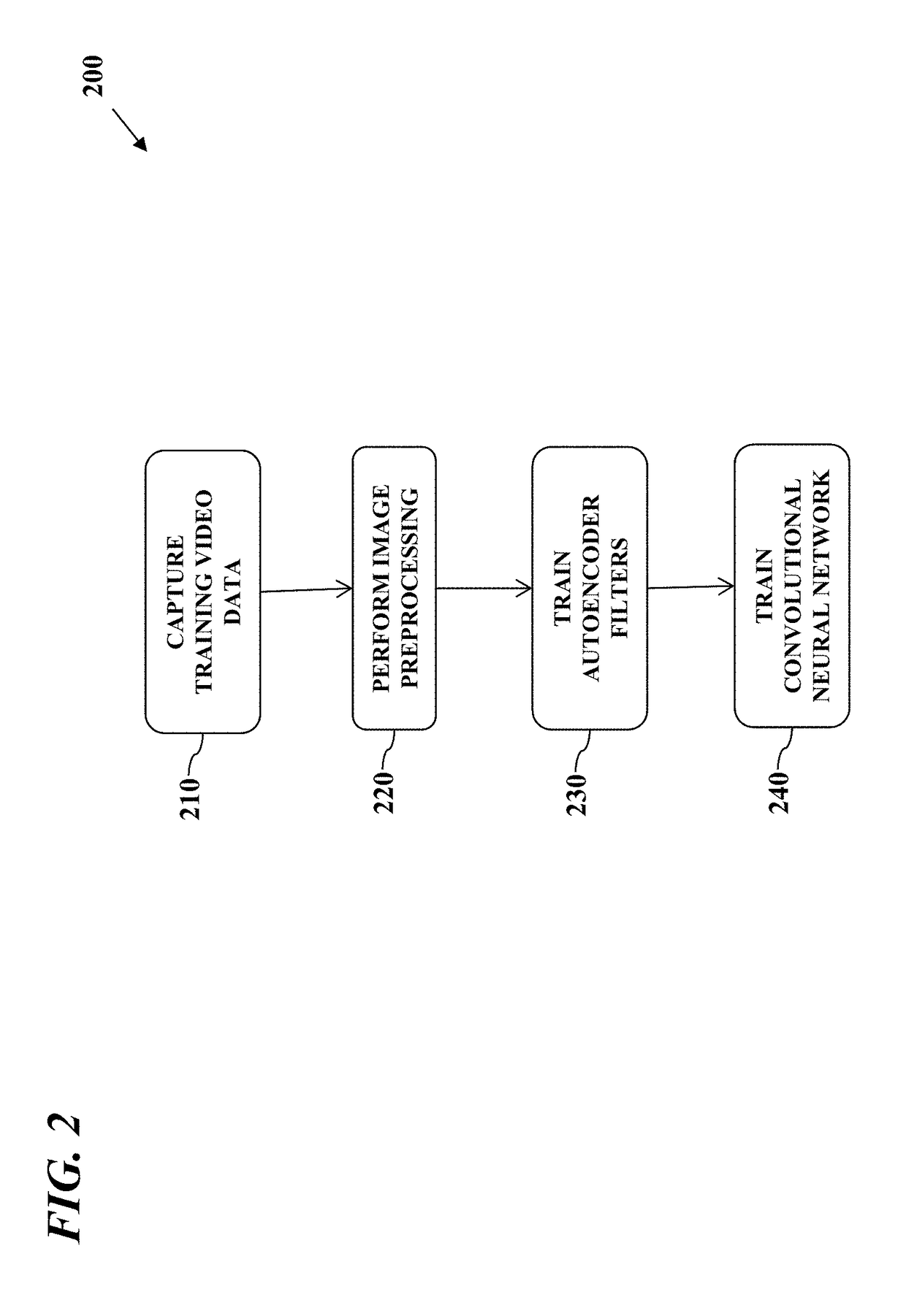

System and Method for Estimating Vehicular Motion Based on Monocular Video Data

A vehicle movement parameter, such as ego-speed, is estimated using real-time images captured by a single camera. The captured images may be analyzed by a pre-trained convolutional neural network to estimate vehicle movement based on monocular video data. The convolutional neural network may be pre-trained using filters from a synchrony autoencoder that were trained using unlabeled video data captured by the vehicle's camera while the vehicle was in motion. A parameter corresponding to the estimated vehicle movement may be output to the driver or to a driver assistance system for use in controlling the vehicle.

Owner:BAYERISCHE MOTOREN WERKE AG +1

Respiratory function estimation from a 2D monocular video

Status: Active · Publication: US20140142435A1 · Tags: Readily apparent; Respiratory organ evaluation; Sensors; Thoracic region; Reference patterns

What is disclosed is a system and method for processing a video acquired using a 2D monocular video camera system to assess respiratory function of a subject of interest. In various embodiments hereof, respiration-related video signals are obtained from a temporal sequence of 3D surface maps that have been reconstructed based on an amount of distortion detected in a pattern placed over the subject's thoracic region (chest area) during video acquisition relative to known spatial characteristics of an undistorted reference pattern. Volume data and frequency information are obtained from the processed video signals to estimate chest volume and respiration rate. Other respiratory function estimations of the subject in the video can also be derived. The obtained estimations are communicated to a medical professional for assessment. The teachings hereof find their uses in settings where it is desirable to assess patient respiratory function in a non-contact, remote sensing environment.

Owner:XEROX CORP

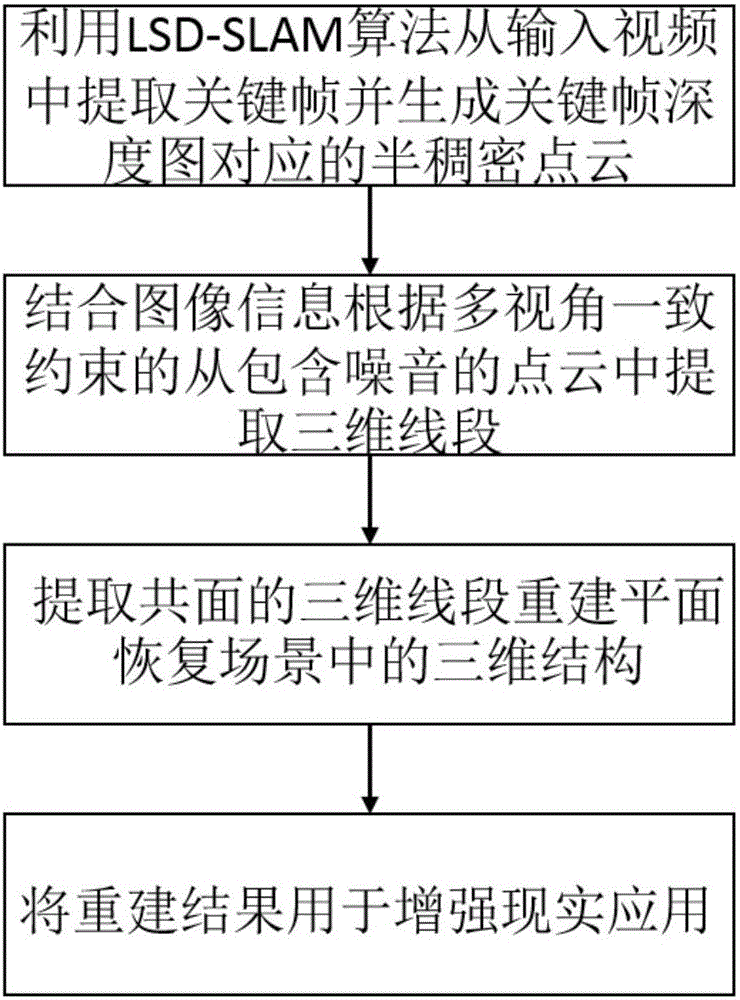

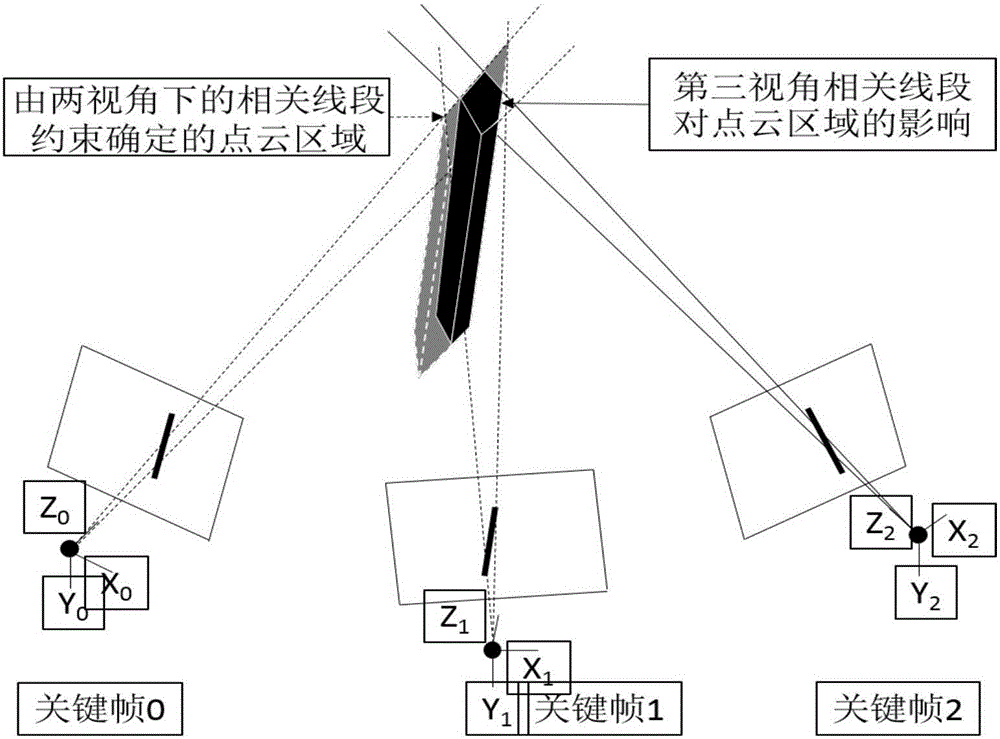

Multi-angle consistent plane detection and analysis method for monocular video scene three dimensional structure

Status: Active · Publication: CN106570507A · Tags: Good effect; Character and pattern recognition; Stereoscopic systems; In plane; Point cloud

The invention discloses a multi-view-consistent plane detection and analysis method for the three-dimensional structure of monocular video scenes. The method comprises: taking a monocular video as input, extracting key frames and generating a noisy semi-dense point cloud; extracting two-dimensional line segments from the key frame images and back-projecting them into three-dimensional space to obtain the corresponding point cloud; projecting the line segments extracted from a single frame into other key frames, filtering out noisy points under the multi-view consistency constraint to keep the points that satisfy it, and fitting these points to obtain three-dimensional line segments; extracting intersecting segments from the three-dimensional line segments and constructing planes under the constraint that two intersecting lines must lie in the same plane, then performing detection and analysis in the noisy three-dimensional point cloud under the multi-view consistency constraint to obtain the planes in the monocular video scene; and applying the reconstructed planes to augmented reality as users require. The method performs well in plane reconstruction and virtual-real fusion and can be widely applied in augmented reality.

Owner:BEIHANG UNIV
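The constraint that two intersecting 3D lines lie in one plane gives a direct way to construct a candidate plane: its normal is the cross product of the two line directions, and noisy cloud points can then be tested against it by point-to-plane distance. This sketch assumes each fitted 3D segment is given as a point and a direction; the tolerances are hypothetical, and the patent's multi-view consistency filtering is not reproduced.

```python
import math
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def plane_from_lines(p1: Vec, d1: Vec, p2: Vec, d2: Vec,
                     tol: float = 1e-6) -> Optional[Tuple[Vec, float]]:
    """Plane n·x = c through two intersecting lines, or None if parallel or skew."""
    n = cross(d1, d2)
    norm = math.sqrt(dot(n, n))
    if norm < tol:
        return None  # parallel lines do not define a unique plane
    n = (n[0] / norm, n[1] / norm, n[2] / norm)
    if abs(dot(sub(p2, p1), n)) > tol:
        return None  # skew lines are not coplanar, so they cannot intersect
    return n, dot(n, p1)


def plane_inliers(points: List[Vec], plane: Tuple[Vec, float], max_dist: float) -> List[Vec]:
    """Points whose distance to the plane is at most max_dist."""
    n, c = plane
    return [p for p in points if abs(dot(n, p) - c) <= max_dist]
```

The coplanarity test doubles as the intersection check: non-parallel lines whose connecting vector has no component along the normal must meet.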

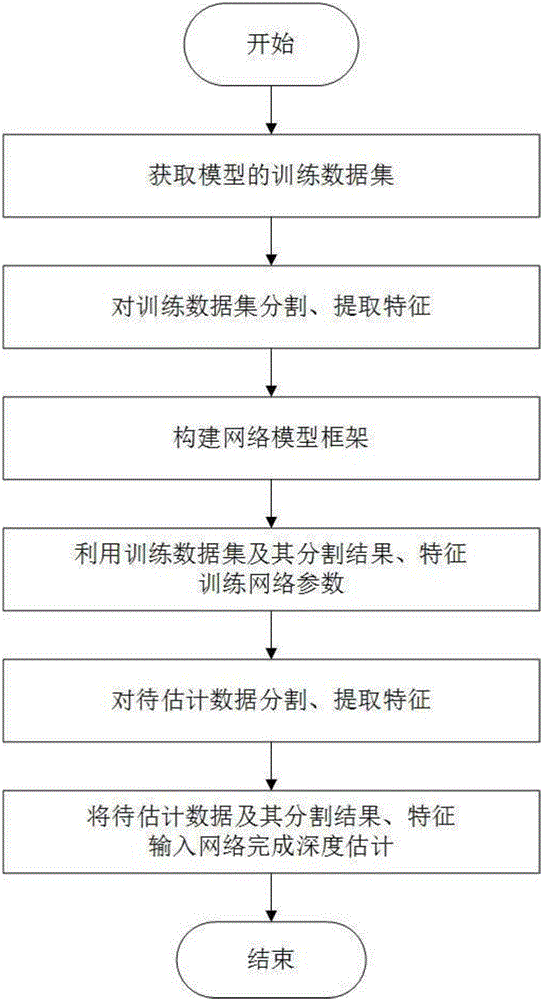

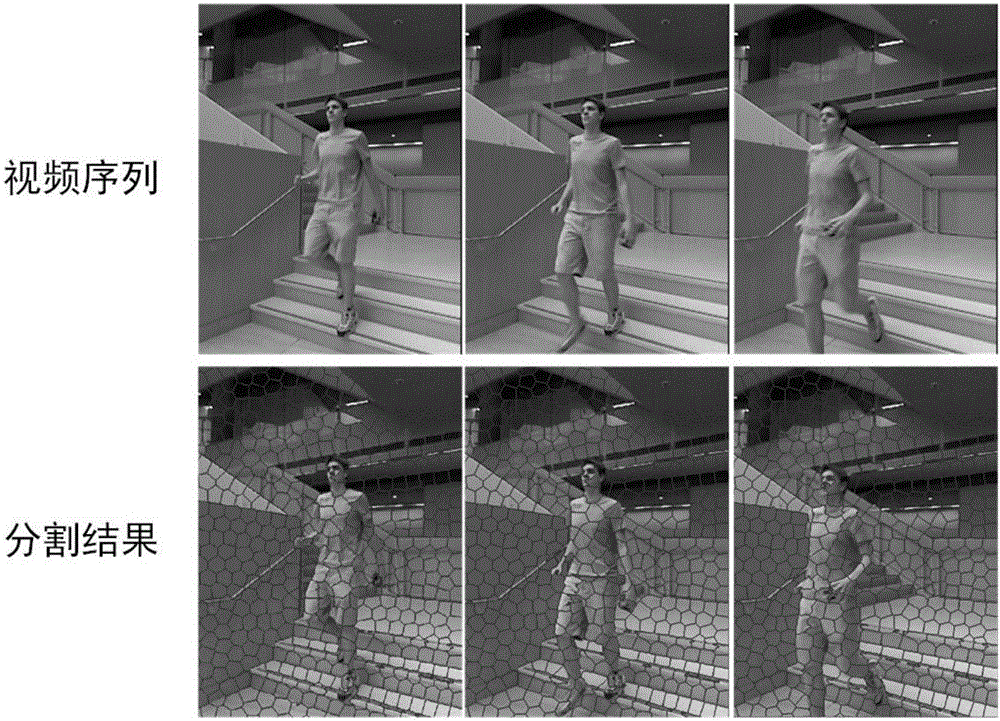

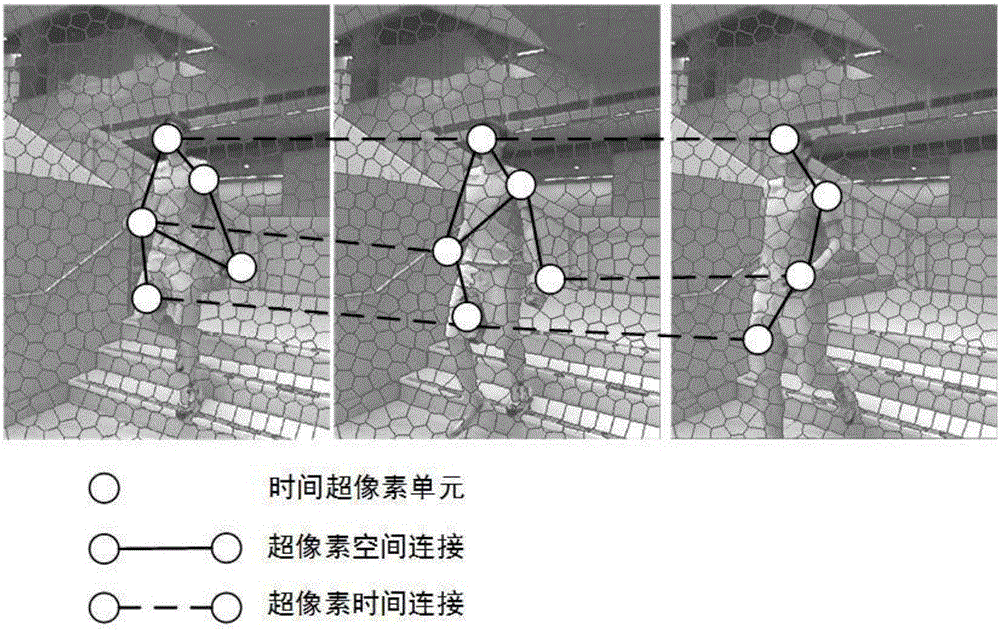

Supervised data driving-based monocular video depth estimating method

Status: Active · Publication: CN106599805A · Tags: Improve visual effects; Improve generalization ability; Character and pattern recognition; Stereoscopic video; Neural network

Owner:HUAZHONG UNIV OF SCI & TECH

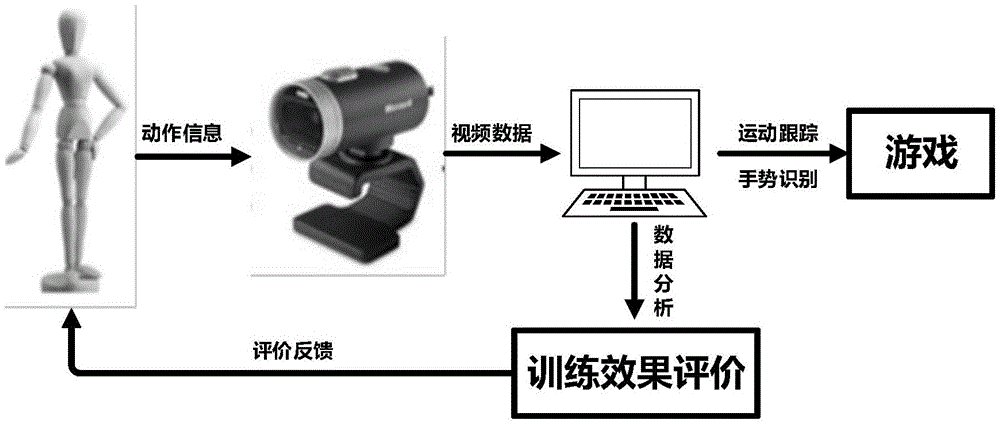

Upper limb training system based on monocular video human body action sensing

Status: Inactive · Publication: CN105536205A · Tags: Stimulate training initiative; Increase motivation; Gymnastic exercising; Human body; Upper limb training

The invention discloses an upper limb training system based on monocular-video sensing of human body movements. The system comprises a computer and a video camera. The video camera captures the user's body movements and transmits the video data to the computer over USB (Universal Serial Bus); the computer receives and analyzes the video data, tracks the hand's movement trajectory, and recognizes gesture actions from the tracking results. The computer also interacts with a game platform, obtains training evaluation parameters and feeds them back to the user. The system uses a quantitative evaluation scheme, encourages users to train actively, overcomes shortcomings of existing training methods, and can be used in communities and homes. Its virtual reality technology provides a strong sense of immersion, makes the training process more engaging and the user more motivated, and improves the user's safety during training.

Owner:TIANJIN UNIV

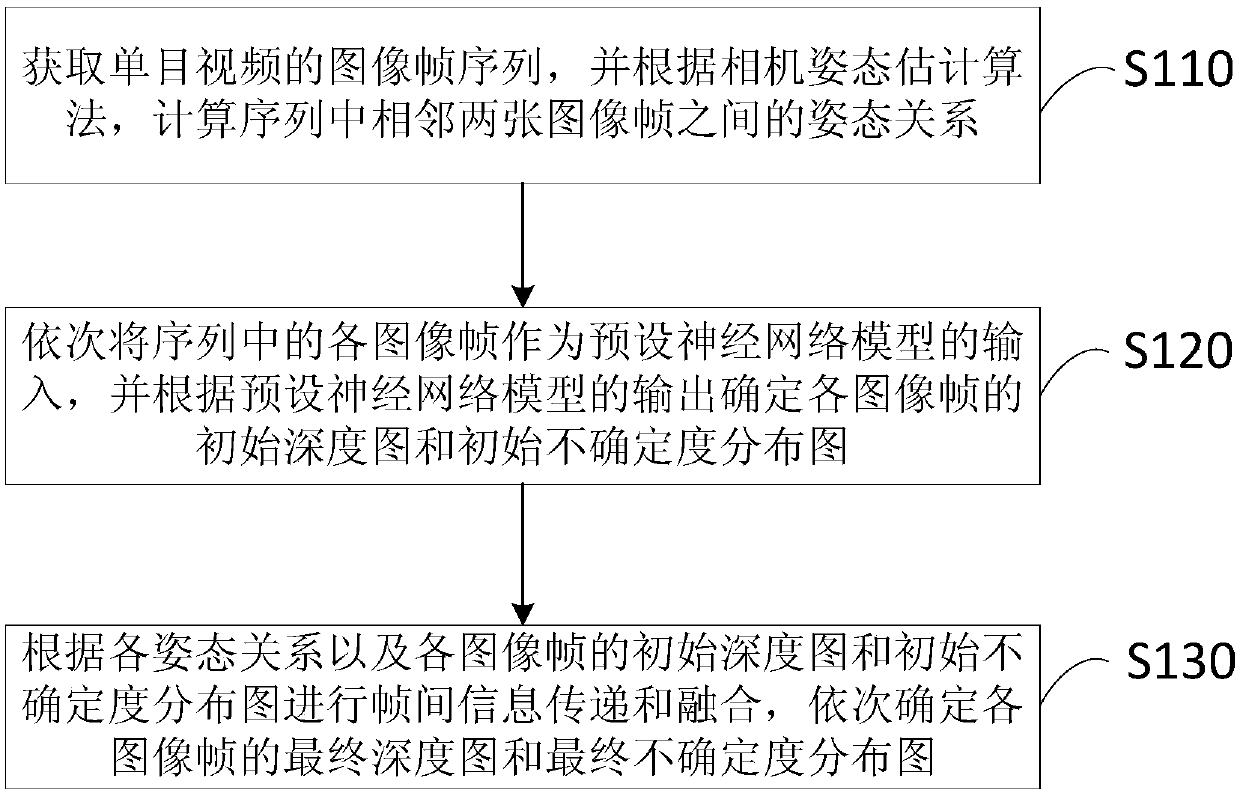

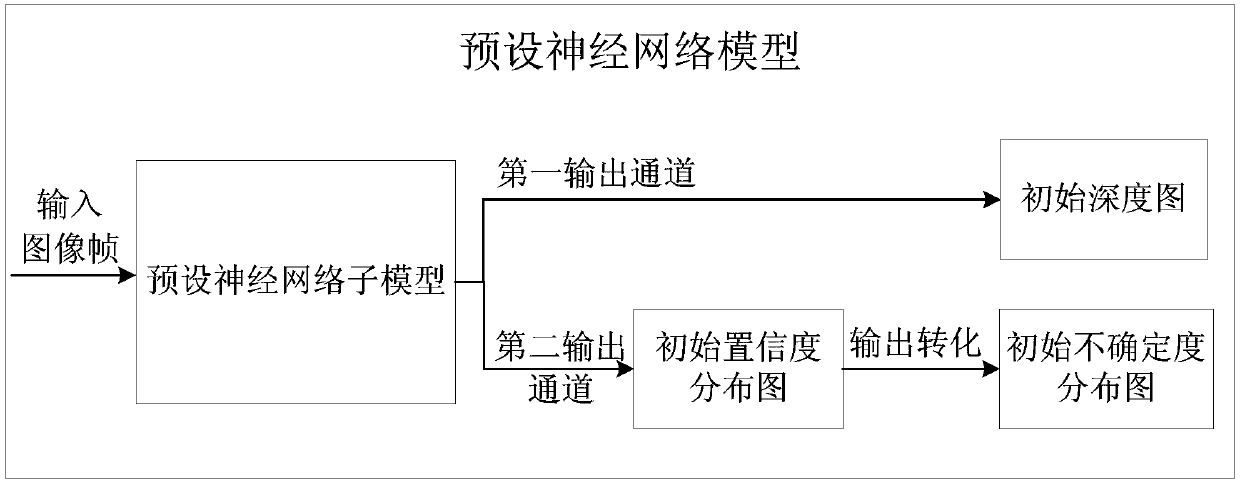

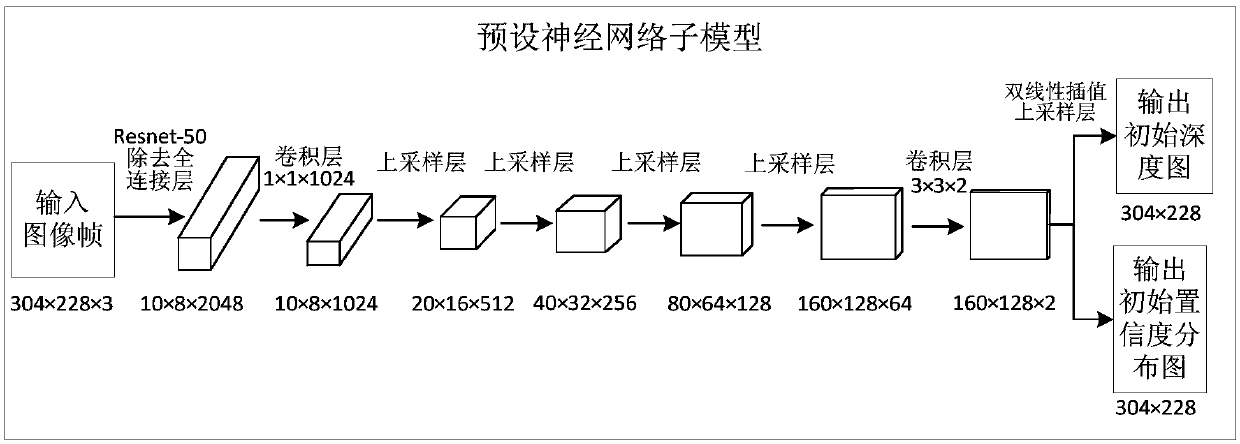

Depth estimation method and apparatus of monocular video, terminal, and storage medium

Status: Active · Publication: CN108765481A · Tags: Achieving depth restoration; Improve forecast accuracy; Image enhancement; Image analysis; Information transmission; Estimation methods

The embodiment of the invention discloses a depth estimation method and apparatus for monocular video, a terminal, and a storage medium. The method includes: obtaining an image frame sequence of the monocular video and calculating the pose relationship between each pair of adjacent image frames with a camera pose estimation algorithm; feeding the image frames in sequence into a preset neural network model and determining an initial depth map and an initial uncertainty distribution map for each image frame from the model's output; and performing inter-frame information propagation and fusion based on the pose relationships and the initial depth and uncertainty maps, thereby determining the final depth map and final uncertainty distribution map of each image frame in sequence. With this technical solution, depth can be recovered for the image frames of the monocular video, the prediction accuracy of the depth maps is improved, and the uncertainty distribution of the depth maps is obtained.

Owner:HISCENE INFORMATION TECH CO LTD
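If each depth estimate is modeled as a Gaussian whose variance is the predicted uncertainty, a standard way to fuse a depth propagated from the previous frame with the current network prediction is inverse-variance weighting, which also shrinks the fused uncertainty. This sketch assumes the previous frame's depth and variance maps have already been warped into the current frame using the estimated pose; the Gaussian model and the function names are assumptions, not the patent's stated fusion rule.

```python
from typing import List, Tuple

Map = List[List[float]]


def fuse_pixel(d_prev: float, var_prev: float,
               d_curr: float, var_curr: float) -> Tuple[float, float]:
    """Inverse-variance (product-of-Gaussians) fusion of two depth estimates."""
    w_prev, w_curr = 1.0 / var_prev, 1.0 / var_curr
    var = 1.0 / (w_prev + w_curr)
    return var * (w_prev * d_prev + w_curr * d_curr), var


def fuse_maps(d_prev: Map, v_prev: Map, d_curr: Map, v_curr: Map) -> Tuple[Map, Map]:
    """Apply fuse_pixel to every pixel; returns (fused depth map, fused variance map)."""
    depth, var = [], []
    for rows in zip(d_prev, v_prev, d_curr, v_curr):
        pairs = [fuse_pixel(*px) for px in zip(*rows)]
        depth.append([d for d, _ in pairs])
        var.append([v for _, v in pairs])
    return depth, var
```

A confident prior (variance 0.25) at 2 m and a less certain prediction (variance 1.0) at 4 m fuse to 2.4 m with variance 0.2, so the more certain estimate dominates and the fused map is never less certain than either input.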

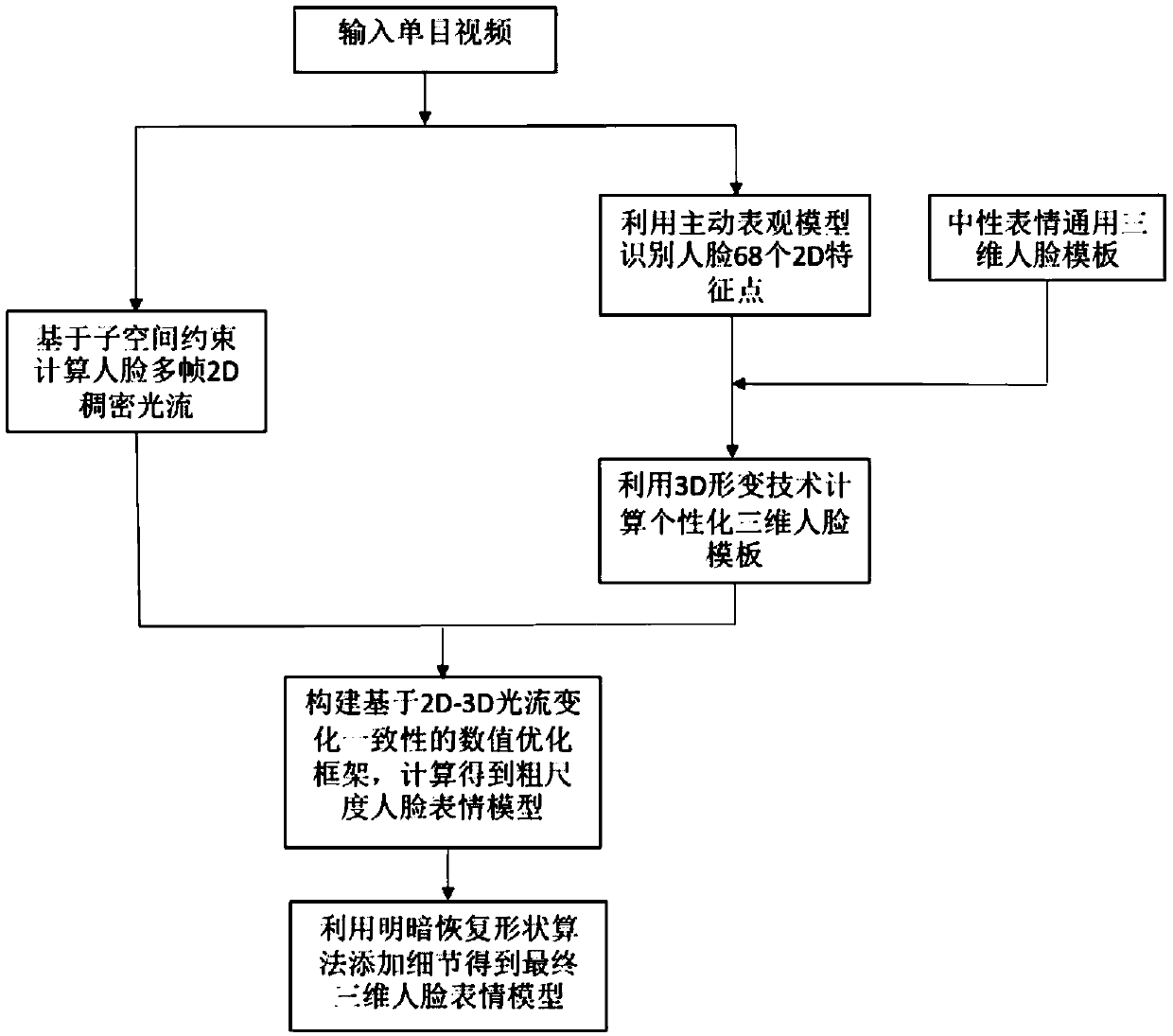

Method for reconstructing a three-dimensional facial expression model based on a monocular video

Status: Active · Publication: CN109584353A · Tags: Solve the large amount of calculation; Resolution range; Image enhancement; Details involving processing steps; Pattern recognition; Personalization

The invention discloses a method for reconstructing a three-dimensional facial expression model from monocular video without additional multi-angle capture. A generic 3D face model is deformed, driven directly by a neutral-expression frame in the input monocular video, to generate a personalized three-dimensional face template. The deformations of the three-dimensional facial expressions in different frames are expressed as changes of the personalized template in the form of a 3D vertex flow in three-dimensional space, and the coarse-scale geometric model of the facial expressions is solved by requiring consistency with the 2D optical flow. Using dense optical flow improves the shape accuracy of the coarse-scale reconstruction while relaxing the capture requirements on the input video; details are then added to the recovered coarse-scale face model with a shape-from-shading technique to recover fine-scale facial geometry, yielding a high-fidelity three-dimensional face geometric model.

Owner:BEIHANG UNIV

N-view synthesis from monocular video of certain broadcast and stored mass media content

Status: Inactive · Publication: US20020167512A1 · Tags: Improve approximation; Suitable display; Image enhancement; Image analysis; View synthesis; Computer vision

A monocular input image is transformed to give it an enhanced three dimensional appearance by creating at least two output images. Foreground and background objects are segmented in the input image and transformed differently from each other, so that the foreground objects appear to stand out from the background. Given a sequence of input images, the foreground objects will appear to move differently from the background objects in the output images.

Owner:FUNAI ELECTRIC CO LTD

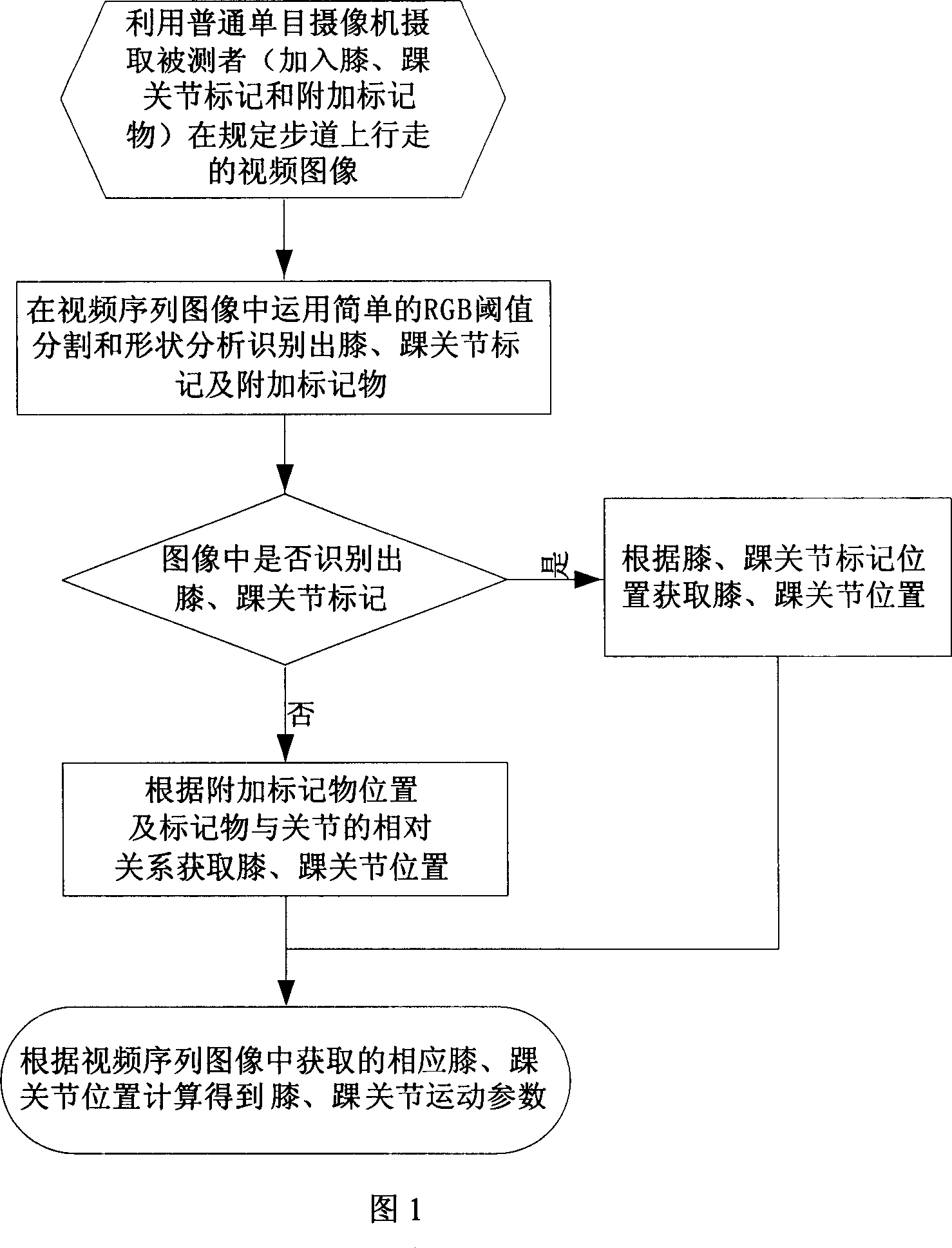

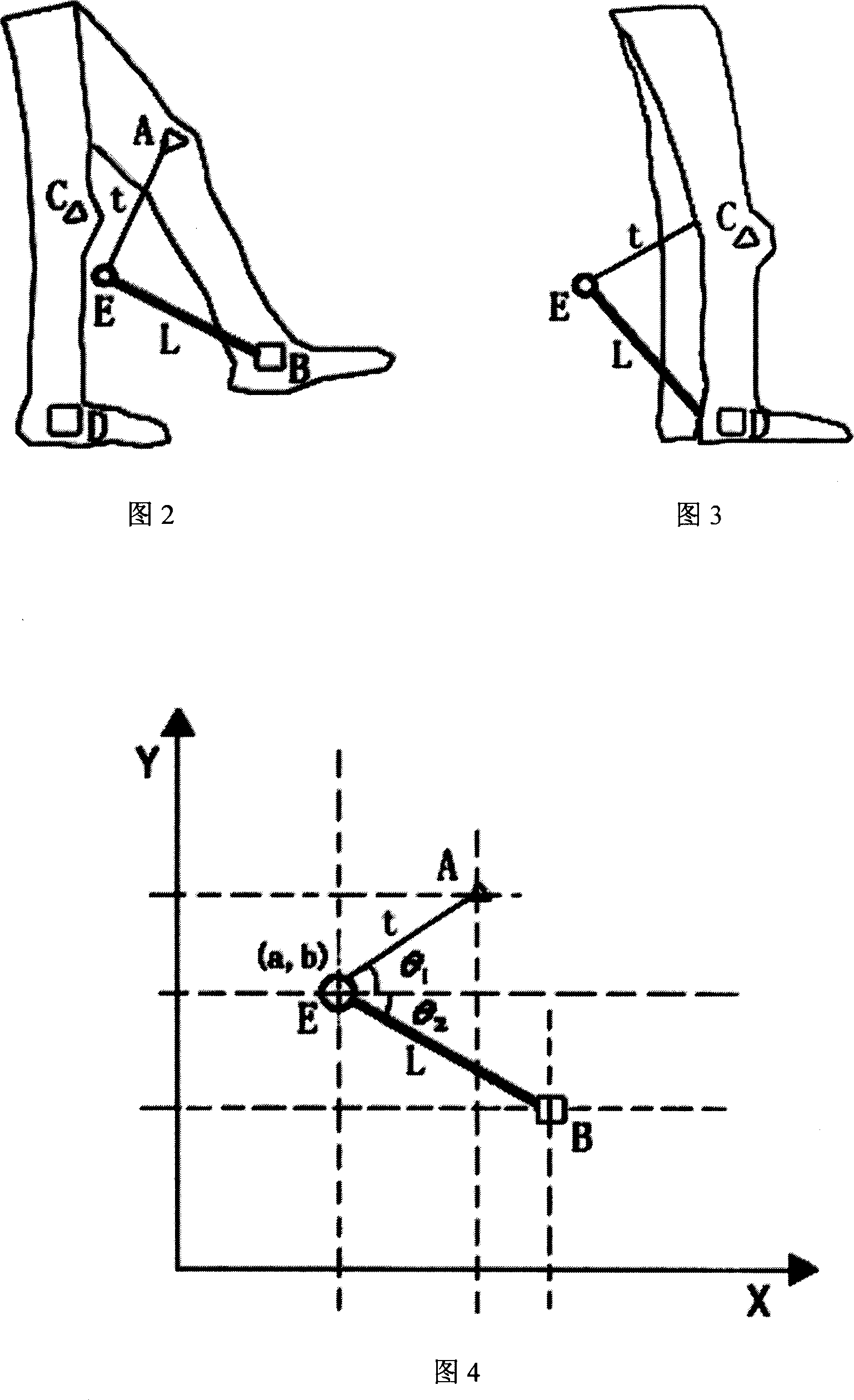

Computer aided gait analysis method based on monocular video

Status: Inactive · Publication: CN1969748A · Tags: Solve the problem of discontinuous data acquisition; Overcoming inaccurate motion tracking; Image analysis; Diagnostic recording/measuring; Gait analysis; Motion parameter

The invention discloses a computer-aided gait analysis method based on monocular video, characterized as follows: auxiliary markers are attached at the joints to handle self-occlusion during movement; a monocular camera captures real-time walking images of the subject; the images are processed and the joint marker points or auxiliary markers are recognized; if the joint markers are recognized, the subject's motion state is obtained directly from the joints; otherwise, it is obtained from the recognized auxiliary markers and their known positions relative to the subject's joints; and a series of motion parameters is calculated from the subject's motion state.

Owner:HUAZHONG UNIV OF SCI & TECH
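Among the motion parameters that can be computed once joint positions are known, a common one is the joint angle in the image plane. A minimal sketch, assuming 2D pixel coordinates for hip, knee and ankle markers seen from the side, computes the knee angle from the two limb segments; the marker names and the choice of parameter are illustrative assumptions, not the patent's parameter set.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def joint_angle_deg(proximal: Point, joint: Point, distal: Point) -> float:
    """Included angle (degrees) at `joint` between the segments to `proximal` and `distal`."""
    ax, ay = proximal[0] - joint[0], proximal[1] - joint[1]
    bx, by = distal[0] - joint[0], distal[1] - joint[1]
    # atan2 of |cross| and dot is numerically stabler than acos of a normalized dot.
    return math.degrees(math.atan2(abs(ax * by - ay * bx), ax * bx + ay * by))


def knee_flexion_deg(hip: Point, knee: Point, ankle: Point) -> float:
    """Flexion = 180 degrees minus the included knee angle (0 for a straight leg)."""
    return 180.0 - joint_angle_deg(hip, knee, ankle)
```

Evaluating `knee_flexion_deg` frame by frame gives a flexion curve over the gait cycle, from which further parameters such as peak flexion can be read off.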

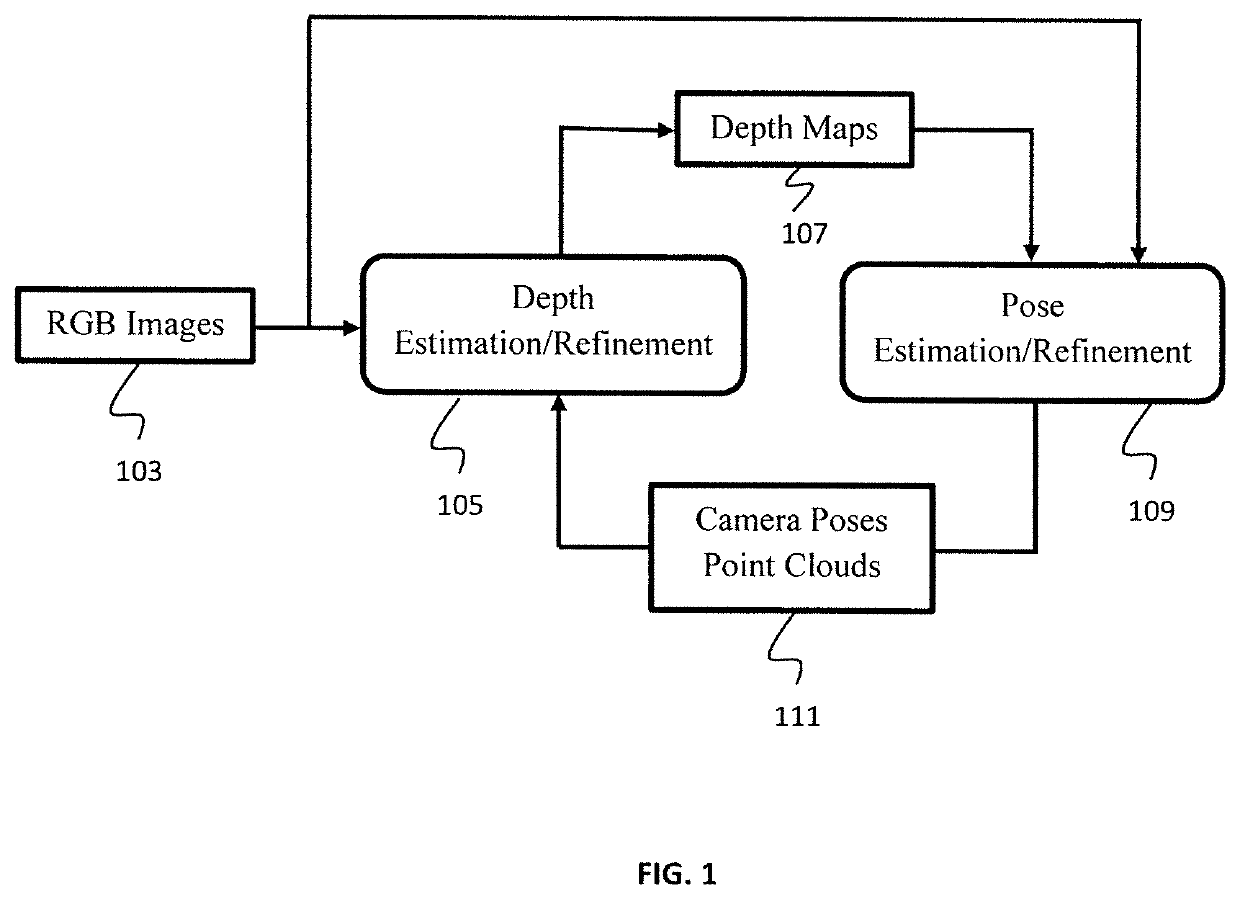

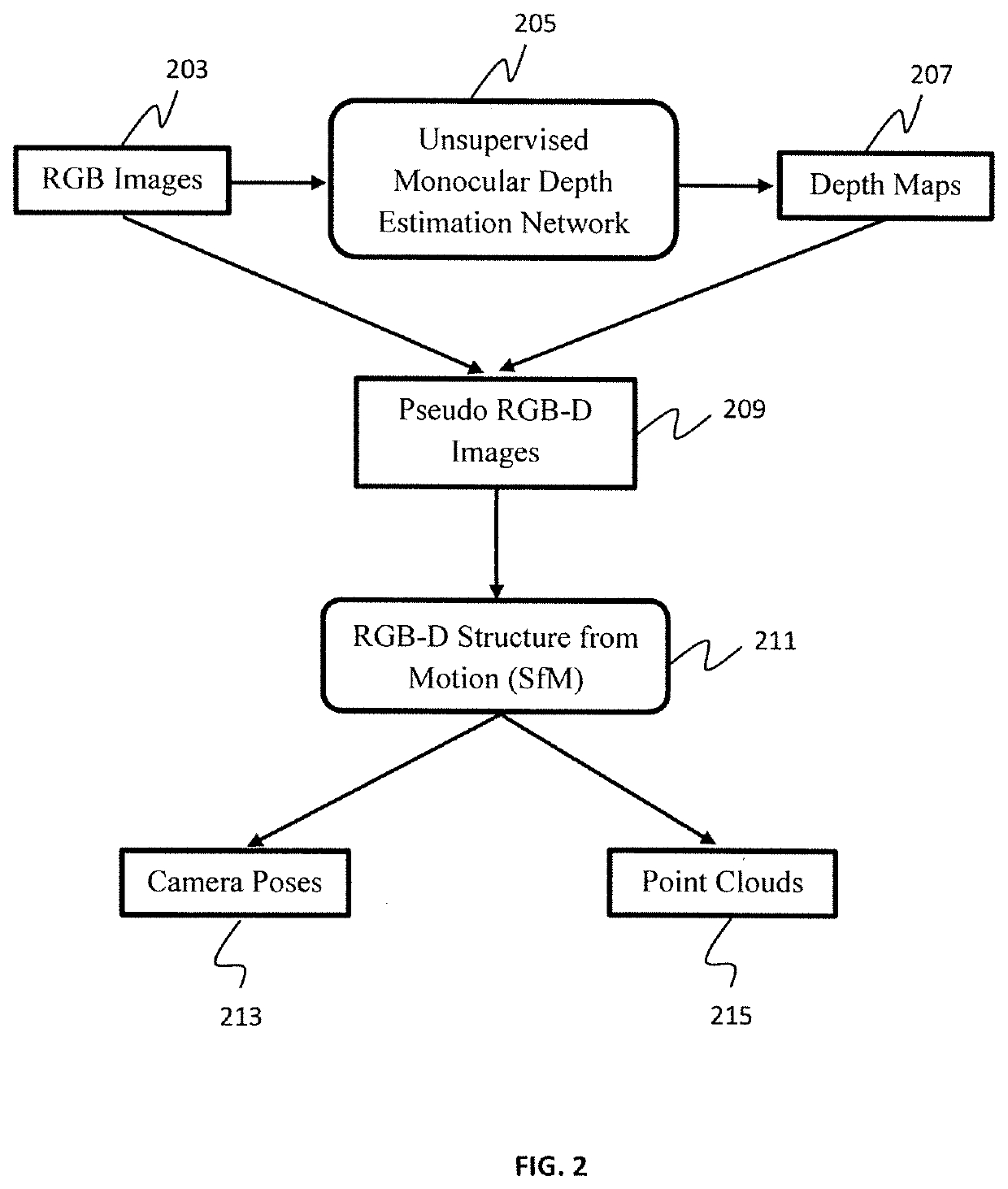

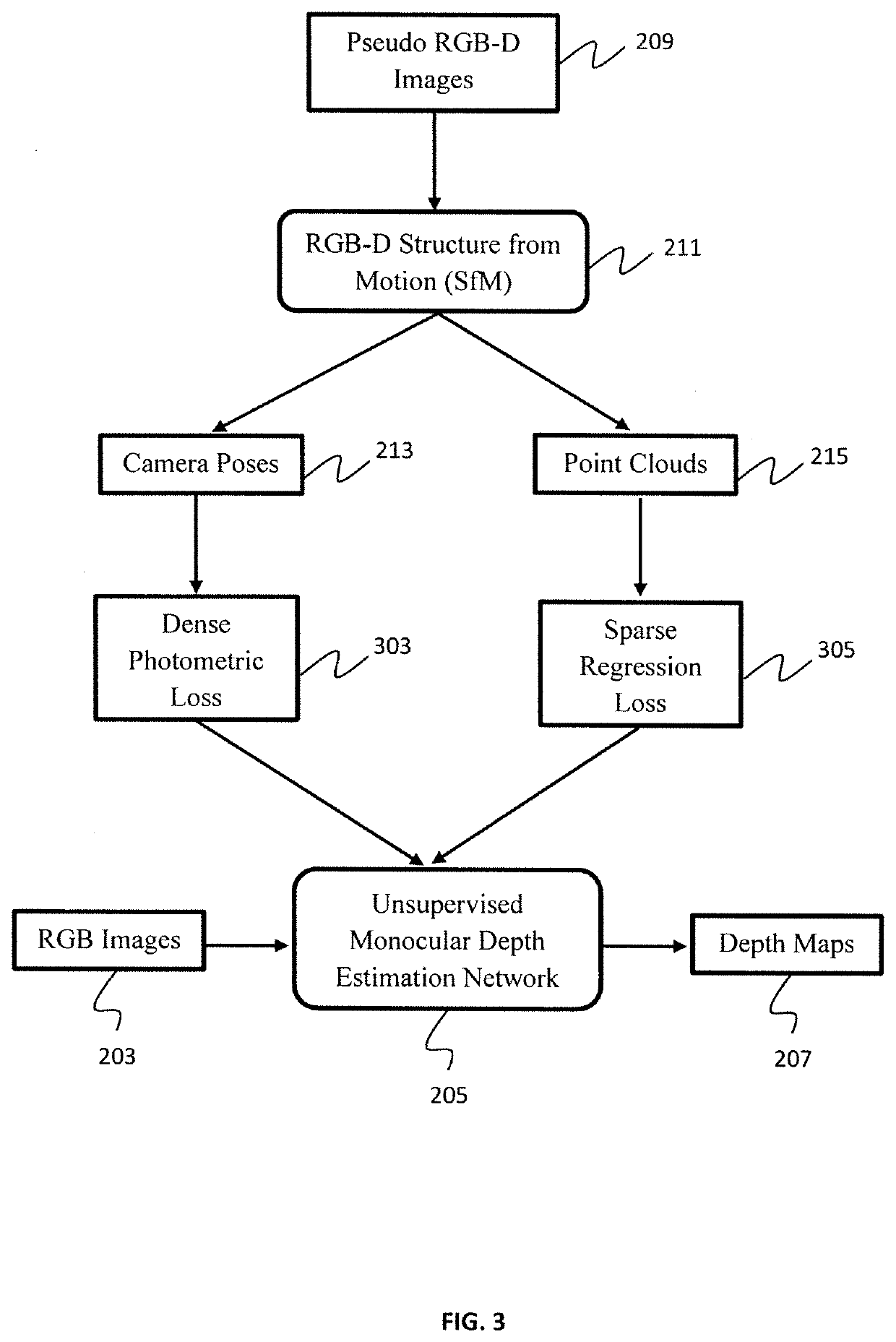

Pseudo RGB-D for self-improving monocular SLAM and depth prediction

A method for improving geometry-based monocular structure from motion (SfM) by exploiting depth maps predicted by convolutional neural networks (CNNs) is presented. The method includes capturing a sequence of RGB images from an unlabeled monocular video stream obtained by a monocular camera; feeding the RGB images into a depth estimation/refinement module, which outputs depth maps; feeding the depth maps and the RGB images, which together form pseudo RGB-D images, into a pose estimation/refinement module, which outputs camera poses and point clouds; and constructing a 3D map of the surrounding environment that is displayed on a visualization device.

Owner:NEC CORP
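A pseudo RGB-D frame pairs each RGB image with a CNN-predicted depth map, so each pixel can be lifted to a 3D point just as with a real RGB-D sensor. The sketch below shows that back-projection under a pinhole model; the intrinsics fx, fy, cx, cy and the function name are assumptions for illustration, and the pose estimation and mapping stages of the method are not shown.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def backproject(depth: List[List[float]], fx: float, fy: float,
                cx: float, cy: float) -> List[Point3]:
    """Lift every pixel (u, v) with depth Z > 0 to camera coordinates (X, Y, Z)."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:  # skip pixels without a valid depth
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points
```

Attaching the RGB value of each pixel to its point gives a colored cloud in the same form an RGB-D SLAM front end would consume.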

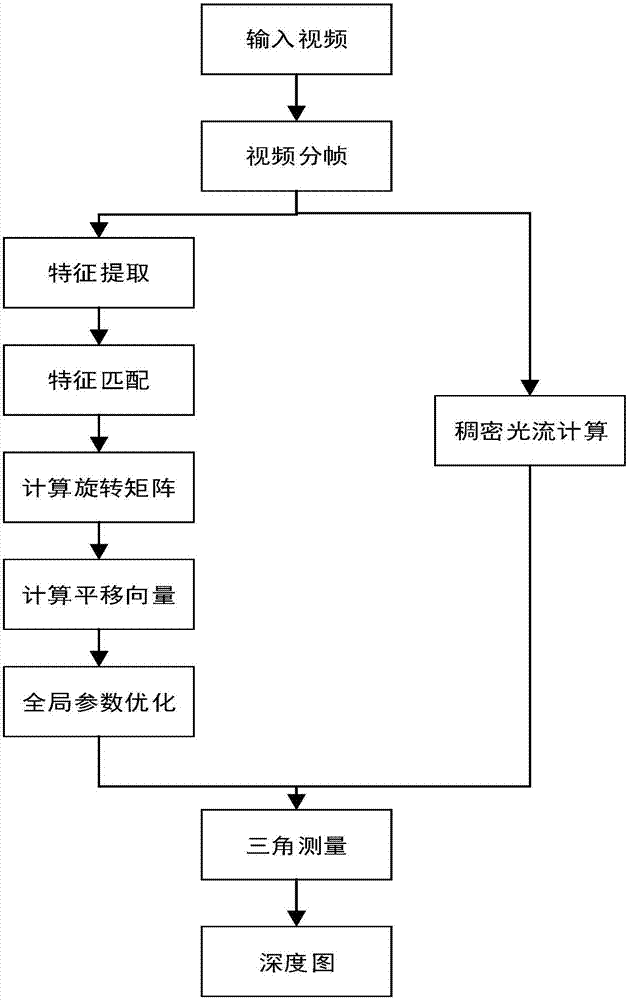

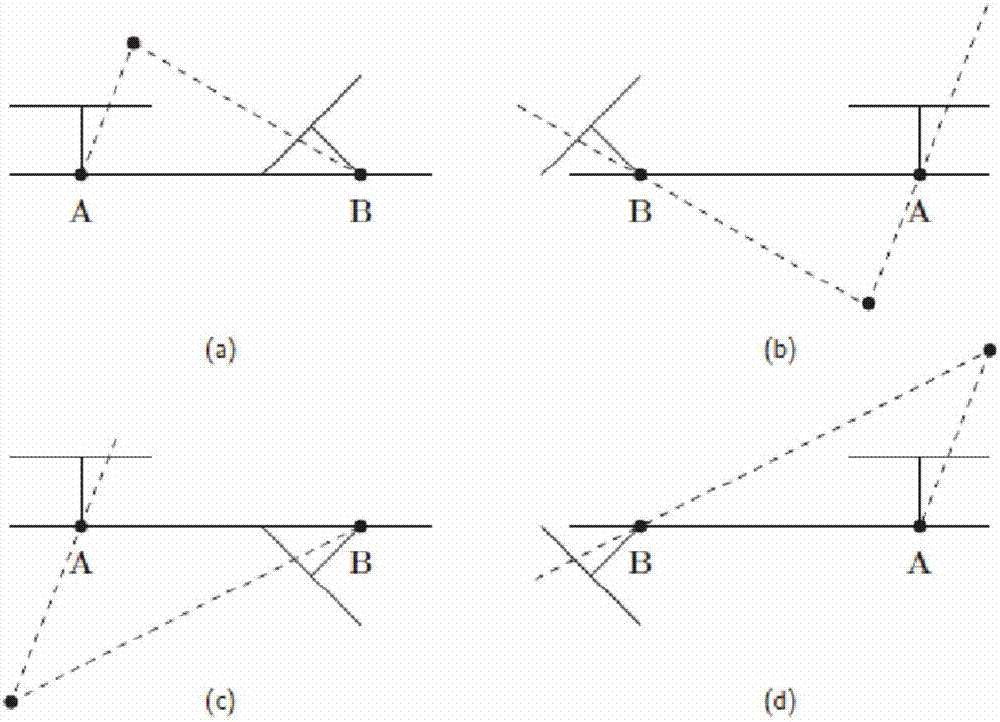

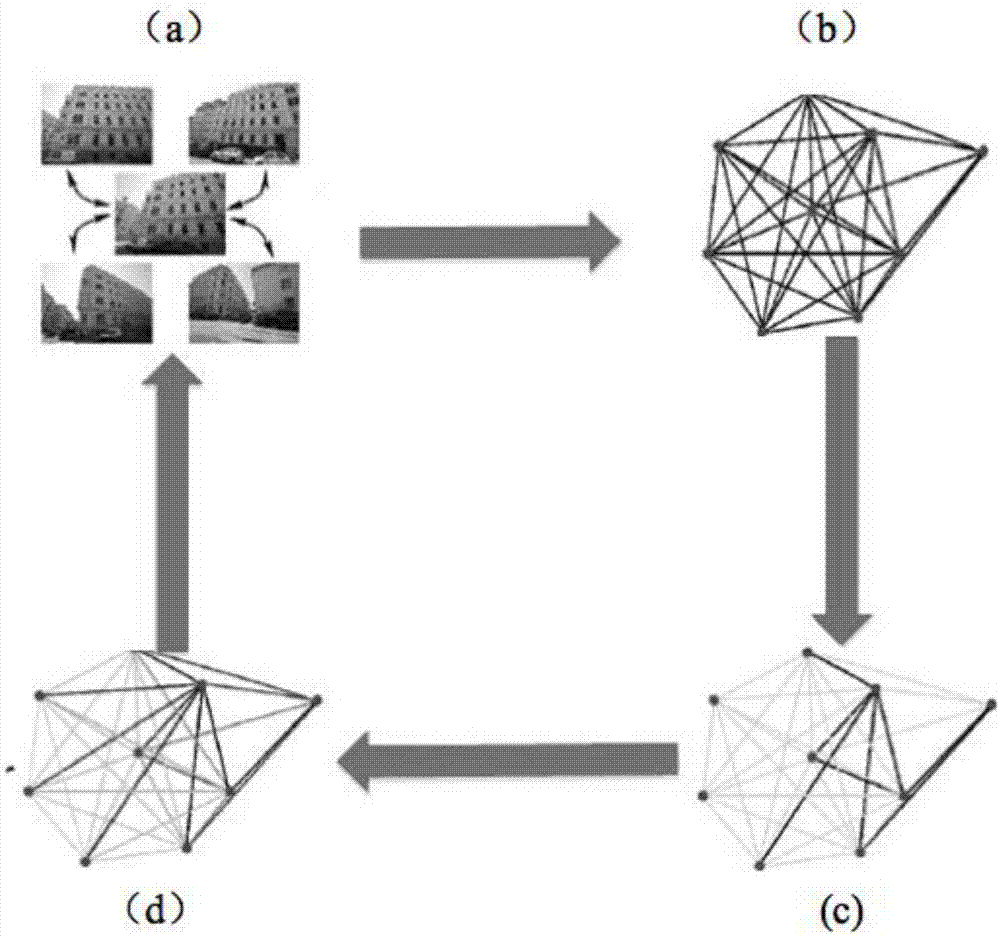

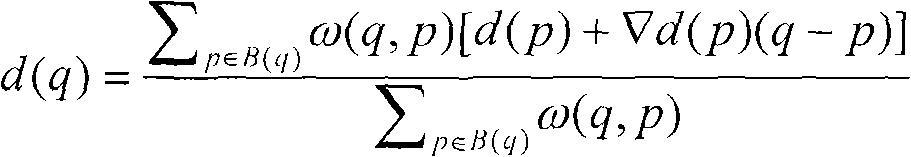

Method for calculating monocular video depth map

Status: Active · Publication: CN107481279A · Tags: Guaranteed accuracy; Improve efficiency; Image enhancement; Image analysis; Estimation methods; Optical flow

The invention provides a method for calculating a depth map from monocular video. The method comprises: decomposing the video to be recovered into individual frames; extracting image feature points from each frame; matching the feature points to form feature point trajectories; calculating a global rotation matrix and translation vector; optimizing the camera parameters; calculating the dense optical flow of a selected frame; and calculating the depth values of the selected frame to obtain its depth map. The method uses a physically based structure-from-motion (SfM) depth estimation approach with dense optical flow for matching. It needs no training samples, does not rely on optimization schemes such as segmentation or plane fitting, and has a small computational load. It also solves the prior-art problem that, when going from sparse to dense reconstruction, depth values cannot be obtained for all pixels, especially in texture-less regions; it thus improves computational efficiency while ensuring the accuracy of the depth map.

Owner:HUAZHONG UNIV OF SCI & TECH
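For the last step, once the global rotation has been compensated and the camera translation T = (Tx, Ty, Tz) between the two frames is known from the structure-from-motion stage, the optical flow of a static point is, to first order, (u, v) = ((x·Tz − f·Tx)/Z, (y·Tz − f·Ty)/Z), with (x, y) the pixel position relative to the principal point. Depth then follows from a least-squares fit over both flow components. This is a small-motion sketch under those assumptions (with depth known only up to the SfM scale), not the patent's exact formulation; the function name is hypothetical.

```python
from typing import Optional, Tuple


def depth_from_flow(x: float, y: float, flow_u: float, flow_v: float,
                    f: float, t: Tuple[float, float, float]) -> Optional[float]:
    """Depth Z of a static pixel from rotation-compensated flow and camera translation t.

    Small-motion model: flow = a / Z with a = (x*Tz - f*Tx, y*Tz - f*Ty), where
    (x, y) is measured from the principal point. Least squares over both flow
    components gives 1/Z = (a·flow) / (a·a).
    """
    tx, ty, tz = t
    ax, ay = x * tz - f * tx, y * tz - f * ty
    num = ax * flow_u + ay * flow_v
    if num <= 1e-12:
        return None  # no usable parallax, or flow inconsistent with the motion
    return (ax * ax + ay * ay) / num
```

Pixels near the focus of expansion (where a ≈ 0) carry no depth information under this model, which is one reason dense methods need a fill-in strategy for such regions.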

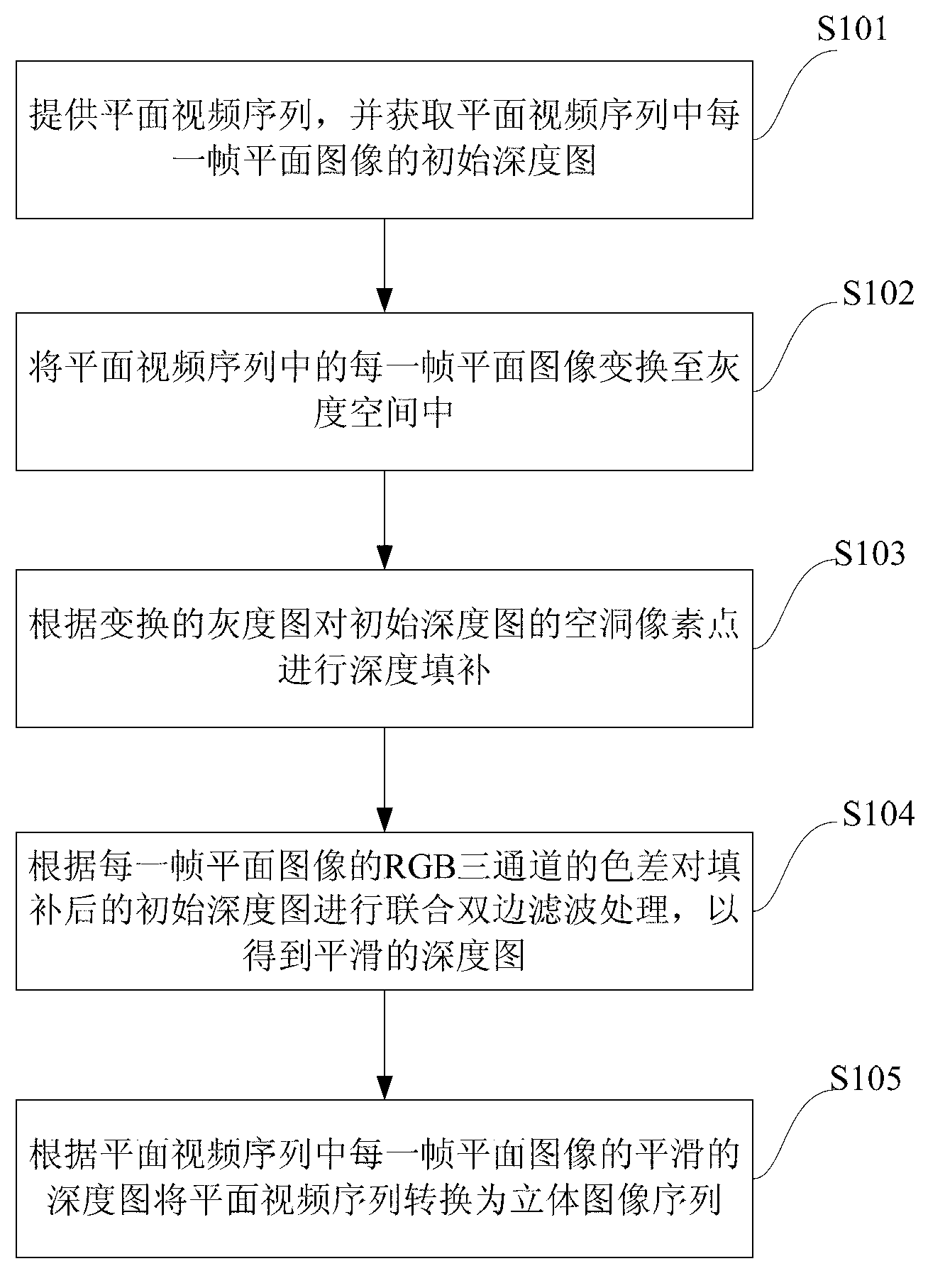

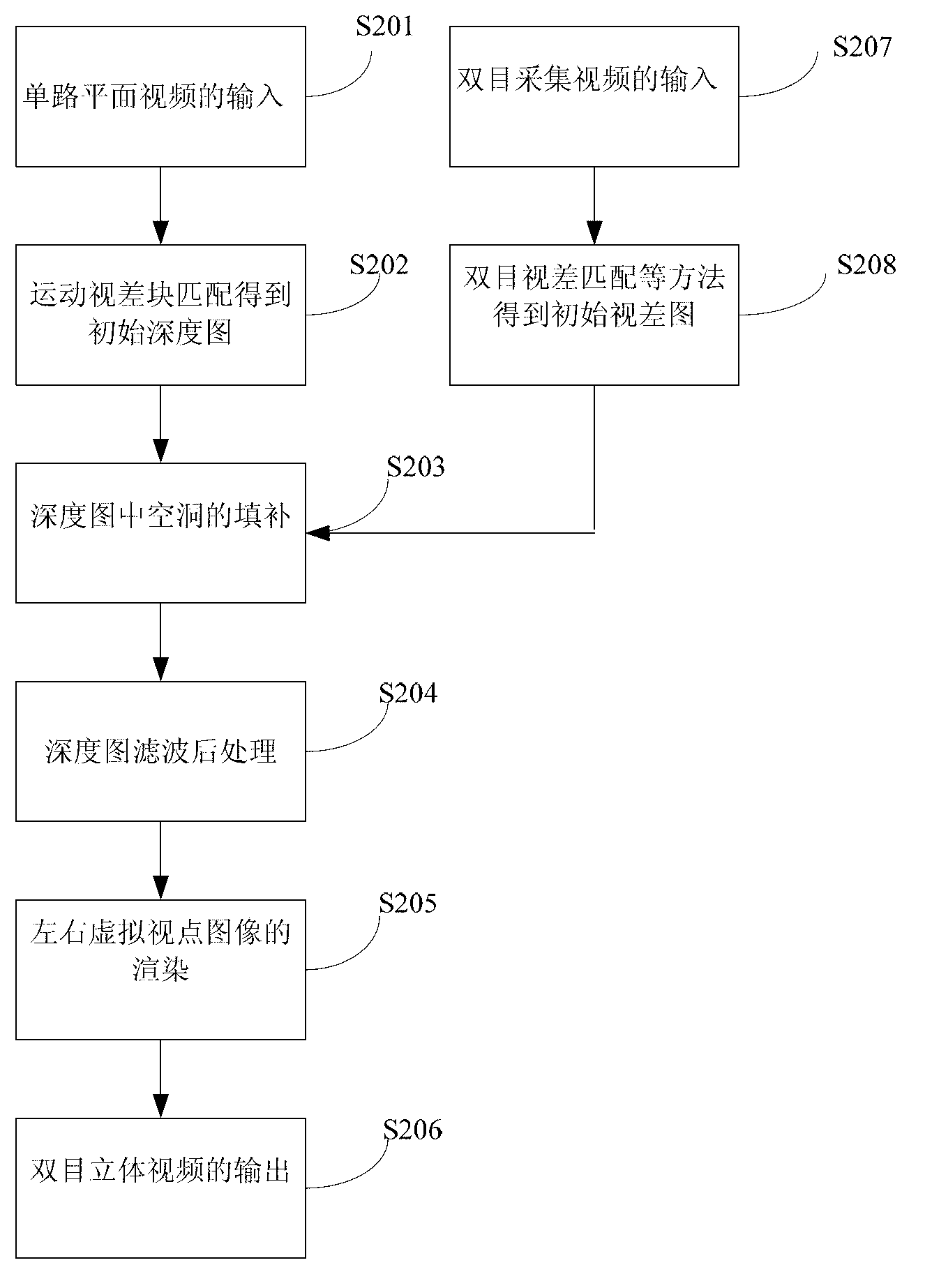

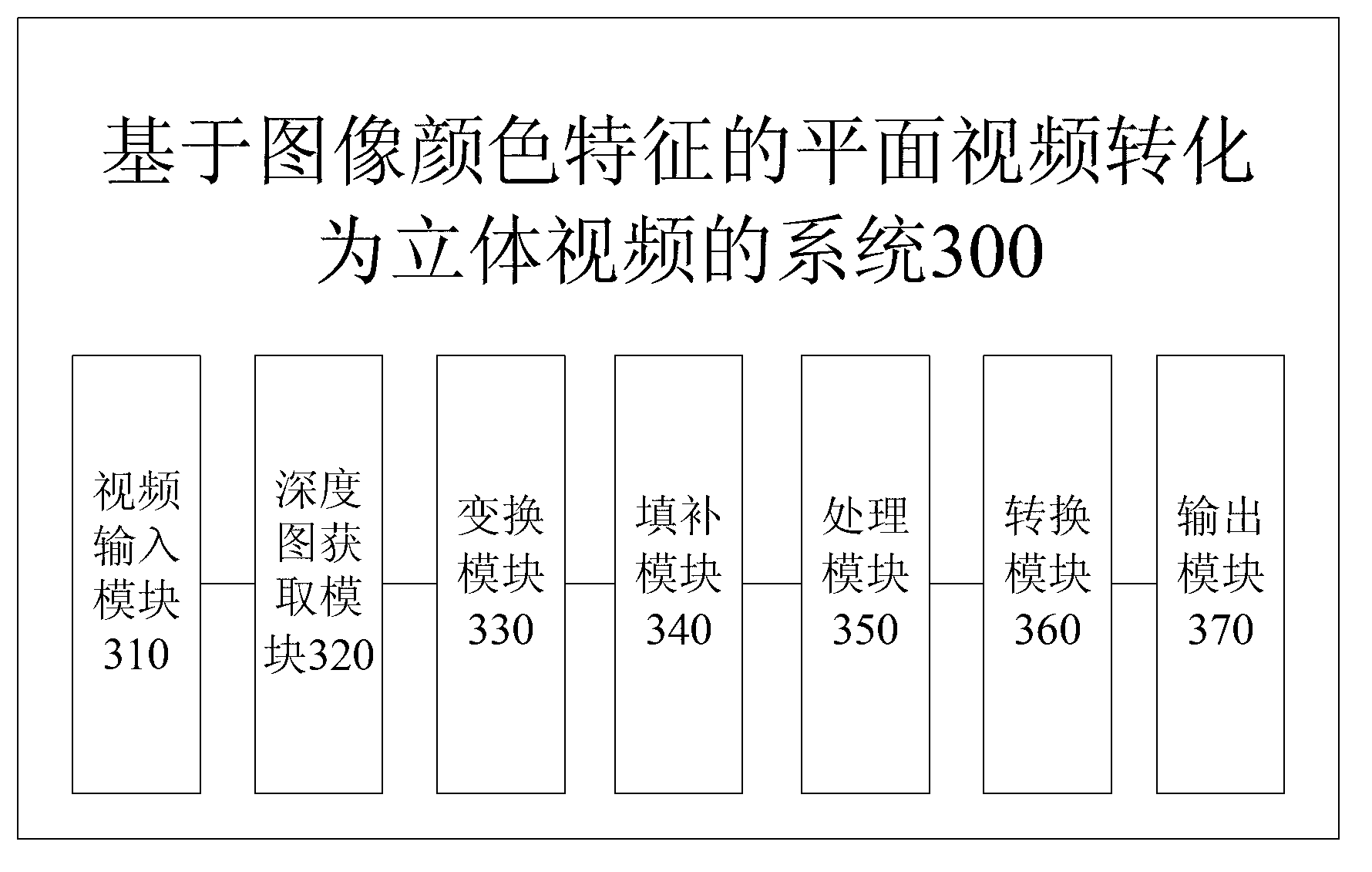

Method and system of converting monocular video into stereoscopic video

Status: Active · Publication: CN103248909A · Tags: Easy and fast handling; Stereoscopic display effect is good; Stereoscopic systems; Stereoscopic video; Gray level

The invention provides a method of converting monocular video into stereoscopic video based on image color features, comprising the following steps: providing a monocular video sequence and obtaining an initial depth map for each 2D frame in the sequence; converting each 2D frame to grayscale; filling the hole pixels of the initial depth maps according to the converted grayscale images; applying joint bilateral filtering to the filled depth maps, guided by the color differences across the R, G and B channels of each frame, to obtain smooth depth maps; and converting the monocular video sequence into a stereoscopic image sequence according to the smooth depth map of each frame. According to embodiments of the invention, monocular video is converted into stereoscopic video fully automatically, without manual participation; the processing is simple and fast; and the overall stereoscopic display effect is good. The invention also provides a system for converting monocular video into stereoscopic video based on image color features.

Owner:TSINGHUA UNIV
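The smoothing step, joint bilateral filtering of the depth map guided by the RGB frame, can be sketched directly: each output depth is a weighted average of its neighbours, with weights that fall off with spatial distance and with RGB color difference in the guide image, so depth is smoothed inside regions of similar color but not across color edges. This pure-Python sketch uses Gaussian kernels with hypothetical radius and sigma parameters and Euclidean RGB distance; the abstract does not specify the exact weighting.

```python
import math
from typing import List, Tuple

RGB = Tuple[int, int, int]


def joint_bilateral(depth: List[List[float]], guide: List[List[RGB]],
                    radius: int = 2, sigma_s: float = 1.5,
                    sigma_r: float = 20.0) -> List[List[float]]:
    """Smooth `depth` with spatial and RGB-range Gaussian weights taken from `guide`."""
    h, w = len(depth), len(depth[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = norm = 0.0
            for di in range(-radius, radius + 1):
                for dj in range(-radius, radius + 1):
                    y, x = i + di, j + dj
                    if not (0 <= y < h and 0 <= x < w):
                        continue
                    # Squared RGB distance between the centre pixel and the neighbour.
                    color_d2 = sum((a - b) ** 2 for a, b in zip(guide[i][j], guide[y][x]))
                    wgt = math.exp(-(di * di + dj * dj) / (2 * sigma_s ** 2)
                                   - color_d2 / (2 * sigma_r ** 2))
                    acc += wgt * depth[y][x]
                    norm += wgt
            out[i][j] = acc / norm  # the centre pixel always contributes weight 1
    return out
```

Because the range weight comes from the color frame rather than from the depth map itself, a depth discontinuity that coincides with a color edge survives the filtering, while isolated depth noise inside a uniformly colored region is averaged away.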

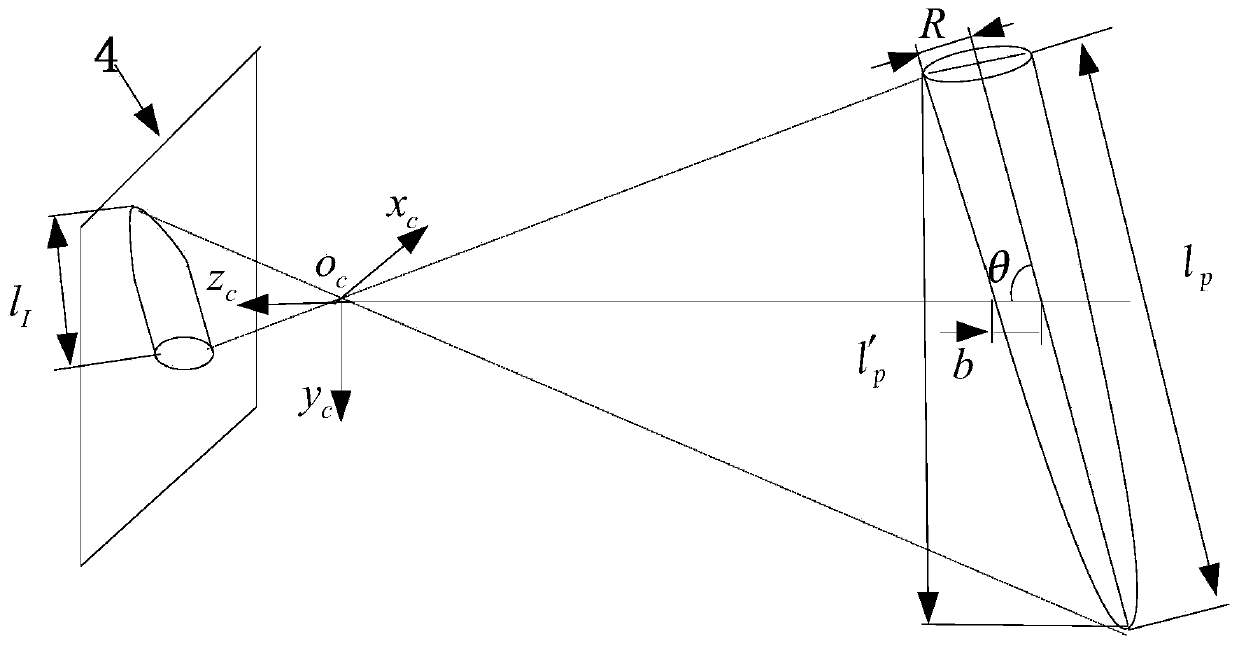

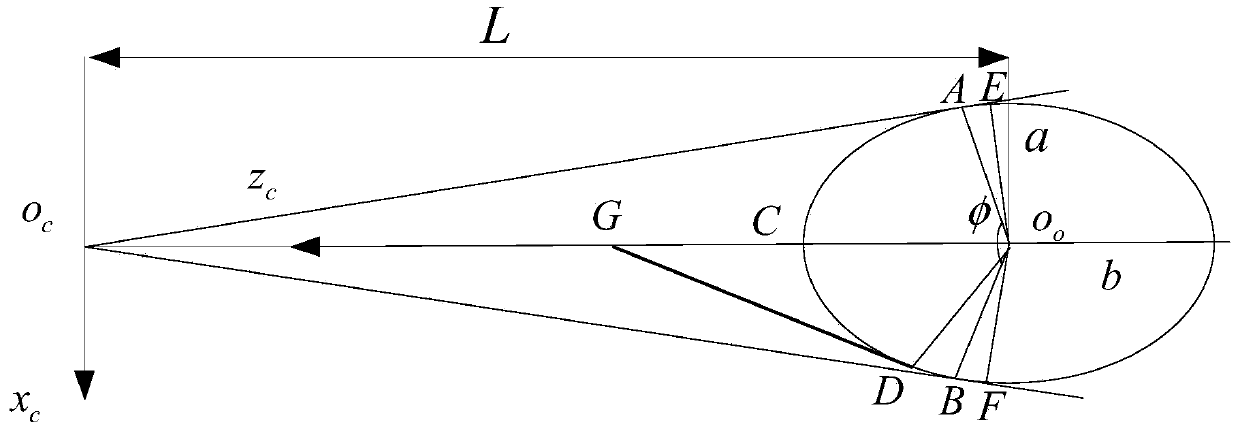

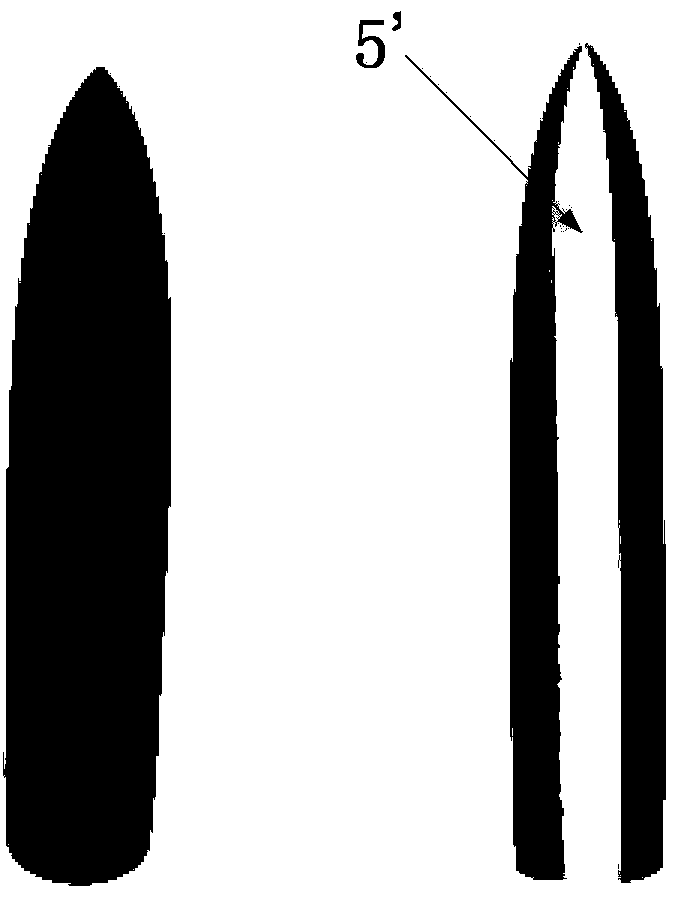

Monocular video measurement method for external store separating locus wind tunnel test

Status: Active · Publication: CN105444982A · Tags: Monocular video measurement implementation; Improve track measurement accuracy; Aerodynamic testing; Photogrammetry/videogrammetry; Pattern recognition; Stereo matching

The invention relates to the technical field of external store separation wind tunnel tests, and in particular to a monocular video measurement method for external store separation trajectory wind tunnel tests. Cyclic coding of the marker points overcomes the difficulty of recognizing and matching markers while the external store wind tunnel model rotates. Using the principle of relative motion, the method takes the wind tunnel test section and the external store model in turn as the reference object, solves for the camera's position and attitude relative to each, and from these obtains the movement trajectory of the external store model. This achieves monocular video measurement of the external store separation trajectory, avoids the stereo matching difficulty of binocular video measurement, and offers greater flexibility and practicality.

Owner:INST OF HIGH SPEED AERODYNAMICS OF CHINA AERODYNAMICS RES & DEV CENT
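The relative-motion principle amounts to composing rigid transforms: if the camera pose is solved once against markers on the test section and once against markers on the store model, the store's pose in the test-section frame follows without any stereo matching. This sketch assumes 4x4 homogeneous matrices in which T_a_b maps coordinates in frame b to frame a; the marker-based pose solving for each frame is not shown, and the names are hypothetical.

```python
from typing import List

Mat = List[List[float]]


def matmul(a: Mat, b: Mat) -> Mat:
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(t: Mat) -> Mat:
    """Inverse of a rigid transform [R p; 0 1], which is [R^T -R^T p; 0 1]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    q = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [q[0]], rt[1] + [q[1]], rt[2] + [q[2]], [0.0, 0.0, 0.0, 1.0]]


def store_in_tunnel(t_cam_tunnel: Mat, t_cam_store: Mat) -> Mat:
    """Store pose in the test-section frame: T_tunnel_store = inv(T_cam_tunnel) @ T_cam_store."""
    return matmul(rigid_inverse(t_cam_tunnel), t_cam_store)


def translation(x: float, y: float, z: float) -> Mat:
    """Pure translation as a 4x4 homogeneous matrix."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]
```

Applying `store_in_tunnel` to every video frame and collecting the translation column gives the separation trajectory; because both poses come from the same camera, any motion of the camera itself cancels out.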

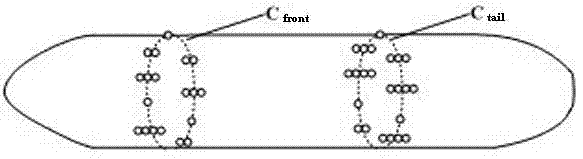

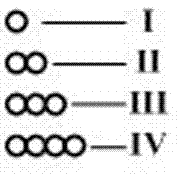

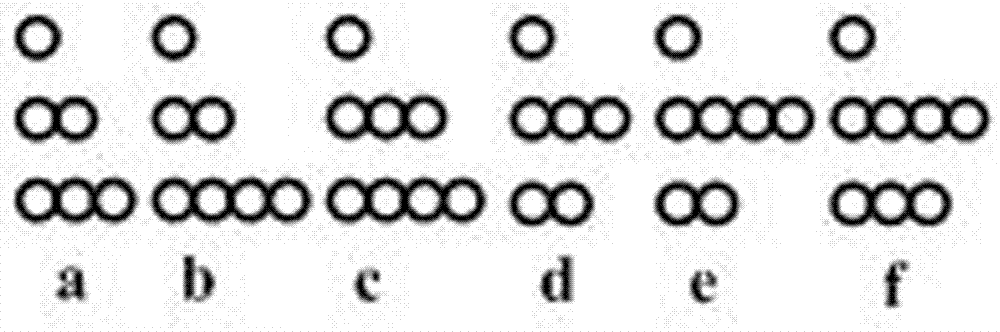

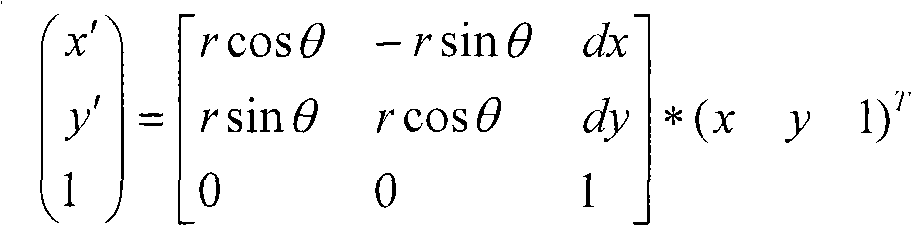

Shot monocular video pose measurement method and target pattern

Status: Active · Publication: CN103512559A · Tags: Overcome the inability to adapt to the surface structure of projectiles; Measurement errors do not accumulate; Photogrammetry/videogrammetry; Using reradiation; Band shape; System transformation

The invention relates to a target pattern for a projectile and a monocular video pose measurement method that uses the pattern. The target pattern is a band around the outer circumferential surface of the projectile, divided equally along the projectile axis into 6 sub-regions of alternating colors; each sub-region contains a code region, and its 4 vertices serve as feature points. During pose measurement, the projectile must be within the camera's field of view. Each sub-region is assigned a unique triad code; sub-regions are identified by their codes, and feature points are identified by their positions within the sub-regions. To compute the projectile pose, the image coordinates of the feature points are first determined in the camera image; then, using the coordinates of the corresponding feature points in the projectile coordinate system, a system of equations is set up through coordinate-system transformation and solved for the projectile pose. The method is simple and widely applicable.

Owner:BEIJING INSTITUTE OF TECHNOLOGY +1

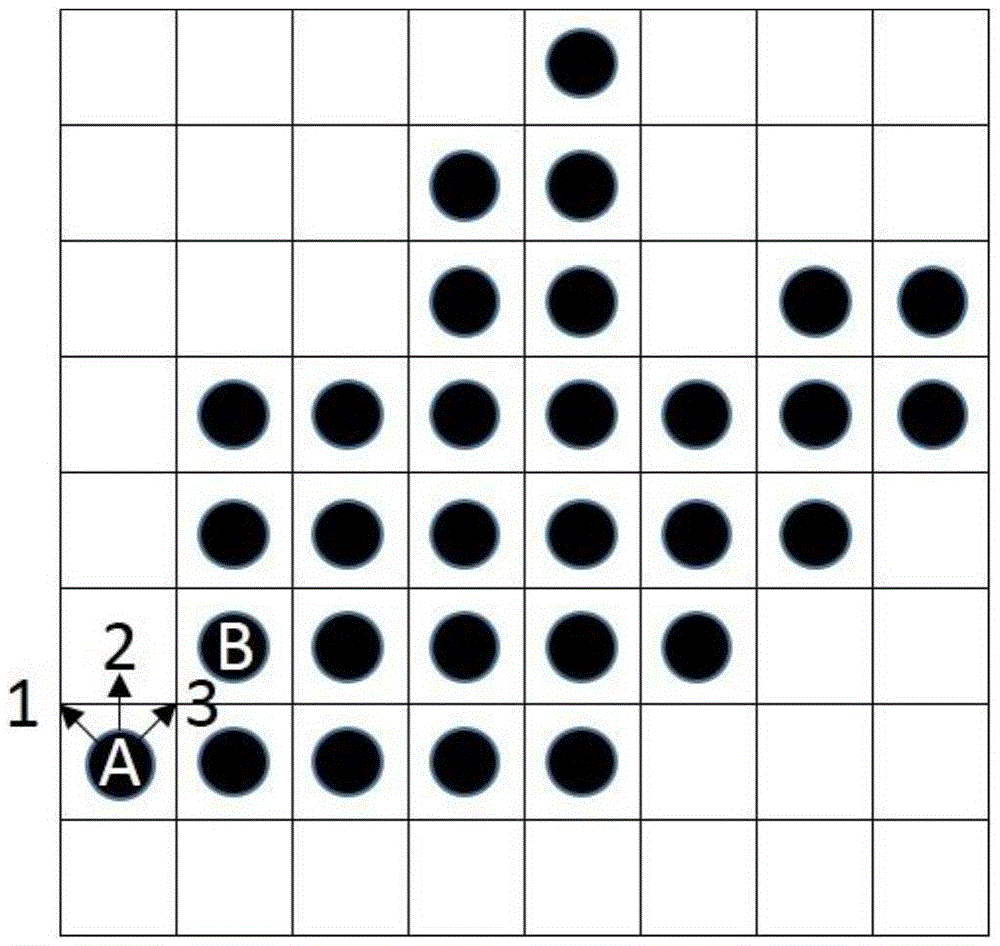

Three-dimensional game interaction system based on monocular video

Inactive | CN102622509A | Implement interactive functions | Input/output for user-computer interaction | Graph reading | Interaction systems | Computer module

The invention relates to the technical field of video-based motion capture and discloses a three-dimensional game interaction system based on monocular video. The system comprises a motion capture module and a three-dimensional engine module. The motion capture module processes the video input from a camera and outputs motion data for a three-dimensional model; the main game system extracts this motion data and uses it to drive the player model; and the three-dimensional engine module, the core module of the system, handles 3D rendering and provides an interface to the Ogre engine. Compared with traditional methods, the system has the following advantages: the key interaction function is implemented in software, ordinary computers are already equipped with cameras, and the equipment is simple and readily available. Players can therefore enjoy interactive electronic games without buying special equipment.

Owner:TIANJIN UNIV

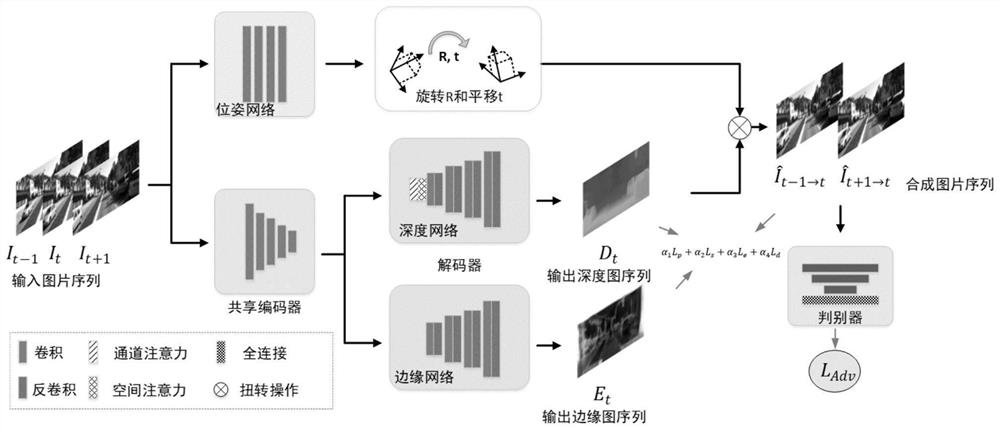

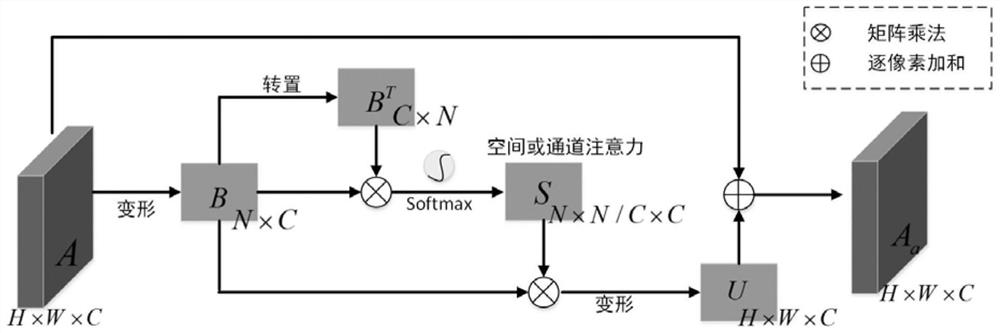

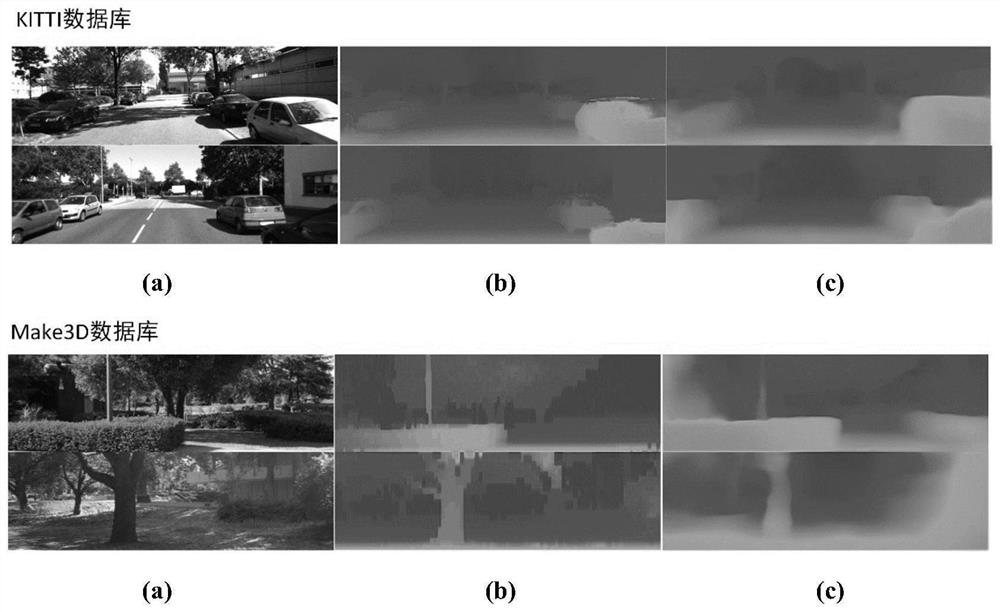

Monocular unsupervised depth estimation method based on context attention mechanism

Active | CN111739078A | Run fast | Easy to build | Image enhancement | Image analysis | Imaging processing | Image manipulation

The invention discloses a monocular unsupervised depth estimation method based on a context attention mechanism, in the field of image processing and computer vision. The method combines a hybrid geometry-enhanced loss function and a context attention mechanism with a depth estimation sub-network, an edge sub-network and a camera pose estimation sub-network, all based on convolutional neural networks, to obtain a high-quality depth map. The system is easy to construct: a convolutional neural network produces the corresponding high-quality depth map from monocular video end to end, and the program framework is easy to implement. Because depth is solved with an unsupervised method, the difficulty of obtaining ground-truth data for supervised methods is avoided, and the algorithm runs fast. Because depth is solved from monocular video, that is, a monocular image sequence, the method also avoids the difficulty of obtaining stereo image pairs that arises when monocular image depth is solved using stereo pairs.
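
Unsupervised monocular depth methods of this kind are commonly trained by view synthesis: a pixel of the target frame is back-projected with the predicted depth, moved by the predicted camera motion, and projected into an adjacent frame, and the photometric difference between the frames becomes the training signal. This is a minimal single-pixel sketch of that reprojection, assuming a pinhole camera with intrinsics `fx, fy, cx, cy` and a relative pose `(rot, trans)` from the target to the source camera. It shows the generic formulation only, not the patent's hybrid geometry-enhanced loss or attention network.

```python
Vec3 = list[float]
Mat3 = list[list[float]]


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def reproject(u: float, v: float, depth: float,
              fx: float, fy: float, cx: float, cy: float,
              rot: Mat3, trans: Vec3) -> tuple[float, float]:
    """Map target pixel (u, v) with predicted depth into source-view pixel coordinates."""
    # Back-project: X = depth * K^-1 [u, v, 1]
    point = [(u - cx) / fx * depth, (v - cy) / fy * depth, depth]
    # Apply the relative camera motion: X' = R X + t
    moved = [a + b for a, b in zip(mat_vec(rot, point), trans)]
    # Project back to pixels with the pinhole model
    return fx * moved[0] / moved[2] + cx, fy * moved[1] / moved[2] + cy
```

During training, the source image would be sampled (typically bilinearly) at these coordinates for every pixel and compared with the target image; errors in either the depth or the pose prediction increase that photometric loss, which is how both sub-networks are supervised without ground-truth depth.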

Owner:DALIAN UNIV OF TECH

Method for generating depth map and device thereof

Inactive | CN101815225A | Reduce the amount of calculation | Shorten the time | Stereoscopic systems | Feature vector | Motion parameter

The invention relates to a method and device for generating a depth map. The method comprises the following steps: applying a dense matching method to a first frame and a second frame of a monocular video stream to obtain a first depth map representing the pixel depths of the second frame; detecting feature points in the second and third frames of the monocular video stream, matching the feature points of the third frame with those of the second frame to obtain feature vectors, and dividing the first depth map into a plurality of regions according to the pixel depths of the second frame; estimating the motion of the detected feature points to obtain motion parameters, and updating the parameters of the regions according to the motion parameters and the feature vectors, so that the updated regions form one part of a second depth map representing the pixel depths of the third frame; and detecting new regions in the third frame and calculating their pixel depths to form another part of the second depth map, thereby obtaining the complete second depth map.
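
The abstract does not specify the motion model used to update regions. As an illustration only, this sketch assumes a 2D affine model per region, fitted by least squares (normal equations) to matched feature points between the second and third frames, and then used to move that region's pixels, which carry their depth values over into the second depth map. The functions `fit_affine` and `move_region` are hypothetical.

```python
Point = tuple[float, float]


def _det3(m: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve the 3x3 system a x = b by Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("degenerate point configuration (e.g. collinear matches)")
    result = []
    for col in range(3):
        m = [row[:] for row in a]
        for i in range(3):
            m[i][col] = b[i]
        result.append(_det3(m) / d)
    return result


def fit_affine(src: list[Point], dst: list[Point]) -> tuple[list[float], list[float]]:
    """Least-squares affine motion: x' = a x + b y + c, y' = d x + e y + f."""
    rows = [(x, y, 1.0) for x, y in src]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    bx = [sum(r[i] * q[0] for r, q in zip(rows, dst)) for i in range(3)]
    by = [sum(r[i] * q[1] for r, q in zip(rows, dst)) for i in range(3)]
    return solve3(ata, bx), solve3(ata, by)


def move_region(pixels: list[Point], params: tuple[list[float], list[float]]) -> list[Point]:
    """Apply the fitted affine motion to a region's pixel coordinates."""
    (a, b, c), (d, e, f) = params
    return [(a * x + b * y + c, d * x + e * y + f) for x, y in pixels]
```

At least three non-collinear matches per region are needed; in practice a robust estimator such as RANSAC around this fit would reject mismatched feature points.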

Owner:SAMSUNG ELECTRONICS CO LTD +1

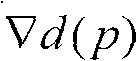

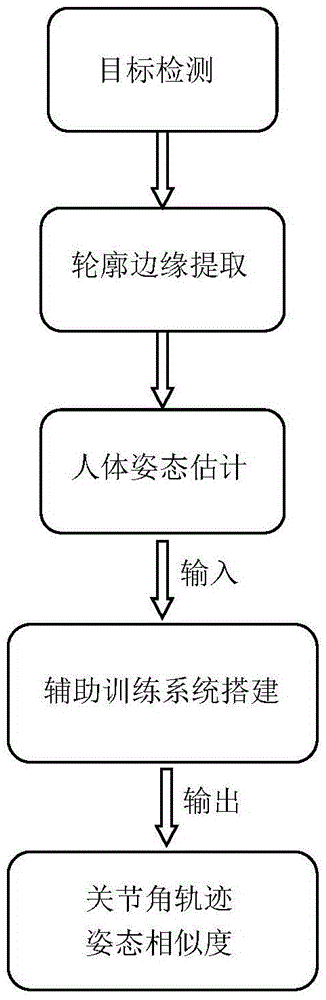

Auxiliary training system based on human body posture estimation algorithm

Inactive | CN105664462A | Improve the level of | Raise the grade | Gymnastic exercising | Character and pattern recognition | Human body | Imaging processing

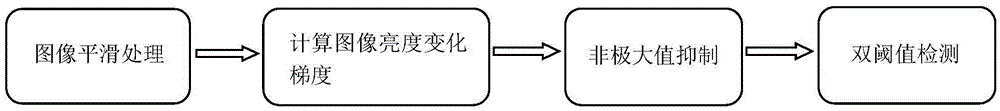

The invention discloses an auxiliary training system based on a human body posture estimation algorithm. The system works as follows: first, a binary silhouette image of the human body is detected and extracted from monocular video using a background modeling method based on the ViBe model; second, an edge map of the silhouette is obtained with the Canny edge detection algorithm; third, the image coordinates of 15 main joint points of the human body are obtained through image processing methods such as horizontal line scanning and body length ratio constraints; fourth, on this basis the auxiliary training system is built, with five joint angles formed by the 15 joint points used as training indexes, the Euclidean distance used as the posture similarity measure, and two auxiliary indexes, the joint angle trajectory and the posture similarity, as the system output. By quantitatively analyzing motion characteristics, the system enables analysis and comparison of athletes' postures, helping to raise athletes' performance levels scientifically and freeing physical training from purely experience-based practice.
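
The two training indexes reduce to simple geometry once the joint coordinates are known. This is a minimal sketch, assuming 2D image coordinates for the joints; the joint names and the five angle triples in `ANGLE_TRIPLES` are hypothetical, since the abstract says only that five angles are formed from the 15 joint points.

```python
import math

Point = tuple[float, float]

# Hypothetical (end, vertex, end) triples defining the five joint angles.
ANGLE_TRIPLES = [
    ("r_shoulder", "r_elbow", "r_wrist"),
    ("l_shoulder", "l_elbow", "l_wrist"),
    ("r_hip", "r_knee", "r_ankle"),
    ("l_hip", "l_knee", "l_ankle"),
    ("head", "neck", "pelvis"),
]


def joint_angle(a: Point, joint: Point, b: Point) -> float:
    """Angle in degrees at `joint` between the segments joint->a and joint->b."""
    v1 = (a[0] - joint[0], a[1] - joint[1])
    v2 = (b[0] - joint[0], b[1] - joint[1])
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))  # clamp rounding error


def angle_vector(joints: dict[str, Point]) -> list[float]:
    """Five-angle posture descriptor for one frame."""
    return [joint_angle(joints[a], joints[j], joints[b]) for a, j, b in ANGLE_TRIPLES]


def posture_distance(angles_a: list[float], angles_b: list[float]) -> float:
    """Euclidean distance between two angle vectors; smaller means more similar postures."""
    return math.dist(angles_a, angles_b)
```

Computing `angle_vector` for every frame yields the joint angle trajectories, and `posture_distance` between an athlete's frame and a reference frame gives the similarity index.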

Owner:BEIJING UNIV OF POSTS & TELECOMM

© 2025 PatSnap. All rights reserved.