The invention discloses a human body behavior identification method and system based on attention perception and a tree skeleton point structure

The skeleton point and attention technology, applied in the fields of robot vision and human-computer interaction, addresses problems such as low algorithm efficiency, poor suitability, and inconvenient maintenance and improvement, and achieves the effects of reducing interference and improving accuracy and efficiency.

Embodiment Construction

[0029] In order to make the above objects, features and advantages of the present invention more obvious and understandable, the present invention will be further described below through specific embodiments and accompanying drawings.

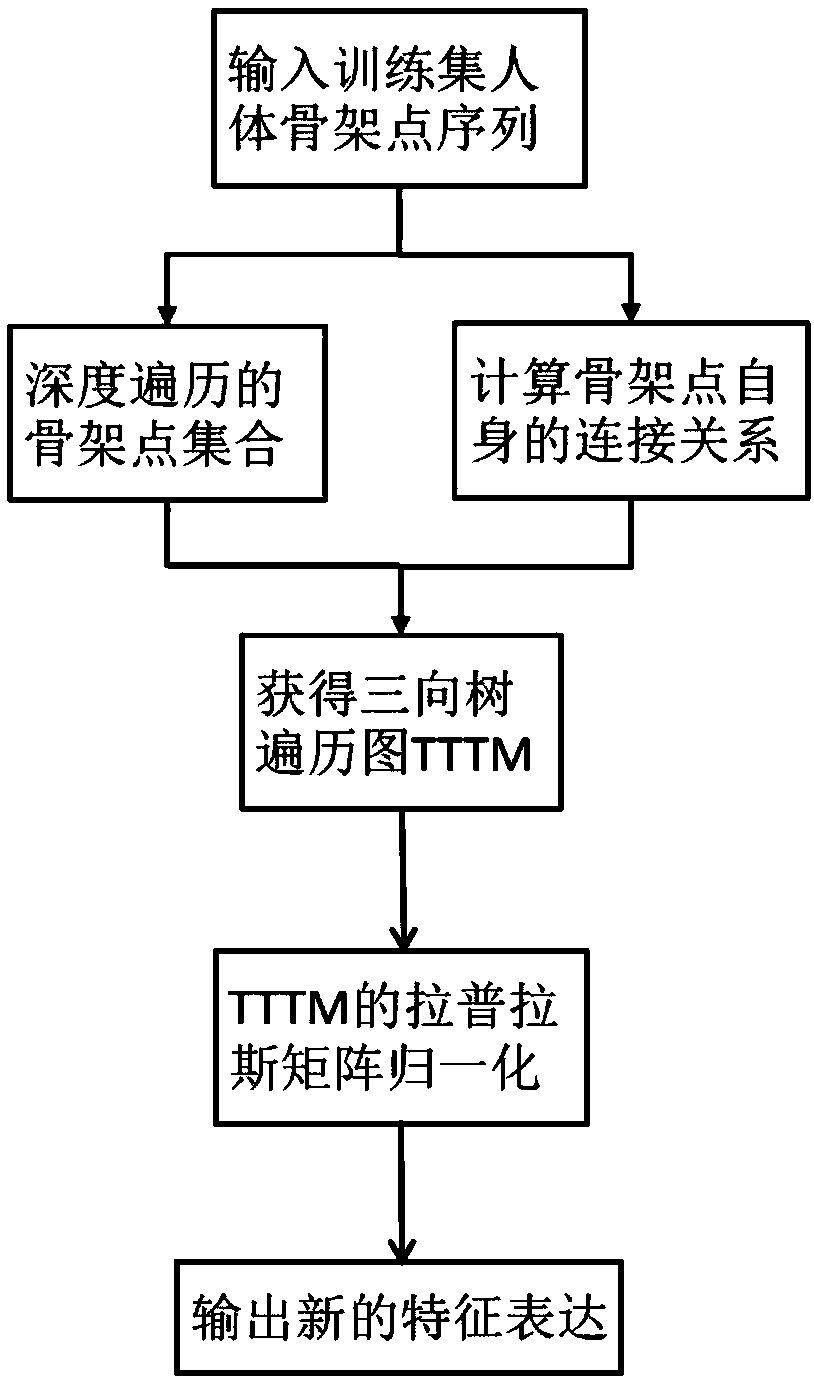

[0030] Figure 1 shows the data reconstruction flow chart based on the three-way tree traversal rule of the present invention, which includes the following steps:

[0031] Step 1, input the sequence of human skeleton points in the training set.

[0032] In graph theory, a tree is a connected, acyclic undirected graph. Each frame of a sample sequence contains N skeleton points, which are regarded as the nodes of the tree. The set V of these nodes is defined as:

[0033] $V = \{ v_i \mid i = 1, 2, \ldots, N \}$

[0034] Step 2, traverse the skeleton point set V using the depth-first traversal method.

[0035] Starting from the skeleton point set V obtained in step 1, traverse it using the depth-first traversal method and store the resulting spatial relationship as α, and use the inverse depth...
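As an illustration of step 2, the following is a minimal sketch of a depth-first traversal over a skeleton tree. It rests on several assumptions that are not in the text above: the 7-joint layout in `TOY_SKELETON` is a hypothetical toy example and not the joint layout of the invention, the helper name `depth_first_order` is made up for illustration, and the variable `alpha` simply holds the visiting order as a stand-in for the stored spatial relationship α, whose exact form is not given in the visible text.

```python
from typing import Dict, List

# Hypothetical 7-joint toy skeleton (NOT the joint layout of the invention).
# Keys are parent joints; values list their child joints in visiting order.
TOY_SKELETON: Dict[int, List[int]] = {
    1: [2, 3, 4],  # root joint with a three-way branch
    2: [],
    3: [5],
    4: [6],
    5: [],
    6: [7],
    7: [],
}


def depth_first_order(children: Dict[int, List[int]], root: int) -> List[int]:
    """Return the joints in pre-order depth-first visiting order."""
    order: List[int] = []
    stack: List[int] = [root]
    while stack:
        node = stack.pop()
        order.append(node)
        # Push children in reverse so the first listed child is visited first.
        stack.extend(reversed(children[node]))
    return order


# Assumption: alpha here is just the visiting order of the joints.
alpha = depth_first_order(TOY_SKELETON, root=1)
print(alpha)
```

Each branch is followed to its end before the next sibling is visited, so joints that are adjacent along a limb stay adjacent in the resulting sequence. An explicit stack is used instead of recursion only to keep the sketch short and independent of recursion limits.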

