Target tracking method based on space-time feature fusion learning

A target tracking technology based on space-time (spatio-temporal) features, applied in the field of computer vision and pattern recognition. It addresses problems such as target deformation and occlusion, which make tracking difficult or cause the target to be lost, and it improves tracking speed and accuracy.

Embodiment Construction

[0029] The method of the present invention can be used in a wide range of visual target tracking applications in both military and civilian fields, such as transportation systems, human-computer interaction, and virtual reality.

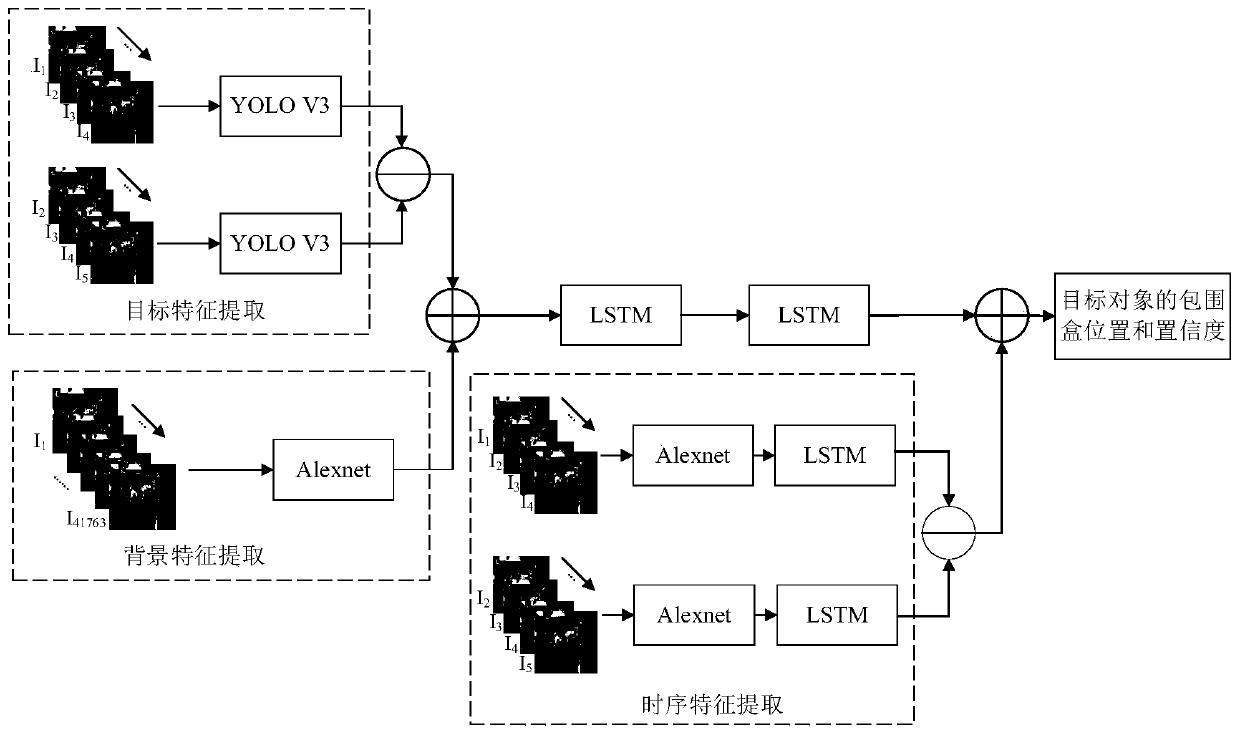

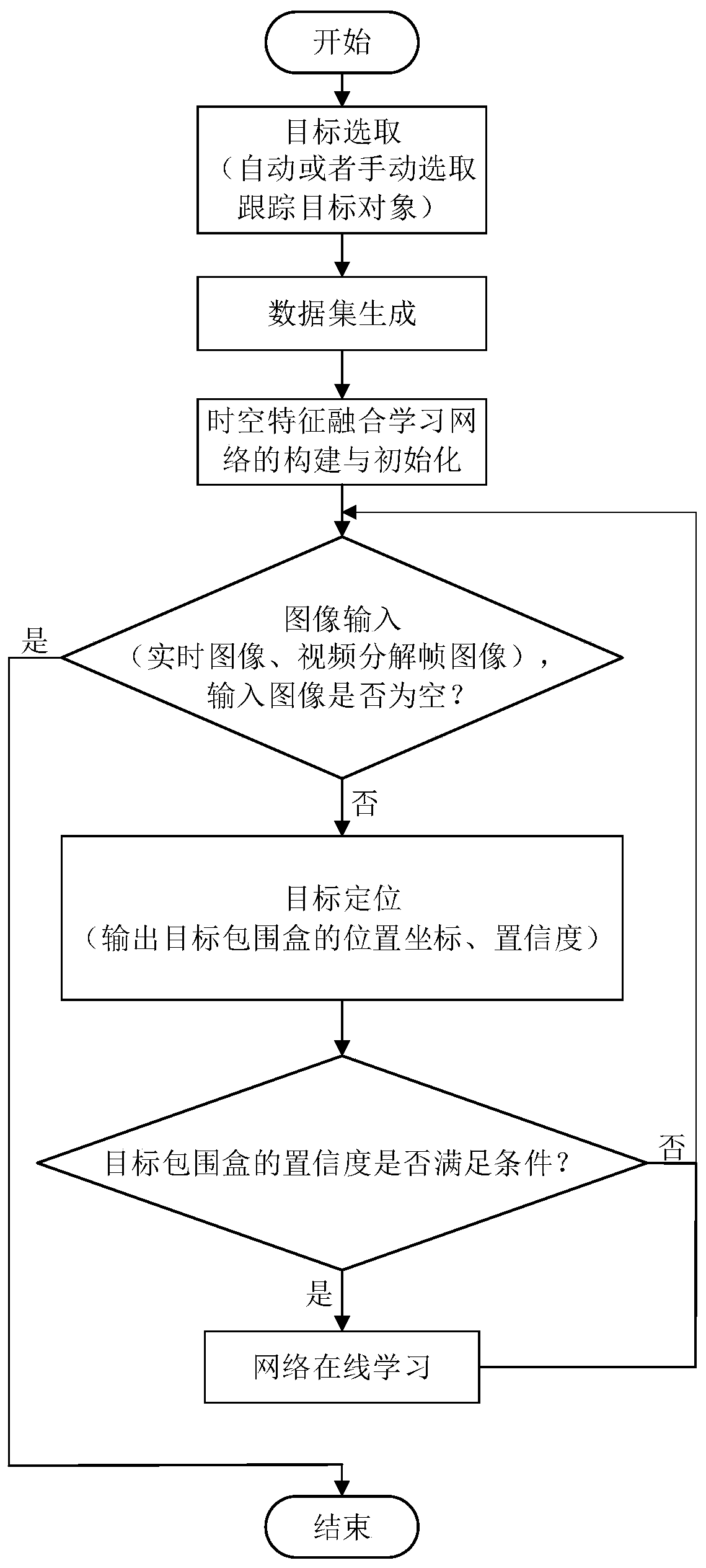

[0030] Take intelligent video surveillance of a traction substation as an example. Such surveillance includes many important automatic analysis tasks, such as intrusion detection, behavior analysis, and abnormality alarms, all of which depend on stable target tracking. That tracking can be realized with the method proposed by the present invention. Specifically, a spatio-temporal feature fusion learning neural network model is first constructed, as shown in Figure 1. The network is then trained using the training data set and the stochastic gradient descent method. Because the three networks influence one another, optimization is difficult, so the network training of spatio-temporal featu...
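The stochastic gradient descent step mentioned in paragraph [0030] can be illustrated with a minimal sketch. This is not the patent's network: the excerpt does not give the layers or loss of the spatio-temporal model, so a one-variable linear model stands in for it, and the function name `sgd_train`, the learning rate, the epoch count, and the synthetic training pairs are all assumptions made for illustration. Only the per-sample update rule is the point.

```python
import random

def sgd_train(samples, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w*x + b with plain stochastic gradient descent.

    Toy stand-in (assumption): the patent trains a neural network whose
    structure is not given in this excerpt; only the update rule is shown.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)              # visit samples in random order
        for x, y in data:
            err = (w * x + b) - y      # prediction error on one sample
            w -= lr * err * x          # gradient of 0.5*err**2 w.r.t. w
            b -= lr * err              # gradient of 0.5*err**2 w.r.t. b
    return w, b

# Hypothetical, noise-free training pairs generated from y = 2x + 1
train = [(x / 10.0, 2.0 * (x / 10.0) + 1.0) for x in range(-10, 11)]
w, b = sgd_train(train)
```

In a real tracker the scalar parameters `w` and `b` would be replaced by the network weights and the squared error by the tracking loss, but each update would still take one sample (or mini-batch) and move the parameters against the gradient.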
