Cross-modal hash retrieval method based on deep learning

A deep-learning-based cross-modal technique, applied to still image data retrieval, unstructured text data retrieval, text database indexing, and related fields, which addresses the problem that the discriminative information in the original features cannot be mined well.


Embodiment Construction

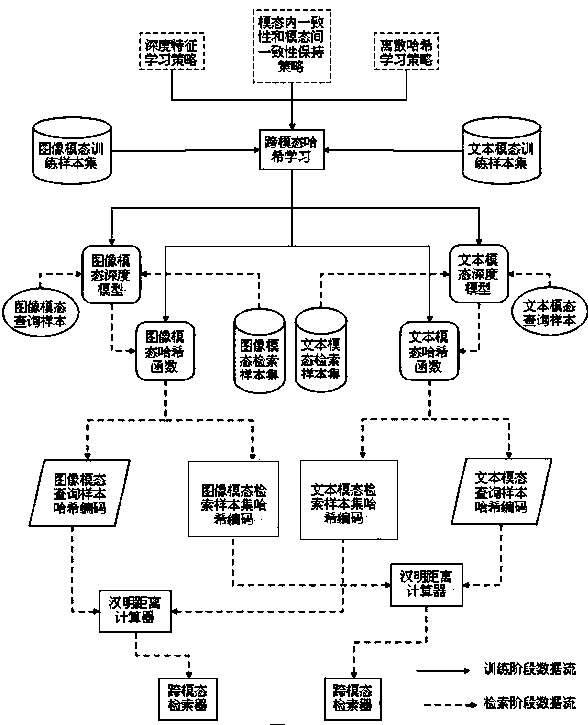

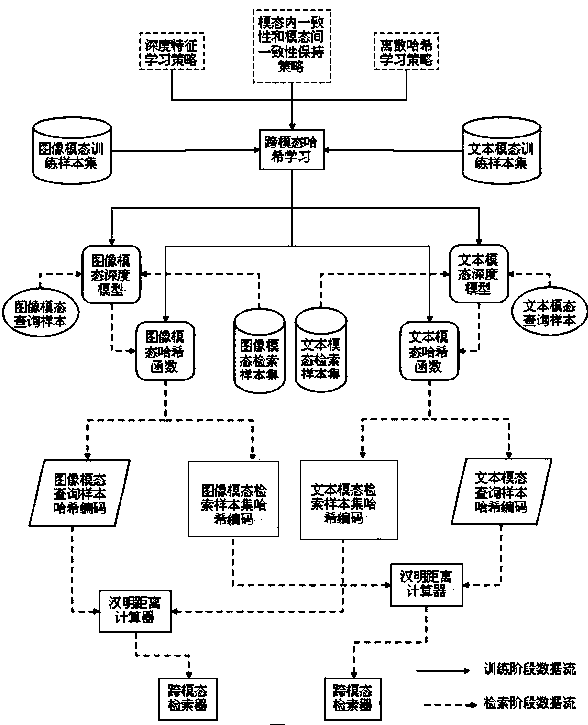

[0034] The technical solution of the present invention will be further described in detail below in conjunction with the accompanying drawings.

[0035] The invention discloses a cross-modal hash retrieval method based on deep learning. As shown in Figure 1, the specific implementation process mainly includes the following steps. Let $\{v_i\}_{i=1}^{n}$ denote the set of pixel feature vectors of n objects in the image modality, where $v_i$ is the pixel feature vector of the i-th object in the image modality. Let $\{t_i\}_{i=1}^{n}$ denote the feature vectors of these n objects in the text modality, where $t_i$ is the feature vector of the i-th object in the text modality. Let $\{y_i\}_{i=1}^{n}$ denote the category label vectors of the n objects, where each $y_i$ has c elements and c is the number of object categories. For each vector $y_i$, if the i-th object belongs to the k-th class, the k-th element of $y_i$ is 1; otherwise, the k-th element of $y_i$ is 0.
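The label-vector definition above can be illustrated with a minimal sketch. It is not taken from the patent: the function name `build_label_vectors`, the input format (a list of class-index collections per object), and the use of 0-based class indices are all assumptions made for illustration. An object may belong to more than one class, in which case several elements of its vector are 1.

```python
from typing import Iterable, List


def build_label_vectors(class_ids: List[Iterable[int]], c: int) -> List[List[int]]:
    """Return one c-element 0/1 label vector y_i per object.

    class_ids[i] holds the 0-based class indices of object i (a hypothetical
    input format). The k-th element of y_i is 1 if object i belongs to
    class k, and 0 otherwise.
    """
    labels = []
    for ids in class_ids:
        y = [0] * c
        for k in ids:
            if not 0 <= k < c:
                raise ValueError(f"class index {k} is outside [0, {c})")
            y[k] = 1
        labels.append(y)
    return labels


# Three objects and c = 4 categories; the second object belongs to two classes.
Y = build_label_vectors([[0], [1, 3], [2]], c=4)
print(Y)
```

Plain Python lists are used here only to keep the sketch dependency-free; in practice the vectors would more likely be stored as rows of an n × c array.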

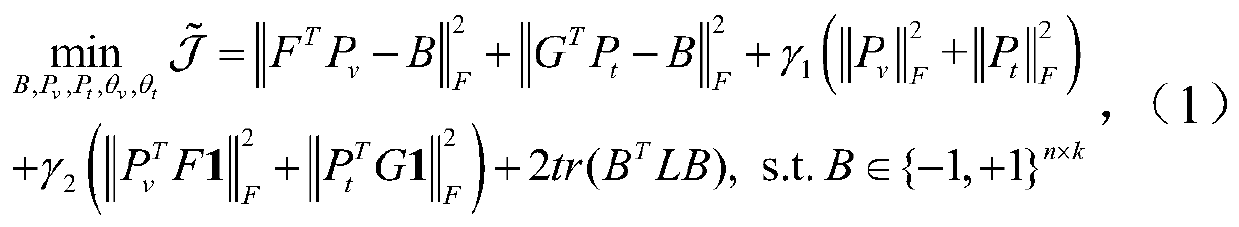

[0036] (1) Construction of cross-modal ...

